Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by displaying the dimensional constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images);

> and, lastly, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
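A minimal sketch of the kind of balancing split the second point asks for, written in plain Python outside Alteryx. The names `balanced_split` and `gray_to_rgb` are hypothetical, and downsampling every label to the size of the smallest one is just one assumed balancing strategy (oversampling would be another). The second helper shows the usual workaround for the RGB requirement: copying the single gray channel into all three channels before training.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (image_path, label) pairs so every label has the same count.

    Each label is downsampled to the size of the smallest label, then
    split into training and validation sets label by label.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)
    rng = random.Random(seed)  # fixed seed so the split is reproducible

    train, valid = [], []
    for label in sorted(by_label):
        chosen = rng.sample(by_label[label], per_label)
        train += [(p, label) for p in chosen[:n_train]]
        valid += [(p, label) for p in chosen[n_train:]]
    return train, valid


def gray_to_rgb(pixels):
    """Turn a single-channel image (rows of ints) into RGB by copying the channel."""
    return [[(v, v, v) for v in row] for row in pixels]


samples = ([(f"cat_{i}.png", "cat") for i in range(10)]
           + [(f"dog_{i}.png", "dog") for i in range(6)])
train, valid = balanced_split(samples)
print(len(train), len(valid))  # 8 4: four training and two validation images per label
```

With 10 cat images and 6 dog images, both labels are cut to 6, so the extra cat images are simply not used.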

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


I would love the ability to double-click an unnamed tab and rename it, for 'temp' workflows.

E.g., "New Workflow*" to "working on macro update"...

Reason:

- When Designer crashes, it is a huge pain to go through autosaves named "New Workflow*" to find the one you need.

- I work on a lot of projects at once: I pull bits of code out, work on a small subset, then get distracted and have to move over to another project. With multiple windows and tabs open, it gets confusing to have 10 'New Workflow' tabs open.

- It allows for better organization of open tabs: I can drag tabs into groups and order them, so I know where to start from last time.


Hi!

Can you please add a tool that stops the flow? And I don't mean the "Cancel running workflow on error" option.

Today you can stop the flow on error with the Message tool, but not every condition you want to stop for is an error.

E.g., I can use the Block Until Done tool to check whether there are files in a specific folder, and if there are none, I want to stop the flow from reading any more inputs.

Today I have to use workarounds; an easy stop-if tool would be much appreciated!

This could be an option on the Message tool (a check box, like the Transient option).
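To make the requested semantics concrete, here is a minimal Python sketch (not Alteryx code) of a guard that ends the run cleanly, rather than with an error, when a folder check finds nothing. `StopWorkflow`, `stop_if`, `files_in` and `run` are hypothetical names; in Designer the check would sit after Block Until Done, and everything after `stop_if` stands for the inputs that should not be read.

```python
import os


class StopWorkflow(Exception):
    """Hypothetical signal for an intentional, clean stop (not an error)."""


def stop_if(condition, message):
    """The proposed 'stop-if' check: halt the run when condition is true."""
    if condition:
        raise StopWorkflow(message)


def files_in(folder):
    """List the regular files in folder, as the folder check would."""
    return sorted(
        name for name in os.listdir(folder)
        if os.path.isfile(os.path.join(folder, name))
    )


def run(files, read_inputs):
    """Read further inputs only if the guard passes; otherwise stop cleanly."""
    try:
        stop_if(not files, "no files found, not reading any more inputs")
    except StopWorkflow as stop:
        return f"Stopped: {stop}"
    return read_inputs(files)


print(run([], read_inputs=len))  # Stopped: no files found, not reading any more inputs
```

A separate exception type keeps an intentional stop distinguishable from a real error, which is the distinction the post draws against "Cancel running workflow on error".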

Cheers,

EJ


All the tools in the interface should be able to be populated, and have their values assigned/selected, dynamically.

Currently we have the option of populating a set of values for Tree/List/Dropdown.

But we cannot select some of them by default while the workflow is loading.

Other controls, like TextBox/NumericUpDown/CheckBox/RadioButton, should also be controllable by values from a database.

For example, if I have a set of three radio buttons, I should be able to select one of them based on my database values while the workflow is loading.

Hello,

please remove the hard limit of 5 output files from the Python tool, if possible.

It would be very helpful to be able to output any number of tables, in any format, each with its own columns.
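Until the limit changes, one workaround is to pack several tables into a single output. The sketch below (plain Python with hypothetical helper names, not the Python tool's own API) flattens any number of tables with different columns into one long table with the fields `table`, `row`, `column` and `value`; downstream, a Filter on `table` could separate them again. Two assumed trade-offs: a table with no rows disappears, and in Alteryx the `value` field would need a single type, such as a string.

```python
def pack_tables(tables):
    """Flatten {table_name: [row_dict, ...]} into one long-format table."""
    packed = []
    for name, rows in tables.items():
        for row_number, row in enumerate(rows):
            for column, value in row.items():
                packed.append({"table": name, "row": row_number,
                               "column": column, "value": value})
    return packed


def unpack_tables(packed):
    """Rebuild {table_name: [row_dict, ...]} from the long format."""
    tables = {}
    for record in packed:
        rows = tables.setdefault(record["table"], [])
        while len(rows) <= record["row"]:
            rows.append({})
        rows[record["row"]][record["column"]] = record["value"]
    return tables


tables = {
    "customers": [{"id": 1, "name": "Ann"}],
    "orders": [{"order": "A1", "qty": 3, "sku": "X"},
               {"order": "A2", "qty": 1, "sku": "Y"}],
}
packed = pack_tables(tables)
print(len(packed))  # 8 records: 2 from customers + 6 from orders
```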

Best regards,


I think it would be extremely helpful to have an In-DB Detour so that you could filter a user's information without having to pull it out of the database and then put it back in for more processing. This would be useful when you have a large dataset and don't want to pull all of it out of the database because that would take a long time; for example, filtering a large dataset by a state or region chosen by the user. The Detour in the Developer tools seems like it would do the job; it just needs to connect to the In-DB tools.

When passing a data connection to the Dynamic Input Tool as a string and using the 'Change Entire File Path' option, the password parameter of the connection string is not encrypted and is displayed in the metadata source information.

We have since changed our macro that was using this method, but wanted to raise awareness of this situation. I suggest that the same procedure used to encrypt the password in all other connection methods be called if the workflow is configured to pass a password through the input as a string.
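Masking is not the encryption the post asks for, but a minimal Python sketch of keeping a password out of anything that gets displayed might look like this. The keyword forms `PWD` (ODBC style) and `Password` (OLE DB style) are assumptions about how the connection string is written, and `mask_password` is a hypothetical helper.

```python
import re

# Assumed keyword forms: ODBC-style "PWD=" and OLE DB-style "Password=".
# A value may be wrapped in braces, e.g. PWD={a;b}, so ';' can appear inside it.
_PASSWORD = re.compile(r"(?i)\b(pwd|password)\s*=\s*(\{[^}]*\}|[^;]*)")


def mask_password(connection_string, mask="********"):
    """Return the connection string with every password value masked."""
    return _PASSWORD.sub(lambda m: f"{m.group(1)}={mask}", connection_string)


print(mask_password("odbc:DSN=Sales;UID=analyst;PWD=s3cret;"))
# odbc:DSN=Sales;UID=analyst;PWD=********;
```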


Building a custom tool is nice, but the best way to show someone how to use it is to have an example. It would be great if we could package example workflows into a yxi file so our custom tools have samples to start from.


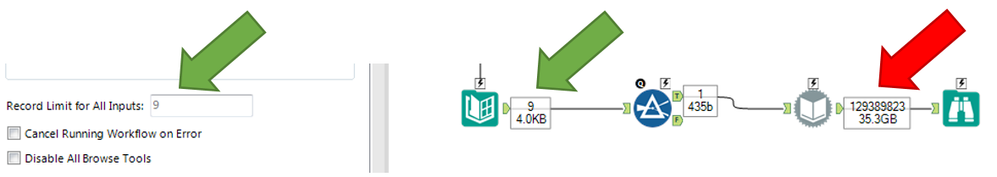

Would love to see the Workflow - Configuration > Runtime > Record Limit for All Inputs option extended to Dynamic Input tools.


Hi All,

This is a fairly straightforward request. I'd like to be able to pass interface tool values through to the workflow events the same way I would pass them through to a tool in the workflow (%Question.<tool name>%). One use case: we call a workflow and pass in an ID, and if this workflow fails, I'd like to trigger an event that calls back to the application and says that this specific workflow failed for this ID.

The temporary solution is to have the workflow write to a temp file and have the event reference that temp file, but this is clunky and risky if there are parallel runs occurring.

Best,

devKev

Currently, when a scheduled job is running (and logging is enabled), the log file is locked for use.

Thanks!


With SSIS, you can use precedence constraints so that downstream flows do not run until one or more flows complete. A simple connector should allow you to do this. Right now, I have my workflows in containers and have to disable/enable different workflows, which can be time-consuming. Below is a better definition:

Precedence constraints link executables, containers, and tasks in packages in a control flow, and specify conditions that determine whether executables run. An executable can be a For Loop, Foreach Loop, or Sequence container; a task; or an event handler. Event handlers also use precedence constraints to link their executables into a control flow.
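A minimal sketch of the requested behavior in plain Python (3.9+ for `graphlib`), assuming a success-only constraint: each flow waits for the flows it depends on and is skipped if any of them failed. The flow names and the `run_with_precedence` helper are hypothetical; SSIS also offers Completion and Failure constraints, which this sketch does not model.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def run_with_precedence(constraints, tasks):
    """Run flows so that none starts before the flows it depends on.

    constraints: {flow: set of flows that must succeed first}
    tasks: {flow: zero-argument callable returning True on success}
    A flow is skipped when any predecessor did not succeed.
    """
    status = {}
    for flow in TopologicalSorter(constraints).static_order():
        predecessors = constraints.get(flow, set())
        if all(status.get(p) == "ok" for p in predecessors):
            status[flow] = "ok" if tasks[flow]() else "failed"
        else:
            status[flow] = "skipped"
    return status


constraints = {"load": {"extract"}, "report": {"load", "lookup"}}
tasks = {
    "extract": lambda: True,
    "lookup": lambda: False,  # simulate a failing flow
    "load": lambda: True,
    "report": lambda: True,
}
print(run_with_precedence(constraints, tasks))
# "report" is skipped because "lookup" failed
```

This replaces the manual disable/enable of containers: the constraint map, not the user, decides what runs.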


If you have a complex SQL query with a number of dynamic substitutions (e.g., Update WHERE Clause, Replace a Specific String), it would be nice to be able to optionally output the SQL that is being executed. This would be particularly useful for debugging.
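For illustration only, a tiny Python sketch of what "the SQL that is being executed" means here: the query template after each dynamic substitution has been applied in turn. `render_sql` and the sample query are hypothetical; the request is for Alteryx itself to expose this final string, for example on an optional output.

```python
def render_sql(template, substitutions):
    """Apply (find, replace) substitutions in order and return the final SQL."""
    sql = template
    for find, replace in substitutions:
        sql = sql.replace(find, replace)
    return sql


template = "SELECT * FROM sales WHERE region = 'XX' AND yr = 1900"
final_sql = render_sql(template, [("'XX'", "'West'"), ("1900", "2021")])
print(final_sql)  # SELECT * FROM sales WHERE region = 'West' AND yr = 2021
```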

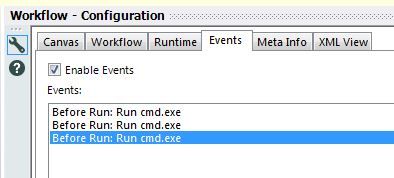

I've been using Events a fair bit recently to run batches through cmd.exe and to call Alteryx modules.

Unfortunately, the default is that the events are named by when the action occurs and what is entered in the Command line.

When you've got multiple events, this can become a problem -- see below:

It would be great if there was the ability to assign custom names to each event.

It looks like I should be able to do this by directly editing the YXMD (there's a <Description> tag for each event), but it doesn't seem to work.


Implement a process to have looping in the workflow without resorting to Macros. Although macros do, generally, solve the issue, I find them confusing and non-intuitive.

I would suggest looping through the use of two new tools: A StartLoop and EndLoop tool.

The start loop would have two (or more) input anchors. One anchor would be for the initial input and the other(s) for additional iterative inputs. The start loop would hold all iterative inputs until the original inputs have passed the gate, and then resubmit them in the order in which they returned to the start loop.

The end loop would have three output anchors. One anchor would be for data exiting the loop upon reaching the exit condition. Another anchor would be for the iterative (return) data; note that transformations can be performed on the data BEFORE it re-enters the loop. The third would be an "overloop" exit anchor, for any data that failed to meet the exit condition within the (configurable) maximum iteration expression. The data from the overloop anchor could be dealt with as required by the business rules for the unsatisfied data after being output from the EndLoop tool.

The primary configurations would be on the EndLoop tool, where you would indicate the exit condition and the maximum iteration expression. The tool would also create an iteration counter field. As part of the configuration you could have a check box to "retain iteration count field on exit". If checked, the field would be maintained. If not checked, the field would be dropped for the data as it exits the loop.

This would make looping a bit more intuitive, and it would be graphically self-documenting as well. Worth a mention at least.
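The proposed StartLoop/EndLoop behavior can be modeled in plain Python to check that the three outputs fit together. This is a hypothetical model of the idea, not an existing Alteryx feature: `step` stands for the tools inside the loop, `iteration` plays the role of the proposed iteration counter field, and `keep_count` is the "retain iteration count field on exit" check box (applied here to both exit anchors, which is an assumption).

```python
def run_loop(records, step, exit_condition, max_iterations, keep_count=False):
    """Model of the proposed StartLoop/EndLoop pair.

    Returns (exited, overloop): records that met exit_condition, and
    records still unsatisfied after max_iterations passes.
    """
    exited, overloop = [], []
    pending = [dict(r, iteration=0) for r in records]  # iteration counter field
    while pending:
        returned = []  # the iterative (return) anchor
        for record in pending:
            count = record["iteration"]
            record = step(record)  # the tools inside the loop
            record["iteration"] = count + 1
            if exit_condition(record):
                exited.append(record)
            elif record["iteration"] >= max_iterations:
                overloop.append(record)
            else:
                returned.append(record)
        pending = returned
    if not keep_count:  # check box unchecked: drop the counter on exit
        for record in exited + overloop:
            del record["iteration"]
    return exited, overloop


def double(record):
    return dict(record, x=record["x"] * 2)


exited, overloop = run_loop([{"x": 1}, {"x": 50}, {"x": -5}], double,
                            lambda r: r["x"] >= 100, max_iterations=5)
print(exited)    # [{'x': 100}]
print(overloop)  # [{'x': 32}, {'x': -160}]
```

Because every record leaves through either the exit or the overloop anchor after at most `max_iterations` passes, the loop always terminates.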


This may be a bit of a pipe dream, but an interface that automatically and efficiently implements quantum computing functionality against different back ends would position Alteryx as a user-friendly interface to the quantum computing realm. My feeling is that, at the end of the day, most people will know quantum programming about as well as they currently know GPU programming, which is to say not at all. They'll need an easy-to-use tool to translate their wants into some form of quantum speed-up. Q#, Qiskit, Cirq and Braket are neat, but suppose Alteryx had a "quantum solver" tool that handled a lot of the dirty work of setting up, say, a quantum Grover search, where the user just describes what they need.

I know some of the heavy hitters are already trying to simplify the interface to the quantum realm (e.g. as of 1/1/2021: Google Cirq, Microsoft Q#, IBM Aqua, AWS Braket all moving beyond basic enablement into realms of user friendliness.)

Just a thought!


Using the Download Tool, when doing a PUT operation, the tool adds a header "Transfer-Encoding: chunked". The tool adds this silently in the background.

This caused me a huge headache, as the PUT was a file transfer to Azure Blob Storage, which was not chunked. At the time of writing, Azure Blob Storage does not support chunked transfer. Effectively, my file transfer was erroring, even though it appeared that I had configured the request correctly. I only found the problem by downloading Fiddler and sniffing the HTTPS traffic.

Azure can use SharedKey authorization. This is similar to OAuth1, in that the client (Alteryx) has to sign the message and the headers sent, so that the server can perform the same computation on receipt and confirm that the message was not tampered with. Alteryx is effectively "tampering with the message" (benignly) by adding headers. To my mind, the Download tool should not add any headers unless it is clear that it is doing so.

If the tool adds any headers automatically, I would suggest that they are declared somewhere. They could either be included in the Headers tab, so that they could be overwritten, or they could have an "auto-headers" tab to themselves. I think showing them in the Headers tab would be preferable from the user's viewpoint, as the user could immediately see them alongside the other headers, and override them by blanking them if they need to.
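To see why a silently added header matters for signed requests, here is a simplified Python sketch of SharedKey-style signing. It is an assumption-laden model, not Azure's exact canonicalization (Azure signs a fixed list of standard headers plus the `x-ms-*` headers and the resource); here every header is signed, so any header added after signing makes the server's recomputed HMAC-SHA256 differ from the one the client sent. `sign`, `server_accepts` and the key are hypothetical.

```python
import base64
import hashlib
import hmac


def sign(method, headers, key):
    """Simplified SharedKey-style signature: HMAC-SHA256 over the method and
    every header, lower-cased and sorted. Not Azure's exact string-to-sign."""
    lines = [method] + [
        f"{name.lower()}:{value}"
        for name, value in sorted(headers.items(), key=lambda kv: kv[0].lower())
    ]
    digest = hmac.new(key, "\n".join(lines).encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def server_accepts(method, received_headers, signature, key):
    """The server recomputes the signature over the headers it actually received."""
    return hmac.compare_digest(sign(method, received_headers, key), signature)


key = b"shared-secret"  # hypothetical key
signed_headers = {"Content-Length": "11", "x-ms-blob-type": "BlockBlob"}
signature = sign("PUT", signed_headers, key)

# The headers as they arrive after the client silently adds one more.
sent_headers = {**signed_headers, "Transfer-Encoding": "chunked"}
print(server_accepts("PUT", signed_headers, signature, key))  # True
print(server_accepts("PUT", sent_headers, signature, key))    # False
```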


Hi Alteryx, can you please create poll, form, or approval-process tools? I know we have some analytic app tools, but could we create something like Google Forms, where we can easily create forms and get data outputs, with email notifications for those forms, approvals, etc.?
