Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
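Until the tool offers this, the second and third points can be approximated before the images reach it. The sketch below is only an illustration in plain Python: `balance_by_label` and `gray_to_rgb` are hypothetical helper names, the file names are made up, and nothing here is part of the Image Recognition tool itself.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample so that every label keeps the same number of samples.

    `samples` is a list of (item, label) pairs; the item could be an image path.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    target = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # fixed seed so the selection is reproducible
    balanced = []
    for label, items in sorted(by_label.items()):
        for item in rng.sample(items, target):
            balanced.append((item, label))
    return balanced


def gray_to_rgb(image):
    """Repeat each grayscale pixel across three channels.

    `image` is a list of rows of intensities; the result holds (r, g, b) tuples.
    """
    return [[(p, p, p) for p in row] for row in image]


# Made-up sample data: three "cat" images and one "dog" image.
samples = [("a.png", "cat"), ("b.png", "cat"), ("c.png", "cat"), ("d.png", "dog")]
balanced = balance_by_label(samples)
rgb = gray_to_rgb([[0, 255], [128, 64]])
```

Downsampling to the smallest class is the simplest choice; oversampling the smaller classes would be an alternative when data is scarce.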

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Hi Team,

The Download tool has not kept up with current security policies. It still relies on SHA-1, but our organization requires a stronger mechanism. In my case, we want to download data over an SFTP connection, but our SFTP server uses SHA-2, so we are not able to configure the workflow.

Please upgrade the Download tool for a better experience in Alteryx.

Regards,

Kaustubh

- API SDK

- Category Developer

Hi!

Can you please add a tool that stops the flow? And I don't mean the "Cancel Running Workflow on Error" option.

Today you can stop the flow on error with the Message tool, but not every error is one you want to stop for.

E.g., I can use the 'Block Until Done' tool to check whether there are files in a specific folder, and if there are none I want to stop the flow from reading any more inputs.

Today I have to build workarounds; an easy stop-if tool would be much appreciated!

This could be an option on the message tool (a check box, like the Transient option).
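To make the request concrete, here is a rough sketch of the stop-if behaviour in plain Python. It is not Alteryx functionality: `StopWorkflow`, `stop_if`, `has_files` and `run` are hypothetical names, and the "*.csv" pattern is just an example.

```python
import tempfile
from pathlib import Path


class StopWorkflow(Exception):
    """Hypothetical signal meaning 'stop quietly', as opposed to a real error."""


def stop_if(condition, reason):
    """Raise the stop signal when the condition holds."""
    if condition:
        raise StopWorkflow(reason)


def has_files(folder, pattern="*"):
    """Return True if the folder contains at least one file matching the pattern."""
    return any(p.is_file() for p in Path(folder).glob(pattern))


def run(folder):
    """Check the folder first; only read inputs when there is something to read."""
    try:
        stop_if(not has_files(folder, "*.csv"), f"no CSV files in {folder}")
    except StopWorkflow as stop:
        return f"stopped: {stop}"
    return "continued"


with tempfile.TemporaryDirectory() as folder:
    empty_result = run(folder)  # nothing in the folder yet
    (Path(folder) / "data.csv").write_text("id\n1\n")
    filled_result = run(folder)  # one CSV file is now present
```

The point of the separate exception type is that a stop is reported as a normal outcome rather than as a failure.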

Cheers,

EJ

- API SDK

- Category Developer

Within the Dynamic rename tool there is an option to ignore missing fields.

It would be great if this was a bit more "Dynamic", for example if you wish to ignore duplicate field names.

Otherwise you are left with warnings in a perfectly functioning workflow which some users may wish to control.

- API SDK

- Category Developer

Hi Team,

Could the Dynamic Input tool be enhanced so that it can be configured based on name?

This idea came up when I wanted to read an Excel file and combine all of its sheets. Currently, the tool imports data based on position; an option to import the data based on name would be helpful.
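A small sketch of the difference, assuming "name" here refers to column names: each sheet is re-ordered to a target header by looking columns up by name, so sheets with the same fields in a different order still line up. `align_by_name` is a hypothetical helper and the sheet contents are made up.

```python
def align_by_name(header, rows, target_header):
    """Reorder each row to match target_header, looking columns up by name.

    Columns missing from the sheet are filled with None.
    """
    index = {name: i for i, name in enumerate(header)}
    return [
        [row[index[name]] if name in index else None for name in target_header]
        for row in rows
    ]


# Two made-up sheets with the same fields in a different order.
sheet1 = (["id", "name", "amount"], [[1, "A", 10]])
sheet2 = (["amount", "id", "name"], [[20, 2, "B"]])

target = sheet1[0]
combined = align_by_name(*sheet1, target) + align_by_name(*sheet2, target)
```

A purely positional import would have put 20 into the "id" column for the second sheet.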

- API SDK

- Category Developer

Hello,

Please remove the hard limit of 5 output files from the Python tool, if possible.

It would be very helpful for the user to be able to forward any number of tables, in any format, each with different columns.
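One possible workaround in the meantime, sketched in plain Python rather than with the Python tool's own API: pack any number of tables into a single uniform stream (table name plus one JSON string per row) and split it again downstream. `pack_tables` and `unpack_table` are hypothetical names, and the sample tables are made up.

```python
import json


def pack_tables(tables):
    """Flatten {table_name: [row_dict, ...]} into one uniform list of records."""
    return [
        {"table": name, "row_json": json.dumps(row, sort_keys=True)}
        for name, rows in tables.items()
        for row in rows
    ]


def unpack_table(packed, name):
    """Recover the rows of one table from the packed stream."""
    return [json.loads(r["row_json"]) for r in packed if r["table"] == name]


# Made-up tables with different columns each.
tables = {
    "customers": [{"id": 1, "name": "A"}],
    "orders": [{"order_id": 10, "customer_id": 1, "total": 9.5}],
}
packed = pack_tables(tables)
orders = unpack_table(packed, "orders")
```

The cost of this approach is that every value travels as text and has to be parsed and typed again after the split.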

Best regards,

- API SDK

- Category Developer

Hello,

The Python scripts sometimes get lost or updated when the user forgets to save his/her Jupyter notebook.

Please enhance.

Thanks and best regards,

Fabian Rudolf

- API SDK

- Category Developer

When working with APIs it is quite common to use the JSON parse tool to parse out the download data which has been returned from the API. However the JSON data may be missing key:value pairs as they are not in the response. This causes issues with downstream tools where there are missing fields. The current workaround for this is to use either the Crew macro Ensure fields, or union on a text input file to force the missing fields downstream.

The issue with this is:

1) Users may not be aware of the requirement to ensure fields are present

2) You need to know the names of all the fields to include in the ensure fields macro

Therefore the feature request is to add an option to the JSON parse tool to add the model schema as an input.

For example with the UK companies house API, to get a list of all the directors at a company the model schema is

    {
      "active_count": "integer",
      "etag": "string",
      "items": [
        {
          "address": {
            "address_line_1": "string",
            "address_line_2": "string",
            "care_of": "string",
            "country": "string",
            "locality": "string",
            "po_box": "string",
            "postal_code": "string",
            "premises": "string",
            "region": "string"
          },
          "appointed_on": "date",
          "country_of_residence": "string",
          "date_of_birth": {
            "day": "integer",
            "month": "integer",
            "year": "integer"
          },
          "former_names": [
            {
              "forenames": "string",
              "surname": "string"
            }
          ],
          "identification": {
            "identification_type": "string",
            "legal_authority": "string",
            "legal_form": "string",
            "place_registered": "string",
            "registration_number": "string"
          },
          "links": {
            "officer": {
              "appointments": "string"
            },
            "self": "string"
          },
          "name": "string",
          "nationality": "string",
          "occupation": "string",
          "officer_role": "string",
          "resigned_on": "date"
        }
      ],
      "items_per_page": "integer",
      "kind": "string",
      "links": {
        "self": "string"
      },
      "resigned_count": "integer",
      "start_index": "integer",
      "total_results": "integer"
    }

But fields such as "resigned_on" are not always present in the data, for example when no directors have resigned. To avoid users overlooking the need to add these missing fields, an optional input that takes the model schema and creates the missing fields would greatly improve the API development process and minimise errors once a workflow is in production.
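The requested behaviour can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names are hypothetical, nested fields are flattened to dotted names as one possible convention, lists in the schema are treated as a single field, and the officer record is made up.

```python
def schema_fields(schema, prefix=""):
    """Flatten a model schema into dotted field names, e.g. 'address.country'."""
    fields = []
    for key, value in schema.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            fields.extend(schema_fields(value, path + "."))
        else:
            fields.append(path)  # a type name, or a list treated as one field
    return fields


def flatten(record, prefix=""):
    """Flatten a parsed JSON record using the same dotted naming."""
    flat = {}
    for key, value in record.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, path + "."))
        else:
            flat[path] = value
    return flat


def ensure_fields(record, schema):
    """Return one value per schema field; fields absent from the record become None."""
    flat = flatten(record)
    return {name: flat.get(name) for name in schema_fields(schema)}


# A cut-down version of the item schema above and a made-up response item.
item_schema = {
    "name": "string",
    "resigned_on": "date",
    "address": {"country": "string", "locality": "string"},
}
officer = {"name": "SMITH, Jane", "address": {"country": "England"}}
row = ensure_fields(officer, item_schema)
```

Because the field list comes from the schema rather than from the response, every record produces the same columns, which is what the Ensure Fields workaround achieves by hand.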

- API SDK

- Category Developer

The Dynamic Input will not accept inputs with different record layouts. The "brute force" solution is to use a standard Input tool for each file separately and then combine them with a Union Tool. The Union Tool accepts files with different record layouts and issues warnings. Please enhance the Dynamic Input tool (or, perhaps, add a new tool) so that it combines the Dynamic Input functionality with the more laid-back, inclusive approach of the Union tool. Thank you.
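As a rough picture of the behaviour being asked for, the sketch below stacks tables with different layouts, fills the gaps with None, and collects warnings instead of failing. It is plain Python, not how either tool is implemented; `union_by_name` is a hypothetical name and the file names and rows are made up.

```python
def union_by_name(tables):
    """Stack tables (lists of dict rows) whose layouts differ.

    Returns the combined field list, the combined rows, and one warning per
    table that lacks some of the fields.
    """
    fields, warnings = [], []
    # First pass: collect every field name in order of first appearance.
    for rows in tables.values():
        for row in rows:
            for name in row:
                if name not in fields:
                    fields.append(name)
    # Second pass: emit rows in the common layout and note what was missing.
    combined = []
    for table_name, rows in tables.items():
        present = {name for row in rows for name in row}
        missing = [f for f in fields if f not in present]
        if missing:
            warnings.append(f"{table_name}: missing fields {missing}")
        combined.extend({f: row.get(f) for f in fields} for row in rows)
    return fields, combined, warnings


tables = {
    "jan.csv": [{"id": 1, "amount": 10}],
    "feb.csv": [{"id": 2, "region": "EU"}],
}
fields, combined, warnings = union_by_name(tables)
```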

- API SDK

- Category Developer

We could really use a proper API Tool for Input, rather than rely on curl queries, etc. that end up requiring many tools to parse into a proper table form, even using the JSON tools!

I for one deal regularly with cloud APIs, and pulling their data. We need an API Input tool that can handle various auth methods, Headers, Params, Body data, etc and that will ALSO handle converting the typical output (JSON) into two outputs - Meta info, and the table-compatible info.

I'm moving from direct SQL query to using API, and I literally have 15 Tools and steps required to create the same table data that the single SQL query tool gave me. In one case, I have to have an 18 tool Container that just handles getting a Bearer Token before I can pass that on to another container that actually does the curl query, etc and it's 15 tools needed to manage the output JSON into proper table-style data. (Yes, I already use the JSON tools, but the data requires massaging before that tool can work right).

As an add-on, we should also be able to make aliases for the API connection so we aren't having to put user/pass information into the workflow at any point. Interfaces are nice, but not really useful in automated workflow runs.

There's got to be a better way!
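For illustration, the two halves of such a tool might look roughly like this in Python: one function that builds an authenticated request, and one that splits a typical JSON response into meta info and table rows. Everything specific here is an assumption: the URL, the token, the "items" key and the function names are hypothetical, and the request is only built, never sent.

```python
import urllib.parse
import urllib.request


def build_request(url, token, params=None):
    """Build (but do not send) a GET request that carries a bearer token."""
    if params:
        url = f"{url}?{urllib.parse.urlencode(params)}"
    headers = {"Authorization": f"Bearer {token}", "Accept": "application/json"}
    return urllib.request.Request(url, headers=headers, method="GET")


def split_response(payload, rows_key="items"):
    """Split a parsed JSON response into (meta, rows).

    `rows_key` names the list that holds the table-like records; everything
    else is returned as meta info.
    """
    meta = {key: value for key, value in payload.items() if key != rows_key}
    rows = payload.get(rows_key, [])
    return meta, rows


# Hypothetical endpoint, token and response body.
request = build_request("https://api.example.com/v1/orders", "abc123", {"page": 1})
payload = {"total_results": 2, "page": 1, "items": [{"id": 1}, {"id": 2}]}
meta, rows = split_response(payload)
```

Keeping the token out of the workflow itself, as the post suggests, would mean passing it in from a stored connection rather than hard-coding it as done in this example.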

- API SDK

The Download tool is so much more than Downloads. Think about the situation where you are using the Download tool to upload invoices and try explaining that to co-workers. "Oh yes - I'm going to implement the API to upload the invoices using the Alteryx download tool..." Could we call it the Curl tool or something?

- API SDK

- Category Developer

In the standard Output Data tool, when the file type is CSV, it is possible to select a custom delimiter. It would be great to have the same option in the Azure Data Lake output tool so that, for example, you can write a pipe-delimited file to your ADLS storage account.
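For reference, this is what a pipe-delimited file looks like when produced with Python's standard `csv` module. The sketch only builds the text in memory; uploading it to ADLS is not shown, and `to_delimited` is a hypothetical helper name.

```python
import csv
import io


def to_delimited(rows, fieldnames, delimiter="|"):
    """Serialise dict rows as delimited text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=fieldnames, delimiter=delimiter, lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# The second field contains the delimiter, so the writer quotes it.
text = to_delimited([{"id": 1, "note": "a|b"}], ["id", "note"])
```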

Building a custom tool is nice, but the best way to show someone how to use it is to have an example. It would be great if we could package example workflows into a yxi file so our custom tools have samples to start from.

- API SDK

- Enhancement

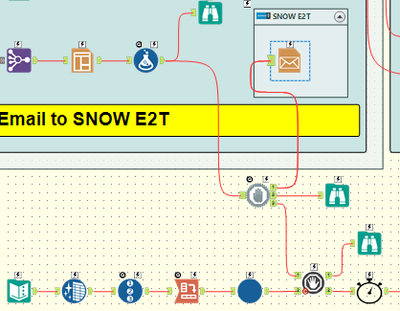

I have a workflow that sends an email to ServiceNow to create tickets. I have another workflow that runs after that one to pull the ticket numbers used for reporting. I thought I could combine the 2 workflows using a Block Until Done and then Wait a Second set for 120 seconds but I have found that the Email tool "runs as the last tool in your workflow".

Is it possible to change the runtime so it runs when called on?

- API SDK

- Category Developer

While it is good that the Test tool can stop outputs based on tests, its error messages don't give users the full details. It would be more useful to have an output location for a test when these errors appear, so that end users can troubleshoot instead of the workflow developer having to work through all of the error messaging.

There are workarounds for this that can be used, but they are often extensive and require the addition of significant logic. Adding optional outputs to the test tool would be a simple fix that could save a lot of hours of debugging when end users find an error.

- API SDK

- Category Developer

- Enhancement

Hello all,

As of today, you can use the Dynamic Select tool with two options:

- by type (you can dynamically select all fields, all date fields, etc.)

- by formula

I suggest two easy improvements:

- from a field list: you connect a list of fields to a second input, with a "Field name" field

- from a flow: you connect a flow to a second input and the common fields are selected
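The two suggested modes can be sketched as follows, treating a record as a Python dict. Both function names are hypothetical and the sample record is made up.

```python
def select_from_list(record, field_names):
    """Keep only the fields named in field_names, in that order.

    Names that do not exist in the record are skipped.
    """
    return {name: record[name] for name in field_names if name in record}


def select_common(record, other_fields):
    """Keep the fields that also exist in a second stream, in record order."""
    other = set(other_fields)
    return {name: value for name, value in record.items() if name in other}


record = {"id": 1, "name": "A", "amount": 10, "region": "EU"}

# Mode 1: the second input is a list of field names.
by_list = select_from_list(record, ["amount", "id", "missing"])

# Mode 2: the second input is another flow; only shared fields are kept.
by_flow = select_common(record, ["region", "id", "currency"])
```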

Best regards,

Simon

- API SDK

- Category Developer

We aren't getting a huge amount of help from support on this, so I'm posting this idea to raise awareness for the product teams responsible for the Salesforce connectors and the embedded Python environment.

This post from user Dubya describes the issue in detail:

I have a workflow with several salesforce tools in it, which works fine on my machine. But we need another alteryx user in our office to be able to access, run and maintain the workflow too, via their machine and copy of alteryx designer.

However we're finding that the salesforce inputs and outputs can only be authenticated on one machine at a time.

When the other new user opens the original workflow from the shared network location, the salesforce tools display an error "Salesforce Input (1): {'error': 'invalid_grant', 'error_description': 'authentication failure'}" and the tools fail to load any data. But we can see the full query in the tool and we can even set the custom query option and validate the query successfully, which suggests the source is being correctly connected to and queried, but we just can't run the tool.

The only way to run the tool successfully is to change the credentials and re-authenticate the tool. However this then de-authenticates the original machine, and when we open up the workflow on there and try to run it, the workflow brings back the same error.

We've both tried this authentication back and forth on our own machines and each time one of us re-authenticates, it de-authenticates the other, leading to it triggering the error.

Can someone help explain what's going on and how to fix it, as this doesn't bode well for our collaboration.

We're both running:

The latest build of Designer version 2021.2 (original machine also running desktop automation)

Salesforce Input Tool v4.1.0

Salesforce Output Tool v1.3.0

My response here identifies that this is a problem for our organization as well:

We're experiencing the same issue. It appears to be related to how the tool handles password and security token decryption. I've found that when you modify the related registry entry from "true" to "false", you can see in the tool's xml that the encrypted password and security token are still in there. I'm not sure what else is going on behind the scenes beyond that, but that ought to be addressable by the product teams handling the Salesforce connectors and the Python installation embedded in Designer.

The only differences in our environment compared to u/Dubya's are that we're running on 2020.4 and attempting to use Salesforce Input Tool v4.2.4.

This is a must have for anyone who needs the ability to share workflows among multiple users. This is part of a series of problems that these updated connectors have been plagued with since introducing them years ago, and no one at Alteryx seems to care enough to truly fix the problems. Salesforce is a core system for our organization, so having tools that utilize the latest version of Salesforce's APIs is very important to us. The additional features that the Input tool provides are welcome, but these bugs have to be sorted out in order for us to extract any kind of value out of them. If the "deprecated" Salesforce tools were ever to be removed from Designer while there are issues with the "new" connectors, we would have no choice other than to never upgrade Designer/Server again and be forced to look for another product to serve as our ETL platform.

Please, please, please address this.

It would be very helpful if there was a tool that could stop the workflow without throwing an error. Currently, you can use the message tool to throw an error on a certain condition, and then enable the "Cancel Running Workflow on Error" option in the Runtime settings, but when the workflow is stopped in this way, many other tools don't function such as the Output Data and Email tools. Simply adding a tool that stops the workflow without erroring that also allows the other tools to finish their job would be great.

- API SDK

- Category Developer

- New Request

It would be fantastic if the Detour tool worked in a Standard Workflow, and not just in Analytic Apps and Macros. I can think of many instances where this would be very useful.

- API SDK

- Category Developer

Please allow users to pull data from Power BI, e.g. datasets and usage data, so that it can be manipulated in Alteryx.

- API SDK

- New Request

Hi!

So Dynamic Select is a wonderful tool - but in Formula mode it effectively acts as a filter. It drops all of the other fields which don't match the filter and they disappear - floating in the workflow ether, dreaming of the Join tool or some other way they can be given XML life anew. It would be super cool if, instead of only letting the fields that evaluate to True exit and continue into the workflow, the False fields could be launched back into the workflow space via a False anchor, like on a Filter tool....

Hypothetical situation - I'm looking to isolate some fields and convert them to a different format based upon name or other characteristic. I'm doing this not to jettison my data set, but to improve it. I run Dynamic Select and the Multi-Field tool, and suddenly I'm scratching my head. How do I rejoin my workflow with my new and improved data easily? The most direct, albeit stylistically immature, way is apparently to add a New_ prefix to my newly created new-type fields, join the old fields against the data stream, and drop the old fields in favor of the New_ versions (soon to shed their prefixes in a Dynamic Rename)... It works, but it could be much easier.
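A sketch of what the True/False anchors would make possible, with a record treated as a Python dict: split the fields by a predicate, convert only the matching ones, and put them back in place without any prefix juggling. The function names, the "date_" naming rule and the conversion are all made up for illustration.

```python
def split_fields(record, predicate):
    """Return (true_part, false_part), like the T and F anchors of a Filter tool."""
    true_part, false_part = {}, {}
    for name, value in record.items():
        (true_part if predicate(name, value) else false_part)[name] = value
    return true_part, false_part


def rejoin(original, converted):
    """Put converted fields back in their original positions."""
    return {name: converted.get(name, value) for name, value in original.items()}


record = {"id": 7, "date_start": "2021-01-05", "name": "x"}

# Isolate the fields to convert; the rest stay available on the False side.
matched, rest = split_fields(record, lambda name, value: name.startswith("date_"))

# Example conversion applied only to the matched fields.
converted = {name: value.replace("-", "/") for name, value in matched.items()}
merged = rejoin(record, converted)
```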

Thanks!

- API SDK

- Category Developer
