Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimension constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that there is an equal number of images for each label);

> and, at the very least, by allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
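Until the tool offers these options, the second and third points can be handled upstream. The following is a minimal sketch, not part of the Image Recognition tool. It assumes the training set is a list of `(image_path, label)` pairs and that a grayscale image is available as a 2-D list of 0-255 values. `balanced_split` and `gray_to_rgb` are hypothetical helpers. `balanced_split` downsamples every label to the size of the rarest one and then splits each label with the same train fraction. `gray_to_rgb` copies the single channel into R, G and B.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple

Sample = Tuple[str, Hashable]  # (image_path, label); hypothetical layout


def balanced_split(samples: Sequence[Sample], train_fraction: float = 0.8,
                   seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Downsample every label to the rarest label's count, then split each
    label into train/test with the same fraction, so both sets are balanced."""
    by_label: Dict[Hashable, List[str]] = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train: List[Sample] = []
    test: List[Sample] = []
    for label, paths in by_label.items():
        chosen = rng.sample(paths, per_label)  # random subset, no replacement
        train.extend((p, label) for p in chosen[:n_train])
        test.extend((p, label) for p in chosen[n_train:])
    return train, test


def gray_to_rgb(pixels: List[List[int]]) -> List[List[Tuple[int, int, int]]]:
    """Turn a single-channel (grayscale) image into RGB by copying each value
    into all three channels, e.g. for MNIST digits."""
    return [[(v, v, v) for v in row] for row in pixels]
```

In practice, an imaging library would usually handle the channel conversion. With Pillow, for example, `Image.open(path).convert("RGB")` does the same thing for an image file.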

Question: will you allow users to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


I've obviously been doing a lot of work with APIs, as this is the second idea I've posted today based on recent API work, but I believe this one is wider reaching.

I've been using Alteryx for over 4 years and always assumed the Select tool implicitly enforced the field types it shows. As a best practice, I would therefore add a Select tool after input tools to catch any data type issues. However, I discovered that the type is only configured, and subsequently enforced, for fields where you change the data type, length or field name. I found this during API development, where I had an input field that was a string, e.g. 01777777. A Select tool placed after it shows a string data type; however, if the input changes to 11777777, the Select tool switches to a numeric data type. As a result, downstream formulas such as concatenating two strings fail.

The workaround is to change the type in the Select tool to string:forced. That is fine when you know about it, but I suspect that a large majority of users don't. Plus, if you have something like 2022-01-26, which is initially recognised as a string, the forced option will be string:forced. If you want it to be date:forced, you need a first Select tool to change it to date and a second Select tool to change it to date:forced.

Therefore, my suggestion is to add a checkbox option to the Select tool to force all field types. This would update the tool's XML and ensure that what I currently assume is implicit behaviour is actually implemented.
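The failure mode described above can be reproduced in a few lines. The following is a simplified Python illustration, not Alteryx's actual type-detection logic. The rule in `infer` is an assumption chosen only to mimic the behaviour in the post: all-digit text without a leading zero becomes a number. `select`, `build_key` and the `"ACC-"` prefix are hypothetical.

```python
from typing import Dict, Set, Union

Value = Union[int, str]


def infer(raw: str) -> Value:
    """Hypothetical type detection: all-digit text without a leading zero is
    read as a number; anything else stays a string."""
    if raw.isdigit() and not (len(raw) > 1 and raw.startswith("0")):
        return int(raw)
    return raw


def select(record: Dict[str, str], forced_string: Set[str]) -> Dict[str, Value]:
    """Mimic a Select tool: fields listed in forced_string keep their string
    type; every other field falls back to whatever infer() decides."""
    return {name: raw if name in forced_string else infer(raw)
            for name, raw in record.items()}


def build_key(record: Dict[str, Value]) -> str:
    # Downstream "formula": concatenate a prefix with the ID field.
    # Raises TypeError when ID was inferred as a number.
    return "ACC-" + record["ID"]
```

With these definitions, `select({"ID": "01777777"}, set())` keeps the string, but `select({"ID": "11777777"}, set())` yields the integer 11777777, and `build_key` then raises `TypeError`. Only `select({"ID": "11777777"}, {"ID"})`, the "forced" case, keeps `"11777777"`. A "force all field types" checkbox would amount to putting every field in `forced_string`.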

Connecting to Azure SQL Database with Azure AD, including Multi-Factor Authentication, is a crucial feature nowadays. The connector should be configurable through Interface tools so we can switch databases within the same Azure SQL Database server.

There is a workaround using ODBC, but it does not support caching credentials, which makes it problematic: the credential prompt appears every time we run the workflow. ODBC also requires a separate DSN for every database on the same server.

To make this easy for users, there should be a native connector for this feature, with a user experience as simple as the Azure Data Lake connector's.
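On the ODBC side, the separate-DSN problem can be softened with DSN-less connection strings, where only the database name changes. The following is a minimal sketch that builds such a string. It assumes Microsoft ODBC Driver 18 for SQL Server is installed and uses its `Authentication=ActiveDirectoryInteractive` keyword for Azure AD sign-in with MFA. The server, database and user names are hypothetical, and whether a given Alteryx input accepts this exact string is not something the sketch establishes.

```python
from typing import Dict

DEFAULT_DRIVER = "ODBC Driver 18 for SQL Server"  # assumed installed driver


def azure_sql_conn_str(server: str, database: str, user: str,
                       driver: str = DEFAULT_DRIVER) -> str:
    """Build a DSN-less ODBC connection string for Azure SQL Database using
    Azure AD interactive (MFA-capable) sign-in. Only `database` changes
    between databases on the same server, so no per-database DSN is needed."""
    parts: Dict[str, str] = {
        "Driver": "{" + driver + "}",
        "Server": f"tcp:{server},1433",
        "Database": database,
        "UID": user,  # Azure AD user principal name (hypothetical example)
        "Authentication": "ActiveDirectoryInteractive",
        "Encrypt": "yes",
    }
    return "".join(f"{key}={value};" for key, value in parts.items())
```

From Python, the string could be passed to `pyodbc.connect(conn_str)`. This does not address the credential-caching complaint, since interactive authentication may still prompt on every run. That is the part a native connector would need to solve.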

Dear Alteryx GUI Gang,

I often create a container, customize its colours, margins, transparency and border, and then want the same look for other containers. It would be nice to have a format painter (brush) function to apply the format of one container to another. This could of course be extended to other tools such as Comments. There might be a desire to apply it to more tools too, but Comments and Containers would be my focus, as they are almost always custom configured.

Cheers,

Mark

Hello all,

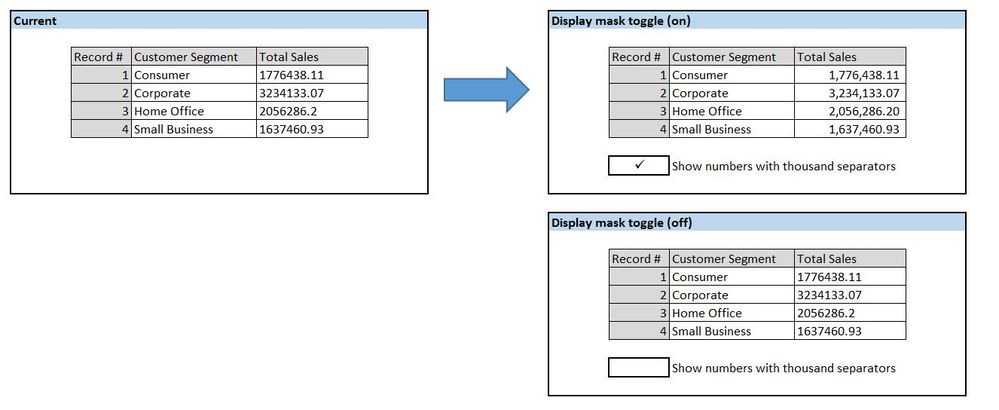

When looking at the Results window, I often find it a headache to read numeric results because of the lack of commas. I understand that putting commas into the data itself could cause some weird errors. However, would it be possible to add a toggle that displays all numeric fields right-aligned, with thousands separators, in the Results window? I am referring to using a display mask to make numeric fields look like they have the thousands separator while retaining numeric functionality (as opposed to converting the fields to strings).

What do you think?
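The key distinction is between the stored value and how it is rendered. The following minimal Python sketch shows that idea; it is not how the Results window is implemented. The column width of 15 and the two decimal places are hypothetical defaults, and `display_mask` is a hypothetical name.

```python
from typing import List


def display_mask(value: float, width: int = 15, decimals: int = 2) -> str:
    """Render a number for display only: thousands separators, fixed decimals,
    right-aligned in a column of `width` characters."""
    return f"{value:>{width},.{decimals}f}"


values: List[float] = [1234567.891, 42.0, -9876543.2]
total = sum(values)                        # still numeric: arithmetic works
shown = [display_mask(v) for v in values]  # strings used only for display
for line in shown:
    print(line)
```

The list `values` is never modified, so sorting, summing and filtering keep working on numbers. Only the printed column gets commas and right alignment.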

Similar to https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Custom-Functions-in-AMP/idc-p/845446#M16381, it would be great to have AMP allow for custom C++ functions. Custom XML functions were added in 21.1 for AMP, so custom C++ functions would be the natural next step!

cc: @jdunkerley79 @TonyaS

Our company often builds applications that need to update dropdowns dynamically based on a user's previous selections.

For example:

- A user needs to select their server, database, and table for analysis (3 dropdowns).

- When the user selects their server, a query is run to get a list of all databases on that server. Then the database dropdown will automatically populate with this list of databases.

- The user then makes a database selection, and a query is then run to get all tables within that database. The table dropdown will automatically populate with this list of tables.

- The user makes their table selection, and then runs their analysis using the server, database, and table variables with values that they have selected from each dropdown.
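The server → database → table cascade above can be modelled as a small state machine. Each selection runs a catalog query that fills the next dropdown and clears the selections below it. The following is a minimal Python sketch, not an Alteryx Interface tool feature. SQLite stands in for a database server: each "server" is one connection with `ATTACH`ed in-memory databases. `CascadingDropdowns`, `make_demo_server` and the database and table names are hypothetical. A real server would use its own catalog queries instead of `PRAGMA database_list` and `sqlite_master`.

```python
import sqlite3
from typing import Callable, Dict, List, Optional


def make_demo_server() -> sqlite3.Connection:
    """Stand-in for a database server: one SQLite connection with two
    attached in-memory databases (hypothetical names 'sales' and 'hr')."""
    conn = sqlite3.connect(":memory:")
    conn.execute("ATTACH DATABASE ':memory:' AS sales")
    conn.execute("ATTACH DATABASE ':memory:' AS hr")
    conn.execute("CREATE TABLE sales.orders (id INTEGER)")
    conn.execute("CREATE TABLE sales.customers (id INTEGER)")
    conn.execute("CREATE TABLE hr.staff (id INTEGER)")
    return conn


class CascadingDropdowns:
    """Each selection runs a query that repopulates the next dropdown and
    clears any downstream selections that are no longer valid."""

    def __init__(self, servers: Dict[str, Callable[[], sqlite3.Connection]]):
        self.servers = servers
        self.conn: Optional[sqlite3.Connection] = None
        self.selection: Dict[str, str] = {}

    def server_choices(self) -> List[str]:
        return sorted(self.servers)

    def _databases(self) -> List[str]:
        # PRAGMA database_list yields (seq, name, file) rows.
        return [row[1] for row in self.conn.execute("PRAGMA database_list")]

    def select_server(self, server: str) -> List[str]:
        self.conn = self.servers[server]()
        self.selection = {"server": server}  # resets database and table
        return self._databases()             # choices for dropdown 2

    def select_database(self, database: str) -> List[str]:
        if self.conn is None:
            raise RuntimeError("select a server first")
        if database not in self._databases():  # validate before using in SQL
            raise ValueError(f"unknown database: {database}")
        self.selection["database"] = database
        self.selection.pop("table", None)
        query = (f'SELECT name FROM "{database}".sqlite_master '
                 "WHERE type = ? ORDER BY name")
        return [row[0] for row in self.conn.execute(query, ("table",))]

    def select_table(self, table: str) -> Dict[str, str]:
        if "database" not in self.selection:
            raise RuntimeError("select a database first")
        self.selection["table"] = table
        return dict(self.selection)  # the values handed to the analysis
```

For example, `select_database("sales")` returns `["customers", "orders"]`. Changing the database afterwards discards any table chosen for the previous one.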

We can do this in other programs, but the lack of dynamic selections/dependent dropdowns is unfortunately a big limitation for us when building Alteryx applications. Our current workarounds are chaining applications together or using PyQt within the workflow. Chaining is clunky and often causes unforeseen issues when uploading to Server, with non-descriptive errors. Using PyQt brings Python versioning issues.

If this interactivity can somehow be added to Alteryx applications it would be a huge upgrade to our current Alteryx processes. Any suggestions for further workarounds would also be helpful!

Thank you,

Amanda

The Table tool duplicates work by forcing the user to set the decimal places again.

Normally, all data preparation has been completed before the Table tool; we just want to use this tool to format the header or add conditional formatting. However, once the Table tool is connected, we have to reconfigure the decimal places for every numeric column. Because the column names change from year to year, this adds manual intervention to the workflow.

We recommend giving users the option to use the upstream data as-is, without changing the underlying data set.

Alteryx lacks tools to extract data from True PDF documents. The current set of tools (Computer Vision) only allows us to extract data from images, which is not ideal for True PDF documents in terms of accuracy.

Hi, I was looking for this but couldn't find a similar idea, so I'm posting a new one. If someone knows of a similar idea, please ask the moderators to merge them.

`CountChars(<String>, <char to count>, <case sensitive>)`

Where `<char to count>` and `<case sensitive>` are optional parameters.

If `<char to count>` is not provided, the function returns the total character count within the `<String>`.

If `<char to count>` is provided, it returns the number of occurrences of that character within the `<String>`.

PS: For those tempted to suggest a workaround, I've been using REGEX_CountMatches() for this. The point is to simplify the user experience and improve workflow performance by providing a native function, instead of using regex, which is very demanding on resources.
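To pin down the proposed semantics, here is a Python reference sketch of `CountChars`. It counts directly instead of using a regular expression. The proposal does not say what `<case sensitive>` defaults to, so case-sensitive matching is an assumption here. `count_chars` is a hypothetical name. This sketch does not show whether a native Alteryx function would outperform REGEX_CountMatches(); that is the poster's expectation.

```python
from typing import Optional


def count_chars(text: str, char: Optional[str] = None,
                case_sensitive: bool = True) -> int:
    """Sketch of the proposed CountChars(<String>, <char to count>, <case sensitive>).

    Without `char`, return the total character count; otherwise return how
    many times that single character occurs. Defaulting to case-sensitive
    matching is an assumption; the proposal does not specify it."""
    if char is None:
        return len(text)
    if len(char) != 1:
        raise ValueError("char must be a single character")
    if not case_sensitive:
        text, char = text.lower(), char.lower()
    return text.count(char)
```

For example, `count_chars("Alteryx")` is 7, `count_chars("AaA", "a")` is 1, and `count_chars("AaA", "a", case_sensitive=False)` is 3.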

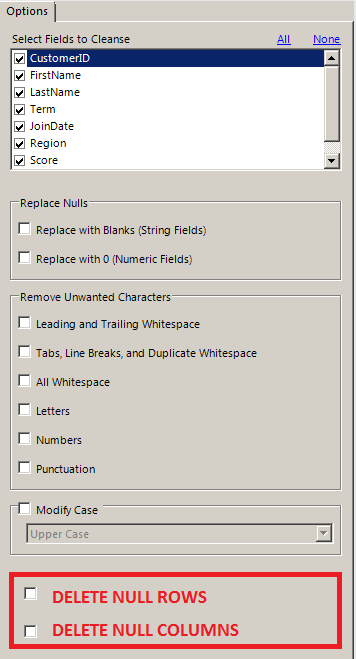

There are a few workarounds for this task, but it would be much easier if the Data Cleansing tool could delete null rows and null columns. After all, it's just a macro that can be modified and repackaged into Alteryx Designer.

Currently, deleting a null row requires validating multiple columns for common null attributes. Similarly, deleting a null column requires every column to be compared at row level and flagged for removal. Both approaches are clumsy.

Wouldn't it be so simple if the Data Cleansing tool offered such checkboxes!!!
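Both checks reduce to "is every value in this row (or column) null?". The following is a minimal Python sketch of that logic, not the Data Cleansing macro's internals. It assumes records are dictionaries keyed by column name. `None` always counts as null, and blank strings count as null unless `empty_is_null=False`. The function names are hypothetical.

```python
from typing import Any, Dict, List, Sequence, Tuple

Row = Dict[str, Any]


def is_null(value: Any, empty_is_null: bool = True) -> bool:
    """Assumption: None is null; optionally blank strings count as null too."""
    if value is None:
        return True
    return empty_is_null and isinstance(value, str) and value.strip() == ""


def drop_null_rows_and_columns(rows: Sequence[Row], columns: Sequence[str],
                               empty_is_null: bool = True) -> Tuple[List[Row], List[str]]:
    """Drop columns that are null in every row, then rows that are null in
    every remaining column. Values in kept cells are passed through unchanged."""
    if not rows:
        return [], list(columns)
    kept_columns = [c for c in columns
                    if not all(is_null(r.get(c), empty_is_null) for r in rows)]
    kept_rows = [{c: r.get(c) for c in kept_columns} for r in rows
                 if not all(is_null(r.get(c), empty_is_null) for c in kept_columns)]
    return kept_rows, kept_columns
```

Columns are dropped first. This does not change which rows are removed, because a dropped column is null in every row anyway.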

Right now it is not possible to open .xlsx files in Alteryx whose access has been restricted to specific users in Excel, even when you are logged in to Alteryx and Excel as the same user. It would be a great enhancement if Alteryx could recognize which users/email addresses are allowed to input a file. To get around the problem, we currently change the file restrictions by right-clicking the file -> Properties -> Security, but this is time-consuming and not a smooth fix.

All the best,

Elin

Hello,

I had a business case requiring a cost-effective and quick storage solution for real-time, online-sourced survey data from customers. A MongoDB instance would fit the need, so I quickly spun up a cluster on MongoDB Atlas. Atlas was launched by MongoDB in 2016 as a database-as-a-service deployed on AWS, and all Atlas instances require TLS/SSL to connect. Currently, the Alteryx MongoDB connector does not support TLS/SSL connections, so it doesn't work against Atlas. This broke my plan, which now requires manual intervention before ingesting data into Alteryx (not ideal).

Please consider adding TLS/SSL support to all connectors. I am building Alteryx out in my agency as a data platform that handles sensitive customer information (name, address, email, etc.). Most tools I use to connect to secure servers today support this type of connection, and resolving this should be a priority for Alteryx.

Thanks,

Mike Schock

I recently learned that Alteryx doesn't support Denodo data sources. At our company, we use Denodo as a data virtualization tool and Alteryx for data blending. The request is for Alteryx to support Denodo as a data source, so that our company can contact Alteryx for any support issues related to Denodo.

Hi Alteryx Devs -

It would be *really tight* to have a Drop Down interface tool that supports autocompletion based on an ODBC connection to a table/column or an AJAX call. I recently had a situation where we needed to give users the ability to select an address and then run a workflow. But the truth is, our address data is terrible. What I really needed was to let users start typing the address and then give them a list of choices. They would pick the correct (but usually wrongly formatted) address, and I would send that value into the workflow.

I could not find a reliable way for a Gallery user to pick an address from our list, so I eventually wound up writing an AJAX piece. It handled the autocompletion, captured the user input, and posted to a service that would in turn interact with the Gallery through the API, get the response, and send it back to the calling page and the user. That is a significant amount of work for autocompletion, an exceedingly common web operation.

This would make a lot of Gallery operations flow much more naturally.
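The lookup behind such a dropdown is small. The following is a minimal Python sketch of prefix autocompletion that binary-searches a sorted index. It assumes the address list fits in memory. Matching is case-insensitive and ignores extra spaces, an assumed normalization aimed at messy address data. `PrefixAutocomplete` and `normalize` are hypothetical names. How this would be wired into a Gallery app or an AJAX endpoint is left open.

```python
import bisect
from typing import Iterable, List, Tuple


def normalize(text: str) -> str:
    # Case-insensitive and tolerant of extra spaces, for messy address data.
    return " ".join(text.casefold().split())


class PrefixAutocomplete:
    """Return entries whose normalized form starts with what the user typed,
    using binary search over a sorted index instead of scanning every entry."""

    def __init__(self, entries: Iterable[str]):
        self._index: List[Tuple[str, str]] = sorted((normalize(e), e) for e in entries)
        self._keys = [key for key, _ in self._index]

    def suggest(self, typed: str, limit: int = 10) -> List[str]:
        prefix = normalize(typed)
        # Entries sharing the prefix are contiguous in sorted order,
        # starting at the first key >= prefix.
        start = bisect.bisect_left(self._keys, prefix)
        matches: List[str] = []
        for key, original in self._index[start:start + limit]:
            if not key.startswith(prefix):
                break  # sorted order: no later entry can match either
            matches.append(original)
        return matches
```

Suggestions are returned in their original spelling, so the value the user picks can be passed to the workflow unchanged. This matches the post's "correct but wrongly formatted" address.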

Thanks for listening!

brian

From Wikipedia

> Druid is a column-oriented, open-source, distributed data store written in Java. Druid is designed to quickly ingest massive quantities of event data, and provide low-latency queries on top of the data.[1] The name Druid comes from the shapeshifting Druid class in many role-playing games, to reflect the fact that the architecture of the system can shift to solve different types of data problems. Druid is commonly used in business intelligence/OLAP applications to analyze high volumes of real-time and historical data.[2] Druid is used in production by technology companies such as Alibaba,[2] Airbnb,[2] Cisco,[3] eBay,[4] Netflix,[5] PayPal,[2] Yahoo,[6] and the Wikimedia Foundation.[7]

More and more companies are moving from Hive to Druid for data visualization needs. Maybe it's time to look at Druid integration with Alteryx?

I reported this to the support team but was told it was by design and to post here.

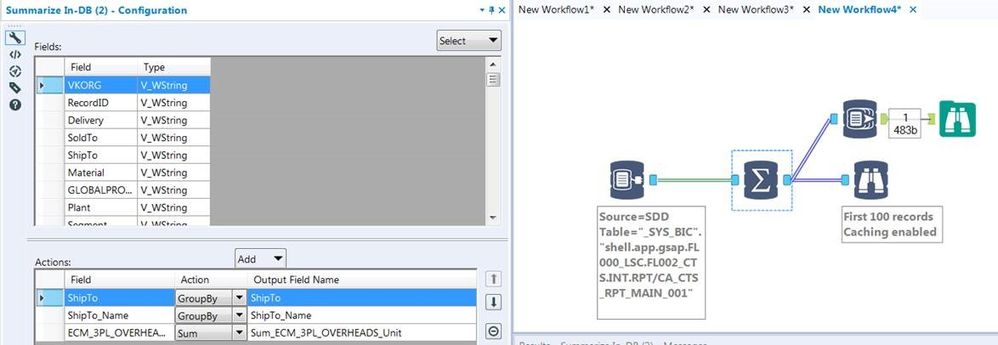

In-DB Inefficient SQL

I would like to report that the In-DB tools generate horribly inefficient SQL code for simple operations. It seems that no matter which tools you use, every statement starts with a nested 'SELECT * FROM'.

Example simple workflow:

This is a simple Select and Group By, but the generated SQL is:

```sql
SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"
FROM (SELECT * FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001") AS "a"
GROUP BY "ShipTo", "ShipTo_Name"
```

This takes a very long time to execute:

```
Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'
successfully executed in 15.752 seconds (server processing time: 15.699 seconds)
```

Whereas if I take the same query and remove the nested SELECT *:

```sql
SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit"
FROM "_SYS_BIC"."shell.app.gsap.FL000_LSC.FL002_CTS.INT.RPT/CA_CTS_RPT_MAIN_001" AS "a"
GROUP BY "ShipTo", "ShipTo_Name"
```

It is very quick:

```
Statement 'SELECT "ShipTo", "ShipTo_Name", SUM("ECM_3PL_OVERHEADS_Unit") AS "Sum_ECM_3PL_OVERHEADS_Unit" FROM ...'
successfully executed in 1.211 seconds (server processing time: 1.157 seconds)
```

So Alteryx generates queries that run up to 13x slower than they should (15.752 s vs 1.211 s), which defeats the point of using In-DB. As you can imagine, in a workflow with multiple Connect In-DB tools this adds up to a substantial amount of time. The example above comes from an SAP HANA DB with 1.9m rows and ~90 columns, but we have much bigger tables/views than this.

If you look, you will see the same behaviour for all In-DB tools: each tool wraps the previous query in another nested SELECT with its particular operator.

MY SUGGESTION:

My suggestion is that Alteryx should combine the SQL of the first few tools and avoid SELECT * completely unless no Select tool has been used. It should combine:

- Connect In-DB + Select

- Connect In-DB + Filter

- Connect In-DB + Summarise

Preferably, it should combine/flatten everything up to the first Join or Union, but Select + Filter are a must!

Note that some DBs seem able to un-nest these big nested queries in their query plans for some tables, but normally not for views. Others cannot cope at all, so the In-DB tools cannot even be used to browse 100 records (because of the SELECT *).
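For a linear chain, the flattening rule can be sketched as follows. This is a simplified illustration, not Alteryx's query generator. `InDbChain` and its fields are hypothetical, and the model ignores renames, Joins and Unions. SQLite stands in for SAP HANA only to check that the nested and flattened forms return the same rows. Whether a particular database's optimizer already un-nests the subqueries varies by database.

```python
import sqlite3
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class InDbChain:
    """Hypothetical model of Connect In-DB -> Select -> Filter -> Summarize."""
    source: str                          # quoted table or view name
    columns: Optional[List[str]] = None  # Select tool; None = all columns
    where: Optional[str] = None          # Filter tool expression
    group_by: List[str] = field(default_factory=list)
    aggregates: List[str] = field(default_factory=list)

    def nested_sql(self) -> str:
        """Wrap every step in a subquery, like the generated SQL shown above."""
        sql = f"SELECT * FROM {self.source}"
        if self.columns is not None:
            sql = f'SELECT {", ".join(self.columns)} FROM ({sql}) AS "a"'
        if self.where:
            sql = f'SELECT * FROM ({sql}) AS "b" WHERE {self.where}'
        if self.group_by:
            select_list = ", ".join(self.group_by + self.aggregates)
            sql = (f'SELECT {select_list} FROM ({sql}) AS "c" '
                   f'GROUP BY {", ".join(self.group_by)}')
        return sql

    def flat_sql(self) -> str:
        """Emit one SELECT against the source, with no SELECT * subqueries."""
        if self.group_by:
            select_list = ", ".join(self.group_by + self.aggregates)
        elif self.columns is not None:
            select_list = ", ".join(self.columns)
        else:
            select_list = "*"  # only when no Select tool was used
        sql = f"SELECT {select_list} FROM {self.source}"
        if self.where:
            sql += f" WHERE {self.where}"
        if self.group_by:
            sql += f' GROUP BY {", ".join(self.group_by)}'
        return sql


# Toy data to check that both forms agree (SQLite as a stand-in database).
conn = sqlite3.connect(":memory:")
conn.execute('CREATE TABLE "sales" ("ShipTo" TEXT, "ShipTo_Name" TEXT, "Units" REAL)')
conn.executemany('INSERT INTO "sales" VALUES (?, ?, ?)',
                 [("S1", "North", 5.0), ("S1", "North", -1.0), ("S2", "South", 3.0)])
chain = InDbChain(source='"sales"',
                  columns=['"ShipTo"', '"ShipTo_Name"', '"Units"'],
                  where='"Units" > 0',
                  group_by=['"ShipTo"', '"ShipTo_Name"'],
                  aggregates=['SUM("Units") AS "Sum_Units"'])
print(chain.nested_sql())
print(chain.flat_sql())
```

The flattened query is valid here because a Filter placed after a Select can only reference columns that already exist in the source. Renamed fields would need their expressions substituted back, which this sketch does not attempt.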

In the Gallery, the File Browse tool returns the file location on the server where the file was uploaded. This allows the file to then be read in as input to a workflow.

If you need the file path of the original file location, you have to add a Text Input for the user to manually add it.

In my case (#00293302), I used a chained app to populate a list box for the user to select the sheet names they would like to process through the application. Unfortunately, since I was not able to capture the original file location, the application errored out. This is because the second app uses the file location on the server where the file was uploaded, which is provided by the first workflow. This file location (from the File Browse tool) is temporary, and inputs are deleted immediately after processing.

Want to test this out? Create an application where you Output the file path from a File Browse tool.

I know... grrr, this doesn't match your original file location!

Thank you,

Mark

Alteryx Server was recently updated to allow TLS-mediated connections to the MongoDB persistence layer. This allowed us to switch from the embedded MongoDB to a highly available MongoDB Atlas cluster. After the switch, we went to edit our workflows that use the persistence layer's data (Server Usage Report, etc.) to point at the new Atlas cluster. To our surprise, we found that the MongoDB Input tool does not support TLS connections. This absolutely needs to be changed. Due to organizational constraints, Atlas is our only option for an HA persistence layer, so we absolutely have to have TLS support for the MongoDB Input tool. There is no other way for us to natively query our server persistence layer in Designer. Please bring the MongoDB Input tool into alignment with the MongoDB connections that are supported by Alteryx Server.

It would be very helpful to be able to export a workflow as a step-by-step document, so that someone without access to Alteryx can understand the steps taken to build the flow, and hence how the result or output was produced.
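Part of such a document could be generated from the workflow file itself, which is XML. The following is a minimal sketch built on an assumed, heavily simplified `.yxmd` layout. The layout has `Nodes/Node` elements with a `ToolID` attribute, a `GuiSettings` element with a `Plugin` attribute, and annotation text, plus `Connections/Connection` elements with `Origin`/`Destination` ToolIDs. Real files carry far more configuration. Containers, macros and multiple output anchors are ignored here. `workflow_steps` and the sample content are hypothetical. The tools are listed in dependency order using Kahn's topological sort, with ties broken by ToolID.

```python
import heapq
import xml.etree.ElementTree as ET
from collections import defaultdict
from typing import Dict, List

# Simplified, assumed shape of a .yxmd workflow file (illustrative only).
SAMPLE_YXMD = """
<AlteryxDocument>
  <Nodes>
    <Node ToolID="1">
      <GuiSettings Plugin="AlteryxBasePluginsGui.DbFileInput.DbFileInput" />
      <Properties><Annotation><DefaultAnnotationText>customers.csv</DefaultAnnotationText></Annotation></Properties>
    </Node>
    <Node ToolID="2">
      <GuiSettings Plugin="AlteryxBasePluginsGui.Filter.Filter" />
      <Properties><Annotation><DefaultAnnotationText>[Region] = "West"</DefaultAnnotationText></Annotation></Properties>
    </Node>
    <Node ToolID="3">
      <GuiSettings Plugin="AlteryxBasePluginsGui.DbFileOutput.DbFileOutput" />
      <Properties><Annotation><DefaultAnnotationText>west.csv</DefaultAnnotationText></Annotation></Properties>
    </Node>
  </Nodes>
  <Connections>
    <Connection><Origin ToolID="2" Connection="True" /><Destination ToolID="3" Connection="Input" /></Connection>
    <Connection><Origin ToolID="1" Connection="Output" /><Destination ToolID="2" Connection="Input" /></Connection>
  </Connections>
</AlteryxDocument>
"""


def workflow_steps(yxmd_text: str) -> List[str]:
    """List top-level tools in dependency order as numbered, readable steps."""
    root = ET.fromstring(yxmd_text.strip())
    names: Dict[int, str] = {}
    notes: Dict[int, str] = {}
    for node in root.findall("./Nodes/Node"):  # containers are not handled
        tool_id = int(node.get("ToolID"))
        gui = node.find("GuiSettings")
        plugin = gui.get("Plugin", "Unknown") if gui is not None else "Unknown"
        names[tool_id] = plugin.split(".")[-1]  # e.g. "Filter"
        text = node.findtext("Properties/Annotation/DefaultAnnotationText")
        notes[tool_id] = (text or "").strip()
    downstream: Dict[int, List[int]] = defaultdict(list)
    indegree = {tool_id: 0 for tool_id in names}
    for conn in root.findall("./Connections/Connection"):
        src = int(conn.find("Origin").get("ToolID"))
        dst = int(conn.find("Destination").get("ToolID"))
        downstream[src].append(dst)
        indegree[dst] += 1
    ready = [t for t, d in indegree.items() if d == 0]
    heapq.heapify(ready)  # smallest ToolID first among tools that are ready
    steps: List[str] = []
    while ready:
        tool_id = heapq.heappop(ready)
        note = f": {notes[tool_id]}" if notes[tool_id] else ""
        steps.append(f"Step {len(steps) + 1}: {names[tool_id]} (tool {tool_id}){note}")
        for nxt in downstream[tool_id]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                heapq.heappush(ready, nxt)
    return steps
```

For the sample, the output is one line per tool, from the input file through the filter to the output file. A fuller document would also describe each tool's configuration.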

Tableau v2018.3 introduced multiple table extracts. These are particularly useful for fact-table-to-fact-table joins and for fact-table-to-entitlement-table joins for row-level security, where the number of rows created by the join and/or the size of the join results would be prohibitively large. They are also useful for fact-table-to-spatial joins, where we might have multiple spatial objects (for example custom province/district/health facility catchment) for each row of fact table data.

So in Alteryx I'd like to be able to specify 2+ tables & their join keys and then write out a .hyper multiple tables extract.

Jonathan
