Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (in order to have an equivalent number of images for each of the labels),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow users to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Hello

A Cartesian product is a common issue when joining datasets on a bad key. What I suggest is an option on the Join tool to check whether the join will produce a Cartesian product.

- there is a label "Cartesian product (non join key uniqueness) detection"

- under it, a drop-down menu with three choices:

  - do nothing

  - fail

  - warning

Algo :

if "do nothing" ==> well... do nothing more than the current behaviour.

if "fail" or "warning": compare the distinct count of the join key with the row count on each side of the join. If neither side is unique, display a warning or an error message.
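The check described above can be sketched in Python. This is only an illustration of the proposed logic, not an existing Alteryx feature: the function name `check_join_keys` and the `mode` values are assumptions that mirror the three drop-down choices.

```python
import warnings


def check_join_keys(left_keys, right_keys, mode="warning"):
    """Return True if the join key is non-unique on both sides.

    mode is "do nothing", "warning", or "fail" (hypothetical names
    mirroring the proposed drop-down). Keys are any hashable values;
    use tuples for multi-field keys.
    """
    if mode not in ("do nothing", "warning", "fail"):
        raise ValueError(f"unknown mode: {mode!r}")
    if mode == "do nothing":
        return False  # current behaviour: no check at all

    left_keys = list(left_keys)
    right_keys = list(right_keys)
    # A side is unique when its distinct key count equals its row count.
    left_unique = len(set(left_keys)) == len(left_keys)
    right_unique = len(set(right_keys)) == len(right_keys)
    if left_unique or right_unique:
        return False

    message = "Join key is not unique on either side: possible Cartesian product"
    if mode == "fail":
        raise ValueError(message)
    warnings.warn(message)
    return True
```

For example, `check_join_keys([1, 2, 3], [1, 1, 2])` returns `False` because the left side is unique, while duplicated keys on both sides trigger the warning or the error depending on `mode`.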

Best regards,

Simon

I would like to propose three feature enhancements for the Cross Tab tool under the Transform tool category.

1. Bringing Concat Unique functionality, which is an idea that is currently in Coming Soon status.

2. Adding Start and End in addition to Separator, similar to the Concatenate Properties found in the Summarize tool.

3. Changing the Default Size from 2048 to 1073741823 (max V_WString size). It is common, especially for new users, to ignore the truncation errors and potentially miss important data that may need to be processed downstream.

Is it possible to add sort functionality to the Sample tool in Designer, similar to the 'Sample Based on Order' functionality in the Sample tool in Designer Cloud? This would cut down on the Sort + Sample tool combo in Designer!

Thanks!

I would love a tool to be created for looking up a value in a table based on a condition. It could be called "Lookup." One input to the tool would be the lookup list, the other is the main database. Inside the tool you could enter functions that can query the lookup table and return the results either as an overwrite of an existing field in the main DB or as a new field in the main DB, similar to the options in the Multi-Row Formula tool.

Here is a link to my post in Community that explains the problem. The solution, in a nutshell, was to create a Join (which resulted in millions of additional rows), run the conditional formula, then filter to get rid of the millions of rows that were created by the Join so only those that met the condition remained (the original database rows).

Here is the text of my Community post describing my project (slightly modified for clarity):

Table 1: A list of Pay Dates (the lookup table)

Table 2: Daily timekeeper data with Week Start and Week End Date fields.

The goal: To find the Pay Date in Table 1 that is greater than the Week Start Date in Table 2 and no more than 13 days after the Week End Date in Table 2.

[Table 2: Week Start Date] < [Table 1: Pay Date]

and [Table 2: Week End Date] < [Table 1: Pay Date]

and DateTimeDiff([Table 1: Pay Date], [Table 2: Week End Date], 'Days') <= 13
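To illustrate what such a lookup could do without the intermediate Join, here is a minimal Python sketch of the three conditions above. The function name `find_pay_date` is hypothetical, and the sketch assumes the pay dates are sorted ascending and that the earliest qualifying pay date is the one wanted.

```python
from bisect import bisect_right
from datetime import date, timedelta


def find_pay_date(pay_dates, week_start, week_end, max_days=13):
    """Return the earliest pay date that is after both week dates and
    no more than max_days after week_end, or None if none qualifies.

    pay_dates must be sorted in ascending order.
    """
    lower = max(week_start, week_end)
    # Index of the first pay date strictly greater than both week dates.
    i = bisect_right(pay_dates, lower)
    if i < len(pay_dates) and pay_dates[i] - week_end <= timedelta(days=max_days):
        return pay_dates[i]
    return None


# Hypothetical sample data for illustration only.
pay_dates = sorted([date(2024, 1, 12), date(2024, 1, 26), date(2024, 2, 9)])
print(find_pay_date(pay_dates, date(2024, 1, 1), date(2024, 1, 7)))
```

Each row needs one binary search against the lookup list, so no extra rows are created and nothing has to be filtered out afterwards.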

There are many different flows I could use this type of tool for that would save time and simplify the flow.

Thanks!

Currently when debug mode is entered in analytic apps and macros, the direct inputs to the app/macro when the error occurred are hardcoded into a workflow in debug mode, so that errors can be more easily detected.

However, inputs into analytic apps also create global variables which can be used in the more code-heavy aspects of Alteryx such as the Formula Tool. These are not updated in the same way which can cause workflows to break in debug mode - it would be really helpful if global variables could be updated in the same way as the inputs into tools are.

In short:

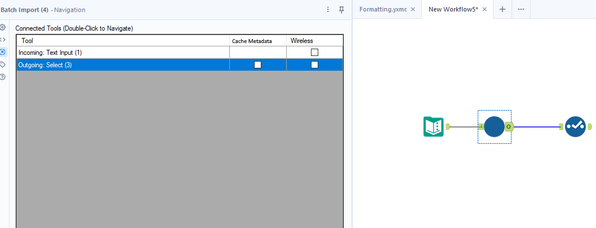

Add an option to cache the metadata for a particular tool so that it is not forgotten when using tools that have dynamic metadata, such as batch macros, or tools whose metadata the Alteryx metadata engine can't resolve, such as the Python tool.

Longer explanation:

The Problem:

One of the issues I often encounter when making dynamic workflows or ones that require calling external services is that Alteryx often forgets the metadata of what columns to expect. This causes the workflow to forget configuration of downstream tools when a workflow is first opened or when the metadata engine refreshes. There is currently the option to disable the metadata engine from automatically refreshing but this isn't a good option because you miss out on much of the value it brings.

Some of the common tools where I encounter this issue:

- JSON Parse

- Batch macros

- Python tool

- Regex parsing to rows

Solution:

Instead, could we add an option to cache the metadata for a particular tool? This would save the metadata from the last time the workflow ran in the workflow's XML, so that it persists when the workflow is closed and reopened. Then, when the metadata engine runs and reaches this tool, it would use the saved version in the XML instead of resolving the metadata from the tool. Obviously, when the workflow actually runs, it would ignore the cached metadata, and any errors would still occur.

This could be an option in the navigation pane of each tool. Mockup below:

This would make developing dynamic workflows far easier and resolve issues of configuration being lost when the metadata changes and Alteryx forgets the options.

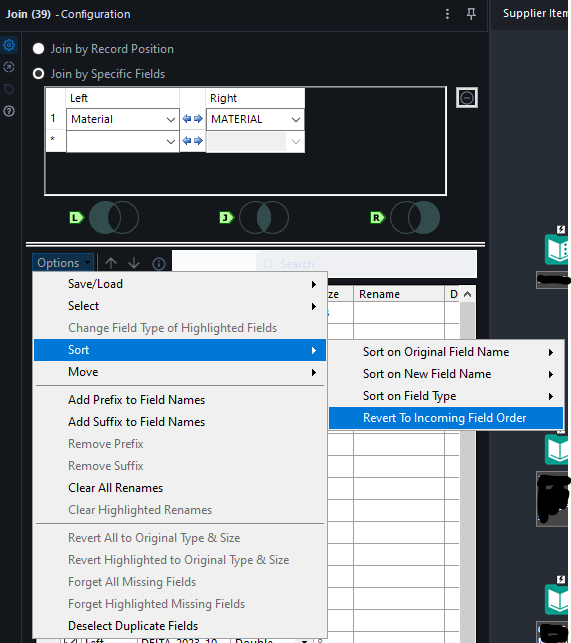

One of the most frequent issues I have with my workflows is the Join tool reordering columns for no apparent reason. There is an option in the configuration to revert the columns to the incoming order. It would be nice if this were the default, or if there were a toggle in the settings to make it so. In many of my flows I've had to create macros to preserve the column order, or remember to go into the settings of this tool and reorder the columns before a workflow run.

It would be very helpful to be able to export a workflow as a step-by-step document, so that someone who does not have access to Alteryx can understand the steps taken to create the flow and therefore the result or output.

Containers are a great feature. They allow us to create larger workflows in smaller canvases, and manage the flow and appearance of our work. However, the design, whether intentional or flawed, that allows the container window to interact with the layers behind it is annoying. Connection wires should not be rerouted within a container because of things on the canvas behind the container. Likewise, if I have a container open, I should not be able to grab a tool or container behind the open container through the container canvas. Please fix this flaw.

I try to use the Comment tool for documentation within workflows for team members (and my future self when I have to revisit it months after I built it). It would be helpful to be able to use markdown formatting inside the tool.

This might even encourage more documentation. *fingers crossed*

Hi there,

When you connect to a DB using a connection string or an alias, this shows up in the Workflow Dependencies window in a way that is very useful for identifying impacts if a DB is moved or migrated.

However, in 2023.1, if you use DCM then the database dependencies just show up as .\ which makes dependency management much more difficult.

Please could you add the capability to view the DCM dependencies correctly in the dependency window?

BTW - the Workflow Dependencies window would be a great place to build a simple process to move existing DB connections to a DCM connection!

CC: @wesley-siu @_PavelP

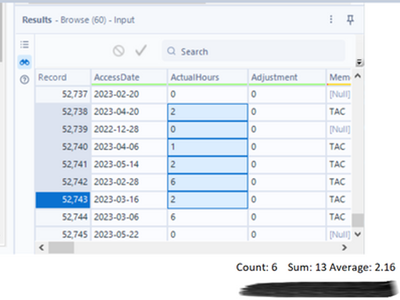

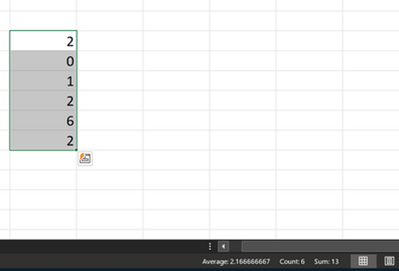

Alteryx should seriously consider incorporating certain Excel features into its Browse tool, as they would greatly enhance usability and functionality.

Currently, when selecting specific records in the Browse tool, users cannot see metrics such as sum, average, or count without extra steps, such as adding a Summarize tool or filters.

A concise bar below the results window showing these statistics for the selected records would be immensely useful and would take the Browse tool to the next level.

By implementing this enhancement, Alteryx would make a significant impact and establish the Browse tool as a must-have resource.

Hi there,

The Snowflake documentation only refers to connection strings that use a DSN. For example, the Snowflake | Alteryx Help page gives the connection string as odbc:DSN=Simba_Snowflake_JWT;UID=user;PRIV_KEY_FILE=G:\AlteryxDataConnectorsTeam\OAuth project\PEMkey\rsa_key.p8;PRIV_KEY_FILE_PWD=__EncPwd1__;JWT_TIMEOUT=120

However, for canvases that need to be productionized on Alteryx Server, it is critical to use DSN-less connection strings so that the canvases can be deployed and run on any worker node without having to set up DSNs on every worker node.

A DSN-less connection string looks like this:

ODBC:DRIVER={SnowflakeDSIIDriver};UID=UserName;pwd=Password;WAREHOUSE=compute_wh;SERVER=server.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user|||NEWTESTDB.PUBLIC.MYTESTTABLE
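For anyone generating these strings programmatically, the layout of the example above can be reproduced with a small Python helper. This is a sketch only: the helper name `build_dsn_less_connection` is hypothetical, the parameter names are simply copied from the example, and no escaping is attempted for values that contain `;` or `}`.

```python
def build_dsn_less_connection(driver, params, table):
    """Assemble 'ODBC:DRIVER={driver};KEY=value;...|||table'.

    Mirrors the shape of the example string only; it is not an
    Alteryx or Snowflake API.
    """
    parts = [f"DRIVER={{{driver}}}"]
    parts += [f"{key}={value}" for key, value in params.items()]
    return "ODBC:" + ";".join(parts) + "|||" + table


# Placeholder values taken from the example connection string.
connection = build_dsn_less_connection(
    "SnowflakeDSIIDriver",
    {
        "UID": "UserName",
        "pwd": "Password",
        "WAREHOUSE": "compute_wh",
        "SERVER": "server.us-east-1.snowflakecomputing.com",
        "SCHEMA": "PUBLIC",
        "DATABASE": "NewTestDB",
        "Staging": "local",
        "Method": "user",
    },
    "NEWTESTDB.PUBLIC.MYTESTTABLE",
)
print(connection)
```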

Please could you consider updating the help text to provide and describe a DSN-less connection string as well as the DSN-driven connections?

Many thanks

Sean

Hello,

As of today, only a few packages are bundled with the Alteryx Python tool. However:

1/ Python is becoming more and more popular. We will use this tool intensively in the coming years.

2/ Python relies on existing packages. This is the strength of the language.

3/ In Alteryx, adding a package is not that easy: you need admin rights, and if you want your colleagues to open your workflow, they also have to install it themselves. In corporate environments, this means losing time, often several days on a project.

Personally, I would like Polars, DuckDB, etc., which are much faster than pandas.

Parquet is a very fast, efficient, and widely used data format. Currently, only the Parquet compression algorithms below are supported, so we cannot use Alteryx to read Parquet files generated by other processes. This limits our use of Alteryx.

- Read support: Snappy and Gzip compression algorithms

- Write support: Snappy only

It would be great for Alteryx to support all types of Parquet format so we can maximize the use of Alteryx in data analysis.

Requesting a reduced-cost, read-only license to allow additional users in our organization to directly review workflows for UAT and control testing. Currently, the only individuals who can see the detail of Alteryx workflows directly are those with a full Designer license or a temporary trial license. In our Alteryx control structure, we have additional reviewers confirming the workflow who do not have licenses, which requires copious screenshots and/or direct meetings with our licensed designers to walk through the flows step by step. It would be much more efficient to provide a license that would allow folks to click through the integrations themselves, potentially allowing for comments and annotations, but without the ability to make direct changes. This would be much more cost-efficient for our organization and allow for better workflow review and control.

I find it extremely annoying having to individually disable/enable control containers in a workflow. It would be nice if there was a way to select all control containers that I want to disable/enable and then be able to right click and do it quickly in one motion. This would save me a lot of time when working with 10+ control containers.

Hi, is it possible to add sheet names (for spreadsheet files) to the output of the Directory tool?

Please update the Render tool to allow users to name the Excel sheet for the output. Alteryx currently errors when using the same naming convention that works in the normal Output tool.
