Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label gets an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Input files such as csv / txt / excel often come with spaces ' ' in column names, and we use the rename feature of the Select tool to either remove the spaces or replace them with underscores. It would be very nice to have additional functionality on the Select tool, as below:

1> A check box to replace spaces with underscores for all/selected columns.

2> Remove spaces from all/selected column names.

3> Remove special characters from all/selected column names.

This would be a very helpful feature and would save a good amount of developer time, since today the developer has to rename each and every field to get rid of special characters or spaces in column names. Also, when we are in a hurry we often don't rename the columns, and in tables the column names then look very untidy, for example wrapped in double quotes: "ship-from dea/hin/customer id", "sum(ecs qty sold (eu))", "sum(ecs qty sold (pu))". These are actual column names that were created in Redshift tables when the columns were not renamed. I am sure it would be a very helpful feature for all Alteryx developers.
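To make the request concrete, here is a minimal Python sketch of how the three options could behave. It is only an illustration: `clean_column_name` and its `space_replacement` parameter are hypothetical names, not part of the Select tool or any Alteryx API, and treating special characters as word separators is an assumption.

```python
import re


def clean_column_name(name: str, space_replacement: str = "_") -> str:
    """Hypothetical helper: tidy one column name.

    Special characters are treated as word separators, then the remaining
    words are joined with `space_replacement` ("_" to replace spaces,
    "" to remove them entirely).
    """
    # Turn anything that is not a letter, digit, underscore or whitespace
    # into a space, then split on runs of whitespace.
    words = re.sub(r"[^\w\s]", " ", name).split()
    return space_replacement.join(words)


columns = ["ship-from dea/hin/customer id", "sum(ecs qty sold (eu))"]
for column in columns:
    print(clean_column_name(column))      # spaces replaced with underscores
    print(clean_column_name(column, ""))  # spaces removed
```

Applied to every field (or only the selected ones), this would remove the need to rename columns one by one before writing to a database table.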

In addition to the existing functionality, it would be good if the below functionality can also be provided.

1) Pattern Analysis

This would help profile the data better, help conform data to a standard/particular pattern, and help identify outliers so that the necessary corrective action can be taken.

An example would be emails: translating 'abc@gmail.com' to 'nnn@nnnnn.nnn', so the outliers might be values where '@' or '.' is not present.

Another example might be phone numbers: 12345-678910 getting translated to 99999-999999, 123-456-78910 getting translated to 999-999-99999, (123)-(456):78910 getting translated to (999)-(999):99999, etc.

It would also help to have the Pattern Frequency Distribution alongside.

So from the above example we can see that there are 3 different patterns in which phone numbers exist, and hence it might call for relevant standardization rules.
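The pattern translation and the frequency distribution described above can be sketched in a few lines of Python. This is an illustration only, not how the Alteryx profiling tools work: `pattern_of` is a hypothetical helper, and the fourth phone number is made-up sample data added so that one pattern occurs twice.

```python
from collections import Counter


def pattern_of(value: str) -> str:
    """Mask letters as 'n' and digits as '9'; keep every other character."""
    masked = []
    for ch in value:
        if ch.isdigit():
            masked.append("9")
        elif ch.isalpha():
            masked.append("n")
        else:
            masked.append(ch)
    return "".join(masked)


# The first three values come from the post; the last one is invented.
phones = ["12345-678910", "123-456-78910", "(123)-(456):78910", "98765-432101"]

# Pattern frequency distribution: how often each mask occurs.
frequencies = Counter(pattern_of(phone) for phone in phones)
for pattern, count in frequencies.most_common():
    print(pattern, count)
```

Rare patterns at the bottom of the distribution are the natural candidates for outlier review or standardization rules.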

2) More granular control of profiling

It would be good if, in the tool, the profiling options (like Unique, Histogram, Percentile25 etc.) could be selected differently for each field.

A sub-idea here might also be to check data against external third party data providers for e.g. USPS Zip validation etc, but it would be meaningful only for selected address fields, hence if there is a granular control to select type of profiling across individual fields it will make sense.

Note - When implementing the granular control, would also need to figure out how to put the final report in a more user friendly format as it might not conform to a standard table like definition.

3) Uniqueness

Given the ongoing importance of identifying duplicates so that analytic results are valid, some more uniqueness profiling could be added.

For example - Soundex, which is based on how similar/different two things sound.

Distance, which is based on how much traversal is needed to change one value to another, etc.

So alongside the Unique counts, we could also have counts where uniqueness factors in Soundex, Distance and other related algorithms.

For example if the First Name field is having the following data -

Jerry

Jery

Nick

Greg

Gregg

The number of Unique records would be 5, whereas the number of Soundex-unique records might be only 3, which would open more data exploration opportunities to see if Jerry/Jery and Greg/Gregg are really the same person/customer.
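As a rough check of the counts above, here is a small Python sketch of the classic American Soundex rules applied to the five first names. The `soundex` function is written for illustration and is not taken from any Alteryx tool.

```python
# Letter groups of the classic American Soundex coding.
CODES = {}
for letters, digit in (("BFPV", "1"), ("CGJKQSXZ", "2"), ("DT", "3"),
                       ("L", "4"), ("MN", "5"), ("R", "6")):
    for letter in letters:
        CODES[letter] = digit


def soundex(name: str) -> str:
    """Return the 4-character Soundex code of a name ('' if no letters)."""
    letters = [ch for ch in name.upper() if ch.isalpha()]
    if not letters:
        return ""
    encoded = letters[0]
    previous = CODES.get(letters[0], "")
    for ch in letters[1:]:
        code = CODES.get(ch, "")
        # Emit a digit only when it differs from the previous coded letter.
        if code and code != previous:
            encoded += code
        # H and W do not separate repeated codes; vowels do.
        if ch not in "HW":
            previous = code
    return (encoded + "000")[:4]


names = ["Jerry", "Jery", "Nick", "Greg", "Gregg"]
print("exact unique:", len(set(names)))
print("soundex unique:", len({soundex(name) for name in names}))
```

Jerry and Jery share one code, as do Greg and Gregg, which is what brings the five exact values down to three Soundex groups.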

4) Custom Rule Conformance

I think it would also be good if some functionality similar to multi-row formula can be provided, where we can check conformance to some custom business rules.

For e.g. it might be more helpful to check how many Age Units (Days/Months/Year) are blank/null where the related Age Number (1, 10, 50 etc.) is populated, rather than having a vanilla count of null and not null for individual (but related) columns.
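A minimal Python sketch of such a cross-field rule check follows. The field names `age_number` and `age_unit`, the sample rows, and the helper functions are all assumptions made for illustration.

```python
# Made-up sample rows; None or "" stands for a blank/null value.
records = [
    {"age_number": 10, "age_unit": "Days"},
    {"age_number": 50, "age_unit": None},
    {"age_number": 1, "age_unit": ""},
    {"age_number": None, "age_unit": None},
    {"age_number": None, "age_unit": "Year"},
]


def is_blank(value) -> bool:
    """Treat None and empty/whitespace-only strings as blank."""
    return value is None or str(value).strip() == ""


def count_violations(rows, rule) -> int:
    """Count the rows for which the business rule reports a violation."""
    return sum(1 for row in rows if rule(row))


def unit_missing_for_number(row) -> bool:
    """Custom rule: Age Number is populated but Age Unit is blank."""
    return not is_blank(row["age_number"]) and is_blank(row["age_unit"])


rule_violations = count_violations(records, unit_missing_for_number)
plain_null_units = count_violations(records, lambda row: is_blank(row["age_unit"]))
print("rule violations:", rule_violations)
print("plain null count for age_unit:", plain_null_units)
```

The plain null count also includes the row where both fields are empty, which is why the rule-based count is the more useful number here.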

Thanks,

Rohit

Hi,

It'd be great to have a specific connector for HubSpot. It's a marketing automation platform, like Marketo.

Thanks.

Following unexpected behaviour from the Render tool when outputting to a UNC path (see post) in a Gallery application, and on the advice of Support, I am raising this idea to introduce consistent behaviour across all tools when using a UNC path.

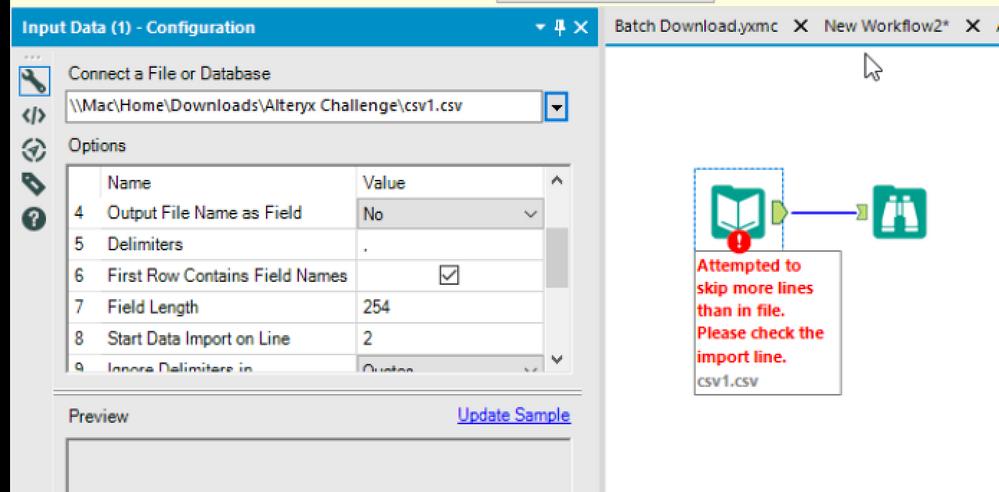

When running Alteryx under Parallels, we were unable to start reading a CSV file from a specific line (greater than 1).

Message: Attempted to skip more lines than in file. Please check the import line.

The filename includes a UNC path. We attempted to modify the workflow dependency to be relative (all options were tried), but Alteryx could not/did not change the path, because under Parallels the directory is always UNC.

This problem was posted in the discussions and I'm entering it here in ideas for remediation.

Cheers,

Mark

I would like the Email Tool modified so that it can run at a specific point, defined by the developer, within a workflow; currently "The Email tool will always be the last tool to run in a workflow".

We use the tool to send notifications when jobs complete, sometimes with outputs attached, but we would also like to send notifications at the start or at key points within a workflow's processing. Currently the Email tool is forced to be the last tool run in a flow, even if you use a Block Until Done tool to force the order of path execution to hit the Email tool first.

If we could add a setting to the configuration to override the current default, of being the last tool run, to allow it to run at will within a flow that would be awesome! And of course we would want the same ability for texting, be it a new feature of the email tool or a new tool all its own.

The Texting option refers to an issue in Andrew Hooper's post seeking enhancement of the email tool for texting, search on "Email tool add HTML output option" or use link...

It would be nice to improve upon the 'Block Until Done' tool.

Additional Features I could see for this tool:

1: Allow Any tool (even output) to be linked as an incoming connection to a 'Block Until Done' tool.

2: Allow Multiple Tools to be linked to a 'Block Until Done' tool. (similar to the 'Union' tool)

The functionality I see for this is to enable Alteryx to set the order of operations for workflows, allowing people to automate processes in the same way they used to do them manually. I understand there's a workaround using Crew Macros (Runner/Conditional Runner) that can essentially accomplish this; however (and I may be wrong), it feels like a workaround instead of the tool working the way one would expect, and I'm losing the ability to track/log/troubleshoot my workflow as it progresses (or if it has an issue).

Happy to hear if something like that exists. Just looking for ways to ensure order of operation is followed for a particular workflow I am managing.

Thanks,

Randy

Would save an extra select for parsing text files in correct format for dates and times.

I think the Nearest Neighbor Algorithm is one of the least used, and most powerful algorithms I know of. It allows me to connect data points with other data points that are similar. When something is unpredictable, or I simply don't have enough data, this allows me to compare one data point with its nearest neighbors.

So, last night I was at school, taking a graduate-level Econ course. We were discussing various distance measures for a nearest neighbor algorithm. Our prof discussed one called the Mahalanobis distance. It uses some fancy matrix algebra. Essentially it filters out the noise and matches only on differences that are truly significant. It takes into account the correlation that may exist between variables, so that correlated variables are not counted twice.

I use Nearest Neighbor when other things aren't working for me. When my data sets are weak, sparse, or otherwise not predictable. Sometimes I don't know that particular variables are correlated. This is a powerful algorithm that could be added into the Nearest Neighbor, to allow for matches that might not otherwise be found. And allow matches on only the variables that really matter.
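For readers who have not met it, the Mahalanobis distance between two points x and y is sqrt((x - y)ᵀ S⁻¹ (x - y)), where S is the covariance matrix of the data. The Python sketch below is a hedged illustration limited to two variables so that it needs only the standard library; the function names and the sample data are assumptions, and nothing here reflects how the Alteryx Nearest Neighbor tool is implemented.

```python
import math


def covariance_2d(points):
    """Sample covariance of 2-D points, returned as (sxx, sxy, syy)."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mean_x) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - mean_y) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mean_x) * (p[1] - mean_y) for p in points) / (n - 1)
    return sxx, sxy, syy


def mahalanobis_2d(a, b, cov):
    """Mahalanobis distance between 2-D points a and b for covariance cov."""
    sxx, sxy, syy = cov
    det = sxx * syy - sxy * sxy
    if det == 0:
        raise ValueError("covariance matrix is singular")
    dx, dy = a[0] - b[0], a[1] - b[1]
    # (dx, dy) * inverse(cov) * (dx, dy)^T, using the closed-form 2x2 inverse.
    squared = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
    return math.sqrt(max(squared, 0.0))


def nearest(query, points, cov):
    """Return the point with the smallest Mahalanobis distance to query."""
    return min(points, key=lambda p: mahalanobis_2d(query, p, cov))


# Made-up, strongly correlated sample data.
data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2), (5.0, 9.8)]
cov = covariance_2d(data)
print(nearest((3.0, 6.5), data, cov))
```

With an identity covariance the formula reduces to the ordinary Euclidean distance; the covariance term is what rescales differences along correlated directions.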

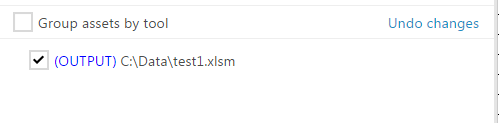

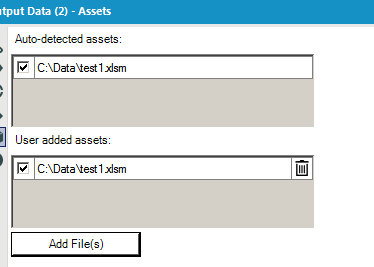

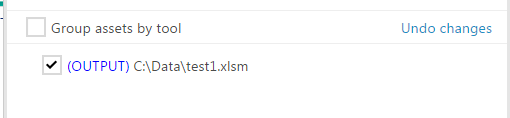

tl;dr It would be great if auto-detected assets on output tools were included when exporting/saving to the gallery.

Suppose I have an output to my C drive and try to package that file when exporting or saving. It gives me the option to package my file:

The only problem is, that file isn't actually saved with the package; instead, it just creates an externals folder where it will write the file to. But the file itself isn't included. The current workaround is to go to your output tool and add that file manually as a user asset:

Notice that I had to manually add the same file that was already auto-detected. Now when I go to export, I get the same screen as before:

The big difference is that now that I've added the file as a user asset, the file itself is included in the export.

In conclusion, it would be great if auto-detected assets on output tools were included when exporting/saving to the gallery (so that it has the same behavior as user-added assets).

Modify the taskbar icon according to workflow status to provide a visual cue when workflows are running/completed, like the SAS running man or the Teradata sunglasses... The Alteryx A could be going places.

Idea:

Adding functionality to the Impute Values tool for multiple imputation and maximum likelihood imputation of fields with data missing at random would be very useful.

Rationale:

Missing data are a problem, and advanced techniques are complicated. One great idea in statistics is multiple imputation: filling the gaps in the data not with the average, median, mode or user-defined static values, but with plausible values that take other fields into account.

SAS has PROC MI tool, here is a page detailing the usage with examples: http://www.ats.ucla.edu/stat/sas/seminars/missing_data/mi_new_1.htm

Also there is PROC CALIS for maximum likelihood here...

A similar tool exists in SPSS as well: http://www.appliedmissingdata.com/spss-multiple-imputation.pdf

Best

I was with my friends at Limited Brands yesterday and they pointed out to me a way to improve Alteryx. While designing a workflow, each time you add an input tool to the canvas you literally have to start from scratch to add additional tables from either a db datasource or file source (e.g. access). With other tools, you can drag multiple tables to the canvas at once and come back later and add more inputs without having to select your source, see a list of tables etcetera.

On their behalf (they may post another suggestion), I am posting this idea.

Thanks,

Mark

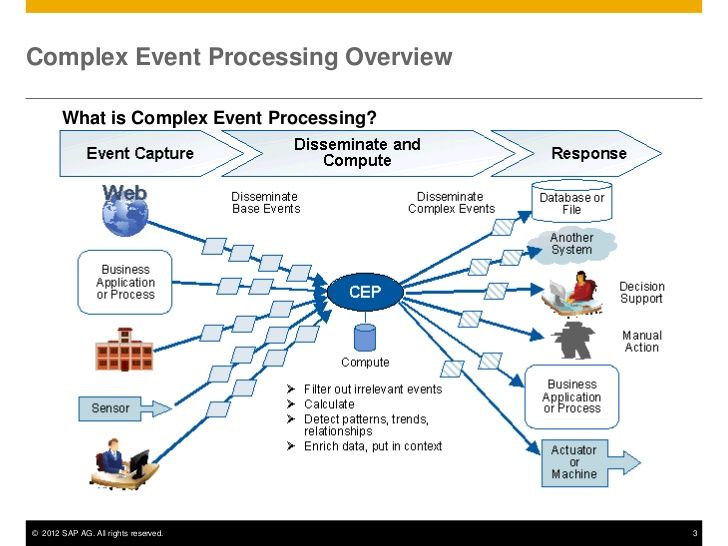

The idea is specific for streaming analytics;

It seems Alteryx can be scheduled to run frequently to check whether a file in question has been updated.

It would be awesome to enable a listener that is triggered automatically when:

- any new line is added to a data set (probably a log file), or

- an existing row in a database is updated (then the relevant score would be recalculated, for example)

It would then be straightforward to provide reactive responses to a log file, and voilà!

Alteryx automation and alteryx server will become a "complex event processor"...

Here is a link to wiki page for CEP: https://en.wikipedia.org/wiki/Complex_event_processing

@GeorgeM do you think this would put Alteryx in another Gartner MQ* as well?

*https://www.gartner.com/doc/3165532/add-event-stream-processing-business

JIRA is used very widely in industry as a defect tracker, issue management system, in many cases even as a super-simple-workflow platform.

Could you please consider adding a JIRA connector to Alteryx so that teams can connect to a JIRA instance directly via the API?

Many thanks

S

Very simple. Use the wheel button on the mouse to reconfigure connections between nodes. You click on the origin or end and drop into the new anchor point.

PLEASE add a count function to Formula/Multi-Row Formula/Multi-Field Formula!

I have searched for alternatives but am just confused about how to store the result for the total number of rows from Summarise or Count Records in a variable that can then be used within a Formula tab. It should not be that difficult to just add equivalents to R's nrow() and ncol().

It would be great if you could link a comment box to an object, so that if the object moves for whatever reason the comment stays with it.

Random forest doesn't cope well with missing values and produces gibberish error results for Alteryx users.

Here are two quick options that would be good to add in the new version:

1) na.omit, which omits the cases from your data when there are missing values... you lose some observations though...

na.action=na.omit

2) na.roughfix replaces missing values with the median for continuous and the mode for categorical variables

na.action=na.roughfix
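To show what the two options do to the data, here is a small Python sketch of the same two strategies. It is an illustration only, not the R implementation: `omit_missing` and `rough_fix` are hypothetical helpers, `None` stands in for NA, and the sample rows are made up. The median is used for numeric fields because that is what the randomForest documentation describes for `na.roughfix`.

```python
from collections import Counter
from statistics import median


def omit_missing(rows):
    """Like na.omit: drop every row that has at least one missing value."""
    return [row for row in rows if all(v is not None for v in row.values())]


def rough_fix(rows):
    """Like na.roughfix: fill gaps with the median (numeric) or mode (other)."""
    if not rows:
        return []
    fill = {}
    for column in rows[0]:
        present = [row[column] for row in rows if row[column] is not None]
        if not present:
            fill[column] = None  # nothing observed, so nothing to impute
        elif all(isinstance(v, (int, float)) for v in present):
            fill[column] = median(present)
        else:
            fill[column] = Counter(present).most_common(1)[0][0]
    return [
        {column: (fill[column] if value is None else value)
         for column, value in row.items()}
        for row in rows
    ]


rows = [
    {"age": 30, "city": "Paris"},
    {"age": None, "city": "Lyon"},
    {"age": 50, "city": None},
    {"age": 40, "city": "Paris"},
]
print(omit_missing(rows))  # keeps only the complete rows
print(rough_fix(rows))     # keeps all rows, with the gaps filled
```

The trade-off is the one noted above: omitting rows loses observations, while the rough fix keeps every row at the cost of a crude imputation.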

Best
