Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by displaying the image dimension constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images);

> and finally, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool says it requires RGB images).
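The balanced split asked for in the second point can be illustrated with a short sketch. It assumes the training images are available as hypothetical `(path, label)` pairs; `balanced_split` undersamples every label to the size of the rarest one, then holds out the same number of images per label for testing. The hypothetical `gray_to_rgb` helper shows the usual workaround for the third point: replicating a single grayscale channel into three identical RGB channels. Neither function reflects how the Image Recognition tool works internally.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[str, str]  # hypothetical (image_path, label) pair


def balanced_split(samples: Sequence[Sample], test_fraction: float = 0.2,
                   seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Split samples so that every label keeps the same number of images.

    Each label is undersampled to the size of the rarest label, then the
    same number of images per label is held out for testing.
    """
    by_label: Dict[str, List[str]] = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    per_label = min(len(paths) for paths in by_label.values())
    n_test = round(per_label * test_fraction)

    train: List[Sample] = []
    test: List[Sample] = []
    for label in sorted(by_label):
        paths = list(by_label[label])
        rng.shuffle(paths)
        kept = paths[:per_label]  # drop surplus images of over-represented labels
        test.extend((p, label) for p in kept[:n_test])
        train.extend((p, label) for p in kept[n_test:])
    return train, test


def gray_to_rgb(pixels: List[List[int]]) -> List[List[Tuple[int, int, int]]]:
    """Replicate a single grayscale channel into three identical RGB channels."""
    return [[(v, v, v) for v in row] for row in pixels]


if __name__ == "__main__":
    data = [(f"a{i}.png", "a") for i in range(10)] + [(f"b{i}.png", "b") for i in range(5)]
    train, test = balanced_split(data)
    print(len(train), len(test))  # 8 2
    print(gray_to_rgb([[0, 255]]))  # [[(0, 0, 0), (255, 255, 255)]]
```

Undersampling discards images from the larger labels; oversampling or class weights are common alternatives when data is scarce.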

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Under the new licensing system (licenses.alteryx.com), I don't have the ability to truly release a license seat without user interaction. Currently, I have to revoke the license and then have that user start up Alteryx on their machine to complete the process. Until the user starts up Alteryx, I cannot reallocate that license.

I would like the ability to obsolete a license so that, as soon as I click the button in the licensing portal, I can immediately reallocate it to somebody else. This functionality existed in the previous licensing system but is not available in the new one.

I have a problem when transferring records between different O365 SharePoint sites. It seems that Alteryx cannot maintain 2 separate connections at the same time. I can transfer fine if I read from one site to a temp file and then, in another workflow, read from the file and write to the second site.

I can work around the problem using Block Until Done, but there are some situations where I need to compare lists in 2 different sites and write back to one or both depending on the results. It would be much more convenient to have multiple connections open simultaneously. I'm aware that Alteryx uses the SharePoint API to move information around, and this API does allow multiple connections. I'm not familiar with the internals of how Alteryx accesses the API (perhaps the OAuth token is shared throughout the workflow process), but this should be possible.

Thanks for considering this

Dan

Geohash is a latitude/longitude geocode system (public domain). It is a hierarchical spatial data structure that subdivides space into grid-shaped buckets.

Geohashes offer properties like arbitrary precision and the possibility of gradually removing characters from the end of the code to reduce its size (and gradually lose precision).

As a consequence of the gradual precision degradation, nearby places will often (but not always) present similar prefixes. The longer a shared prefix is, the closer the two places are.

http://en.wikipedia.org/wiki/Geohash

https://github.com/sharonjl/geohash-net

https://github.com/simplegeo/libgeohash/blob/master/geohash.c
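For reference, the encoding described above fits in a few lines. This is an independent minimal sketch (not taken from the linked libraries, and not an existing Alteryx function; the names are hypothetical): it repeatedly halves the longitude and latitude ranges, interleaves the resulting bits starting with longitude, and maps each group of 5 bits to a character of the geohash base-32 alphabet.

```python
BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"  # geohash alphabet (no a, i, l, o)


def geohash_encode(lat: float, lon: float, precision: int = 9) -> str:
    """Encode a latitude/longitude pair as a geohash of `precision` characters."""
    lat_range = [-90.0, 90.0]
    lon_range = [-180.0, 180.0]
    chars = []
    bits, bit_count = 0, 0
    use_lon = True  # bits alternate, starting with longitude
    while len(chars) < precision:
        interval, value = (lon_range, lon) if use_lon else (lat_range, lat)
        mid = (interval[0] + interval[1]) / 2
        if value >= mid:
            bits = (bits << 1) | 1
            interval[0] = mid  # keep the upper half
        else:
            bits <<= 1
            interval[1] = mid  # keep the lower half
        use_lon = not use_lon
        bit_count += 1
        if bit_count == 5:  # every 5 bits become one base-32 character
            chars.append(BASE32[bits])
            bits, bit_count = 0, 0
    return "".join(chars)


def shared_prefix_length(a: str, b: str) -> int:
    """Length of the common prefix; longer usually (not always) means closer."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


print(geohash_encode(42.6, -5.6, 5))  # ezs42
```

Because each extra character only refines the previous cell, `geohash_encode(lat, lon, 9)[:5]` equals `geohash_encode(lat, lon, 5)`, which is the "remove characters to lose precision" property mentioned above.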

Could you add the flexibility to access fields by their position or index, like a[1], a[2], ..., a[n], with a[1] being the first column? An option such as max[a] could return the last column and min[a] the first column. This way, we could easily subset the dataset. In most cases we are handling survey data with thousands of columns, and when we need to select certain columns we have to tick each column's checkbox manually, which is painful for hundreds of columns. It would be nice to have an option to select columns by ID or index. It would also be useful in the Multi-Field Formula tool when working with many fields, because there is currently no option to write formulas based on the field names in it.
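The requested position-based selection can be sketched outside Alteryx as follows. The table is assumed to be a simple header list plus rows, and `select_by_position` and `position_bounds` are hypothetical names: the first uses 1-based positions so that a[1] is the first column, and the second returns the proposed min[a] and max[a].

```python
from typing import Any, List, Sequence, Tuple

Table = Tuple[List[str], List[List[Any]]]  # (header, rows)


def select_by_position(header: Sequence[str], rows: Sequence[Sequence[Any]],
                       positions: Sequence[int]) -> Table:
    """Keep only the columns at the given 1-based positions (a[1] is the first column)."""
    n = len(header)
    for p in positions:
        if not 1 <= p <= n:
            raise IndexError(f"column position {p} is outside 1..{n}")
    idx = [p - 1 for p in positions]  # convert to 0-based Python indices
    return [header[i] for i in idx], [[row[i] for i in idx] for row in rows]


def position_bounds(header: Sequence[str]) -> Tuple[int, int]:
    """Proposed min[a] and max[a]: positions of the first and last columns."""
    if not header:
        raise ValueError("table has no columns")
    return 1, len(header)


header = ["RespondentID", "Q1", "Q2", "Q3"]
rows = [[101, "Yes", "No", "Maybe"], [102, "No", "No", "Yes"]]
first, last = position_bounds(header)
# Select a[2] .. max[a], i.e. every question column, without ticking checkboxes.
print(select_by_position(header, rows, range(2, last + 1)))
# (['Q1', 'Q2', 'Q3'], [['Yes', 'No', 'Maybe'], ['No', 'No', 'Yes']])
```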

Regards,

Jeeva.

A lot of popular machine learning systems use a computer's GPU to speed up some of the math to a huge degree. The header image of this article on Medium shows a 15x difference between a high-end CPU and a high-end GPU. GPU acceleration could also improve the spatial tools. Perhaps Alteryx should add this functionality to speed up these tools, which I imagine are currently some of the slowest.

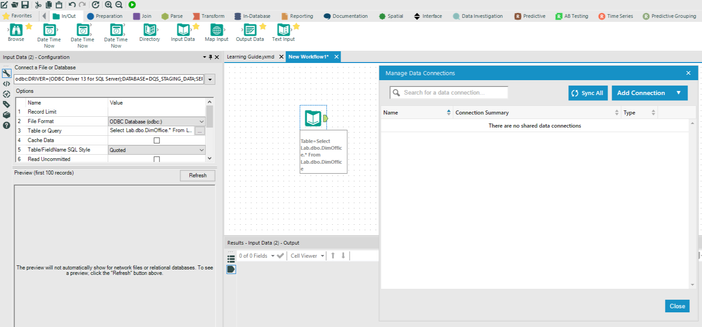

When I add a data connection to my canvas, it is only added to the Data Connections window under certain circumstances (e.g. when I use an alias or the SQL connection wizard), rather than the window showing ALL data connections.

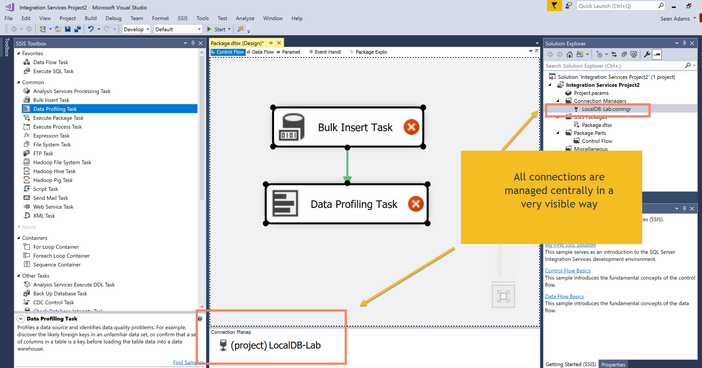

Given the importance of data connections for Alteryx flows, it would be better if ALL data connections were grouped together under a Data Connection Manager that was as visible as the Results window rather than buried deep in the menu system. You could then also use this spot to change, share, alias (etc.) connections.

In Microsoft SSIS there's a useful example of how this could be done - where the connections are very visibly a collection of assets that can be seen and updated centrally in one place. So if you have 5 input tools which ALL point to the same database - you only need to update the connection on your designer in one place - irrespective of whether this is a shared connection or not.
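The central-registry idea can be sketched in a few lines. The `ConnectionManager` and `InputTool` classes below are hypothetical and do not describe Alteryx or SSIS internals; the point is that each tool stores only a connection *name*, so updating the registry once changes what all five inputs use. The connection strings are placeholders.

```python
from dataclasses import dataclass
from typing import Dict, List


class ConnectionManager:
    """Hypothetical central registry: every tool refers to a connection by name."""

    def __init__(self) -> None:
        self._connections: Dict[str, str] = {}

    def register(self, name: str, connection_string: str) -> None:
        if name in self._connections:
            raise ValueError(f"connection {name!r} already exists")
        self._connections[name] = connection_string

    def update(self, name: str, connection_string: str) -> None:
        if name not in self._connections:
            raise KeyError(name)
        self._connections[name] = connection_string  # one change, seen by every tool

    def resolve(self, name: str) -> str:
        return self._connections[name]

    def names(self) -> List[str]:
        return sorted(self._connections)


@dataclass
class InputTool:
    """A tool stores only the connection name, never its own copy of the string."""
    connection_name: str
    query: str

    def effective_connection(self, manager: ConnectionManager) -> str:
        return manager.resolve(self.connection_name)


manager = ConnectionManager()
manager.register("warehouse", "DSN=Sales_Test")  # placeholder connection string
inputs = [InputTool("warehouse", f"SELECT * FROM table_{i}") for i in range(5)]
manager.update("warehouse", "DSN=Sales_Prod")  # repoint all five inputs at once
print({tool.effective_connection(manager) for tool in inputs})  # {'DSN=Sales_Prod'}
```

Resolving the name at run time, rather than copying the string into each tool, is what makes the single-place update work whether or not the connection is shared.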

One of the biggest time sinks is basic cleaning of raw data. This could be dramatically simplified by taking a hint from the large ETL / Master Data Management vendors and making this a core Alteryx capability.

Server Side:

- Allow the users of the Server & Connect products to define their own Business Types (what Microsoft DQS calls "Domains").

  - Example: a currency code. There are many different synonyms, but in essence you want all your data cleaned back to one master list.

- Then allow different attributes to be added to these Business Types.

  - A currency code would have 2 or 3 additional columns: Currency name; Symbol; Country of issue.

- Similar to Microsoft DQS, allow users to specify synonyms and cleanup rules. For example, Rupes should be Rupees and should be translated to INR.

- You also need cross-Business-Type rules: if the country is AUS, then $ translates to AUD, not to USD.

- These rules are maintained by the Data Steward responsible for this Business Type.

- This master data needs to be stored and queryable as a slowly changing dimension (preferably split into a latest table and a history table with the same ID per entry, plus timestamps and user audit details for changes).

Alteryx Designer:

- When you get a raw data set, the user can tag some fields as being one of these Business Types.

  - Example: I have a field bal_cur (Balance Currency), and I tag it as Business Type "Currency".

- Alteryx then automatically checks the data and applies my cleanup rules, which were defined on the server.

- For any invalid entries, it marks them as errors on the canvas and also adds them to a workflow for the data steward of this Business Type on the server; the value is set to an "unmapped" value (ID=-1; all text columns set to "unmapped").

- For any valid entries, it gives you the option to add whichever normalised (conformed) columns you want: currency code; description; ID; symbol; country of issue.
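To make the proposed rules concrete, here is a minimal sketch of how a tagged "Currency" field might be cleaned. The master list, synonym table, and function name are illustrative assumptions, not an existing Alteryx or DQS API. It applies steward-defined synonyms, the cross-Business-Type "$ depends on country" rule (defaulting to USD otherwise, as the example implies), and returns the "unmapped" row (ID=-1) while queuing unknown values for the data steward.

```python
from typing import Dict, List, Optional, Tuple

# Illustrative master list for the "Currency" Business Type.
MASTER: Dict[str, Dict[str, object]] = {
    "INR": {"id": 1, "code": "INR", "name": "Indian Rupee", "symbol": "₹", "country": "India"},
    "USD": {"id": 2, "code": "USD", "name": "US Dollar", "symbol": "$", "country": "United States"},
    "AUD": {"id": 3, "code": "AUD", "name": "Australian Dollar", "symbol": "$", "country": "Australia"},
}
# Steward-maintained synonyms, keyed by lower-cased raw value.
SYNONYMS: Dict[str, str] = {
    "inr": "INR", "rupees": "INR", "rupes": "INR",
    "usd": "USD", "us dollar": "USD",
    "aud": "AUD", "australian dollar": "AUD",
}
# Cross-Business-Type rule: the meaning of "$" depends on the Country field.
DOLLAR_BY_COUNTRY: Dict[str, str] = {"AUS": "AUD"}
UNMAPPED: Dict[str, object] = {"id": -1, "code": "unmapped", "name": "unmapped",
                               "symbol": "unmapped", "country": "unmapped"}


def clean_currency(raw: str, country: Optional[str] = None,
                   steward_queue: Optional[List[str]] = None
                   ) -> Tuple[Dict[str, object], bool]:
    """Return (conformed columns, is_valid) for one raw currency value."""
    key = raw.strip().lower()
    code: Optional[str]
    if key == "$":
        code = DOLLAR_BY_COUNTRY.get(country or "", "USD")
    else:
        code = SYNONYMS.get(key)
    if code is None:
        if steward_queue is not None:
            steward_queue.append(raw)  # route the unknown value to the data steward
        return dict(UNMAPPED), False
    return dict(MASTER[code]), True


queue: List[str] = []
print(clean_currency("Rupes")[0]["code"])            # INR
print(clean_currency("$", country="AUS")[0]["code"])  # AUD
print(clean_currency("Rupiah?", steward_queue=queue), queue)
```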

Data Steward Workflow:

- The data steward is notified that there is an invalid value to be checked.

- They can mark it as a valid value (in which case it is added to the knowledge base for this Business Type), as a synonym of some other valid value, or as an invalid value.

Cleanup Audit & Logs:

- In order to drive upstream data cleaning over time, we would need to be able to query and report on data cleanups by source, by canvas, by user, by Business Type, and by date, and to report back to the source system so that upstream data errors can be fixed at source.

Many thanks

Sean

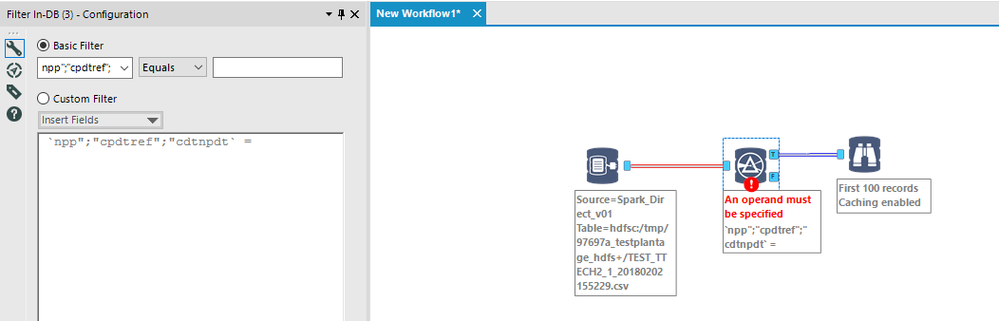

As of today, we cannot choose the field separator when we read a csv file. In France, the common separator for csv is the semi-colon (;)

It leads to this kind of thing in a filter :
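To illustrate why the separator matters, here is a minimal sketch using Python's standard `csv` module, which takes the separator as its `delimiter` parameter. The `read_delimited` helper and the sample data are hypothetical; they show the requested behaviour, not how Alteryx's Input Data tool is implemented.

```python
import csv
import io
from typing import Dict, List


def read_delimited(text: str, delimiter: str = ";") -> List[Dict[str, str]]:
    """Parse delimited text with an explicit field separator (";" by default)."""
    reader = csv.reader(io.StringIO(text), delimiter=delimiter)
    header, *rows = list(reader)
    return [dict(zip(header, row)) for row in rows]


sample = "nom;ville;montant\nDupont;Paris;12,50\n"

# With the right separator, each field lands in its own column.
print(read_delimited(sample))
# [{'nom': 'Dupont', 'ville': 'Paris', 'montant': '12,50'}]

# Forcing "," instead merges fields and splits the French decimal "12,50".
print(list(csv.reader(io.StringIO(sample), delimiter=",")))
# [['nom;ville;montant'], ['Dupont;Paris;12', '50']]
```

The second output is the kind of mangled record that then has to be patched up downstream, for example in a Filter.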

When using the Cross Tab tool, the Count option under Method for Aggregating Values only appears when the field selected for Values for New Columns is a numeric field. I can add a numeric RecordID before the Cross Tab and then count on that, but why can't the Cross Tab count non-numeric fields? The Summarize tool can.
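Counting does not depend on the value type, which is why the Summarize tool can do it. The sketch below (a hypothetical function, not the Cross Tab implementation) pivots records and counts the values in a text field directly, with no RecordID workaround. It counts non-null values only; whether nulls should be counted is a design choice the real tool would need to make.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, List, Tuple


def cross_tab_count(records: Iterable[Dict[str, Any]], group_by: str,
                    header_field: str, value_field: str
                    ) -> Tuple[List[Any], Dict[Any, Dict[Any, int]]]:
    """Pivot records and count non-null values of value_field, whatever its type."""
    columns: List[Any] = []  # new column names, in first-seen order
    counts: Dict[Any, Dict[Any, int]] = defaultdict(lambda: defaultdict(int))
    for rec in records:
        col = rec[header_field]
        if col not in columns:
            columns.append(col)
        cell = counts[rec[group_by]]  # creates the group row even if nothing is counted
        if rec.get(value_field) is not None:
            cell[col] += 1
    # Fill missing combinations with 0 so every row has every column.
    table = {g: {c: row.get(c, 0) for c in columns} for g, row in counts.items()}
    return columns, table


records = [
    {"Region": "North", "Product": "A", "Status": "Shipped"},
    {"Region": "North", "Product": "A", "Status": "Pending"},
    {"Region": "North", "Product": "B", "Status": "Shipped"},
    {"Region": "South", "Product": "A", "Status": None},
]
print(cross_tab_count(records, "Region", "Product", "Status"))
# (['A', 'B'], {'North': {'A': 2, 'B': 1}, 'South': {'A': 0, 'B': 0}})
```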

I suppose I could just bookmark this page, but that wouldn't help others. I frequently forget the format strings (I'm getting old) when creating custom datetime formulas. Is there a quick way to get to these format strings while creating a datetimeparse/datetimeformat formula?

Cheers,

Mark

I regularly type true or false into a formula, expecting it to work.

This request is largely based on the implementation found in AzureML (take their free trial and check out the Deep Convolutional and Pooling NN example in their gallery), which allows you to specify custom convolutional and pooling layers in a deep neural network. This is an extremely powerful machine learning technique that could be tricky to implement, but it could perhaps start as (for example) a macro wrapped around something in Python, since these networks are currently easier to implement in Python than in R.
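To make the two layer types concrete, here is a pure-Python sketch of one convolution, ReLU, and max-pooling stage. It is not the AzureML implementation and is far too slow for real training; as suggested above, a real macro would more likely wrap a Python deep-learning library. Like most such libraries, `conv2d` computes a cross-correlation (the kernel is not flipped).

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution (cross-correlation, as in most deep-learning libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(fm: Matrix) -> Matrix:
    """Element-wise non-linearity applied after the convolution."""
    return [[max(0.0, v) for v in row] for row in fm]


def max_pool(fm: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [[max(fm[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fm[0]) - size + 1, size)]
            for i in range(0, len(fm) - size + 1, size)]


def conv_pool_layer(image: Matrix, kernel: Matrix) -> Matrix:
    """One convolution -> ReLU -> max-pool stage of a convolutional network."""
    return max_pool(relu(conv2d(image, kernel)))


image = [[1, 2, 3, 0], [0, 1, 2, 3], [3, 0, 1, 2], [2, 3, 0, 1]]
print(conv2d(image, [[1, 1], [1, 1]]))  # [[4, 8, 8], [4, 4, 8], [8, 4, 4]]
```

In a trained network the kernel values are learned, and several kernels per layer produce several feature maps; the "custom layers" in the request correspond to choosing kernel counts, sizes, and pooling windows.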

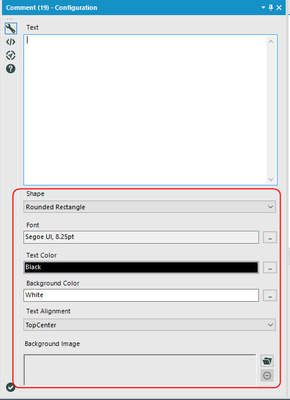

The COMMENT tool has a number of default settings. It would save me a lot of time if the Designer could remember the last settings used.

This is the panel I am referring to:

We would add a "simple" option to the existing Filter tool with some basic controls that would automatically create the expression for you.

Do others think this would be helpful?

Geoff Jones

Alteryx, Inc

Product Manager – User Experience
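As an illustration of the "simple" Filter option described above, here is a hypothetical sketch that turns three basic controls (field, operator, value) into an expression string. The function name and operator list are assumptions, and the expression syntax (`[Field]` references, double-quoted strings, `IsNull`, `!`, `Contains`) reflects Alteryx formula conventions as we understand them rather than a confirmed specification.

```python
from typing import Union

Value = Union[str, int, float, None]

# Comparison operators a "simple mode" dropdown might offer (assumed list).
COMPARISONS = {"=", "!=", ">", ">=", "<", "<="}


def _quote(text: str) -> str:
    """Wrap a string literal in double quotes; embedded quotes are not handled here."""
    if '"' in text:
        raise ValueError("quotes in values are not handled in this sketch")
    return f'"{text}"'


def build_filter_expression(field: str, operator: str, value: Value = None) -> str:
    """Turn basic Filter controls (field, operator, value) into an expression string."""
    column = f"[{field}]"  # field references in square brackets
    if operator == "is null":
        return f"IsNull({column})"
    if operator == "is not null":
        return f"!IsNull({column})"
    if operator == "contains":
        return f"Contains({column}, {_quote(str(value))})"
    if operator not in COMPARISONS:
        raise ValueError(f"unsupported operator: {operator!r}")
    if isinstance(value, str):
        return f"{column} {operator} {_quote(value)}"
    return f"{column} {operator} {value}"  # numbers are left unquoted


print(build_filter_expression("State", "=", "CA"))  # [State] = "CA"
print(build_filter_expression("Sales", ">", 100))   # [Sales] > 100
```

Generating the text from the controls means users can still switch to the custom expression view and see exactly what the simple option produced.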

Our Alteryx users query a number of different data sources. Some of these include external servers outside our control.

To avoid any locking issues, we enable the Read Uncommitted option in the Input Data tool as part of our baseline design, probably 95% of the time or more.

It would be very beneficial for our organization if there was a way for us to set this option to be checked by default, so that it was one less thing users needed to remember when configuring a quick data pull.
