Alteryx Designer Desktop Ideas

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the image dimension constraints next to each of the pre-trained models,

> by adding a real tool to split the training data correctly (in order to have an equal number of images for each label),

> by at least allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
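As a rough illustration of the last two points, here is a minimal Python sketch of what could be done outside the tool today. It assumes the training set is available as a list of (path, label) pairs and that a grayscale image is a plain 2-D grid of values; the function names `balance_by_label` and `gray_to_rgb` are hypothetical and are not part of Alteryx.

```python
import random
from collections import defaultdict

def balance_by_label(samples, seed=0):
    """Downsample so that every label keeps the same number of samples.

    `samples` is a list of (path, label) pairs (an assumed input shape).
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)
    # The smallest class decides how many samples each label may keep.
    smallest = min(len(paths) for paths in by_label.values())
    rng = random.Random(seed)
    balanced = []
    for label in sorted(by_label):
        for path in rng.sample(by_label[label], smallest):
            balanced.append((path, label))
    return balanced

def gray_to_rgb(image):
    """Repeat each gray value three times so a 2-D grid becomes an RGB grid."""
    return [[(v, v, v) for v in row] for row in image]

samples = [
    ("cat1.png", "cat"), ("cat2.png", "cat"), ("cat3.png", "cat"),
    ("dog1.png", "dog"),
]
balanced = balance_by_label(samples)
counts = {label: sum(1 for _, l in balanced if l == label) for label in ("cat", "dog")}
print(counts)
print(gray_to_rgb([[0, 255]]))
```

Downsampling to the smallest class is only one way to balance labels; oversampling the rare classes would be another.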

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))

Currently: the "Label" element in the Interface Designer Layout View is a single-line text input.

Why it could be improved: the "Label" element is often used to add a block of text to an analytic app interface. Adding a block of text in a single-line text input is, to put it politely, quite a struggle.

Solution: make this single-line text input a text box, just like the formula editor.

Hi

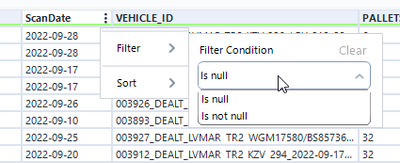

I think it's super frustrating not being able to search for dates or date ranges in the Results window.

Let's say I have a dataset with two dates: date picked up and date delivered.

I then need to search broadly in the Results window for a given date, across both columns.

Could we please have a simple search for a specific date, and maybe a from-to date range, like in the Filter tool?

Or go all the way and look at the Excel date filter?

Kind regards

For years, Alteryx would gracefully rename conflicting field names. It would issue a warning and that's it.

We recently upgraded from 2019.4 to 2022.1.1.30961 and now an error is produced:

Oops!

The field X was renamed conflicting with an existing field name

I searched for "rename" in the release notes of every applicable version and I do not see this change. Is it a bug? Is it a feature?

We have an Alteryx server with thousands of workflows built by dozens of users with varying skill levels. This is a tremendous amount of work to go through and fix all the failing workflows.

Please help me understand this change because there was seemingly no warning and now we are scrambling.

I can be picky about how my workflows are laid out. Oftentimes, the connector between tools has a "mind of its own" as to what direction it goes and how it crosses other objects. I'd like to see the ability to control the connector lines with "elbows" that can be positioned in custom locations and directions, like in an MS Visio diagram. Alternatively, a simple "pin" tool could be added to the canvas whose only function is to take in and send out a connector line at a defined input and output location. The input and output locations could be defined as angles in degrees, for example. The image attached below shows an existing workflow with a "troublesome" connector and the concept of "elbows" and "pins" added as an alternate control mechanism. Both would be great! :)

In a similar vein to the forthcoming enhancement of being able to disable a specific output tool, my idea is to have the inverse where you can globally disable all outputs and then enable specific ones only. This should help reduce the number of clicks required/avoid workarounds using containers to obtain this functionality and allow users to be very specific in which outputs run and don't run as required.

Salesforce Connector:

I just want to see what credentials I have used. I do not want to change them. A link to "view the data" and not only "Change Credentials" would be helpful.

Also, when replacing connectors (due to a version change), it makes sense not to reassign the objects and fields and keep the old settings.

I have a use case where I am transitioning workflows to someone -

One workflow uses the outputs of another workflow as its inputs. It would be awesome if I could include a link on a tool or comment box that would automatically open the exact output tool. Right now I am taking pictures and writing down the names of files and tool IDs. It seems like there should be an easier way.

Would it be possible to have Alteryx create the IAM user and password? If that's possible, we can create an IAM role that would allow you to assume this role.

Can Alteryx create this type of user and assume our role?

Sometimes I need to connect to the data in my database after doing some filtering and modeling with CTEs. To make the connection run quicker than with the regular Input tool, I would like to use the In-DB tools. But this doesn't work because the In-DB input tool doesn't support CTEs. CTEs are helpful in everyday work, and it would be terribly tedious to replicate all my SQL logic in Alteryx in addition to what I'm already doing inside the tool.

I found a lot of people having the same issue, so it would be great to have that feature added to the tool.
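Until CTEs are supported, one commonly used rewrite is to fold a non-recursive CTE into a derived table (a subquery in the FROM clause). The sketch below uses Python's built-in `sqlite3` module only to show that the two forms return the same rows; the `orders` table and its columns are made up for illustration, and whether the In-DB input tool accepts the rewritten query for a given database is an assumption that is not verified here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (customer TEXT, amount REAL);
    INSERT INTO orders VALUES ('a', 10), ('a', 5), ('b', 7);
""")

# Original query written with a CTE.
with_cte = """
    WITH totals AS (
        SELECT customer, SUM(amount) AS total FROM orders GROUP BY customer
    )
    SELECT customer, total FROM totals WHERE total > 8 ORDER BY customer
"""

# Same logic with the CTE body moved into a derived table.
as_subquery = """
    SELECT customer, total FROM (
        SELECT customer, SUM(amount) AS total FROM orders GROUP BY customer
    ) AS totals
    WHERE total > 8 ORDER BY customer
"""

cte_rows = conn.execute(with_cte).fetchall()
subquery_rows = conn.execute(as_subquery).fetchall()
print(cte_rows == subquery_rows)
```

This gets unwieldy quickly when several CTEs reference each other, which is exactly why native CTE support would be valuable.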

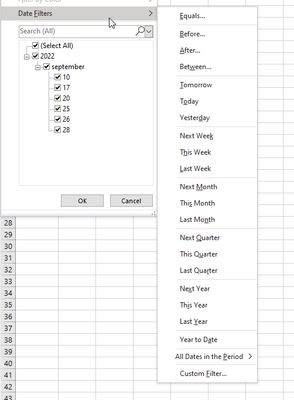

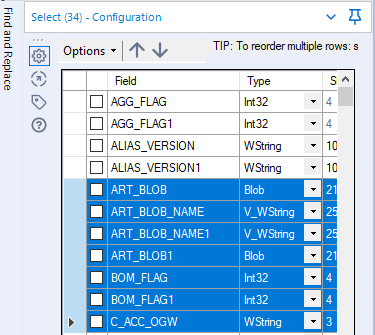

If the tables in the configuration window have lots of rows, it is quite complicated to find the ones of interest.

Please add a filter or search option (e.g. by field name) to display only the relevant rows.

It would also be helpful to be able to select or deselect multiple highlighted rows with one click.

Here is an example from the Select tool:

Can we have a User Setting that lets users choose whether Alteryx should prevent the computer from going into Sleep or Hibernate mode while a workflow is running?

Using the Output tool to send data to a formatted spreadsheet apparently doesn't preserve formatting if the entire column is formatted. I'd love this changed to keep the formatting when it's applied to an entire column. See this thread in Designer Discussions.

The Summarize tool returns NULL when performing a Mode operation. This doesn't seem to be documented anywhere in the Alteryx documentation or on the Community. Please fix this behaviour.

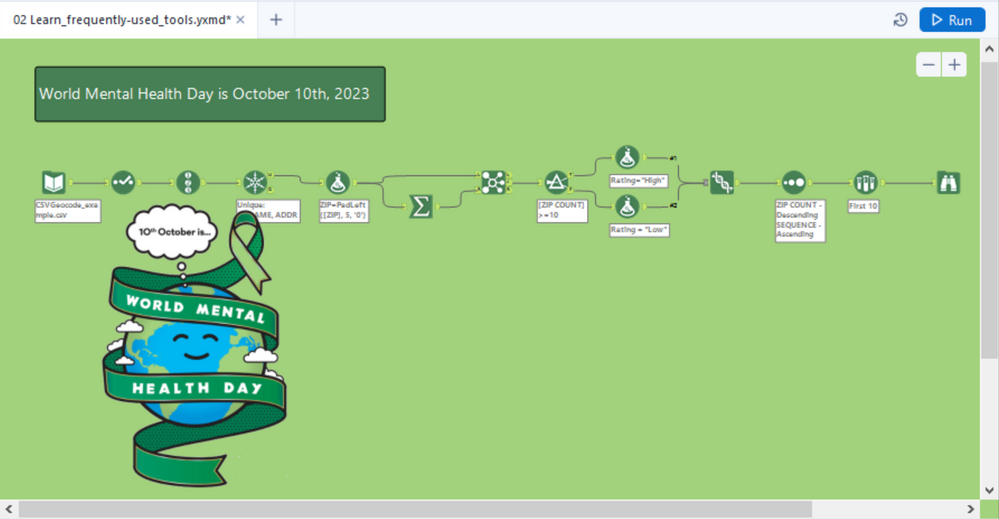

Create social and holiday themes for icons and set the canvas to an appropriate color. Here's a world mental health day example:

If you need help, ask me how I did it.

Cheers,

Mark

P.S. I realize that 2023 is next year. Please don't wait that long :)

I would love to have the ability to connect S3 to Alteryx using an AWS IAM role instead of needing an AWS access key and secret key.

IT will not hand out the Access/secret key so it would be great to connect to S3 without needing a password.

Please add in a feature to connect to S3 via AWS IAM roles.

Hello Alteryx Community,

If, like me, you've been developing in Alteryx for a few years, or if you find yourself as a new developer creating solutions for your organization, chances are you'll need to create some form of support procedure or automation configuration file at some point. In my experience, the foundation of these files is typically an explanation of what each tool in the workflow is doing and what transformations are being made to the data. These documents are typically laborious to create and are often created in a non-standardized way.

The proposal: create native Alteryx Designer functionality to parse a workflow's XML and translate the tool configurations into a step-by-step Word document for a given workflow.

The expectation is that a user may still need to add contextual details around the logic afterwards, but this proposal should eliminate a lot of the upfront work in creating these documents.

I understand that some workflows may be very complex, but for a simple workflow the proposed output could look like the example below. If annotations are provided at the tool level, the output could pick those up as well:

Workflow Name: Sample

1) Text Input tool (1) - contains 1 row with data across columns test and test1. This tool connects to Select Tool (2).

2) Select Tool (2) - deselects "Unknown" field and changes the data type of field test1 to a Double. This tool connects to Output (3).

3) Output (3) - creates .xlsx output called test.xlsx
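To make the proposal more concrete, here is a minimal Python sketch of the parsing step, not a finished documentation generator. The embedded XML is a hand-written sample modeled on how workflow files are commonly laid out (`Node`, `GuiSettings Plugin=...`, `Connections`); treat those element and attribute names, the plugin strings, and the `describe` helper as assumptions to check against a real workflow file.

```python
import xml.etree.ElementTree as ET

# Hand-written sample; the structure is an assumption, not an official schema.
SAMPLE = """
<AlteryxDocument>
  <Nodes>
    <Node ToolID="1">
      <GuiSettings Plugin="AlteryxBasePluginsGui.TextInput.TextInput" />
      <Properties>
        <Annotation><DefaultAnnotationText>contains 1 row</DefaultAnnotationText></Annotation>
      </Properties>
    </Node>
    <Node ToolID="2">
      <GuiSettings Plugin="AlteryxBasePluginsGui.AlteryxSelect.AlteryxSelect" />
      <Properties />
    </Node>
    <Node ToolID="3">
      <GuiSettings Plugin="AlteryxBasePluginsGui.DbFileOutput.DbFileOutput" />
      <Properties>
        <Annotation><DefaultAnnotationText>test.xlsx</DefaultAnnotationText></Annotation>
      </Properties>
    </Node>
  </Nodes>
  <Connections>
    <Connection>
      <Origin ToolID="1" Connection="Output" />
      <Destination ToolID="2" Connection="Input" />
    </Connection>
    <Connection>
      <Origin ToolID="2" Connection="Output" />
      <Destination ToolID="3" Connection="Input" />
    </Connection>
  </Connections>
</AlteryxDocument>
"""

def describe(xml_text):
    """Return one plain-text line per tool, in document order."""
    root = ET.fromstring(xml_text.strip())
    # Map each origin tool ID to the tool IDs it feeds.
    targets = {}
    for conn in root.iter("Connection"):
        origin = conn.find("Origin").get("ToolID")
        dest = conn.find("Destination").get("ToolID")
        targets.setdefault(origin, []).append(dest)
    steps = []
    for i, node in enumerate(root.iter("Node"), start=1):
        tool_id = node.get("ToolID")
        gui = node.find("GuiSettings")
        plugin = gui.get("Plugin", "Unknown") if gui is not None else "Unknown"
        name = plugin.split(".")[-1]  # last segment of the plugin string
        note = node.findtext("Properties/Annotation/DefaultAnnotationText", default="").strip()
        line = f"{i}) {name} ({tool_id})"
        if note:
            line += f" - {note}"
        if tool_id in targets:
            line += ". Connects to tool " + ", ".join(targets[tool_id])
        steps.append(line)
    return steps

steps = describe(SAMPLE)
print("\n".join(steps))
```

Turning each tool's configuration into a readable sentence (for example, which fields a Select tool deselects) would need per-tool logic on top of this, which is where native support would help most.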

Most databases treat null as "unknown" and as a result, null fails all comparisons in SQL. For example, null does not match to null in a join, null will fail any > or < tests etc. This is an ANSI and ISO standard behaviour.

Alteryx treats null differently - if you have 2 data sets going into a join, then a row with value null will match to a row with value null.

We've seen this creating confusion with our users who are becoming more fluent with SQL and who are using inDB tools - where the query layer treats null differently than the Alteryx layer.

Could we add a setting flag to Alteryx so that users can turn on ISO / ANSI standard processing of Null so that data works the same at all levels of the query stack?

Many thanks

Sean
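The SQL side of this difference can be reproduced with Python's built-in `sqlite3` module. This is only a sketch of the database behaviour described above: the table names are made up, and the `IS` comparison used for the null-matching join is SQLite syntax, shown here as a stand-in for the kind of join where null matches null.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE left_t (k TEXT);
    CREATE TABLE right_t (k TEXT);
    INSERT INTO left_t VALUES ('a'), (NULL);
    INSERT INTO right_t VALUES ('a'), (NULL);
""")

# In SQL, comparing NULL with NULL yields NULL (unknown), not true.
null_equals_null = conn.execute("SELECT NULL = NULL").fetchone()[0]

# A standard equality join therefore drops the NULL rows.
sql_join = conn.execute(
    "SELECT l.k FROM left_t l JOIN right_t r ON l.k = r.k"
).fetchall()

# SQLite's IS operator treats NULL as matching NULL, so both rows survive.
null_matching_join = conn.execute(
    "SELECT l.k FROM left_t l JOIN right_t r ON l.k IS r.k"
).fetchall()

print(null_equals_null)
print(len(sql_join), len(null_matching_join))
```

A setting flag as requested would, in effect, let users choose which of these two join behaviours the Join tool follows.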

Creating a tool that summarizes all operations performed in a workflow might be beneficial for people who work with other tools, e.g. where SQL code is required for data transformation.

The Overview window might be enhanced, for example, by adding a side panel that lists the tools in the highlighted area, or by summarizing how many tools are in the selected area.
