Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by displaying the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that there is an equivalent number of images for each label),

> and, at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it necessarily needs RGB images).
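The balanced split requested in the second point can be sketched as a per-label downsample followed by a per-label split. This is a minimal Python illustration under our own assumptions, not how the Image Recognition tool works; the function `balanced_split` and the `(image_path, label)` input format are hypothetical.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (item, label) pairs into train/test lists with equal counts per label.

    Every label is first downsampled to the size of the rarest label, then each
    label is split on its own, so both outputs stay balanced across labels.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    per_label = min(len(items) for items in by_label.values())
    n_train = int(per_label * train_fraction)

    train, test = [], []
    for label in sorted(by_label):
        chosen = rng.sample(by_label[label], per_label)  # random subset of equal size
        train.extend((item, label) for item in chosen[:n_train])
        test.extend((item, label) for item in chosen[n_train:])
    return train, test


images = [(f"cat_{i}.png", "cat") for i in range(10)] + [(f"dog_{i}.png", "dog") for i in range(4)]
train, test = balanced_split(images)
```

With 10 `cat` images, 4 `dog` images and an 80% training share, each label contributes 3 training images and 1 test image; the surplus cat images are dropped rather than unbalancing the sets.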

Question: will you, in the future, allow users to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It certainly has room for improvement, but it is very simple to use, and I sincerely think it will allow a greater number of people to understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


It would be very beneficial to add an extra row in the Input tool (when importing CSV files) where we can define the escape character. This functionality would help avoid the errors that currently occur when importing such files.
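To illustrate what an escape-character setting does, Python's standard `csv` module exposes the same idea as its `escapechar` parameter. The sample data and the helper name `read_with_escape` are made up for this example; they say nothing about how the Input tool would implement the option.

```python
import csv
import io

# Hypothetical sample: the comment field contains a delimiter and quotes,
# each protected by a backslash escape character rather than CSV quoting.
raw = 'id,comment,amount\n1,Paid\\, thanks,10\n2,He said \\"ok\\",20\n'


def read_with_escape(text, escapechar="\\"):
    """Parse CSV text, treating `escapechar` as 'take the next character literally'."""
    reader = csv.reader(io.StringIO(text), escapechar=escapechar)
    return list(reader)


rows = read_with_escape(raw)
# rows[1] -> ['1', 'Paid, thanks', '10']
# rows[2] -> ['2', 'He said "ok"', '20']
```

Without `escapechar`, the first data row splits into four fields (`Paid\` and ` thanks` become separate columns), which is the kind of column-count error an escape-character option would avoid.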

It might be very helpful to be able to use the same annotation for a group of tools. Currently this is possible by copying the annotation from one tool to another, but an option such as "use existing", with a dropdown of existing annotations, would be more automated. The Tool Comment allows something similar (describing multiple tools in a process), but the tools must be arranged next to each other to make its use clear.

As in the title: it might be helpful to define custom names when using the Transpose tool, instead of the default "Name" and "Value".
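A minimal sketch of the requested behavior in Python, over a list of dictionaries. The `name_field` and `value_field` parameters are hypothetical stand-ins for a custom-name option; their defaults reproduce the current "Name" and "Value" columns.

```python
def transpose(rows, key_fields, name_field="Name", value_field="Value"):
    """Unpivot rows: keep the key fields, turn every other field into its own output row.

    `name_field` / `value_field` are the (hypothetical) custom output column names.
    """
    out = []
    for row in rows:
        keys = {k: row[k] for k in key_fields}
        for field, value in row.items():
            if field not in key_fields:
                out.append({**keys, name_field: field, value_field: value})
    return out


sales = [{"Store": "A", "Jan": 10, "Feb": 12}]
long_form = transpose(sales, ["Store"], name_field="Month", value_field="Sales")
# [{'Store': 'A', 'Month': 'Jan', 'Sales': 10}, {'Store': 'A', 'Month': 'Feb', 'Sales': 12}]
```

Naming the columns at the transpose step removes the need for a downstream Select tool just to rename them.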

Hey gang, just another QoL suggestion from me!

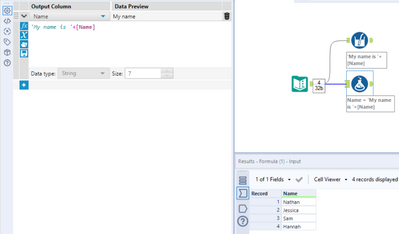

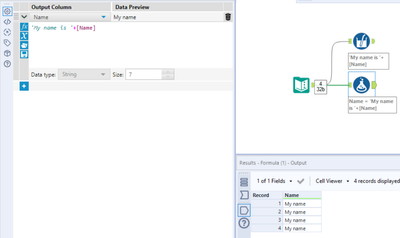

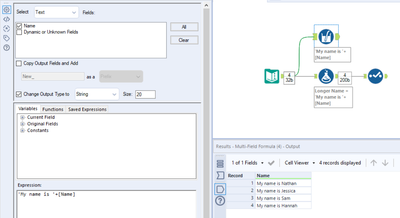

Currently, when applying changes to an existing field that will take the outcome beyond the current field size, we have to use an additional Select tool to get around truncation:

The usual route here is to either a) use a Select tool beforehand to increase the field size:

Or b) create a new field and then remove the 'old' one in a Select tool afterwards, also renaming the replacement here:

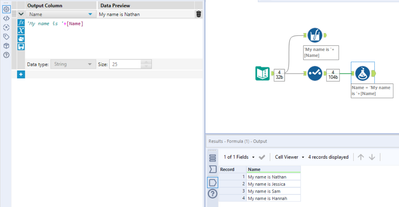

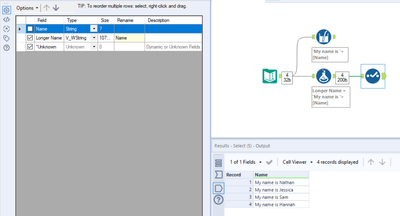

Given that we can already do this in one step with the Multi-Field Formula tool:

My request is pretty simple here - can the 'Change Output Type to' configuration also be added to the standard Formula tool? The ability to also update the name of the output would be brilliant as well if possible. Cheers!

Auto Field tools help optimally size and assign data types to your data for better performance, but this conversion process can be memory-intensive with large datasets. What if you could right-click an Auto Field tool to convert it into a standard Select tool with the new data types and sizes, much like the existing ability to right-click and convert Input tools into Macro Inputs or Browse tools into Outputs? This would eliminate the need to manually transfer the results of the Auto Field tool into a Select tool for production workflows!
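The "freeze the result" idea can be sketched as running type inference once and keeping the resulting name-to-type mapping as a fixed schema. The inference rules below are deliberately simplified assumptions (integer, then double, then string sized to the longest value) and are not Auto Field's actual algorithm; the type names only mimic Alteryx's naming, and `infer_type`/`freeze_schema` are hypothetical.

```python
def infer_type(values):
    """Return a (type_name, size) guess for a column of string values (simplified rules)."""

    def all_parse(cast):
        # True if every value converts with `cast` without raising ValueError
        try:
            for v in values:
                cast(v)
            return True
        except ValueError:
            return False

    if all_parse(int):
        return ("Int64", 8)
    if all_parse(float):
        return ("Double", 8)
    return ("V_String", max((len(v) for v in values), default=1))


def freeze_schema(columns):
    """Run inference once and return a fixed column name -> (type, size) mapping."""
    return {name: infer_type(values) for name, values in columns.items()}


schema = freeze_schema({"id": ["1", "2", "30"], "price": ["1.5", "2"], "city": ["Paris", "Lyon"]})
# {'id': ('Int64', 8), 'price': ('Double', 8), 'city': ('V_String', 5)}
```

Once frozen, the mapping can be applied directly on every run, which is the memory saving the idea is after: no full scan of the data is needed just to decide the types.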

Hi all,

I'm trying my best to think of the most secure way to do this, and I'm struggling to do it within Alteryx with the Download tool in its current form.

I am using an internal API Manager to retrieve data, but this particular API requires additional "Headers" values for username and password beyond my standard OAuth2 flow to the API Manager. I can run this locally, but to save the workflow to our network, or ideally to run it from Gallery, I should not leave credentials in plain text anywhere, where anyone looking at the workflow or the underlying XML could grab them. Surely this would be quite easy to mask? Or could it be made more dynamic, retrieving credentials from a key vault, e.g. Azure Key Vault?

Can we add masking to the Download Tool Header Values?
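One common pattern for keeping credentials out of a saved workflow is to resolve them at run time from the environment (or a secret store) and to mask them whenever they are displayed. The sketch below uses environment variables as a stand-in for a vault such as Azure Key Vault, which would need its own client library (not shown); the variable names `API_USERNAME`/`API_PASSWORD` and the header names are hypothetical.

```python
import os

# Hypothetical header names that must never be shown in clear text.
SENSITIVE_HEADERS = {"username", "password"}


def build_headers(env=None):
    """Resolve header credentials at run time instead of storing them in the workflow.

    `env` defaults to the process environment; API_USERNAME / API_PASSWORD are
    hypothetical variable names standing in for a secret store lookup.
    """
    env = os.environ if env is None else env
    return {"username": env["API_USERNAME"], "password": env["API_PASSWORD"]}


def masked(headers):
    """Return a copy of the headers that is safe to log or display."""
    return {k: ("********" if k.lower() in SENSITIVE_HEADERS else v) for k, v in headers.items()}
```

The real values only ever exist in memory while the request is sent; anything written to the results window or a log goes through `masked` first.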

Thanks,

Ciaran

Let's suppose I am working on bank accounts and want to make sure I end up with the minimum required information. I would pull the provided template and work backward from there to manipulate the data. The template could also include a predefined Tableau output, for example!

For very complex canvases and API data pulls that take a long time, it would be great if, as we work through the canvas, we could put a flag or some setting on a tool that keeps the data already pulled into it. This way I could set a certain tool to keep all of its data, and all tools I work on from that point forward would pull from that tool rather than from the beginning of the canvas.

For example:

input tool --> api tool --> formatting tools --> new tools being worked on

If I could set the end of the formatting tools to keep all data, then when I run the canvas only the new tools being worked on would be refreshed.

I hope that's clear... Currently it's very frustrating that for any small change I make, I have to rerun the whole canvas, and that takes a while.
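The requested "keep data from this tool" behavior is essentially a checkpoint: persist the output of an expensive step once and reuse it on later runs. A minimal Python sketch, assuming a local pickle file as the cache; `cached_step`, `slow_api_pull` and the file name are hypothetical.

```python
import os
import pickle
import tempfile


def cached_step(path, compute):
    """Return the pickled result at `path` if present; otherwise run `compute` and save it."""
    if os.path.exists(path):
        with open(path, "rb") as f:
            return pickle.load(f)
    result = compute()
    with open(path, "wb") as f:
        pickle.dump(result, f)
    return result


calls = []


def slow_api_pull():
    calls.append(1)  # count how often the expensive step really runs
    return [{"id": 1}, {"id": 2}]


checkpoint = os.path.join(tempfile.mkdtemp(), "after_formatting.pkl")
first = cached_step(checkpoint, slow_api_pull)   # runs the expensive step
second = cached_step(checkpoint, slow_api_pull)  # served from the checkpoint file
```

The hard part in practice is invalidation: in this sketch, deleting the checkpoint file is the only way to force the upstream steps to run again.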

Hello all,

Big picture: on Hadoop, a table can be

-internal (it's managed by Hive or Impala, and acts like a table in any other database)

-external (it's managed by Hadoop, can be shared among the different Hadoop engines such as Hive and Impala, and by default its data is not deleted when the table is dropped)

For more information about dropping an external table:

https://docs.cloudera.com/HDPDocuments/HDP3/HDP-3.1.4/using-hiveql/content/hive_drop_external_table_...

Alteryx only creates internal tables, while it would be nice to be able to create external tables that we can query with several tools (Hive, Impala, etc.).

It should be configurable:

-by default at the connection level

-per tool, if we want to override the default
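The two requested settings (a connection-level default and a per-tool override) map naturally onto HiveQL's `CREATE EXTERNAL TABLE ... STORED AS ... LOCATION ...` syntax. The Python sketch below only builds the statement text; `CONNECTION_DEFAULTS` and `create_table_ddl` are hypothetical names, and identifiers are not quoted or validated.

```python
# Hypothetical connection-level defaults; a tool could override any of them.
CONNECTION_DEFAULTS = {"external": True, "file_format": "PARQUET"}


def create_table_ddl(name, columns, location=None, **overrides):
    """Build a HiveQL CREATE TABLE statement (illustrative: no quoting or validation).

    Tool-level `overrides` (e.g. external=False, file_format="ORC") take
    precedence over CONNECTION_DEFAULTS.
    """
    settings = {**CONNECTION_DEFAULTS, **overrides}
    kind = "EXTERNAL TABLE" if settings["external"] else "TABLE"
    cols = ", ".join(f"{col} {typ}" for col, typ in columns)
    ddl = f"CREATE {kind} {name} ({cols}) STORED AS {settings['file_format']}"
    if location:
        ddl += f" LOCATION '{location}'"  # directory holding the table's data files
    return ddl


print(create_table_ddl("sales.orders", [("id", "BIGINT"), ("amount", "DOUBLE")], location="/data/orders"))
```

Merging two dictionaries keeps the precedence rule in one line: whatever the tool sets wins, and everything else falls back to the connection.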

Best regards,

Simon

Hello,

As of today, we can't choose the exact file format for Hadoop when writing/creating a table. There are several file formats, each with its own specificities.

Therefore, I suggest adding the ability to choose the file format:

-by default on the connection (In-DB connection or in-memory alias)

-in the writing tool itself.

Best regards,

Simon

Currently, if we have to read multiple files through Dynamic Input, most of the time the files error out due to schema errors and we have to create a batch macro. If an option were added so that right-clicking Dynamic Input offered to create a batch macro (a simple batch macro with a Control Parameter, an Input tool and a Macro Output), it would save time recreating the macro every time.
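The schema errors come from files whose columns differ; what the batch macro achieves is roughly a union of the files aligned by column name. A Python sketch of that alignment using only the standard `csv` module (`union_by_name` is a hypothetical helper; missing columns are filled with `None`):

```python
import csv
import io


def union_by_name(csv_texts):
    """Stack CSV texts whose columns differ, aligning values by column name."""
    columns, rows = [], []
    for text in csv_texts:
        reader = csv.DictReader(io.StringIO(text))
        for col in reader.fieldnames or []:
            if col not in columns:
                columns.append(col)  # keep first-seen column order
        rows.extend(reader)
    # Every output row gets every column; columns absent from a file become None.
    return columns, [{c: r.get(c) for c in columns} for r in rows]


cols, rows = union_by_name(["a,b\n1,2\n", "b,c\n3,4\n"])
# cols -> ['a', 'b', 'c']
```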

Currently, I have to render my Alteryx-formatted reports to Excel and then upload them to Google Sheets.

It would be very useful (and would improve the less well-known Alteryx Reporting capabilities) to be able to render straight to a Google Sheet and preserve the formatting.

Thanks

The idea behind Password Masking: the "Download Tool" in the "Developer" tab is used to download files from a given site. For example, take a Mainframe. I have a scenario where the Alteryx workflow should connect to the Mainframe FTP server and download the required file, which is used for downstream transformation. For the download, I get the username and password from a database table (to reduce manual intervention and prevent errors). When I pass the username and password as parameters to the Download Tool macro (a custom macro that accepts the username, password and filename dynamically), the Alteryx workflow shows the username and password in the Results window, because they are treated as output data from the Input tool. I want that password field to be masked, so that whenever the workflow is shared with a user, the password remains hidden. I know there's a way to mask a field using the "MD5 HASH" formula, but that only helps to mask dataset values, not a password (the hash is treated as a new string, not as a valid password). This feature would be really beneficial to developers who use the Download tool often. A new tool or a custom macro embedding this feature would be great for users who need masking functionality.

Two very useful functions: LEAST and GREATEST.

According to https://www.w3schools.com/sql/func_mysql_least.asp

The LEAST() function returns the smallest value of the list of arguments.

Example: SELECT LEAST("w3Schools.com", "microsoft.com", "apple.com");

returns "apple.com"

GREATEST works exactly the same way but returns the greatest value of the list of arguments.

As of today, Alteryx offers Max and Min for this, but they only work with numbers and, I think, the syntax is ambiguous: Max and Min work both as aggregation functions and as row functions. I would love to separate these two notions.

Being closer to the standard also means more interoperability.

On a related topic, the COALESCE function is proposed here: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Coalesce-function/idi-p/841014
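For reference, row-level LEAST/GREATEST can be sketched in Python with MySQL-like semantics as we understand them: text is compared as strings, and the result is NULL (here `None`) if any argument is NULL. One assumption to note: Python compares strings by code point, whereas MySQL compares them using a collation, which is often case-insensitive. The function names are hypothetical.

```python
def least(*args):
    """Row-level LEAST: smallest argument, or None if any argument is None."""
    if any(a is None for a in args):
        return None
    return min(args)


def greatest(*args):
    """Row-level GREATEST: largest argument, or None if any argument is None."""
    if any(a is None for a in args):
        return None
    return max(args)


print(least("w3Schools.com", "microsoft.com", "apple.com"))     # apple.com
print(greatest("w3Schools.com", "microsoft.com", "apple.com"))  # w3Schools.com
```

Because these take a variable number of arguments from one row, there is no overlap with aggregation over many rows, which is the separation the idea asks for.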

Best regards,

Simon

Hello all,

I suggest a new string function Repeat()

Repeat() forms a string consisting of the input string repeated the number of times defined by the second argument.

Repeat(text[, repeat_count])

Repeat('to',3) gives tototo

It's also a common SQL function (for example in MySQL):

https://www.w3schools.com/sql/func_mysql_repeat.asp
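A minimal Python equivalent, mirroring MySQL's REPEAT behavior of returning an empty string when the count is less than 1 (`repeat` is a hypothetical helper name):

```python
def repeat(text, repeat_count):
    """Return `text` repeated `repeat_count` times; a count below 1 gives ''."""
    return text * max(repeat_count, 0)


print(repeat("to", 3))  # tototo
```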

Best regards,

Simon

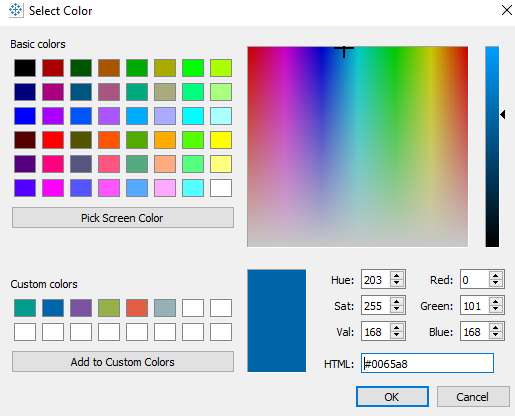

In Alteryx Designer I like to use Containers with very specific color, transparency and border settings (something I've asked to have built into the defaults in another product suggestion, which I cannot find). What's very useful to me is mapping processes to the tool categories, i.e. ingestion = In/Out = Green, preparation tools = Prep category = Blue. Standardising this across all workflows is good practice when managing an estate of workflows.

In Tableau, when editing 'Colors', there is a box where you can enter the #0065a8 code for the color you wish to use, perhaps from brand guidelines or, in my case, the same code as the tool category colors themselves. Currently I have to go into Tableau, pick the screen color, create a custom color and then recreate it on the Alteryx side.

Can we add 'pick a screen color' and/or 'HTML: #0065a8' like you can do in Tableau?
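The conversion such a hex box performs is simple: each pair of hex digits is one 0-255 channel. A Python sketch (`hex_to_rgb` and `rgb_to_hex` are hypothetical helper names):

```python
def hex_to_rgb(code):
    """Convert '#0065a8' (the leading '#' is optional) to an (r, g, b) tuple of 0-255 ints."""
    digits = code.lstrip("#")
    if len(digits) != 6:
        raise ValueError(f"expected 6 hex digits, got {code!r}")
    return tuple(int(digits[i:i + 2], 16) for i in (0, 2, 4))


def rgb_to_hex(r, g, b):
    """Convert 0-255 channel values back to the '#rrggbb' form."""
    return f"#{r:02x}{g:02x}{b:02x}"


print(hex_to_rgb("#0065a8"))  # (0, 101, 168)
```

`#0065a8` converts to (0, 101, 168), the values you would otherwise type by hand into an RGB color dialog.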

Hey Designer Gurus + @NicoleJ,

Here's a picture of my canvas (running):

I'd like to be able to see COUNTS and PERCENT completion while the workflow is running. In my case, the numbers are BIG and they are drawn BEHIND the connection lines. In the case of % complete, they obfuscate (fancy term for block) the progress of the tool.

Currently, if I want to watch the water boil, paint dry or the workflow crawl/walk/run I must change the workflow before saving it to maximize the distance between the tools. I'd like to be able to see both the COUNTS and % complete without the added effort. My idea is to have someone at Alteryx figure out an enhancement to this without engaging the likes of @Hollingsworth who'll devise some evil keyboard shortcut.

Cheers,

Mark

Hi, I would like to suggest adding subtitles to interactive videos.

As there are many learners whose first language is not English, including me, I feel the pace of speech in the videos is sometimes too fast. Having subtitles helps to understand the content better without needing to pause multiple times when the talk is fast.

Please consider incorporating them, as I believe they would benefit many learners.

I hope the addition is not too complex.

Thank you for your consideration.

Connecting to Smartsheet using Alteryx Desktop (and, by extension, Alteryx Server) is extremely cumbersome. If a user wants to read data from Smartsheet, they must get an API token (preferred) or use a username/password.

Then they must do one of the following to read data from Smartsheet:

1. a. Install an ODBC driver

b. Configure a DSN connection for ODBC

c. Read the data with the Input Data tool using a generic ODBC connection

or

2. Use Python

To write data to Smartsheet, a user can use Python or upload the data using an API call; both are very hard for end users, especially if they're not Python developers.

Regardless, all of these options are problematic. On the server I manage, I have over 15 ODBC connections to Smartsheet, and they are making it very hard to upgrade the server hardware. A native input/output connector for Smartsheet would eliminate the headache of managing ODBC connections and make it simple for Alteryx Desktop users to read and write data.

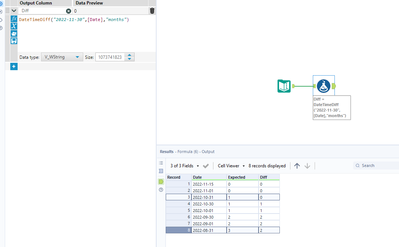

Highlighted in this post: "Solved: DateDiff question" (Alteryx Community). Under certain conditions, the DateTimeDiff function does not work as you would expect, and I suspect most people would not notice the inaccuracy.

Here is the formula for the Result column below:

DateTimeDiff("2022-11-30",[Date],"months")

| Date | Expected | Result |
|---|---|---|
| 2022-11-15 | 0 | 0 |
| 2022-10-31 | 1 | 0 |
| 2022-09-30 | 2 | 2 |
| 2022-08-31 | 3 | 2 |
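The Result column is consistent with a month count that subtracts one whenever the later date's day of month is smaller than the earlier date's, without special-casing month ends. The Python sketch below reproduces both columns under that assumption; it is our reading of the results, not Alteryx's documented algorithm, and it assumes `later >= earlier`. Both function names are hypothetical.

```python
import calendar
from datetime import date


def months_day_strict(later, earlier):
    """Whole months, dropping one if the later day-of-month is smaller (matches 'Result')."""
    months = (later.year - earlier.year) * 12 + (later.month - earlier.month)
    if later.day < earlier.day:
        months -= 1
    return months


def months_eom_aware(later, earlier):
    """Same, but a later date on the last day of its month counts as a full month ('Expected')."""
    months = (later.year - earlier.year) * 12 + (later.month - earlier.month)
    last_day = calendar.monthrange(later.year, later.month)[1]
    if later.day < earlier.day and later.day != last_day:
        months -= 1
    return months


end = date(2022, 11, 30)
for d in (date(2022, 11, 15), date(2022, 10, 31), date(2022, 9, 30), date(2022, 8, 31)):
    print(d, months_eom_aware(end, d), months_day_strict(end, d))
```

The difference only shows up when the later date is a month end shorter than the earlier date's day (30 vs. 31 here), which is why the inaccuracy is easy to miss.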
