Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
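One way to read the second suggestion (an equal number of images per label) is plain downsampling to the size of the rarest label. The sketch below assumes the training data is a list of `(item, label)` pairs; `balance_by_label` and that data shape are hypothetical and not something the tool exposes.

```python
import random
from collections import defaultdict

def balance_by_label(samples, seed=0):
    """Downsample so every label keeps as many items as the rarest label.

    `samples` is a list of (item, label) pairs (an assumed shape).
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    if not by_label:
        return []
    # The rarest label sets the per-label quota.
    target = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    balanced = []
    for label, items in by_label.items():
        for item in rng.sample(items, target):
            balanced.append((item, label))
    return balanced
```

Downsampling throws data away, so with very uneven labels an oversampling or weighting approach may be the better choice.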

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


The Table tool does not allow renaming a field so that it breaks at a specific point.

Fields like "H.S. Dropouts Non-Hispanic" and "H.S. Dropouts Whites" need a forced break after Dropouts.

Now we get random breaks like

H.S. Dropouts

Non-Hispanic

H.S. Dropouts White

The Rename Field function in the Basic Table tool would be a great place to allow forced breaks.

Thanks!

Hi,

I think it would be great if the run time of a workflow could be displayed by tool or container. This would make refining the workflow at completion a lot easier and also help with thinking of better solutions. Even cooler would be some kind of speed heat map.

Thanks

Idea: add the option to include the workflow "Meta Info" (the last tab of the workflow configuration, shown when clicking on the canvas) when printing the workflow.

The Meta Info description and author sections would be of particular value. Currently only the long file name is embedded in the header.

Add the ability in the Select Records tool to use formulas, not just record numbers.

Many of today's APIs, like MS Graph, won't or can't return more than a few hundred rows of JSON data. Usually, the metadata returned will include a complete URL for the NEXT set of data.

Example: https://graph.microsoft.com/v1.0/devices?$count=true&$top=999&$filter=(startswith(operatingSystem,'W...') or startswith(operatingSystem,'Mac')) and (approximateLastSignInDateTime ge 2022-09-25T12:00:00Z)

This will require that the "Encode URL" checkbox in the download tool be checked, and the metadata "nextLevel" output will have the same URL plus a $skiptoken=xxxxx value. That "nextLevel" url is what you need to get the next set of rows.

The only way to do this effectively is an Iterative Macro.

Now, your download tool is "encode URL" checked, BUT the next url in the metadata is already URL Encoded . . . so it will break, badly, when using the nextLevel metadata value as the iterative item.

So, long story short, we need to DECODE the url in the nextLevel metadata before it reaches the Iterative Output point . . . but no such tool exists.

I've made a little macro to decode a url, but I am no expert. Running the url through a Find Replace tool against a table of ASCII replacements pulled from w3school.com probably isn't a good answer.

We need a proper tool from Alteryx!
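For comparison, this is roughly what the decode step looks like outside Designer, using Python's standard `urllib.parse.unquote`. It is only a sketch: `decode_next_url` is a hypothetical helper name and the `$skiptoken` value is made up for illustration.

```python
from urllib.parse import unquote

def decode_next_url(encoded_url: str) -> str:
    """Turn percent-escapes such as %24 or %20 back into literal characters."""
    return unquote(encoded_url)

# Hypothetical next-page URL; the skiptoken value is invented.
encoded = "https://graph.microsoft.com/v1.0/devices?%24top=999&%24skiptoken=abc123"
decoded = decode_next_url(encoded)
```

One caveat: decoding a whole URL in one pass can be lossy if a parameter value itself contains an escaped `&` or `=`, so a robust tool would decode each parameter separately.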

Someone suggested I use the Formula UrlEncode ability . . .

Unfortunately, the Formula UrlEncode does NOT work. It encodes things based upon a straight ASCII conversion table, and therefore it encodes things like ? and $ when it should not. Whoever is responsible for that code in the formula tool needs to re-visit it.
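To illustrate the difference, here is a small sketch of encoding that leaves the structural characters alone, using Python's standard `urllib.parse.quote` with a `safe` set. The choice of safe characters is my assumption; what counts as correct depends on what the API accepts.

```python
from urllib.parse import quote

# Characters left untouched because they carry meaning in the URL or in the
# filter expression. This set is an assumption for illustration.
URL_SAFE = ":/?&=$()',"

def encode_preserving_structure(url: str) -> str:
    """Percent-encode a URL while keeping ?, $, & and similar characters as-is."""
    return quote(url, safe=URL_SAFE)

# Hypothetical URL with spaces inside the filter expression.
example = "https://example.com/v1.0/devices?$top=999&$filter=(a eq 'b') or (c ge 1)"
encoded_example = encode_preserving_structure(example)
```

With this safe set only the spaces are escaped (as `%20`), while `?` and `$` pass through unchanged.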

Base URL: https://graph.microsoft.com/v1.0/devices?$count=true&$top=999&$filter=(startswith(operatingSystem,'W...') or startswith(operatingSystem,'Mac')) and (approximateLastSignInDateTime ge 2022-09-25T12:00:00Z)

Correct Encoding:

Hi,

Not sure if this Idea was already posted (I was not able to find an answer), but let me try to explain.

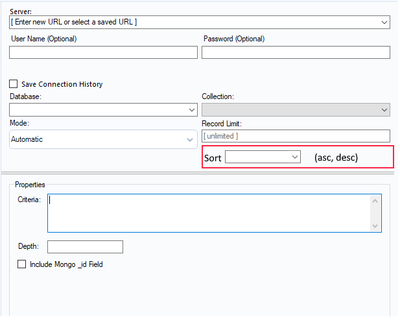

When I use the MongoDB Input tool to query the AlteryxService MongoDB (in order to identify issues on the Gallery), I have to extract all data from the AS_Result collection.

The problem is that this collection holds a huge amount of data, and extracting and then parsing _ServiceData_ (blob) consumes time and system resources.

The solution I am proposing is to add a sorting option to the MongoDB Input tool: a simple choice of ASC or DESC order.

With that I could extract, for example, the last 200 records and do my investigation instead of extracting everything.

In addition, it would be much easier to estimate the daily workload and extract (via the scheduler) only the amount of data we need to analyze every day and load the results to an external DB.
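In driver terms, the requested behaviour is a sort plus a limit on the cursor. The sketch below assumes an object that behaves like a pymongo `Collection` (`find()`, then `sort()` and `limit()` on the cursor); `latest_records` and the `sort_field` argument are hypothetical, since I don't know which field in AS_Result is suitable for ordering.

```python
def latest_records(collection, sort_field, limit=200):
    """Return the `limit` most recent documents, newest first.

    `collection` is expected to behave like a pymongo Collection;
    `sort_field` is a hypothetical timestamp or sequence field.
    """
    DESCENDING = -1  # same value as pymongo.DESCENDING
    cursor = collection.find().sort(sort_field, DESCENDING).limit(limit)
    return list(cursor)
```

Because the sort and limit are applied by the server, only the requested documents travel over the wire, which is the saving described above.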

Thanks,

Sebastian

When you first pull the Download tool onto the workspace, the password on the Connection tab is already populated, and you don't see a cursor when you click in the box! You only see a cursor if you click in the box and then type. Can you please change this so it behaves the same as the username box and is left blank?

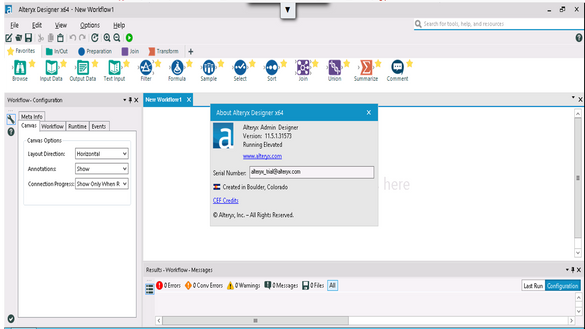

Alteryx has a 3 hour demo session on CloudShare which is very useful for quick demos...

How about having the most up-to-date version of Alteryx as the demo for starters?

- Unfortunately it's 11.5 right now... Not 11.7!!!

- I'm demoing the older, slower version to the clients.

Idea:

The Formula Tool shows an example based on the first record. Could the Filter Tool also show an example based on the first record, indicating how the formula in the filter would handle that record? The value would appear in either a true sample field or a false sample field, depending on the criteria. I feel this would be very useful for more complex formulas such as AND/OR.

Ex.

Filter Formula: [Count] <= 1

True Sample: [Blank]

False Sample: 4 (Value of first record which was filtered from [Count])
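The example above can be sketched in a few lines: evaluate the filter expression against the first record and report the value under whichever branch it lands in. `preview_filter` and the dictionary record are hypothetical stand-ins, not how the Filter Tool works internally.

```python
def preview_filter(predicate, first_record, field):
    """Show which output (true or false) the first record would go to."""
    value = first_record[field]
    if predicate(first_record):
        return {"true_sample": value, "false_sample": None}
    return {"true_sample": None, "false_sample": value}

# First record from the example: [Count] = 4, filter is [Count] <= 1.
first = {"Count": 4}
preview = preview_filter(lambda record: record["Count"] <= 1, first, "Count")
```

Here `preview` carries 4 as the false sample and nothing as the true sample, matching the example.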

I was working on an Excel file from which multiple sheets need to be pulled. I was not sure how to point to one source and pull multiple sheets from it as required. So I wanted to submit this idea: create a tool that can pull any sheet(s) from one Input tool as required.

When I import a CSV file, I sometimes use a "TAB" as the delimiter. I would like that as an option in section 5, Delimiters.

I have learned that it is possible to write "\t", but a regular menu choice would be nice.
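For reference, the `"\t"` escape is the same convention most CSV libraries use for a tab delimiter. A minimal sketch with Python's standard `csv` module (`read_tab_delimited` and the sample data are made up for illustration):

```python
import csv
import io

def read_tab_delimited(text):
    """Parse tab-separated text into a list of rows."""
    return list(csv.reader(io.StringIO(text), delimiter="\t"))

rows = read_tab_delimited("name\tcity\nAnna\tOslo\n")
```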

Currently, the Parse Address tool cannot parse a field where the entire address, including so-called "last line" information (city/state/zip), is all in the same field. It can only parse a street address contained in one field and last line information contained in a second field. Can this tool be enhanced so that it can parse a full address in a single field?
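As a rough illustration of the single-field case, the sketch below splits a comma-separated US-style address with a regular expression. It assumes the form `street, city, ST 12345`; the pattern, the helper name `split_full_address`, and the sample address are all hypothetical, and real address parsing needs far more than this.

```python
import re

# Assumed layout: "<street>, <city>, <2-letter state> <5-digit zip or zip+4>"
FULL_ADDRESS = re.compile(
    r"^\s*(?P<street>[^,]+),\s*(?P<city>[^,]+),\s*"
    r"(?P<state>[A-Za-z]{2})\s+(?P<zip>\d{5}(?:-\d{4})?)\s*$"
)

def split_full_address(text):
    """Return street/city/state/zip parts, or None if the layout differs."""
    match = FULL_ADDRESS.match(text)
    return match.groupdict() if match else None
```

Anything that does not follow the assumed layout returns `None` rather than a guess.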

Need a tool that can remove nulls in 2 ways:

1. Remove rows with null values

2. Remove columns with null values
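The two modes can be sketched over a list of dictionaries. This assumes "with null values" means "containing at least one null"; a real tool might also offer an "entirely null" variant. The function names are hypothetical.

```python
def drop_rows_with_nulls(rows):
    """Keep only rows in which every value is non-null."""
    return [row for row in rows if all(v is not None for v in row.values())]

def drop_columns_with_nulls(rows):
    """Remove every column that holds a null in at least one row."""
    null_columns = {k for row in rows for k, v in row.items() if v is None}
    return [{k: v for k, v in row.items() if k not in null_columns} for row in rows]
```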

The thinking is that you could open the config file the way you might open a macro: you pull it open, adjust/modify it, and resave it.

Save/Load Unique Key Tool Configuration – similar to saving/loading other tools configs (ie: select, join, append, etc)

Alteryx can add more advanced machine learning capabilities, such as deep learning and neural networks, to its existing set of predictive modeling tools. This will allow users to perform more complex and accurate analyses, and enable them to tackle more sophisticated machine learning problems. For example, Alteryx can add support for deep learning frameworks like TensorFlow and Keras, which will allow users to use pre-trained models or create their own models for image and natural language processing.

Alteryx can improve the data connectivity options by adding more built-in connectors to various data sources such as big data platforms, cloud-based services, and IoT devices. This will enable users to easily access and import data from a wider range of sources, without the need for complex coding or manual data preparation. For example, Alteryx can add connectors to popular big data platforms like Hadoop and Spark, or cloud-based services like AWS and Azure, which will allow users to easily import data from these platforms into Alteryx for analysis.

Hi,

Would it be possible to add an option to the Workflow Configuration / Runtime settings so that we can disable all 'Connector' input tools from connecting to the external source? At the moment, I need to put the inputs within a Container so that I can disable them, rather than have them refresh from the source every time the workflow is run. With this option I could have all my logic within the same workflow but make selections that control whether it refreshes from the source data or uses a local copy that has previously been downloaded.

I hope that this makes sense....

Peter

For the Summarize tool, allow the data type of each output field to be defined.

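1. It would be great to have a feature (button) for selecting several workflows from the list registered under Schedules and registering a "once" schedule for all of them in one go. When you register and manage many workflows and runs fail because of a network outage or a similar problem, you need to add and run the schedules all together. Currently the only options are to open each workflow in Designer and run it, or to re-register each one in the schedule individually. A way to run them in bulk would make management much easier.

2. When downloading data through an open API, the download often fails depending on the website. When it fails, I end up using Python to download the data, so it would be good if Designer's Download tool could retrieve it. I understand that many issues have already been raised about this.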
