Alteryx Designer Desktop Ideas


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and, at least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool says it requires RGB images).
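To illustrate the second point, here is a minimal sketch of class-balanced downsampling in plain Python. It assumes the training set is a list of `(image_path, label)` pairs; the function name `balance_by_label` is hypothetical and is not part of the Image Recognition tool.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample so that every label keeps the same number of images.

    samples: list of (image_path, label) tuples (hypothetical layout).
    Returns a new list in which each label appears as often as the rarest one.
    """
    if not samples:
        return []
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append((path, label))
    # The rarest label sets the per-label quota.
    quota = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    balanced = []
    for label in sorted(by_label):
        balanced.extend(rng.sample(by_label[label], quota))
    return balanced
```

Downsampling discards images from the larger classes; oversampling the smaller classes is the usual alternative when data is scarce.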

Question: do you plan to let the user choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help many more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Whenever I output the Count using the Summarize tool, I am unable to sort the results by Count and am forced to add a Sort tool. It would be nice to offer a sort option within the Summarize tool itself, instead of requiring a subsequent Sort tool or sorting manually in the Results window.
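For comparison, the requested output (a count per group, already sorted by Count in descending order) is a single step in Python's standard library. This only illustrates the desired result; it says nothing about how the Summarize tool works internally.

```python
from collections import Counter


def count_sorted(values):
    """Group-by count, sorted by Count descending.

    Counter.most_common() keeps first-encountered order for equal counts.
    """
    return Counter(values).most_common()
```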

When we create new workflows, we like to have them in our company template, to standardise documentation. This makes it easier for a supervisor to review, and for a colleague to pick up the workflow and understand what is going on. For instance, we have all data inputs on the left, all error checks and workflow validation on the right, and a section at the top with the workflow name, project name, purpose, etc. We have a workflow that we use as a template, with containers, boxes and images all in the appropriate places.

It would be great if there was an option to select a workflow as a template. When a new workflow is opened, it would load this template rather than having a blank canvas.

95% of the time I use the Directory Tool, it is only to access the FullPath content, so I immediately add a Select tool to deselect the other attributes the tool returns.

Is there any chance of adding a checkbox to retrieve only FullPath?

I couldn't find a previous idea on this, but let me know if it already exists.
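As an illustration of what the proposed "FullPath only" option would return, this sketch lists nothing but the absolute path of each matching file, using Python's `pathlib`. The function name and its `pattern`/`recursive` parameters are assumptions for illustration, loosely mirroring the Directory tool's file specification and subdirectory options.

```python
from pathlib import Path


def list_full_paths(directory, pattern="*", recursive=False):
    """Return only the absolute full path of each matching file, no other attributes."""
    root = Path(directory)
    matches = root.rglob(pattern) if recursive else root.glob(pattern)
    # Keep files only (glob also yields directories) and resolve to absolute paths.
    return sorted(str(p.resolve()) for p in matches if p.is_file())
```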

It would be helpful to be able to filter within the results window of a Browse tool for all "Not OK" records (records with leading/trailing spaces, embedded newlines, etc.) I can already filter for null and empty values, but this would be helpful for cleaning up data. I want to see the "dirty" data before taking out leading/trailing spaces or embedded new lines to see if there is something I'm missing in the data that needs to be further parsed or modified.
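A minimal sketch of the requested "Not OK" filter, assuming "dirty" means the two cases named above: leading/trailing whitespace or an embedded line break. The function names are hypothetical, and the exact rules the Browse tool uses to flag values may differ.

```python
def is_not_ok(value):
    """Flag strings with leading/trailing whitespace or embedded line breaks."""
    if not isinstance(value, str) or value == "":
        return False  # nulls, empties and non-strings are left to the existing filters
    has_edge_space = value != value.strip()
    has_newline = "\n" in value or "\r" in value
    return has_edge_space or has_newline


def dirty_rows(rows, field):
    """Return the rows whose `field` value would be flagged as Not OK."""
    return [row for row in rows if is_not_ok(row.get(field))]
```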

It would be really nice to be able to easily trace a selected field through a workflow (see below, trace in green). This would greatly help with troubleshooting.

When you use the Create Points tool, you almost always need to use a Select tool afterward to rename the resulting point field.

Can we please add a single text field to the Create Points tool, which would allow us to create and name a point in one step?

Could you please enhance the Alteryx Download tool to support SFTP connections with private key authentication as well? This is not currently supported, and all of our SFTP use cases use private keys.

I currently have to render my Alteryx-formatted reports to Excel and then upload them to Google Sheets.

It would be very useful (and improve the less well known Alteryx Reporting capabilities) to be able to render straight to a Google Sheet and preserve the formatting.

Thanks

Two very useful functions: LEAST and GREATEST.

According to https://www.w3schools.com/sql/func_mysql_least.asp

The LEAST() function returns the smallest value of the list of arguments.

Example: SELECT LEAST("w3Schools.com", "microsoft.com", "apple.com");

returns "apple.com"

GREATEST works exactly the same way but returns the greatest value of the list of arguments.

As of today, Alteryx offers Max and Min for this, but they only work with numbers, and I think the syntax is ambiguous: Max and Min work both as aggregation functions and as row functions. I would love to keep these two notions separate.

Being closer to the standard also means more interoperability.
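To make the requested semantics concrete, here is a small Python sketch of row-level LEAST/GREATEST that accepts strings as well as numbers and is kept separate from any aggregation. NULL handling differs between databases (MySQL returns NULL if any argument is NULL; PostgreSQL ignores NULLs), so the `skip_nulls` switch is an assumption, not a description of any Alteryx behaviour. Python compares strings by code point, whereas SQL results depend on the column collation.

```python
def _non_null_args(args, skip_nulls):
    """Return the values to compare, or None when the result must be NULL."""
    values = [a for a in args if a is not None] if skip_nulls else list(args)
    if not values or any(v is None for v in values):
        return None  # MySQL-style: any NULL argument makes the result NULL
    return values


def least(*args, skip_nulls=False):
    """Row-level LEAST: smallest of its arguments (strings compare lexicographically)."""
    values = _non_null_args(args, skip_nulls)
    return None if values is None else min(values)


def greatest(*args, skip_nulls=False):
    """Row-level GREATEST: largest of its arguments."""
    values = _non_null_args(args, skip_nulls)
    return None if values is None else max(values)
```

With the example above, `least("w3Schools.com", "microsoft.com", "apple.com")` returns `"apple.com"`.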

On a related topic, the coalesce function is proposed here : https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Coalesce-function/idi-p/841014

Best regards,

Simon

Hi all,

https://community.alteryx.com/t5/Alteryx-Knowledge-Base/Tool-Mastery-RegEx/tac-p/74936

@AlexKo did an excellent article on RegEx, and Mark @MarqueeCrew Frisch has helped me out of many pickles with Regex - and one of the things that I've discussed with a few folk on the community is that Regex is super-powerful ( @Ken_Black made this same comment) and can do way more than we initially understand.

The problem is not one of the power of the tool, but rather the onramp to using it (it's painful to do/experiment/run/try etc., and it doesn't give you any visual guides or hints when you've got it right or wrong, etc.).

My method is to hop straight on to http://regex101, paste in sample text, and figure out the right RegEx in their AWESOME UI, which really makes this a 5-minute job and makes me feel like I've scored at least one victory today (it is so easy, you actually feel more powerful and competent).

Could we bring some of this great User Interaction design into the RegEx tool? I honestly believe that if the RegEx tool was as easy and approachable as RegEx101.com (or why not go one better than them), we'd see an explosion in usage and creativity.

Thank you all

Sean

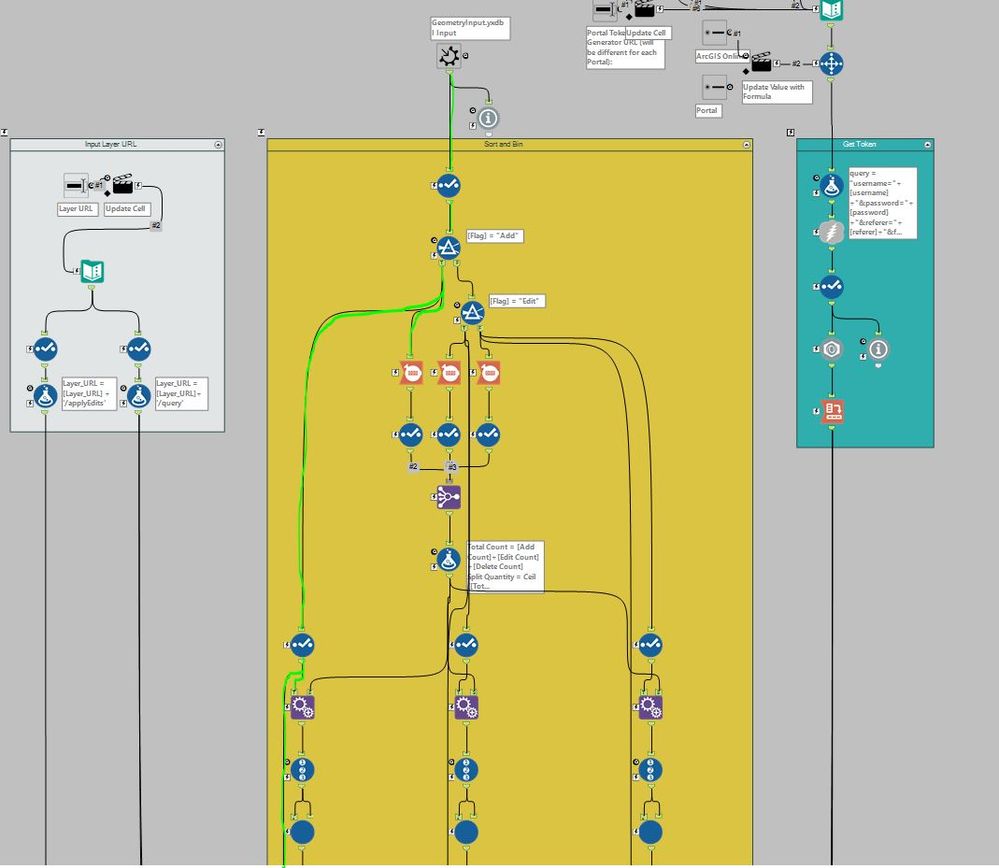

When I create a new table in an In-DB workflow, I want to specify some constraints, especially primary keys and foreign keys.

For PK/FK, the UX could be either the selection of some fields of the flow or a free field (to let the user choose a constant).

From Wikipedia:

In the relational model of databases, a primary key is a specific choice of a minimal set of attributes (columns) that uniquely specify a tuple (row) in a relation (table). Informally, a primary key is "which attributes identify a record", and in simple cases are simply a single attribute: a unique id.

So, basically, PK/FK helps in two ways:

1/ Check for duplicates, and check that the inserted values are legitimate

2/ Improve the query plan, especially for joins
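Point 1 amounts to a uniqueness and non-null check on the key columns. The Python sketch below (a hypothetical helper, assuming rows are dictionaries) reports the key values that a PRIMARY KEY constraint would reject: duplicates and keys containing nulls.

```python
from collections import Counter


def primary_key_violations(rows, key_fields):
    """Return (duplicate_keys, null_key_count) for a candidate primary key."""
    keys = [tuple(row.get(f) for f in key_fields) for row in rows]
    # A primary key column may not contain NULL.
    null_count = sum(1 for k in keys if any(v is None for v in k))
    # Among complete keys, every value combination must be unique.
    counts = Counter(k for k in keys if all(v is not None for v in k))
    duplicates = sorted(k for k, n in counts.items() if n > 1)
    return duplicates, null_count
```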

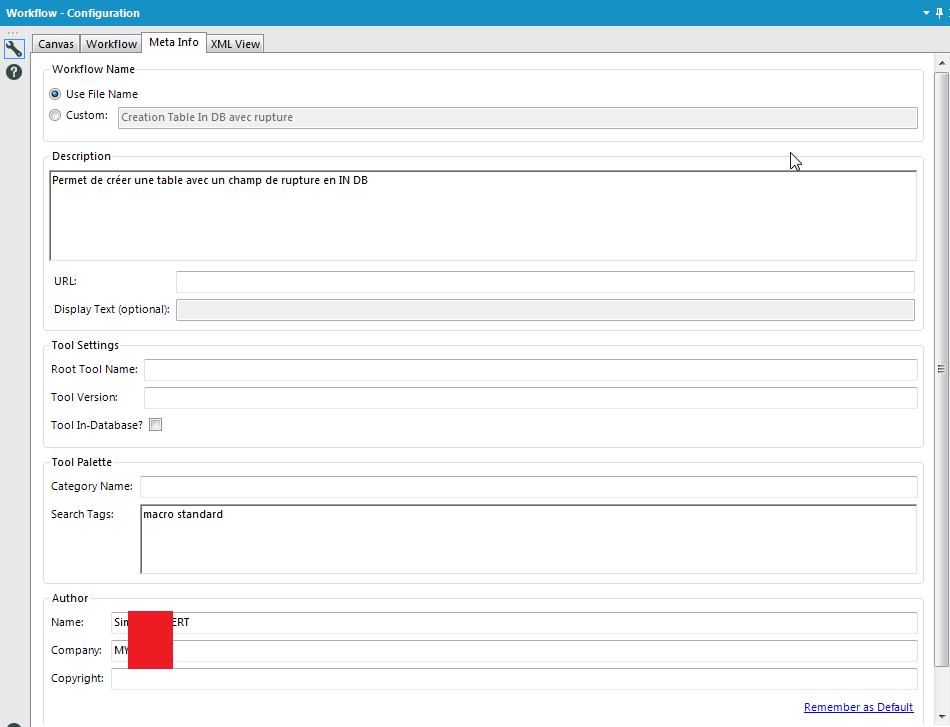

I love Workflow Meta Info, especially the ability to set the author, the search tags, the version, the description, etc.

But why can't we use it as engine constants? It doesn't seem very hard to implement, and it would make development much easier.

Extend the MongoDB tool to work with MongoDB Atlas instances.

It would be great to have an option in the Output Data tool to write the workflow name to the Info properties of Excel outputs.

Maybe something like this:

So that whenever you open an Excel file you always have a way of finding the name of the workflow that created the file.

This would make it so much easier as I often have to share Excel files with colleagues and customers and then need a way of tracking them back to workflows weeks or months later.

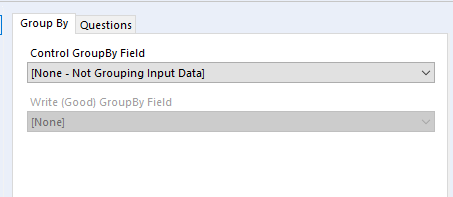

I rarely use the Group By tab on batch macros, but it's unfortunately always the first tab that pops up. When a batch macro has a Questions tab, it would be great if it appeared first (i.e., I should see the Questions tab when I click on my batch macro). Thanks!

While solving some use cases, I realized that I had to simulate factorial behaviour.

A built-in factorial formula would make this process easier.
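The behaviour that currently has to be simulated is a running product over 1..n. A minimal Python sketch follows; the function is written out only to show the logic, since Python itself already provides `math.factorial`.

```python
def factorial(n):
    """Iterative n! as a running product over 1..n (0! == 1)."""
    if not isinstance(n, int) or n < 0:
        raise ValueError("factorial is defined for non-negative integers only")
    result = 1
    for k in range(2, n + 1):
        result *= k  # one factor per step, like one row per step in a workflow
    return result
```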

Thanks!

Tools within a workflow need to be able to run in parallel wherever applicable.

For example: extracting 10 million rows from one source and 12 million rows from a different source to perform blending.

Currently, the order of execution is the order in which tools are dragged onto the canvas: Source1 first, Source2 second, and then the Join.

Here, Source1 and Source2 are completely independent, so they could run in parallel, saving workflow execution time.

Execution time is crucial when you have a tight data-loading window.

Hopefully Alteryx considers this in the next release!
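The idea can be sketched with Python's `concurrent.futures`: the two independent extracts are submitted together, and the join waits only until both results are available. `extract_source1` and `extract_source2` are hypothetical stand-ins that sleep instead of querying a database; this illustrates the scheduling idea and says nothing about how the Alteryx engine orders tools.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def extract_source1():
    time.sleep(0.2)  # stand-in for a slow, I/O-bound extract
    return {1: "a", 2: "b"}


def extract_source2():
    time.sleep(0.2)  # independent of Source1, so it can overlap with it
    return {2: "x", 3: "y"}


def run_blend():
    """Run both independent extracts concurrently, then inner-join on the key."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        f1 = pool.submit(extract_source1)
        f2 = pool.submit(extract_source2)
        left, right = f1.result(), f2.result()  # the join waits for both inputs
    return {k: (left[k], right[k]) for k in left.keys() & right.keys()}
```

Threads suit this case because extraction is mostly waiting on I/O; the total time is roughly that of the slower source rather than the sum of both.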

If a tool fails, there should be a way to customise the error message. Currently, one way to do it is to log all messages to a file, read that file with another workflow, and then customise the messages (Alteryx workflow error handling - Alteryx Community). However, there should be a more convenient solution. We should be able to:

- Find/replace parts of a message.

- Specify which tools' messages to modify.

- Change the message type.

- Change the order of the messages in the Results window, to prioritise the critical ones.

- Pick which messages cannot be hidden by "xxx more errors not displayed".

This would especially help for macros, as sometimes a specific tool fails within a macro and produces a message that is not user-friendly.
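A minimal sketch of what such rules could look like when applied to a list of messages. The `Message` and `Rule` layouts and their fields (`tool_id`, `find`, `replace`, `new_type`, `priority`) are hypothetical and do not correspond to an existing Alteryx setting; the sketch covers find/replace, per-tool targeting, type changes and reordering.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    tool_id: int
    msg_type: str  # e.g. "Error", "Warning", "Info"
    text: str


@dataclass
class Rule:
    tool_id: int                    # which tool's messages to modify
    find: str                       # substring to look for
    replace: str                    # replacement text
    new_type: Optional[str] = None  # optionally change the message type
    priority: int = 0               # higher values are shown first


def apply_rules(messages, rules):
    """Rewrite matching messages, then order them by rule priority (stable)."""
    out = []
    for msg in messages:
        prio, text, mtype = 0, msg.text, msg.msg_type
        for rule in rules:
            if rule.tool_id == msg.tool_id and rule.find in text:
                text = text.replace(rule.find, rule.replace)
                mtype = rule.new_type or mtype
                prio = max(prio, rule.priority)
        out.append((prio, Message(msg.tool_id, mtype, text)))
    out.sort(key=lambda pair: pair[0], reverse=True)  # critical messages first
    return [m for _, m in out]
```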

I noticed through the ODBC driver log that Alteryx ignores the kind of database I specify. It tests every single kind of database to find the right one and THEN runs the queries to get the metadata info.

Here is an example. I chose a Hive In-DB connection. In the Simba logs, I can find these lines:

Mar 01 11:37:21.318 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select USER(), APPLICATION_ID() from system.iota

Mar 01 11:37:22.863 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select USER as USER_NAME from SYSIBM.SYSDUMMY1

Mar 01 11:37:23.454 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from rdb$relations

Mar 01 11:37:23.546 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select first 1 dbinfo('version', 'full') from systables

Mar 01 11:37:23.707 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select #01/01/01# as AccessDate

Mar 01 11:37:23.868 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: exec sp_server_info 1

Mar 01 11:37:24.093 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select top (0) * from INFORMATION_SCHEMA.INDEXES

Mar 01 11:37:24.219 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: SELECT SERVERPROPERTY('edition')

Mar 01 11:37:24.423 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select DATABASE() as `database`, VERSION() as `version`

Mar 01 11:37:24.635 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from sys.V_$VERSION at where RowNum<2

Mar 01 11:37:25.230 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select cast(version() as char(10)), (select 1 from pg_catalog.pg_class) as t

Mar 01 11:37:25.415 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select NAME from sqlite_master

Mar 01 11:37:25.756 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select xp_msver('CompanyName')

Mar 01 11:37:26.156 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select @@version

Mar 01 11:37:26.376 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from dbc.dbcinfo

Mar 01 11:37:26.522 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: SELECT @@VERSION;

I can understand that when Alteryx doesn't know the kind of database, it tries everything (e.g., the in-memory Visual Query Builder), but here I have selected the Hive database and I still lose more than 5 seconds for nothing.
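What is being asked for amounts to a lookup: when the connection type is already known, run only that database's probe (or none) instead of trying every dialect in turn. The probe strings below are copied from the log above, but the dialect labels are an interpretation of those queries, and the dictionary and function names are hypothetical. None of the logged probes is obviously Hive-specific, so the sketch simply skips detection for a known type without a probe.

```python
# Probe queries copied from the Simba log; dialect labels are an interpretation.
PROBES = {
    "SQL Server": "SELECT SERVERPROPERTY('edition')",
    "MySQL": "select DATABASE() as `database`, VERSION() as `version`",
    "PostgreSQL": "select cast(version() as char(10)), (select 1 from pg_catalog.pg_class) as t",
    "SQLite": "select NAME from sqlite_master",
    "Teradata": "select * from dbc.dbcinfo",
}


def probes_to_run(selected_type=None):
    """Probe only the selected database type; try all of them only when it is unknown."""
    if selected_type is None:
        return list(PROBES.values())  # unknown type: fall back to full detection
    if selected_type in PROBES:
        return [PROBES[selected_type]]
    return []  # known type without a probe (e.g. Hive): skip detection entirely
```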

It would be great if you could include, in the next version of Alteryx, a new Parse tool to process dataset descriptions (metadata) formatted using the DCAT (W3C) standard.

DCAT is a standard for the description of data sets. It provides a comprehensive set of metadata that can be used to describe the content, structure, and lineage of a data set.

We believe that supporting DCAT in Alteryx would be a valuable addition to the product. It would allow us to:

- Improve the interoperability of our data sets with other systems (M2M)

- Make it easier to share and reuse our data sets

- Provide a more consistent way to describe our data sets

- Bring down the costs of describing and developing interfaces with other Government Entities

- Work on some parts of making our data Findable – Accessible – Interoperable - Reusable (FAIR)

We understand that implementing support for this standard requires some development effort (possibly done in stages, building from minimal viable support to full-blown support). However, we believe that the benefits to the Alteryx Community worldwide and to Alteryx as a top-quality data preparation tool outweigh the cost.

I also expect the effort to be manageable (perhaps a macro will do as a start), given that DCAT uses the standard RDF syntax, which can be serialized in a JSON-like form (JSON-LD).

DCAT, which stands for Data Catalog Vocabulary, is a W3C Recommendation for describing data catalogs in RDF. It provides a set of classes and properties for describing datasets, their distributions, and their relationships to other datasets and data catalogs. This allows data catalogs to be discovered and searched more easily, and it also makes it possible to integrate data catalogs with other Semantic Web applications.
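As a rough idea of what a first-stage parser could do, this sketch flattens a DCAT catalog serialized as JSON-LD into one row per distribution, using only the standard library. It assumes a simple, compacted document with `dcat:dataset` and `dcat:distribution` keys and plain string values (as in the usage sample in the test); real catalogs vary (Turtle or RDF/XML serializations, expanded JSON-LD, `@graph` wrappers, `{"@id": ...}` objects), and handling those properly would need a full RDF library. The function name is hypothetical.

```python
import json


def _as_list(value):
    """JSON-LD allows a single object where a list is expected; normalize it."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def flatten_dcat(jsonld_text):
    """Flatten a compacted DCAT JSON-LD catalog into one row per distribution."""
    doc = json.loads(jsonld_text)
    rows = []
    for ds in _as_list(doc.get("dcat:dataset")):
        # A dataset without distributions still yields one row.
        for dist in _as_list(ds.get("dcat:distribution")) or [{}]:
            rows.append({
                "dataset_id": ds.get("@id"),
                "dataset_title": ds.get("dct:title"),
                "download_url": dist.get("dcat:downloadURL"),
                "media_type": dist.get("dcat:mediaType"),
            })
    return rows
```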

DCAT is designed to be flexible and extensible, so it can be used to describe a wide variety of datasets. It is also designed to be interoperable, so it can be combined with other vocabularies to create rich and interconnected descriptions of data and knowledge.

Here are some of the benefits of using DCAT:

- Improved discoverability: DCAT makes datasets easier to discover and use, as it provides a standard way of describing their attributes.

- Increased interoperability: DCAT allows data catalogs to be integrated with other Semantic Web applications, making it possible to create more powerful and interoperable applications.

- Enhanced semantic richness: DCAT provides a way to add semantic richness to dataset descriptions, making it possible to describe datasets in a more detailed and nuanced way.

Here are some examples of how DCAT is being used:

- The DataCite metadata standard uses DCAT to describe data catalogs.

- The European Data Portal uses DCAT to discover and search for data sets.

- The Dutch Government made it a mandatory standard for all Dutch Government Agencies.

As the Semantic Web continues to grow, DCAT is likely to become even more widely used.

DCAT

- Reference Page: https://www.w3.org/TR/vocab-dcat/

- Dutch (NL) Standard: https://forumstandaardisatie.nl/open-standaarden/dcat-ap-donl

- Wikipedia on DCAT: https://en.wikipedia.org/wiki/Data_Catalog_Vocabulary

RDF

- Reference Page: https://www.w3.org/TR/REC-rdf-syntax/

- Dutch (NL) Standard: https://forumstandaardisatie.nl/open-standaarden/rdf

- Wikipedia on RDF: https://en.wikipedia.org/wiki/Resource_Description_Framework
