Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

- by showing the dimensional constraints next to each of the pre-trained models,
- by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),
- and, at least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
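The balanced split requested in the second point could work roughly like the minimal Python sketch below. It only illustrates the idea and is not the Image Recognition tool's behavior: `balanced_split` is a hypothetical helper that undersamples every label down to the size of the rarest label, then splits each label with the same train/test ratio.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so that every label contributes the same
    number of images, divided train/test with the same ratio per label.

    Labels with more images than the rarest label are randomly undersampled.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)
    rng = random.Random(seed)  # seeded so the split is reproducible

    train, test = [], []
    for label in sorted(by_label):
        chosen = rng.sample(by_label[label], per_label)
        train += [(path, label) for path in chosen[:n_train]]
        test += [(path, label) for path in chosen[n_train:]]
    return train, test
```

Undersampling to the rarest label discards images from the larger labels; that is the trade-off implied by "an equivalent number of images for each of the labels".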

Question: do you plan to let users choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help many more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


We are experiencing performance issues when Alteryx Designer fetches schema, table, and column information from a Vertica database.

From troubleshooting with Alteryx Support, we found that the query hitting "odbc_columns" contributes to the performance issue. Our Vertica DBA suggests using "columns" instead of "odbc_columns", so I am submitting this request to change the query.

Refer to case 00551930 for more info.

Hi -

We are using the new(ish) Anaplan connector tools; in particular, the "Anaplan Output" tool (send data TO Anaplan).

The issue that I'm having is that the Anaplan Output Tool only accepts a CSV file. This means that I must run one workflow to create the CSV file, then another workflow to read the CSV file and feed the Anaplan Output Tool.

If it were possible to have an output anchor on the Output tool that would simply pass the CSV records through to the Anaplan Output tool, the workflows would be drastically simplified.

Thanks,

Mark Chappell

Alteryx really needs to show a Results window for In-DB processes. Without it, we are building workflows blind, and the workarounds are too much of a hassle.

Current:

Currently, the Results window has data cleansing, filter, and sort functionality, which makes life easier.

We don't have column Rename or Data Type change functionality. To do either, we need to drag in a separate tool.

Expectation:

The Results window should be able to rename columns and change data types.

It would save a lot of time.

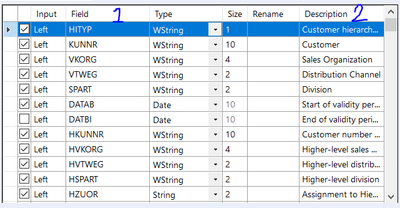

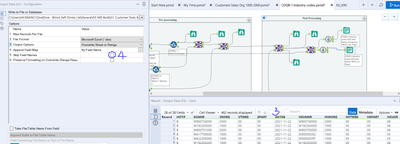

By default, the output always prints headers as shown in item 1; I am looking to print item 2 as the header. Item 3 is my output.

I tried item 4, but it doesn't work.

Please consider this for a future release. It would save a lot of time: outputs can contain hundreds of fields, and the output files are shared with a user community that understands field descriptions much better than field names. For example, the SAP field KUNNR means nothing to a user, whereas its description, 'Customer', does.

A checkbox on the Output tool should be able to toggle between the field name and its description.

You might argue that the Rename column can be used; I agree, but it would be difficult to manually type in hundreds of field names. Alternatively, you could automatically populate Rename with the description.
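The proposed checkbox amounts to a header lookup with a fallback to the field name. A minimal Python sketch of that behavior, which is not an Alteryx API: `headers_from_descriptions`, `write_csv`, and the `AMOUNT` field are hypothetical, and only the KUNNR to 'Customer' mapping comes from the example above.

```python
import csv
import io


def headers_from_descriptions(field_names, descriptions):
    """Use each field's description as its header, falling back to the
    technical field name when no description is available."""
    return [descriptions.get(name) or name for name in field_names]


def write_csv(rows, field_names, descriptions, use_descriptions=True):
    """Write rows (dicts keyed by field name) as CSV text.

    `use_descriptions` plays the role of the proposed checkbox: True writes
    descriptions as headers, False writes the raw field names.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    if use_descriptions:
        writer.writerow(headers_from_descriptions(field_names, descriptions))
    else:
        writer.writerow(field_names)
    for row in rows:
        writer.writerow([row[name] for name in field_names])
    return buffer.getvalue()
```

With `rows = [{"KUNNR": "0000100001", "AMOUNT": 250}]` and descriptions `{"KUNNR": "Customer"}`, the header line becomes `Customer,AMOUNT`; fields without a description keep their names, so a partial description table still produces a valid file.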

Hi

Sometimes when you're using a filter, you want to let everything pass through, which means you have to remove the filter or route around it.

Instead, it would be great to be able to comment out the filter expression and have the Filter tool treat that as no filter.
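Treating a commented-out condition as "no filter" would mean every record leaves through the True output. A minimal Python sketch of that semantics, assuming a hypothetical `enabled` flag stands in for the commented-out expression (this is not how the Filter tool is implemented):

```python
def apply_filter(records, expression, enabled=True):
    """Split records into (true_output, false_output).

    When the filter is disabled ("commented out"), every record goes to the
    True output, exactly as if there were no filter at all.
    """
    if not enabled:
        return list(records), []
    true_out, false_out = [], []
    for record in records:
        (true_out if expression(record) else false_out).append(record)
    return true_out, false_out
```

For example, `apply_filter([1, 2, 3], lambda r: r > 1)` routes `1` to the False output, while the same call with `enabled=False` passes all three records through the True output.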

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/BigQuery-Input-Error/td-p/440641

The BigQuery Input tool uses the TableData.List JSON API method to pull data from Google BigQuery. Per Google:

- You cannot use the TableDataList JSON API method to retrieve data from a view. For more information, see Tabledata: list.

This is not currently supported functionality of the tool. You can post this on our product ideas page to see if it can be implemented in a future product. For now, I would recommend pulling the data from the original table itself in BigQuery.

I need to be able to query both tables and views. I'm not sure how to use the TableDataList JSON API.

The look of the canvas is important. Being able to toggle between the classic icons (as seen throughout this video: https://www.youtube.com/watch?v=DJwgYYP_xlA), to the extent available, and the current icons would give users more variety. As a secondary benefit, when the classic icons are shown, users would be able to tell the classic tools apart from the newer tools that don't have an older design.

Thank you very much.

Hi,

The Imputation tool allows imputation of numeric values. It would be great if we were given the option to impute string values, and NULL values too.
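For string fields, imputation could offer choices such as the most frequent value (mode) or a user-supplied constant. A minimal Python sketch, where `impute_strings` and its `method` names are hypothetical and NULL is represented by `None`:

```python
from collections import Counter


def impute_strings(values, method="mode", fill_value=""):
    """Replace NULLs (None) in a string column.

    method="mode":     use the most frequent non-NULL value.
    method="constant": use `fill_value`.
    """
    present = [v for v in values if v is not None]
    if method == "mode":
        if not present:
            return list(values)  # no non-NULL values to take a mode from
        replacement = Counter(present).most_common(1)[0][0]
    elif method == "constant":
        replacement = fill_value
    else:
        raise ValueError("unknown method: %r" % method)
    return [replacement if v is None else v for v in values]
```

`impute_strings(["NY", None, "NY", "LA"])` fills the gap with `"NY"`, while `method="constant", fill_value="Unknown"` labels missing entries explicitly.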

The Input Data and Text Input Tools are visually distinct, so it's easy to see when a workflow is inputting live (File) or static (Text) data.

The Macro Input tool has the same appearance whether it's inputting a File or Text data, so you have to open the tool configuration to see whether it's inputting live (File) or static (Text) data. It would be great if there was a way to visually distinguish these two cases, perhaps even separating the macro tool into two tools, one for Files and one for Text.

The current Export Workflow user experience is extremely frustrating, and it sometimes takes me several attempts to export a workflow with all of the correct assets. Some ideas for improving the UX:

- Allow the width of the window to be expanded or maximized. I often have many assets that start with the same folder structure name and I have to scroll to the right for each one to decide whether to check or uncheck it.

- Add a display option to "Group assets by Type" (e.g., Input, Output, Macro). I typically package my workflows with only the embedded macros, not the Inputs or Outputs. (This is especially important during development and testing, when interim .yxdb files are saved to facilitate QC and troubleshooting.) I would like an easy way to "Check all Macros" without going through the list one by one. I may have over 100 assets; with the current UX, it's really hard to get all the right assets checked.

- Add an option to filter the display to see only the assets that have been checked.

- Add a way to copy the asset list (checked and/or unchecked) to the clipboard. This would allow us to confirm that all of the assets needed are included BEFORE EXPORTING.

- Add a Select All / Deselect All option.

On the Select tool, add a "Value if Null" column. This would work like COALESCE in SQL. For string fields, an empty string ("") would need to be an available option.
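The per-field "Value if Null" behavior can be sketched in Python, assuming records are dicts and NULL is `None`. `apply_value_if_null` is a hypothetical name, not part of the Select tool; note that an empty string is accepted as a configured value.

```python
def apply_value_if_null(records, value_if_null):
    """Return copies of `records` in which every field named in
    `value_if_null` that is NULL (None) takes the configured value,
    like COALESCE(field, value) in SQL. Fields absent from a record
    are left absent rather than added."""
    result = []
    for record in records:
        fixed = dict(record)  # do not mutate the caller's records
        for field, value in value_if_null.items():
            if field in fixed and fixed[field] is None:
                fixed[field] = value
        result.append(fixed)
    return result
```

For instance, configuring `{"name": "", "qty": 0}` turns `{"name": None, "qty": None}` into `{"name": "", "qty": 0}` and leaves non-NULL values untouched.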

Debugging could be dramatically simplified if each canvas object had the ability to be disabled/enabled. If disabled, the workflow would still pass through the object, but the object itself would be ignored.

The Find Replace tool currently does not work row by row: it finds anything in the Find column and replaces it with anything in the Replace column. I was under the impression that it worked as a row-by-row find and replace, not across entire columns, and designed my workflow accordingly.

A simple fix would be to allow users to group by RecordID, which I imagine would also speed up the Find Replace tool on larger data sets.

In the meantime, I am going to use Regex to replace the word.
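To make the difference concrete, here is a minimal Python sketch contrasting a row-by-row replace (each record uses only its own find/replace values, i.e. grouping by RecordID) with a simplified model of the whole-column behavior described above. Both functions and the field names are hypothetical, and the second function is not the Find Replace tool's actual algorithm.

```python
def find_replace_row_by_row(records, target, find_field, replace_field):
    """In each record's `target` field, replace that record's own find value
    with that record's own replace value; other rows' pairs never apply."""
    result = []
    for record in records:
        fixed = dict(record)
        find = record[find_field]
        if find:  # skip empty find values, which would match everywhere
            fixed[target] = record[target].replace(find, record[replace_field])
        result.append(fixed)
    return result


def find_replace_whole_column(records, target, find_field, replace_field):
    """For contrast: apply every row's find/replace pair to every row."""
    pairs = [(r[find_field], r[replace_field]) for r in records if r[find_field]]
    result = []
    for record in records:
        fixed = dict(record)
        for find, replace in pairs:
            fixed[target] = fixed[target].replace(find, replace)
        result.append(fixed)
    return result
```

With rows `"red car"` (find `red`, replace `blue`) and `"red hat"` (find `hat`, replace `cap`), the row-by-row version yields `"blue car"` and `"red cap"`, whereas the whole-column version also rewrites the second row to `"blue cap"`.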

Thanks!

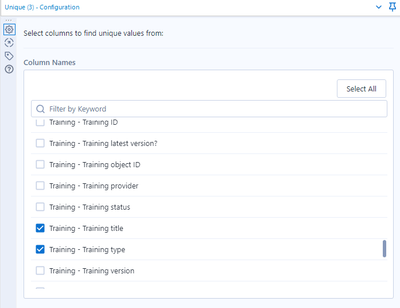

It would be nice if the fields selected in the Unique tool were easy to see (for example, by grouping the selected fields).

The issue is that when only a few out of many fields are selected for Unique, it is hard to review which fields have been selected in the Unique tool configuration.

Here's an example. It is difficult to see all the fields which have been selected. (There are 7 fields selected in this example.)

In the current Output Data tool, when choosing a bulk loader option, say for Teradata, the tool automatically uses the first column as the primary index. That is absolutely wrong, especially on Teradata, because of how it might be configured. My Teradata management team tells me that the created table, whether in temp space or not, becomes very lopsided and isn't distributed across the AMPs appropriately.

They recommend that I specify "NO PRIMARY INDEX" instead, but that is not an option in the Output tool.

The Output tool does not allow any database-specific tweaks that might actually make things more efficient.

Additionally, when using the bulk loader, if the POST SQL uses the table created by the bulk load, I get an error message that the data load is not yet complete.

It would be very useful if the POST SQL were executed only after the bulk data is actually loaded and complete, not merely cached by Teradata (or any other database engine) waiting to be committed.

Furthermore, if I want the POST SQL (or some similar mechanism) to return data, status, or output, I cannot do so with the current Output tool.

It would be very helpful if there was a way to allow that.

All the other data types get basic filters, but Time doesn't get any besides a NULL check.

If I draw a line that crosses the Pacific Ocean, the path is split in half, and the halves are connected by a line that goes over the Atlantic.

This isn't just cosmetic. If I intersect this object with another polygon, it shows that the two objects interact even though they should not. The only way I can fix this is to manually divide my polyline into two objects where it crosses the Pacific Ocean.
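Until this is fixed, the manual workaround (splitting the polyline where it crosses the Pacific) can be automated outside Alteryx. A minimal Python sketch, assuming longitudes in [-180, 180] and that any step spanning more than 180 degrees of longitude is meant to cross the antimeridian. The crossing latitude is linearly interpolated in longitude/latitude space, an approximation rather than a great-circle calculation, and `split_at_antimeridian` is a hypothetical helper, not an Alteryx function.

```python
def split_at_antimeridian(points):
    """Split a polyline of (lon, lat) points into parts that never jump
    across the +/-180 degree meridian.

    Assumes longitudes in [-180, 180], that any step spanning more than
    180 degrees of longitude takes the short way across the antimeridian,
    and that a point at +180 is never immediately followed by one at -180.
    """
    parts = [[points[0]]]
    for (lon1, lat1), (lon2, lat2) in zip(points, points[1:]):
        if abs(lon2 - lon1) > 180:
            # Unwrap lon2 by 360 degrees so the segment becomes continuous.
            lon2_unwrapped = lon2 + 360 if lon2 < lon1 else lon2 - 360
            # The side of the antimeridian the segment is leaving from.
            edge = 180.0 if lon1 > 0 else -180.0
            t = (edge - lon1) / (lon2_unwrapped - lon1)
            lat_cross = lat1 + t * (lat2 - lat1)
            parts[-1].append((edge, lat_cross))  # close the current part
            parts.append([(-edge, lat_cross)])   # start a new part
        parts[-1].append((lon2, lat2))
    return parts
```

A segment from (170, 10) to (-170, 20) becomes two parts that meet the antimeridian at latitude 15; each part can then be built as its own polyline before running the spatial intersection.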

Please fix this.

Hello all,

EDIT: silly me, it's an Excel output limitation, not an Alteryx limitation :( Can you please delete this idea?

I had to convert some strings into dates and got this error message (with both the Select tool and the DateTime tool):

ConvError: Output Data (10): Invalid date value encountered - earliest date supported is 12/31/1899 error in field: DateMatch record number: 37399

This cutoff is far too restrictive. Just think of birth dates or geological/archaeological data!

Also, other products such as Tableau support earlier dates!

I hope to see that changed soon.

Best regards,

Simon

It would be great if you could support Snowflake window functions within the In-DB Summarize tool.
