Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea? Be sure to review our Idea Submission Guidelines for more information!

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by listing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> by at least allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))
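The balanced split requested above could look roughly like the following sketch. It is not how the Image Recognition tool works internally; the function name `balanced_split`, the file names, and the downsampling strategy (trimming every label to the size of the rarest one) are assumptions made for illustration.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (path, label) pairs so every label contributes the same count.

    Hypothetical helper: each label is downsampled to the size of the
    rarest label, then divided into train and test parts.
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    # Every label is trimmed to the size of the smallest class.
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)

    train, test = [], []
    for label, paths in sorted(by_label.items()):
        chosen = rng.sample(paths, per_label)
        train += [(p, label) for p in chosen[:n_train]]
        test += [(p, label) for p in chosen[n_train:]]
    return train, test


# Made-up file names: 10 "cat" images and 4 "dog" images.
samples = [(f"cat_{i}.png", "cat") for i in range(10)]
samples += [(f"dog_{i}.png", "dog") for i in range(4)]
train, test = balanced_split(samples, train_fraction=0.75)
```

Downsampling throws away data from the larger classes; oversampling the rare classes would be an alternative design with the opposite trade-off.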

There is a great functionality in Excel that lets users "seek" a value that makes whatever chain of formulas you might have work out to a given value. Here's what Microsoft explains about goal seek: https://support.office.com/en-us/article/Use-Goal-Seek-to-find-a-result-by-adjusting-an-input-value-...

My specific example was this:

In the Excel file (attached), all you have to do is click on the highlighted blue cell, select the “Data” tab up top, then “What-If Analysis”, and finally “Goal Seek.” Then you set the dialogue box up to look like this:

Set cell: G9

To Value: 330

By changing cell: J6

And hit “Okay.” Excel then iteratively finds the value for the cell J6 that makes the cell G9 equal 330. Can I build a module that will do the same thing? I’m figuring I wouldn’t have to do it iteratively, if I could build the right series of formulas/commands. You can see what I’m trying to accomplish in the formulas I’ve built in Excel, but essentially I’m trying to build a model that will tell me what the % Adjustment rate should be for the other groups when I’ve picked the first adjustment rate, and the others need to change proportionally to their contribution to the remaining volume.

As far as I can see, there isn't a way to do this in Alteryx. I hate to think there is something that Excel can do that Alteryx can't!
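For readers unfamiliar with what Goal Seek does, the iterative search described above can be sketched with a simple bisection. This is not Excel's actual algorithm and not an Alteryx feature; the function `goal_seek`, the toy model `total`, and its numbers are invented for illustration (they are not the formulas in the attached spreadsheet). The sketch assumes the model is continuous and that the target lies between the outputs at the two starting bounds.

```python
def goal_seek(f, target, lo, hi, tol=1e-9, max_iter=200):
    """Find x in [lo, hi] such that f(x) is approximately target.

    Bisection sketch: requires f(lo) - target and f(hi) - target
    to have opposite signs.
    """
    g_lo = f(lo) - target
    g_hi = f(hi) - target
    if g_lo == 0:
        return lo
    if g_hi == 0:
        return hi
    if g_lo * g_hi > 0:
        raise ValueError("target is not bracketed by [lo, hi]")

    for _ in range(max_iter):
        mid = (lo + hi) / 2
        g_mid = f(mid) - target
        if abs(g_mid) < tol or (hi - lo) / 2 < tol:
            return mid
        if g_lo * g_mid < 0:
            hi = mid                  # root is in the lower half
        else:
            lo, g_lo = mid, g_mid     # root is in the upper half
    return (lo + hi) / 2


# Toy model (made-up numbers): one group fixed at a 10% adjustment,
# the second group's rate is the input being solved for.
def total(rate):
    return 100 * (1 + 0.10) + 200 * (1 + rate)


rate = goal_seek(total, target=330, lo=-1.0, hi=1.0)
```

When the formula chain can be inverted algebraically, as the post suspects, no iteration is needed; the search is only a fallback for models that cannot be solved directly.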

Currently we resort to running a manual CREATE TABLE script in Redshift in order to define a distribution key and a sort key.

See below:

http://docs.aws.amazon.com/redshift/latest/dg/tutorial-tuning-tables-distribution.html

It would be great to have functionality similar to the bulk loader for Redshift that lets one define distribution keys and sort keys, as these greatly improve performance with larger datasets.

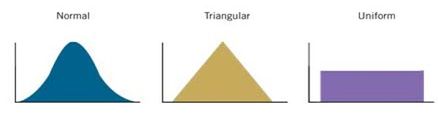

The triangular distribution is one of the common ways to approximate different types of normal and beta distributions.

Alteryx doesn't have a function for this, and neither does Excel, but tools such as

- SAS (randgen(x, "Triangle", c)) and

- Mathematica (TriangularDistribution[{min,max},c])

include one.

Can we add something like randtriangular(min,mode,max)?

I have attached my solution, but a built-in function would simplify the workflow...

Best
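As an illustration of what a function like randtriangular(min,mode,max) would compute, here is a minimal inverse-CDF sketch. It is not the attached solution; the name `rand_triangular` and the optional `u` argument (a uniform draw, exposed so the result can be checked) are assumptions.

```python
import math
import random


def rand_triangular(minimum, mode, maximum, u=None):
    """Draw from a triangular distribution by inverting its CDF.

    u is a uniform number in [0, 1); one is drawn when it is omitted.
    """
    if not (minimum <= mode <= maximum) or minimum == maximum:
        raise ValueError("need minimum <= mode <= maximum and minimum < maximum")
    if u is None:
        u = random.random()

    span = maximum - minimum
    cut = (mode - minimum) / span  # CDF value at the mode
    if u < cut:
        # Left of the mode: the CDF rises quadratically from minimum.
        return minimum + math.sqrt(u * span * (mode - minimum))
    # Right of the mode: mirror image measured from maximum.
    return maximum - math.sqrt((1 - u) * span * (maximum - mode))


sample = rand_triangular(0, 5, 10)
```

Python's standard library already ships `random.triangular(low, high, mode)`, so a hand-written version like this is mainly useful for porting the formula into another expression language.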

I constantly use the rand() function, but I also need:

- a normal distribution function like the one we have in Excel, and

- a triangular distribution function too...

Idea: can we please add normdist() and triang(min,mode,max) functions?

Best

Edit: for the normal distribution, I have attached a discretized example...
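For comparison, an Excel-style normdist() can be sketched in a few lines. The argument order below mirrors Excel's NORMDIST(x, mean, standard_dev, cumulative); the function itself is a hypothetical stand-in, not an existing Alteryx function, and it relies on `statistics.NormalDist` from the Python standard library (Python 3.8 or later).

```python
from statistics import NormalDist


def normdist(x, mean, standard_dev, cumulative=True):
    """Return the normal CDF at x (cumulative=True) or the density at x."""
    dist = NormalDist(mu=mean, sigma=standard_dev)
    return dist.cdf(x) if cumulative else dist.pdf(x)


half = normdist(0, 0, 1)            # probability of a value below the mean
peak = normdist(0, 0, 1, False)     # density at the mean
```

For random draws rather than probabilities, `random.gauss(mu, sigma)` in the standard library plays the role that rand() plays for the uniform case.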

Hi, All.

As a newbie, I am impressed with Alteryx's ability to deal with lots of formats and connections when importing data, and in a pretty simple way.

However, I feel it is missing something much more "basic", in my opinion at least: the option of telling Alteryx which decimal separator is used in the data being imported. Excel, SAS, and IBM SPSS, to name a few, all offer this, having a default of comma as the decimal separator but letting the user opt to change it. In the US, the integer part of a number is separated from the fractional part by a dot. Almost all of the rest of the world (there are other exceptions) uses a comma instead...

I have posted a flow to deal with it on the Alteryx Gallery (it is attached here), but it is, at least in my opinion, a cumbersome workaround for something that should be pretty straightforward.

So... is this something only I feel, or is this a suggestion that could be considered as an improvement to the Input & Output tools in future releases of Alteryx?

My best regards,

Bruno.
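The option described above amounts to a small normalization step at import time. The sketch below is not the Gallery flow mentioned in the post; `parse_number` and its parameter names are assumptions, and it only handles plain numbers with optional thousands separators.

```python
def parse_number(text, decimal_sep=",", thousands_sep="."):
    """Convert text such as '1.234,56' to a float using the given separators."""
    if decimal_sep == thousands_sep:
        raise ValueError("decimal and thousands separators must differ")
    cleaned = text.strip()
    if thousands_sep:
        # Grouping characters carry no value, so they are dropped first.
        cleaned = cleaned.replace(thousands_sep, "")
    if decimal_sep != ".":
        cleaned = cleaned.replace(decimal_sep, ".")
    return float(cleaned)


european = parse_number("1.234,56")                               # comma decimal
american = parse_number("1,234.56", decimal_sep=".", thousands_sep=",")
```

Making the separators explicit parameters, instead of guessing from the data, avoids ambiguous cases such as "1,234", which is one thousand two hundred thirty-four in one convention and just over one in the other.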

Idea:

I know cache-related ideas have already been posted (cache macros; cache tools), but I would like it if cache were simply built into every tool, similar to the way it is on the Input Tool.

Reasoning:

During workflow development, I'll run the workflow repeatedly, and especially if there is sizeable data or an R tool involved, it can get really time consuming.

Implementation ideas:

I can see where managing cache could be tricky: in a large workflow processing a lot of data, nobody would want to maintain dozens of copies of that data. But there may be ways of just monitoring changes to the workflow in order to know if something needs to be rebuilt or not: e.g. suppose I cache a Predictive Tool, and then make no changes to any tool preceding it in the workflow... the next time I run, the engine should be able to look at "cache flags" and/or "modified tool flags" to determine where it should start: basically start at the "furthest along cache" that has no "modified tools" preceding it.

Anyway, just a thought.
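The "cache flags" and "modified tool flags" idea above can be sketched as a walk over the workflow graph. This is only an illustration of the proposal, not how the Alteryx engine works; the function `tools_to_rerun`, the dictionary layout, and the tool names are hypothetical, and the workflow is assumed to have no cycles.

```python
def tools_to_rerun(upstream, modified, cached):
    """Return the tools that must run again.

    upstream: dict mapping each tool to the list of tools feeding it.
    modified: set of tools edited since the last run.
    cached:   set of tools whose output is still stored.
    """
    stale = {}

    def is_stale(tool):
        # A tool is stale if it was edited or if anything upstream is stale.
        if tool not in stale:
            stale[tool] = tool in modified or any(
                is_stale(parent) for parent in upstream.get(tool, [])
            )
        return stale[tool]

    return sorted(t for t in upstream if is_stale(t) or t not in cached)


workflow = {
    "Input": [],
    "Filter": ["Input"],
    "R Model": ["Filter"],
    "Report": ["R Model"],
}

# Only the last tool was edited, so the expensive R step is served from cache.
only_report = tools_to_rerun(workflow, {"Report"}, {"Input", "Filter", "R Model"})

# Editing an early tool invalidates everything downstream of it.
from_filter = tools_to_rerun(
    workflow, {"Filter"}, {"Input", "Filter", "R Model", "Report"}
)
```

The sketch ignores the storage question raised in the post: deciding which outputs are worth keeping on disk is a separate policy from deciding which tools are stale.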

We don't have Server. Sometimes it's easy to share a workflow the old fashioned way - just email a copy of it or drop it in a shared folder somewhere. When doing that, if the target user doesn't have a given alias on their machine, they'll have issues getting the workflow to run.

So, it would be helpful if saving a workflow could save the aliases along with the actual connection information. Likewise, it would then be nice if someone opening the workflow could add the aliases found therein to their own list of aliases.

Granted, there may be difficulties - this is great for connections using integrated authentication, but not so much for userid/password connections. Perhaps (if implemented) it could be limited along these lines.

In the Report Map tool, I'm locked from changing the 'Background Color' menu, and the color appears to be set to R=253, G=254, B=255, which is basically white.

However, when we use our TomTom basemap, we see that the background is actually blue, despite what's listed in the Background Color window. (This goes beyond the 'Ocean' layer, and appears to cover all space 'under' the continents and ocean.) Since we often print large maps of the east coast, this tends to use a lot of blue ink. I've attached a sample image to illustrate this.

My workaround to date has been to edit the underlying TeleAtlas text file and change the default background (117 157 181) to white (255 255 255). Unfortunately, we lose these changes with each data update.

Could Alteryx unlock the Background Color menu, and have it affect the 'base' layer, underneath oceans and continents in TomTom maps? Not sure how it might affect aerial imagery.

Would it be possible to change the default setting of writing to a tde output to "overwrite file" rather than the "create new file" setting? Writing to a yxdb automatically overwrites the old file, but for some reason we have to manually make that change for writing to a tde output. Can't tell you how many times I run a module and have it error out at the end because it can't create a new file when it's already been run once before!

Thanks!

Just ran into this today. I was editing a local file that is referenced in a workflow for input.

When I tried to open the workflow, Alteryx hangs.

When I closed the input file, Alteryx finished loading the workflow.

If the workflow is trying to run, I can understand this behavior but it seems odd when opening the workflow.

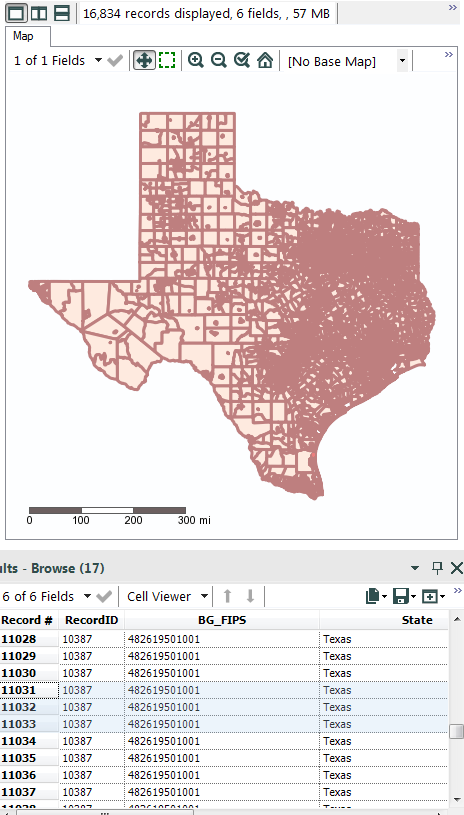

When viewing spatial data in the browse tool, the colors that show a selected feature from a non-selected one are too similar. If you are zoomed out and have lots of small features, it's nearly impossible to tell which spatial feature you have selected.

It would be great to give the user the ability to specify the border and/or fill color for selected features. This would really help them stand out more. A custom option would also be nice so we can choose a color that is consistent with other GIS software we may use.

As an example, I attached a picture where I have 3 records selected, but it takes some scanning to find where they are in the "map".

Thanks

There is MD5 hashing capability, using MD5_ASCII(String) and MD5_UNICODE(String), found under the string functions.

It seems possible to use it to mask sensitive data...

BUT! Using the following site, it's child's play to reverse an MD5 hash --> https://hashkiller.co.uk/md5-decrypter.aspx

I entered "password" and hashed it with MD5, giving me 5f4dcc3b5aa765d61d8327deb882cf99

The site gave me the original value in 131 ms...

It may be wise to have;

SHA512_ASCII(String) and

SHA512_UNICODE(String)

Best

Altan Atabarut @Atabarezz
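To show what the requested functions would return, here is a sketch using Python's `hashlib`. The helper names and the UTF-8 default are assumptions; the byte encodings Alteryx uses for its _ASCII and _UNICODE variants are not stated in the post. One caveat worth noting: lookup sites work because unsalted hashes of common strings are precomputed, so a longer hash alone does not protect a value like "password". The keyed variant at the end (HMAC with a secret key, also an assumption rather than part of the request) is one common way to address that.

```python
import hashlib
import hmac


def md5_hex(text, encoding="utf-8"):
    """MD5 digest as hex, for comparison with the value in the post."""
    return hashlib.md5(text.encode(encoding)).hexdigest()


def sha512_hex(text, encoding="utf-8"):
    """Plain SHA-512 digest as 128 hex characters."""
    return hashlib.sha512(text.encode(encoding)).hexdigest()


def keyed_sha512_hex(text, secret_key, encoding="utf-8"):
    """HMAC-SHA512: the digest also depends on a secret key (bytes)."""
    return hmac.new(secret_key, text.encode(encoding), hashlib.sha512).hexdigest()


digest_md5 = md5_hex("password")      # 5f4dcc3b5aa765d61d8327deb882cf99
digest_sha = sha512_hex("password")
digest_keyed = keyed_sha512_hex("password", b"example-secret-key")
```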

Google BigQuery (GBQ) tables can contain so-called complex fields, which means that a table "cell" can hold an array. These arrays are currently not supported by the Simba ODBC driver for GBQ, i.e. you can't download the full table with arrays.

All Google Analytics Premium exports to GBQ contain these arrays, so as an example, please have a look at the dataset provided via this link:

https://support.google.com/analytics/answer/3416091?hl=en

The way to be able to query these tables is via a specific GBQ SQL query, for instance:

Select * From flatten(flatten(flatten(testD113.ga_sessions,customDimensions),hits.customVariables),hits.customDimensions).

This is not currently supported from within the Input tool with the Simba ODBC driver for GBQ, which I would like to suggest as a product idea.

Thanks,

Hans

I regularly type true or false into a formula, expecting it to work.

As I understand it, SFTP support is planned for the next release (10.5). Are there plans to support PKI-based authentication as well?

This would be handy as lots of companies are moving files around with 3rd parties and sometimes internally also and to automate these processes would be very helpful. Also, some company policies would prevent using only Username/Password for authentication.

Anybody else have this requirement? Comments?

I know there's the Download tool, but have a look at that topic: the easiest solution so far is to use an external API with import.io.

I'm coming from the Excel world, where you enter a URL in Power Query, and it scans the page, identifies the tables in it, asks you which one you want to retrieve, and gets it for you. This takes a copy and paste and 2 clicks.

Wouldn't it be great to have something similar in Alteryx?

Now if it also supported authentication you'd be my heroes 😉

Thanks

Tibo

I've found that double-clicking in the expression builder results in varying behavior (possibly due to field names with spaces?)

It would be great if double-clicking a field selected everything from bracket to bracket inclusive, making it simpler to replace one field with another.

For instance:

Current:

IF [AVG AGE] >=5 THEN "Y" ELSE "N" ENDIF

Desired:

IF [AVG AGE] >=5 THEN "Y" ELSE "N" ENDIF

I periodically consume data from state governments that is available via an ESRI ArcGIS Server REST endpoint. Specifically, a FeatureServer class.

For example: http://staging.geodata.md.gov/appdata/rest/services/ChildCarePrograms/MD_ChildCareHomesAndCenters/Fe...

Currently, I have to import the data via ArcMap or ArcCatalog and then export it to a datatype that Alteryx supports.

It would be nice to access this data directly from within Alteryx.

Thanks!

This request is largely based on the implementation found on AzureML; (take their free trial and check out the Deep Convolutional and Pooling NN example from their gallery). This allows you to specify custom convolutional and pooling layers in a deep neural network. This is an extremely powerful machine learning technique that could be tricky to implement, but could perhaps be (for example) a great initial macro wrapped around something in Python, where currently these are more easily implemented than in R.

Very confusing.

DateTimeFormat

- Format string - %y is a 2-digit year, %Y is a 4-digit year. How about yy or yyyy? Much easier to remember and consistent with other tools like Excel.

DateTimeDiff

- Format string - 'year', but in the function above the year is referenced as %y?? Too easy to mix these up.

Also, documentation is limited. Give a separate page for each function and an overview to discuss date handling.
