Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (in order to have an equivalent number of images for each label),

> at a minimum, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
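Until such a splitting tool exists, the balancing step could be done outside the tool. The following is only a minimal sketch of one way to do it (downsampling every label to the size of the smallest one); the function name `balance_by_label` and the `(image_path, label)` input shape are assumptions for illustration, not part of Alteryx.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample every label to the size of the smallest label.

    `samples` is a list of (image_path, label) pairs (hypothetical shape).
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)
    if not by_label:
        return []

    # The smallest class decides how many images each label keeps.
    target = min(len(paths) for paths in by_label.values())

    rng = random.Random(seed)  # fixed seed so the selection is repeatable
    balanced = []
    for label in sorted(by_label):
        for path in rng.sample(by_label[label], target):
            balanced.append((path, label))
    return balanced
```

Downsampling discards images from the larger classes; oversampling the smaller classes would be the alternative if the data set is small.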

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Debugging could be dramatically simplified if each canvas object had the ability to be disabled/enabled. If disabled, the workflow would still pass through the object, but the object itself would be ignored.

The Find Replace tool currently does not run row by row: it finds anything in the find column and replaces it with anything in the replace column. I was under the impression that it worked row by row, and I designed my workflow on that basis, not to match across entire columns.

A simple fix would be to allow users to group by RecordID, which I would imagine would also speed up the Find Replace tool for larger data sets.

In the meantime, I am going to use Regex to replace the word.

Thanks!
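To make the requested behavior concrete, here is a rough sketch of a row-by-row find and replace, where each row's find value is only applied to that same row's text. The column names `Text`, `Find`, and `Replace` are assumptions for illustration, and the whole-word matching is a choice made here, not something the tool does.

```python
import re


def replace_per_row(rows, text_key="Text", find_key="Find", replace_key="Replace"):
    """Apply each row's own find/replace pair to that row's text only."""
    result = []
    for row in rows:
        new_row = dict(row)
        find = row[find_key]
        if find:
            # Match the find value as a whole word, treated literally.
            pattern = r"\b" + re.escape(find) + r"\b"
            replacement = row[replace_key]
            # A function replacement keeps backslashes in the value literal.
            new_row[text_key] = re.sub(pattern, lambda m: replacement, row[text_key])
        result.append(new_row)
    return result
```

With this approach, a find value from one row never touches the text of another row, which is the difference from the column-wide behavior described above.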

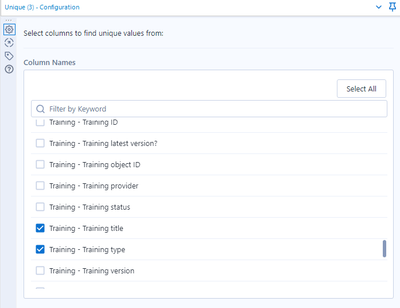

It would be nice if the fields selected in the Unique tool were easily visible (for example, by grouping the selected fields).

The issue is that when only a few out of many fields are selected for Unique, it is hard to review which fields have been selected in the Unique tool configuration.

Here's an example. It is difficult to see all the fields which have been selected. (There are 7 fields selected in this example.)

In the current Output Data tool, when choosing a bulk loader option, say for Teradata, the tool automatically sets the first column as the primary index. That is incorrect, especially on Teradata, because of how it might be configured. My Teradata management team tells me that the created table, whether in a temp space or not, becomes very lopsided and is not distributed across the AMPs appropriately.

They recommend that instead of that, I should specify "NO PRIMARY INDEX" but that is not an option in the Output Tool.

The Output tool does not allow any database specific tweaks that might actually make things more efficient.

Additionally, when using the Bulk Loader, if the POST SQL uses the table created by the bulk loading, I get an error message that the data load is not yet complete.

It would be very useful if the POST SQL were executed only after the bulk data is actually loaded and complete, not merely cached by Teradata or any other database engine pending commit.

Furthermore, if I wanted either the POST SQL or some such way to return data or status or output, I cannot do so in the current Output Tool.

It would be very helpful if there was a way to allow that.

Lately, Alteryx cannot read many TAB files (I am finding this with files created in MapInfo 16). I have posted about this in other forums but wanted to bring it up in the product ideas section as well.

All the other data types get basic filters but time doesn't get any besides a NULL check:

If I draw a line that crosses the Pacific Ocean, the path is split in half and the halves are connected by a line that goes over the Atlantic.

This isn't just cosmetic. If I intersect this object with another polygon, it will show that the two objects interact even though they should not. The only way I can fix this is to manually divide my polyline into two objects where it crosses the Pacific Ocean.

Please fix this.
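The manual workaround described above (dividing the polyline where it crosses the Pacific) can be sketched in code. This is only an illustration under simplifying assumptions: points are `(lon, lat)` pairs in degrees, the crossing latitude is linearly interpolated rather than computed along a great circle, and the function name `split_at_antimeridian` is hypothetical.

```python
def split_at_antimeridian(points):
    """Split a polyline of (lon, lat) pairs where it crosses +/-180 degrees."""
    if not points:
        return []
    parts = [[points[0]]]
    for (lon1, lat1), (lon2, lat2) in zip(points, points[1:]):
        # A longitude jump of more than 180 degrees means the short way
        # between the two points goes across the antimeridian.
        if abs(lon2 - lon1) > 180:
            if lon1 > 0:
                edge, lon2_unwrapped = 180.0, lon2 + 360
            else:
                edge, lon2_unwrapped = -180.0, lon2 - 360
            # Fraction of the segment travelled before reaching the edge.
            t = (edge - lon1) / (lon2_unwrapped - lon1)
            lat_cross = lat1 + t * (lat2 - lat1)
            parts[-1].append((edge, lat_cross))      # end the current part
            parts.append([(-edge, lat_cross)])       # start on the other side
        parts[-1].append((lon2, lat2))
    return parts
```

Each returned part stays on one side of the antimeridian, so intersecting it with another polygon no longer involves a segment that wraps the wrong way around the globe.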

Hello all,

EDIT: stupid me, it's an Excel limitation in output, not an Alteryx limitation :( Can you please delete this idea?

I had to convert some strings into dates and I got this error message (with both the Select tool and the DateTime tool):

ConvError: Output Data (10): Invalid date value encountered - earliest date supported is 12/31/1899 error in field: DateMatch record number: 37399

This limit is far too restrictive. Just think of birthdates or geological/archaeological data!

Also, other products such as Tableau support earlier dates!

I hope to see that changed soon.

Best regards,

Simon
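Since the limit comes from the Excel output, one option is to flag the affected records before writing. A small sketch, assuming the records are dictionaries holding `datetime.date` values in a `DateMatch` field (the field name is taken from the error message; the function name and the 1-based record numbering are assumptions):

```python
from datetime import date

# Earliest date quoted in the error message above.
EARLIEST_SUPPORTED = date(1899, 12, 31)


def find_unsupported_dates(records, field="DateMatch"):
    """Return (record_number, value) pairs for dates before the limit.

    Record numbers start at 1, which is an assumption about how the
    error message counts records.
    """
    flagged = []
    for number, record in enumerate(records, start=1):
        value = record.get(field)
        if value is not None and value < EARLIEST_SUPPORTED:
            flagged.append((number, value))
    return flagged
```

The flagged records could then be written as text, or sent to an output format without this date limit.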

Would be great if you could support Snowflake window functions within the In-DB Summarize tool

The Find Replace tool in the Join tools defaults to "Any Part of Field".

However, 99% of the time I select "Entire Field", so I would like a way to have "Entire Field" selected as my default.

It would be nice if Alteryx knew which option I am most likely to use, based on my always checking that box, or if none of the options were preselected, so that I am forced to select the correct one.

Most of the time when I make a mistake, it is because this tool was set to "Any Part of Field" and I forgot to change it.

I would imagine most people use this tool to find an exact match?

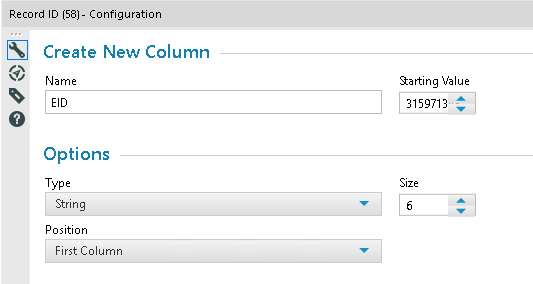

The user is not able to see the complete value; only 7 digits are shown.

The current Azure Data Lake tool appears to lack the ability to connect when fine-grained access is enabled within ADLS using Access Control Lists (ACL).

It does work when Role-Based Access Control (RBAC) is used, but ACL provides more fine-grained control over the environment.

For example, with any of the current auth types (End-User Basic, End-User (advanced), or Service-to-Service), the connector works if the user has RBAC access to ADLS.

In that scenario, though, the user would be granted access to an entire container, which isn't ideal:

- azureStorageAccount/Container/Directory

- Example: azureStorageAccount/Bronze/selfService1 or azureStorageAccount/Bronze/selfService2

- In RBAC, the user is granted access at the container level and to everything below it, so you cannot set different permissions on selfService1 and selfService2, which may have different use cases

The ideal authentication would be at the directory level, to best control access and enable self-service data analytics teams to use Alteryx

- In this access pattern, the user would only be granted access at the directory level (e.g. selfService1 or selfService2 from above)

The existing tool appears to be limited: if you don't have access at the container level but only at the directory level, the tool cannot complete the authentication request. Supporting this would require the tool's input to allow selecting a container (aka file system name) from the drop-down that includes the container plus the directory

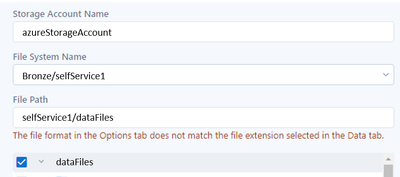

- Screenshot example A below shows how the file system name would need to be input

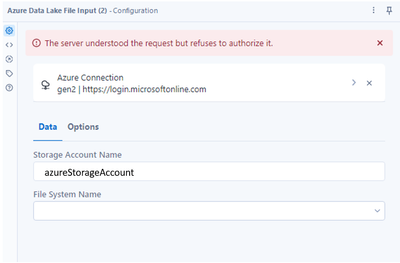

- Screenshot example B below shows what happens if you have ACL access to ADLS at the directory level and not at the container level

Access control model for Azure Data Lake Storage Gen2 | Microsoft Docs

Example A

Example B

I use the Dynamic Input tool a lot. When reading a list of sheets, I simply enter a.xlsx|||Sheet1 (a random file name).

However, when the workflow runs, it verifies the existing file (a.xlsx) instead and stops with an error (old file not found).

Suggestion:

1. Verify the new path (file) and not the old path (file), or

2. Provide an option to ignore the error

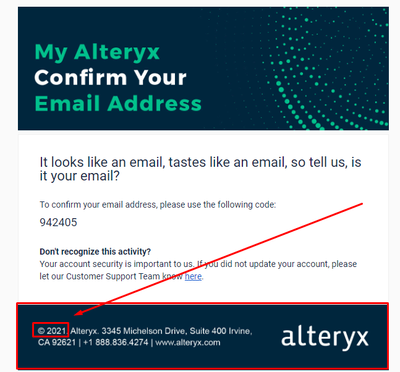

This is not exactly a new feature but I didn't know where else to send it.

I just received an email from Alteryx and I noticed that the footer is an image and not dynamic.

There you can see that the year is still 2021. A good idea would be to insert code that grabs the year automatically from the current date.
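As a minimal illustration of the idea, the year can be read from the current date when the footer is generated. The footer wording and the function name `footer_text` are hypothetical; the actual email template is not known.

```python
from datetime import date


def footer_text(owner="Alteryx", today=None):
    """Build a footer line whose year follows the current date."""
    # `today` can be passed in for testing; otherwise use the system date.
    today = today or date.today()
    return f"© {today.year} {owner}"
```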

Good afternoon, everyone. I hope you are all well.

I believe this suggestion could be applied to both the Input Data tool and the Text To Columns tool.

Some columns are open text fields that are filled in by internal departments here at the company. We have already tried to agree that characters which are often used as delimiters should not be used in these fields. However, I thought it better to look for a solution on the tool side, to avoid any errors of this kind.

The proposal is to make it possible to isolate a column that contains these special characters, so that Alteryx does not interpret them as delimiters, which makes it skip columns and misaligns the entire report.

Thank you and best regards,

Thiago Tanaka
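One common convention for this problem, outside Alteryx, is to wrap the free-text field in quotes so that delimiters inside it are not treated as column breaks. The sketch below uses Python's standard `csv` module; the sample data and the semicolon delimiter are made up for illustration.

```python
import csv
import io

# Hypothetical sample: the "comment" field contains the delimiter itself.
raw = 'id;comment;status\n1;"late; customer called";open\n2;ok;closed\n'

# With a quote character set, delimiters inside quotes stay in the field.
rows = list(csv.reader(io.StringIO(raw), delimiter=";", quotechar='"'))

for row in rows:
    print(row)
```

Every row keeps three columns here because the quoted comment is read as a single field. This only works if the source system quotes the free-text field when exporting.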

Good afternoon, everyone. I hope you are well.

My idea/suggestion is an improvement to the "Text To Columns" tool (Parse), where we can split columns using delimiter characters.

Currently, the split is not applied to the header, so other means are needed: treating the header as an ordinary row and then promoting it back to a header, or handling the header separately.

It would be useful if the Text To Columns tool itself offered the option to split the header column in the same way.

Thank you and best regards,

Thiago Tanaka
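The requested behavior amounts to splitting the header line with the same delimiter as the data lines and then using the resulting pieces as column names. A small sketch of that logic, with a hypothetical function name and a pipe delimiter chosen only for the example:

```python
def split_with_header(lines, delimiter="|"):
    """Split every line on the delimiter and use the first line as header."""
    if not lines:
        return []
    # The header is split exactly like the data rows.
    header, *data = [line.split(delimiter) for line in lines]
    # Pair each data value with the header name in the same position.
    return [dict(zip(header, row)) for row in data]
```

For example, `split_with_header(["name|city", "Ana|Lisboa"])` pairs `name` with `Ana` and `city` with `Lisboa`, with no separate step to handle the header.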

Hello everyone, I hope you are well.

I would like to suggest an improvement for common, known Alteryx errors.

When we run a workflow and an error is flagged in the results history at the end, it is usually not possible to understand exactly what needs to be corrected to fix the problem.

The suggestion is to turn the error flagged in the history into a link, so that the person can click the error and be directed to a forum page or documentation that makes it easier for the user to solve the problem. This would be similar to the examples that show how the tools can be used.

Thank you and best regards,

Thiago Tanaka

The Basic Data Profile tool cannot handle files larger than about 40 MB and 33 fields. When I add the 34th field, and the file size stays at 40 MB (Browse tool rounding), it breaks.

I'm trying to get the count of non-nulls for the "Empl Current" field. Adding the 34th field drops the non-null count down from the correct 25,894 to 26, and if I add more fields, the count of non-nulls drops to zero.

The Basic Data Profile tool is configured with a 10 million limit on exact count and 100,000 limit on unique values.

The whole point of the BDP tool is to get one's hands around large data files that are too big to manually inspect, so this tiny limit is really a problem.

I often have to cache my workflow at certain points to do further development/analysis since the run time is so long. I can't express how frustrating it is when I need to edit a Formula tool that's about 2 tools behind the cache and the entire cache is lost when I edit it. Why can't the cache be kept up to the tool that was edited?

Performance profiles work at the tool level. When I want to evaluate the performance of a group of tools, I have to click on them one at a time, log the performance, and calculate manually. I want to be able to click on a container full of tools or lasso some myself and view the granular and subtotaled performance profile.
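The manual calculation described above could look something like the sketch below. It assumes the per-tool timings have already been collected by hand into `(container, tool, milliseconds)` tuples; that input shape and the function name are hypothetical and do not reflect any Alteryx export format.

```python
from collections import defaultdict


def subtotal_by_container(tool_timings):
    """Sum tool run times per container and give each container's share.

    `tool_timings` is a list of (container, tool_name, milliseconds)
    tuples (hypothetical, hand-collected shape).
    """
    totals = defaultdict(float)
    for container, _tool, ms in tool_timings:
        totals[container] += ms

    grand_total = sum(totals.values())
    return {
        container: {
            "ms": ms,
            # Share of the whole workflow's measured time, in percent.
            "share_pct": 100 * ms / grand_total if grand_total else 0.0,
        }
        for container, ms in totals.items()
    }
```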
