Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!

Submission Guidelines- Community

- :

- Community

- :

- Participate

- :

- Ideas

- :

- Designer Desktop: Hot Ideas

Featured Ideas

Hello,

After used the new "Image Recognition Tool" a few days, I think you could improve it :

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?

Question : do you in the future allow the user to choose between CPU or GPU usage ?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

Thank you again.

Kévin VANCAPPEL

When packaging a workflow, or uploading to a Server environment the ability to manage the assets which need to be included is critical, particularly in more complex solutions which may have numerous dependencies.

The asset management display should be modified to present two column with the first showing just the file name and extension of the asset, and the second column can then show the full path of the asset. This easy change would would prevent the need for scrolling left and right to see the file name when longer paths are utilized.

An alternative approach would be to allow the window to be resizable so the user could see everything without the need to scroll.

The ability to filter/sort the assets by type would also be useful with the following categories: Macros (.yxmc), Data Files (supported file types from file input screen), Other Assets.

-

Enhancement

-

UX

Currently when debug mode is entered in analytic apps and macros, the direct inputs to the app/macro when the error occurred are hardcoded into a workflow in debug mode, so that errors can be more easily detected.

However, inputs into analytic apps also create global variables which can be used in the more code-heavy aspects of Alteryx such as the Formula Tool. These are not updated in the same way which can cause workflows to break in debug mode - it would be really helpful if global variables could be updated in the same way as the inputs into tools are.

-

Category Apps

-

Enhancement

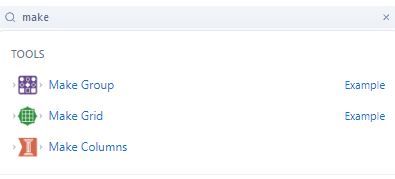

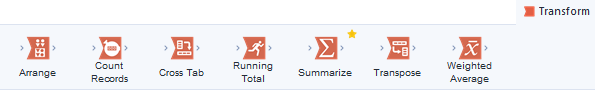

I was just responding to a post about the Make Columns tool, and I noticed that there is not an example workflow for this tool built into Designer. It is also missing from the Transform category, so I never think of it.

-

Documentation

-

Enhancement

Changing the Macro Input tool in an existing macro is dangerous and can result in unmapped fields or lost connections in workflows using the macro. For example, we have a widely used macro for which we'd like to change the name of an input field, change it's default type from Date to DateTime, make it optional while keeping other fields mandatory. Currently, we cannot find a solution which would not require us to fix each workflow using the macro after changing it. We should be able to change the field names, field types (e.g. String to V_WString, Date to DateTime), select optional fields and do other modifications to Macro Input without having to update each workflow using the macro. The new Macro Input UI could be enhanced with a window similar to that of Select tool's. Technically, the Macro Input fields could have a unique ID by which they would be recognised in workflows, so the field names would just be aliases that could be changed without losing the mapping. In summary, we are restricted to our initial setup of Macro Input and it is very complicated to change it afterwards, especially if the macro is used widely.

-

Category Interface

-

Enhancement

Apologies if this has been suggested already - did a search and didn't see anything similar.

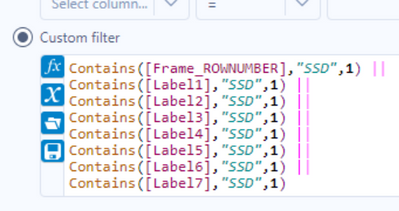

This is a quality of life/UX idea. The search functionality in the results pane essentially does a 'contains' search on all of the columns (see below screenshots for the filter inserted by the 'apply data manipulations button). As I build workflows and profile the data, it'd be helpful if I could click one or more columns and limit the search bar to just those fields.

Right now, depending on the dataset I could get rows returned by the search due to the search term appearing in columns that aren't relevant. To workaround this I could add select tools to limit the columns or do more robust filters in a filter tool, but having it built in would be very helpful.

-

Enhancement

-

UX

The more python and R development I do the more I want to use the shortcutes [CTRL] + [ENTER] to run my workflow,

Is it possible to add this as a second way to run the workflow?

I'm thinking its going to have to have a new shortcut anyways with cloud as [CTRL] + [R] would refresh the page! :D

Asking for a friend :D

-

Enhancement

-

Scheduler

Hello all,

A few weeks ago Alteryx announced inDB support for GBQ. This is an awesome idea, however to make it run, you should use Oauth2 Authentication means GBQ API should be enabled. As of now, it is possible to use Simba ODBC to connect GBQ. My idea is to enhance the connection/authentication method as we have today with Simba ODBC for Google BigQuery and support inDB. It is not easy to implement by IT considering big organizations, number of GBQ projects and to enable API for each application. By enhancing the functionality with ODBC, this will be an awesome solution.

Thank you for voting

Albert

-

Category In Database

-

Enhancement

When I import an Excel file in to Alteryx I get an error: “shared strings root=x:sst” and Alteryx cannot read the file.

I can work around this by manually opening and saving the excel before importing it into Alteryx but this is not ideal, especially considering the automation implications.

I believe this may be happening because the XLSX generated by the source of the report has a prefix “x:” in all the tags in the Shared String XML embedded in Excel. See: https://learn.microsoft.com/en-us/office/open-xml/working-with-the-shared-string-table

Essentially, it would appear Alteryx is not able to read generated Excel sheets which has the prefix "x:" (e.g. from a bot). The second file which has been opened and saved in Excel manually can be read by Alteryx correctly.

Example of file as exported from ”BOT”:

How the same file looks once it is manually opened and saved:

Ideally Alteryx would read the file as is, i.e. with the "X:SST" tag seen above as having to manually open and save the excel before it can be read is rather clunky.

Thanks!

-

Enhancement

-

Scheduler

I think the undo/redo capabilities in Alteryx could be greatly improved. Here is an idea that I think would be beneficial...

I'd like to see which exact tools are affected by my undo/redo actions. An idea was suggested a couple years ago to move your location on the canvas, but that was not added to the roadmap. Instead, is it possible to add the tool ID to the undo menu so that it is obvious which tool each line is detailing?

This is the current debug menu that shows your previous actions:

When a tool is created, the ID can be displayed in this menu, but this is not shown when a change is made to an existing tool. My suggestion is that the menu would say:

4. Change Sort (3) Properties

This same change should be made in the Edit dropdown menu.

-

Enhancement

-

UX

Would be nice to have a way to cache-uncache all inputs or a selected group of tools. Caching and Uncaching workflows with many input tools or slow data-read tools gets to be a bit cumbersome. Would be a nice QoL improvement :)

I looked around for something like this but didn't see a solution, so thought I'd recommend. Please let me know if something like this exists already natively in designer desktop.

-

Enhancement

-

UX

Hi there,

When creating a database connection - Alteryx's default behaviour is to create an ODBC DSN-linked connection.

However DSN-linked connections do not work on a large server env - because this would require administrators to create these DSNs on every worker node and on every disaster recovery node, and update them all every time a canvas changes.

they are also not fully safe becuase part of the configuration of your canvas is held in the DSN - and so you cannot just rely on the code that's under version control.

So:

Could we add a feature to Alteryx Designer that allows a user to expand a DSN into a fully-declared conneciton string?

In other words - if the connection string is listed as

- odbc:DSN=DSNSnowFlakeTest;UID=Username;PWD=__EncPwd1__|||NEWTESTDB.PUBLIC.MYTESTTABLE

Then offer the user the ability to expand this out by interrogating the ODBC Connection manager to instead have the fully described connection string like this:

odbc:DRIVER={SnowflakeDSIIDriver};UID=Username;pwd=__EncPwd1__;authenticator=Snowflake;WAREHOUSE=compute_wh;SERVER=xnb27844.us-east-1.snowflakecomputing.com;SCHEMA=PUBLIC;DATABASE=NewTestDB;Staging=local;Method=user

NOTE: This is exactly what users need to do manually today anyway to get to a DSN-less conneciton string - they have to craete a file DSN to figure out all the attributes (by opening it up in Notepad) and then paste these into the connection string manually.

Thanks all

Sean

-

Admin Settings

-

Category Developer

-

Enhancement

-

User Settings

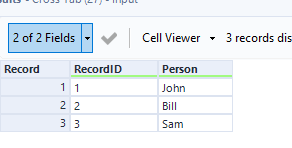

Cross tab automatically alphabetizes the column headers this can be a little awkward when unioning on column position later on. Would be nice to have this as an optional feature through a tick box on the tool.

-

Enhancement

-

UX

We aren't getting a huge amount of help from support on this, so I'm posting this idea to raise awareness for the product teams responsible for the Salesforce connectors and the embedded Python environment.

This post from user Dubya describes the issue in detail:

I have a workflow with several salesforce tools in it, which works fine on my machine. But we need another alteryx user in our office to be able to access, run and maintain the workflow too, via their machine and copy of alteryx designer.

However we're finding that the salesforce inputs and outputs can only be authenticated on one machine at a time.

When the other new user opens the original workflow from the shared network location, the salesforce tools display an error "Salesforce Input (1): {'error': 'invalid_grant', 'error_description': 'authentication failure'}" and the tools fail to load any data. But we can see the full query in the tool and we can even set the custom query option and validate the query successfully, which suggests the source is being correctly connected to and queried, but we just cant run the tool.

The only way to run the tool successfully is to change the credentials and re-authenticate the tool. However this then de-authenticates the original machine, and when we open up the workflow on there and try to run ying the workflow brings back the same error.

We've both tried this authentication back and forth on our own machines and each time one of us re-authenticates, it de-authenticates the other, leading to it triggering the error.

Can someone help explain what's going on and how to fix it, as this doesn't bode well for our collaboration.

We're both running:

The latest build of version of designer 2021.2 (original machine also running desktop automation)

Salesforce Input Tool v4.1.0

Salesforce Output Tool v1.3.0

My response here identifies that this is a problem for our organization as well:

We're experiencing the same issue. It appears to be related to how the tool handles password and security token decryption. I've found that when you modify the related registry entry from "true" to "false", you can see in the tool's xml that the encrypted password and security token are still in there. I'm not sure what else is going on behind the scenes beyond that, but that ought to be addressable by the product teams handling the Salesforce connectors and the Python installation embedded in Designer.

The only differences in our environment compared to u/Dubya's are that we're running on 2020.4 and attempting to use Salesforce Input Tool v4.2.4.

This is a must have for anyone who needs the ability to share workflows among multiple users. This is part of a series of problems that these updated connectors have been plagued with since introducing them years ago, and no one at Alteryx seems to care enough to truly fix the problems. Salesforce is a core system for our organization, so having tools that utilize the latest version of Salesforce's APIs is very important to us. The additional features that the Input tool provides are welcome, but these bugs have to be sorted out in order for us to extract any kind of value out of them. If the "deprecated" Salesforce tools were ever to be removed from Designer while there are issues with the "new" connectors, we would have no choice other than to never upgrade Designer/Server again and be forced to look for another product to serve as our ETL platform.

Please, please, please address this.

Hello,

After used the new "Image Recognition Tool" a few days, I think you could improve it :

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, allow the tool to use black & white images (I wanted to test it on the MNIST, but the tool tells me that it necessarily needs RGB images) ?

Question : do you in the future allow the user to choose between CPU or GPU usage ?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

Thank you again.

Kévin VANCAPPEL

-

Enhancement

-

Machine Learning

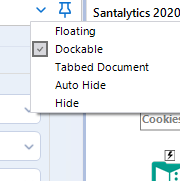

I set up my canvas how I want it, but I will sometimes undock or auto-hide the canvas windows (Results, Configuration, etc.). My suggestion is to add a Locked Dock as a selection that will allow for resizing, but not undocking.

-

Enhancement

-

UX

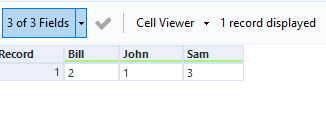

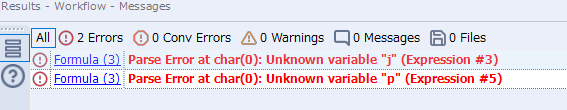

I want to jump to expression #3 of formula (3), when I see following error message. Now I can jump to formula (3), but only expression #1 is opened, not #3. If I have 30 expressions, it is hard to find #20 in 30s.

-

Enhancement

-

UX

Hi currently if you use the cross tab tool and the names of the new fields should have special characters they end up being replaced in the new headers with underscores "_", and then need to be updated in someway. It would be great if this was all done in the tool. In other words the new headers have the special characters as desired

-

Category Transform

-

Enhancement

Need a way to highlight lines whether that means right-clicking and selecting a color or what-not, but just having the lines become black & BOLD doesn't cut it. It's not easy on the eyes. If I could click this line/connector and make it bright green that would be ideal and then I can see where it connects better when zooming out.

-

Enhancement

-

UX

Instead of using the arrows, I think it would be nice to be able to drag and drop the questions to rearrange them in the Interface Designer. This would go more hand in hand with the drag and drop experience of Alteryx.

Additionally, when a lot of interface tools are on the canvas, Designer really slows down if you need to rearrange the order of the tools in the Interface Designer. I would like to see if there is any way that this can be sped up.

Thanks!

-

Enhancement

-

UX

When building API calls within Alteryx there are a few common steps required

1) Build out the URI for the API call (base URL plus any query parameters)

2) Deal with authentication, such as basic authentication requires taking a key and secret, base 64 encoding and passing this into the tool

3) parsing the results out and processing these downstream

For this idea I am specifically focusing on step 3 (but it would be great to have common authentication methods in-built within the download tool (step 2)!).

There are common steps required to parse out the results, such as using Filter (to check for a 200 response), JSON parse, text to columns and then cross tab to get the results into a readable format. These will all be common steps anyone who has worked with APIs will be familiar with:

This is all fine for a regular user to quickly add in and configure these tools. However there is no validation here for the JSON result being as expected, which when embedding an API into a batch macro or analytic app means it can easily fail.

One example of a failure which I've recently come across is where the output JSON doesn't have all fields (name:value pairs) depending the json response. For example using the UK Companies House API, when looking at the ceased to act field at this endpoint - https://developer-specs.company-information.service.gov.uk/companies-house-public-data-api/resources... the ceased to act field only appears in the results if a person has actually ceased to act. This is important if you have downstream tools such as a formula to create a field [Active] where you have:

IF ISNull([ceased_to_act]) THEN "Active" ELSE "Ceased to Act" ENDIFHowever without modification the macro / app will error if any results are returned where there is not this field.

A workaround is to add in the Crew Ensure Fields or union on a list of fields, to ensure that the Cease to Act field is present in the output for all API calls. But looking at some other tools it would be good if an expected Schema could be built in to the download tool to do this automatically.

For example in Power Automate this is achieved as follows:

I am a big advocate of not making things unnecessarily complicated. Therefore I would categorise this as an ease of use feature to improve the experience of working with APIs within Alteryx and make APIs (as load of integrations are API based) accessible to as many users as possible.

-

API SDK

-

Category Developer

-

Enhancement

-

UX

- New Idea 291

- Accepting Votes 1,790

- Comments Requested 22

- Under Review 167

- Accepted 55

- Ongoing 8

- Coming Soon 7

- Implemented 539

- Not Planned 111

- Revisit 59

- Partner Dependent 4

- Inactive 674

-

Admin Settings

20 -

AMP Engine

27 -

API

11 -

API SDK

220 -

Category Address

13 -

Category Apps

113 -

Category Behavior Analysis

5 -

Category Calgary

21 -

Category Connectors

247 -

Category Data Investigation

79 -

Category Demographic Analysis

2 -

Category Developer

209 -

Category Documentation

80 -

Category In Database

215 -

Category Input Output

645 -

Category Interface

240 -

Category Join

103 -

Category Machine Learning

3 -

Category Macros

153 -

Category Parse

76 -

Category Predictive

79 -

Category Preparation

395 -

Category Prescriptive

1 -

Category Reporting

199 -

Category Spatial

81 -

Category Text Mining

23 -

Category Time Series

22 -

Category Transform

89 -

Configuration

1 -

Content

1 -

Data Connectors

968 -

Data Products

3 -

Desktop Experience

1,551 -

Documentation

64 -

Engine

127 -

Enhancement

343 -

Feature Request

213 -

General

307 -

General Suggestion

6 -

Insights Dataset

2 -

Installation

24 -

Licenses and Activation

15 -

Licensing

13 -

Localization

8 -

Location Intelligence

80 -

Machine Learning

13 -

My Alteryx

1 -

New Request

204 -

New Tool

32 -

Permissions

1 -

Runtime

28 -

Scheduler

24 -

SDK

10 -

Setup & Configuration

58 -

Tool Improvement

210 -

User Experience Design

165 -

User Settings

81 -

UX

223 -

XML

7

- « Previous

- Next »

- Shifty on: Copy Tool Configuration

- simonaubert_bd on: A formula to get DCM connection name and type (and...

-

NicoleJ on: Disable mouse wheel interactions for unexpanded dr...

- haraldharders on: Improve Text Input tool

- simonaubert_bd on: Unique key detector tool

- TUSHAR050392 on: Read an Open Excel file through Input/Dynamic Inpu...

- jackchoy on: Enhancing Data Cleaning

- NeoInfiniTech on: Extended Concatenate Functionality for Cross Tab T...

- AudreyMcPfe on: Overhaul Management of Server Connections

-

AlteryxIdeasTea

m on: Expression Editors: Quality of life update

| User | Likes Count |

|---|---|

| 5 | |

| 3 | |

| 3 | |

| 3 | |

| 2 |