Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> at least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool, it is certainly perfectible, but very simple to use, and I sincerely think that it will allow a greater number of people to understand the many use cases made possible thanks to image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Often, when we use the Filter tool and select the Custom filter option, we are required to write conditions using "IF", "OR" and so on.

I was hoping that some suggestions or hints could be embedded in the custom filter for those of us who have no experience even with basic coding.

- Category Preparation
- Desktop Experience

The English-language ASCII sequence is consistent with the order shown in the following:

blank ! " # $ % & ' ( ) * + , - . / 0 1 2 3 4 5 6 7 8 9 : ; < = > ? @

A B C D E F G H I J K L M N O P Q R S T U V W X Y Z [ ] ^ _

a b c d e f g h i j k l m n o p q r s t u v w x y z { } ~

I would find it very useful to have the ability to use a dictionary sort in these tools.
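To illustrate the difference between the ASCII order above and a dictionary sort, here is a minimal Python sketch. It is not Alteryx functionality; `dictionary_sort` is a hypothetical helper name, and the tie-breaking rule (uppercase first when two words differ only by case) is an assumption.

```python
def dictionary_sort(values):
    """Sort strings ignoring case, falling back to plain ASCII order for ties."""
    # casefold() removes case differences; the original string breaks ties.
    return sorted(values, key=lambda s: (s.casefold(), s))


names = ["banana", "Apple", "cherry", "apple", "Banana"]

# Plain ASCII order: every uppercase letter sorts before every lowercase one.
print(sorted(names))

# Dictionary-style order: words are grouped regardless of case.
print(dictionary_sort(names))
```
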

- Category Preparation
- Desktop Experience

This could be a simple add-on to the Sample tool or possibly a separate tool. It would let you select which row is the header row (using its field values as the headers) and/or which row the data starts on. This is particularly useful when a file has extra rows containing report titles and other information. The tool could also select rows to skip, i.e. blank rows in matrices or rows that do not contain data.
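The requested behavior can be sketched outside Alteryx in a few lines of Python. This is only an illustration under assumptions: `read_with_header_row` is a hypothetical function name, the sample text is made up, and rows are counted from zero.

```python
import csv
import io


def read_with_header_row(text, header_row, skip_blank=True):
    """Parse CSV text, taking field names from the row at index `header_row`."""
    rows = list(csv.reader(io.StringIO(text)))
    header = rows[header_row]
    records = []
    for row in rows[header_row + 1:]:
        # Optionally skip rows that are empty or contain only empty cells.
        if skip_blank and not any(cell.strip() for cell in row):
            continue
        records.append(dict(zip(header, row)))
    return records


# Two title rows and a blank row precede the real header (row index 3).
sample = "Quarterly Report\nInternal use only\n\nRegion,Sales\nNorth,10\n,\nSouth,20\n"
for record in read_with_header_row(sample, header_row=3):
    print(record)
```
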

- Category Preparation
- Desktop Experience

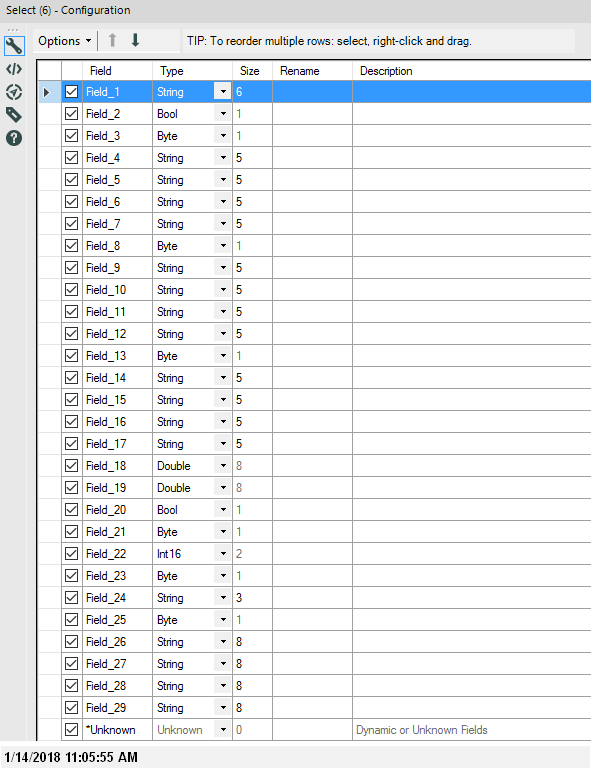

Sometimes the field names for an imported file are very unhelpful. Consider the attached image as an example. Even though the AUTO FIELD tool has been applied to this imported CSV file, it is still necessary to inspect the source data before assigning field names and descriptions.

MY SUGGESTION: insert a data preview column in the Configuration view between SIZE and RENAME. This data preview column would show the first row of data but a scroll function would allow the user to advance to the next or previous record within this view. This feature would enhance the productivity of analysts in the data preparation phase of their work.

- Category Preparation
- Desktop Experience

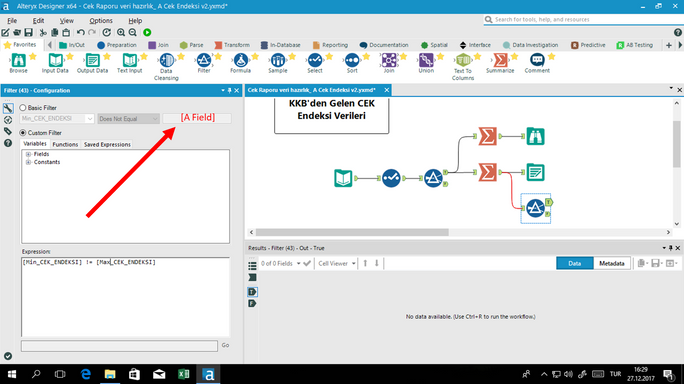

Looking forward to being able to select one of the available fields from a drop-down menu when populating a Filter tool...

- Category Preparation
- Desktop Experience

I ❤️ Alteryx but sometimes I miss some of the abilities Tableau has to visualize the data output while designing.

When working with data containing many fields, I find myself changing the order of columns, running the workflow, and repeating... over and over until I get the right "look".

I know you can right-click and drag in the configuration pane of the select tool but I was thinking that it would be great if we could also do the same in the results pane and see the changes live.

- Category Preparation
- Desktop Experience

In many of our tools, before processing any file, we create a backup and move it to a backup location with a datetime stamp.

Could we have an option like "CreateBackup" with a timestamp in the input and output tools?
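As a rough illustration of what such an option might do, here is a Python sketch. The function name `create_backup`, the `name_YYYYMMDD_HHMMSS.ext` naming pattern, and copying rather than moving the file are all assumptions, not anything Alteryx provides.

```python
import shutil
from datetime import datetime
from pathlib import Path


def create_backup(source, backup_dir, now=None):
    """Copy `source` into `backup_dir`, adding a timestamp to the file name."""
    source = Path(source)
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    # `now` can be injected for testing; it defaults to the current time.
    stamp = (now or datetime.now()).strftime("%Y%m%d_%H%M%S")
    target = backup_dir / f"{source.stem}_{stamp}{source.suffix}"
    shutil.copy2(source, target)  # copy2 also preserves file metadata
    return target
```

Calling `create_backup("input.csv", "backup")` before a run would leave a copy such as `backup/input_<timestamp>.csv` next to the original.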

- API SDK
- Category Developer
- Category Input Output
- Category Preparation

When several fields are selected, checking or unchecking a check box should apply to all selected fields.

When changing the Type, the change should also apply to all selected fields.

There should also be shortcut keys for doing this.

This is a really common task and would save a lot of time. I'm actually really surprised it does not already exist in Alteryx Designer.

- Category Preparation
- Desktop Experience

When adding multiple integer fields together in a formula tool, if one of the integer values is Null, the output for that record will be 0. For example, if the formula is [Field_A] + [Field_B] + [Field_C], if the values for one record are 5 + Null + 8, the output will be 0. All in all, this makes sense, as a Null isn't defined as a number in any way - it's like trying to evaluate 5 + potato. However, there is no error or warning indicating that this is taking place when the workflow is run, it just passes silently.

Is there any way to have this behavior reported as a warning or conversion error when it happens? Again, the behavior itself makes sense, but it would be great to get a little heads up when it's happening.
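The kind of warning being requested can be sketched in Python as follows. This is an illustration only: `add_fields` is a hypothetical helper, and the fallback result of 0 simply mirrors the behavior described in this post.

```python
import warnings


def add_fields(*values, null_result=0):
    """Add the values; if any is null (None), warn and return `null_result`."""
    if any(v is None for v in values):
        # Surface the silent case instead of letting it pass unnoticed.
        warnings.warn(
            "Null value found in addition; returning fallback result",
            stacklevel=2,
        )
        return null_result
    return sum(values)


print(add_fields(5, 2, 8))     # all values present: a normal sum
print(add_fields(5, None, 8))  # a null is present: warns and returns the fallback
```
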

- Category Preparation
- Desktop Experience

Hello all,

Within the databases that I work in, I often find that there is duplicated data for some columns, and when using a unique tool, I have little control of what is deemed the unique record and which is deemed the duplicate.

A fantastic addition would be the ability to select which record you'd like to keep based on the type + a conditional. For example, if I had:

| Field 1 | Field 2 | Field 3 |
|---|---|---|
| 1 | a | NULL |
| 2 | a | 15 |

I would want to keep the record with the non-null field (or non-zero if I cleansed it). It'd be something like "Select record where [Field 1] is greatest and [Field 3] is not null" (which just sounds like a summarize tool + filter, but I think you can see the wider application of this)

I know that you can either change the sort order beforehand, use a summarize tool, or go Unique > Filter duplicates > Join > Select records -- but I want the ability to just have a conditional selection based on a variety of criteria as opposed to adding extra tools.
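A conditional "keep this record" rule of that kind could look like the following Python sketch. The helper name `keep_preferred` and the scoring function are assumptions made for illustration; the data is the two-row example from this post.

```python
def keep_preferred(records, key_field, prefer):
    """Keep one record per key value: the one with the highest `prefer` score."""
    best = {}
    for rec in records:
        key = rec[key_field]
        if key not in best or prefer(rec) > prefer(best[key]):
            best[key] = rec
    return list(best.values())


rows = [
    {"Field 1": 1, "Field 2": "a", "Field 3": None},
    {"Field 1": 2, "Field 2": "a", "Field 3": 15},
]

# Prefer a non-null Field 3 first, then the greatest Field 1.
result = keep_preferred(
    rows,
    key_field="Field 2",
    prefer=lambda r: (r["Field 3"] is not None, r["Field 1"]),
)
print(result)
```
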

Anyways, that's just an idea! Thanks for considering its application!

Best,

Tyler

- Category Preparation
- Desktop Experience

When I use the Data Cleansing tool, I want all the fields to be cleansed. Selecting the ALL choice selects all the fields; however, if new fields are added later, they are not automatically included. Perhaps an UNKNOWN choice could be added, as in the SELECT tool.

Thanks!

- Category Preparation
- Desktop Experience

It would be nice to have a tool which can scale/standardize/normalize a variable or set of variables to a selected range, e.g. (0 to 1), (0 to pi), (-1 to 1), etc., or to a user-specified range.

Although it's possible to do this using the Formula tool, which involves creating new summary variables and several steps, I think it's worth having as a tool, given that the whole philosophy of Alteryx is to keep things easy and quick.
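For reference, the min-max rescaling that such a tool would perform can be sketched in Python. `rescale` is a hypothetical name, and mapping a constant column to the lower bound is an assumption made to avoid division by zero.

```python
def rescale(values, new_min=0.0, new_max=1.0):
    """Linearly map `values` so the smallest becomes new_min and the largest new_max."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column has no spread; map everything to the lower bound.
        return [new_min for _ in values]
    span = hi - lo
    return [new_min + (v - lo) * (new_max - new_min) / span for v in values]


print(rescale([10, 20, 30]))          # scaled to the range 0 to 1
print(rescale([10, 20, 30], -1, 1))   # scaled to the range -1 to 1
```
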

- Category Preparation
- Desktop Experience

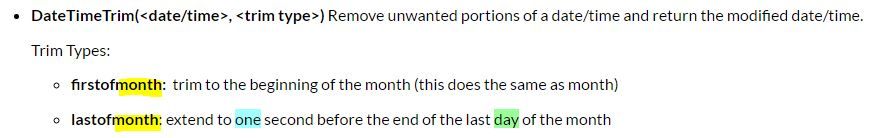

It was great to find the DateTimeTrim function when trying to identify future periods in my data set.

It would be even better if, in addition to "firstofmonth" and "lastofmonth", there could be "firstofyear" and "lastofyear" options that would find, for instance, Jan 1, xxxx plus one second and Jan 1, xxxx minus one second (Dec 31, xxxx 23:59:59), respectively.

I'm not sure if time down to the second would even be needed, but precision down to the day would be.
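One possible reading of the requested "firstofyear" and "lastofyear" behavior is sketched below in Python. The function names are hypothetical, and the exact boundaries (midnight on January 1, and one second before the next year begins) are assumptions about what the options should return.

```python
from datetime import datetime, timedelta


def first_of_year(dt):
    """Return midnight on January 1 of dt's year."""
    return dt.replace(month=1, day=1, hour=0, minute=0, second=0, microsecond=0)


def last_of_year(dt):
    """Return the last second of dt's year (one second before the next year)."""
    start_of_next_year = first_of_year(dt).replace(year=dt.year + 1)
    return start_of_next_year - timedelta(seconds=1)


moment = datetime(2021, 6, 15, 10, 30)
print(first_of_year(moment))
print(last_of_year(moment))
```
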

- Category Data Investigation
- Category Preparation
- Category Time Series
- Desktop Experience

It would help if there were an option to test the outcome of a formula during the build itself, rather than creating dummy workflows with dummy data to test it.

For instance, there could be a dynamic window that generates input fields based on those selected in the actual 'Formula'; one could provide test values there and click a 'Test' button to check the output within the tool itself.

This would also be very handy when writing big/complex formulas involving regular expressions, so that a user can test her formula without having to switch screens to third-party on-the-fly testing tools, run the entire original workflow, or create test workflows.

- Category Data Investigation
- Category Preparation
- Category Transform
- Desktop Experience

It would be really useful to have a Join function that updated an existing file (not a database, but a flat or yxdb file).

The rough SAS equivalents are the UPDATE and MODIFY statements:

http://support.sas.com/documentation/cdl/en/basess/58133/HTML/default/viewer.htm#a001329151.htm

The goal would be to have a join function that would allow you to update a master dataset's missing variables from a transaction database and, optionally, to overwrite values on the master data set with current ones, without duplicating records, based on a common key.

The use case is you have an original file, new information comes in and you want to fill in the data that was originally missing without overwriting the original data (if there is data on the transaction file for that variable). In this case only missing data is changed.

Or, as a separate use case, you had original data which has now been updated and you do want to overwrite the original data. In this case any variable with new values is updated, and variables without new values are left unchanged.

Why this is needed: if you don't have an Oracle-type database, it is difficult to do this task inside of Alteryx, and information changes over time (customers buy new products, customers update profiles, or you have a file that is missing some data and want to merge it with a file that has better data for the missing values but worse data for existing values because it is from a different, e.g. older, time period). In theory you could do this with "IF isnull() Then replace" statements, but you'd have to build them for each variable and have a long data flow to capture the correct updates. Right now it is much faster to do it in SAS and import the updated file back into Alteryx.
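The two use cases above (fill only missing values, or overwrite with newer values) can be sketched in Python as follows. This is neither SAS's UPDATE/MODIFY nor an Alteryx tool; `update_master`, the `overwrite` flag, and the sample records are assumptions for illustration, and if several transactions share a key the last one wins.

```python
def update_master(master, transactions, key, overwrite=False):
    """Update master records from transactions that share the same key.

    With overwrite=False only missing (None) master values are filled in;
    with overwrite=True any non-missing transaction value replaces the master value.
    """
    updates = {t[key]: t for t in transactions}
    result = []
    for row in master:
        merged = dict(row)
        for field, new_value in updates.get(row[key], {}).items():
            if new_value is None:
                continue  # a missing transaction value never changes the master
            if overwrite or merged.get(field) is None:
                merged[field] = new_value
        result.append(merged)
    return result


master = [
    {"id": 1, "phone": None, "city": "Paris"},
    {"id": 2, "phone": "555-0100", "city": None},
]
transactions = [{"id": 1, "phone": "555-0199", "city": "Lyon"}]

print(update_master(master, transactions, key="id"))
print(update_master(master, transactions, key="id", overwrite=True))
```
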

- Category Preparation
- Desktop Experience

Idea:

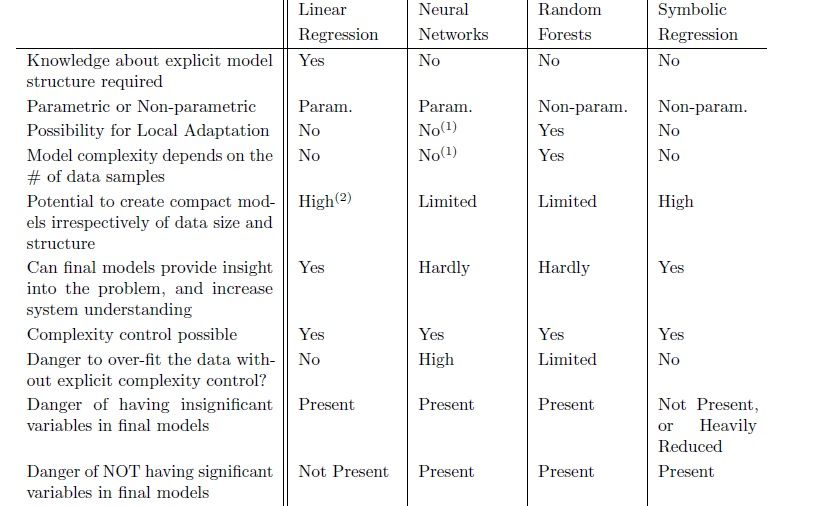

In forecasting and in commercial/SME risk scoring there is a need to try a vast number of algebraic equations, which is a very cumbersome process. Let's add symbolic regression as a new competitive capability.

Rationale:

Summations, ratios, power transforms and all combinations of the like need to be tested as new variables for a forecasting or prediction model. Doing this by hand is a tough and long business... And there is always a possibility of skipping a valuable combination.

Symbolic regression is a novel technique for automatically generating algebraic equations with the use of genetic programming.

In every evolution, a variable is selected and checked to see whether the equation is discriminative of the target variable at hand. In each subsequent step, frequently observed variables are more likely to be selected.

Benefit for clients:

This method produces variables mainly with nonlinear relationships. It is a technique that will help in corporate/commercial/SME risk modelling, such that powerful risk models are generated from a short list of B/S and P/L based algebraic equations.

There are potential use cases in algorithmic trading as well...

There are 3 very interesting world problems solved with symbolic regression here.

A very relevant thesis by Sean Wouter is attached as a PDF document for your reading pleasure...

R side of things:

I've found the Rgp package for genetic programming, here is a link.

Competition:

I haven't seen anything similar in SAS or SPSS, but there is this: http://www.nutonian.com/products/eureqa/

Also there is Bruce Ratner's page

- Category Predictive
- Category Preparation
- Desktop Experience

When using ./*.csv for an input (relative path and wildcard), a preview does not show in the Properties area of the input tool. Can this be added?

- Category Preparation
- Desktop Experience


Solution: Please have the Multi-Field Formula tool behave as the Formula tool does by persisting the field Description values.

Thanks!

John Hollingsworth

- Category Preparation
- Desktop Experience

Anytime you create a formula in the formula tool, you get a data preview based on the values in the first row of data. However, if you have a complex "IF c THEN t ELSEIF c2 THEN t2 ELSE f ENDIF" formula then the data combination that gives a TRUE result will likely exist on another row. Therefore, you need to run the workflow, or place a filter tool upstream to isolate the specific row, to test if the formula result is correct.

It would be easier if you could select the Input anchor of the Formula tool and filter the data in the results window to isolate the row in question, so that the data preview would be based on that filtered data set. I believe this would save a lot of time in the workflow development phase.

- Category Preparation
- Desktop Experience
- Enhancement
