Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by listing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
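To make the second point concrete, here is a minimal Python sketch of what "an equivalent number of images for each label" could mean. It is not how the Image Recognition tool works; the function name `balance_by_label` and the (file, label) pair layout are assumptions made for illustration. It simply downsamples every label to the size of the smallest one.

```python
import random
from collections import defaultdict

def balance_by_label(samples, seed=0):
    """Downsample so that every label keeps the same number of items.

    `samples` is a list of (item, label) pairs (hypothetical layout).
    Each label keeps as many items as the rarest label has.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    if not by_label:
        return []
    target = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    balanced = []
    for label, items in by_label.items():
        for item in rng.sample(items, target):
            balanced.append((item, label))
    return balanced

# Three "cat" images and one "dog" image become one of each.
balanced = balance_by_label([
    ("a.png", "cat"), ("b.png", "cat"), ("c.png", "cat"), ("d.png", "dog"),
])
```

Downsampling throws data away, so a real tool might prefer oversampling or augmentation; this only shows the balancing idea.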

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help more people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Please add a toggle for Dark Mode as Alteryx, after all these years of using it, is burning out my retinas.

The OS and most apps have a Dark Mode theme so flipping back to a bright white canvas is very jarring. I tried to adjust the canvas colors in a more muted way but never can get it to work satisfactorily and still be as easy to read as the retina burning default.

Hello all,

As of today, when you want to read or create a file on Apache Spark for Databricks, you have only two choices: CSV and Avro.

However, the Parquet file type is clearly missing:

- it's faster

- it's better for storage

- it's standard and already supported as an Alteryx input/output and for HDFS, so it doesn't seem hard to add here.

Best regards,

Simon

Hello,

I think I have never written an easier idea: the tooltip for the Run Workflow button should show the keyboard shortcut (Ctrl+R). So simple, so intuitive.

Best regards,

Simon

I am aware that an Auto-Documenter tool is available in the Gallery, but that has not been maintained since 2020.

It would be great if Alteryx could have that as an added feature to the Designer as an option for end-users to utilize.

The breakdown of it can be done via XML parsing as such:

<Nodes>: The tools used and their configuration

<Connections>: The connections between the tools

<Properties>: Workflow properties

Right now, the current workaround is for users to export their XML, and the internal Alteryx development team has to build another workflow that reads the XML accordingly + parses it to fit what is needed.
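As a rough illustration of that XML breakdown, here is a minimal Python sketch using the standard library's `xml.etree.ElementTree`. The sample document is hypothetical and heavily simplified: only the `<Nodes>`, `<Connections>` and `<Properties>` sections come from the description above, while the child elements and attributes (`Node`, `ToolID`, `GuiSettings`, `Plugin`, `Origin`, `Destination`, `MetaInfo`, `Name`) are assumptions that should be checked against a real exported workflow.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified workflow XML (assumed structure, not a real export).
SAMPLE = """
<AlteryxDocument>
  <Nodes>
    <Node ToolID="1"><GuiSettings Plugin="Input" /></Node>
    <Node ToolID="2"><GuiSettings Plugin="Filter" /></Node>
  </Nodes>
  <Connections>
    <Connection>
      <Origin ToolID="1" Connection="Output" />
      <Destination ToolID="2" Connection="Input" />
    </Connection>
  </Connections>
  <Properties>
    <MetaInfo><Name>Demo workflow</Name></MetaInfo>
  </Properties>
</AlteryxDocument>
"""

def summarize_workflow(xml_text):
    """Collect tools, tool-to-tool links and the workflow name."""
    root = ET.fromstring(xml_text.strip())
    tools = {}
    for node in root.findall("./Nodes/Node"):
        gui = node.find("GuiSettings")
        tools[node.get("ToolID")] = gui.get("Plugin") if gui is not None else None
    links = []
    for conn in root.findall("./Connections/Connection"):
        origin = conn.find("Origin")
        destination = conn.find("Destination")
        if origin is not None and destination is not None:
            links.append((origin.get("ToolID"), destination.get("ToolID")))
    name = root.findtext("./Properties/MetaInfo/Name", default="")
    return {"name": name, "tools": tools, "links": links}

summary = summarize_workflow(SAMPLE)
```

A built-in documenter would presumably go much further (tool annotations, configuration details), but the three sections map naturally onto a tool list, a connection list and workflow-level metadata.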

It would be better for Alteryx to build something more robust, and perhaps even include some elements of AiDIN which they are promoting now.

Sometimes I want to set up a filter to compare the values in two fields in my data set. The basic filter option would be much more powerful and configuration would be quicker if this option allowed this.

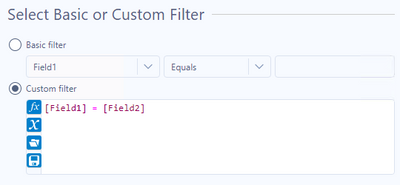

For example, currently I must use a custom filter to check if Field1 and Field2 are equal:

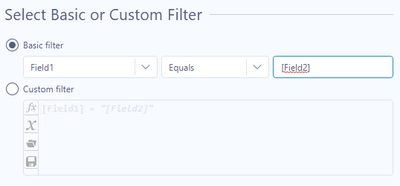

I would love to have the option to either use a static value in the basic filter (as you can now) or select a field name from a dropdown:

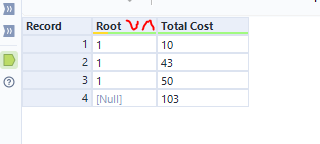

Adding an up and down arrow on each column would make it easier and quicker to sort columns than the drop-down menu or the Sort tool.

We have discussed, on several occasions and in different forums, the importance of giving Alteryx order-of-execution control, conditional execution, design patterns and even orchestration.

I presented this idea some time ago, but someone asked me if it was posted, and since it was not, I’m putting it here so you can give some feedback on it.

The basic concept behind this idea is to allow us (users) to have:

- Design Patterns
  - Repetitive patterns to be reusable.
    - Select after an Input tool
    - Drop Nulls
    - Get not matching records from join

- Conditional execution
  - Tell Alteryx to execute some logic if something happens.
    - Record count
    - Errors
    - Any other condition

- Order of execution
  - Need to tell Alteryx what to run first, what to run next, and so on…
    - Run this first
    - Execute this portion after previous finished
    - Wait until “X” finishes to execute “Y”

- Orchestration
  - Putting all together

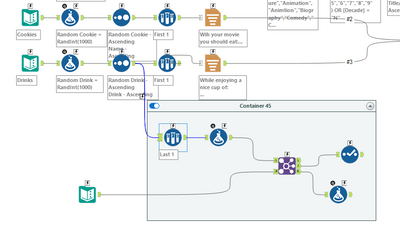

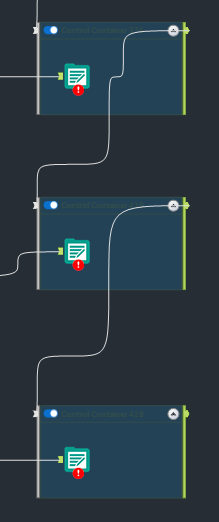

This approach involves some functionalities that are already within the product (like exploiting Filtering logic, loading & saving, caching, blocking among others), exposed within a Tool Container with enhanced attributes, like this example:

The approach is to extend Tool Container’s attributes.

This proposition uses actual functionalities we already have in Designer.

So, basically, the Tool Container gets ‘superpowers’ with the addition of some capabilities (accepting input data, saving the contents within the container to create a design pattern or a very commonly used sequence of tools chained together, outputting data, running the tools included in the container, etc.), plus a configuration screen like:

- Refers to the actual interface of the Tool Container.

- Provides the ability to disable a Container (and all tools within) once it runs.
  - Idea based on actual behavior: when we enable or disable a Tool Container from an Interface tool.

- Input and output data to the container’s logic: allows picking up and/or saving files from a particular container, to be used in later containers or to persist data as a partial result of the entire workflow’s logic (for example, updating a dimensions table).
  - Based on actual behavior: Input & Output Data, Cache, Run Command tools, and some macros like Prepare Attachment.

- Order of Execution: can be Absolute or Relative. For an Absolute run, we take the containers in order, executing their contents. If Relative, we can configure which container should run before and after, block until the previous container finishes, or wait until this container finishes before executing the next container in the list.
  - Based on actual behavior: Block Until Done, Cache, Find Replace, some Interface Designer capabilities (for chained apps, for example), macros’ basic behaviors.

- Conditional Execution: in order to conditionally execute other containers, conditions must be evaluated. In this case, the idea is to evaluate conditions within the data, interface tools, or the occurrence of errors/warnings.
  - Based on actual behavior: Filter tool, some Interface tools, Test tool, Cache, Select.

- Notes: documentation text that will appear automatically inside the container, with options to place it above or below the tools, or hide it.
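The Relative order of execution and the conditional execution described in this list can be sketched in a few lines of Python. This is only an illustration of the concept, not a proposal for how Designer would implement it: `run_containers`, the container callables and the `conditions` mapping are all hypothetical names. It relies on the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to turn "run after" rules into a valid order.

```python
from graphlib import TopologicalSorter

def run_containers(containers, run_after, conditions=None):
    """Run containers in dependency order, skipping those whose condition fails.

    containers: name -> callable taking the results so far
    run_after:  name -> set of container names that must finish first
    conditions: name -> callable(results) -> bool (optional)
    """
    conditions = conditions or {}
    results = {}
    executed = []
    for name in TopologicalSorter(run_after).static_order():
        condition = conditions.get(name)
        if condition is not None and not condition(results):
            continue  # conditional execution: this container is skipped
        results[name] = containers[name](results)
        executed.append(name)
    return executed, results

containers = {
    "Load": lambda results: [1, None, 3],
    "Clean": lambda results: [v for v in results["Load"] if v is not None],
    "Report": lambda results: f"{len(results['Clean'])} records",
    "Alert": lambda results: "no records found",
}
run_after = {
    "Load": set(),
    "Clean": {"Load"},
    "Report": {"Clean"},
    "Alert": {"Clean"},
}
# "Alert" only runs when the record count of "Clean" is zero.
conditions = {"Alert": lambda results: len(results["Clean"]) == 0}

executed, results = run_containers(containers, run_after, conditions)
```

In this sketch a skipped container does not block its successors; whether downstream containers should also be skipped is a design decision the idea leaves open.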

That concludes a brief introduction to the idea. Taking it a little further, it would even allow something like an Orchestration layout, where users can drag and drop containers or patterns and orchestrate them into a solution, as we can do with the Visual Layout tool or the Interactive Chart tool:

I'm looking forward to hearing what you think.

Best

When working on a complex, branching workflow, I sometimes go down paths that do not give the correct result, but I want to keep them because they help me determine the correct path. I do not want these branches to run, as they slow down the workflow or may produce errors/warnings that muddy debugging. These paths can be several tools long and are not easily put in a container and disabled. Similar to the Cache and Run Workflow feature, which prevents upstream tools from refreshing, I am suggesting a Disable All Downstream Tools feature. In the workflow below, all the tools in the container could be disabled by right-clicking the first Sample tool in the container.

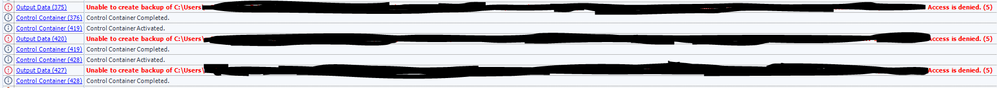

Allow users to add a delay on the connection between Control Container tools. I frequently have to rerun workflows that use Control Containers because the workflow has not registered that a file was properly closed when moving from one Output tool to the next. The network drives haven't caught up and show that the file is still open while the workflow has already moved on to the next Control Container. Users should have an option in the Configuration screen to add a delay before the signal is sent for the next container to run.
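As a sketch of what such a delay could do, the Python below polls until a file can be reopened instead of sleeping for a fixed time. It is purely illustrative: `wait_until` and `file_is_released` are hypothetical helpers, and whether a file that is still open on a network drive actually rejects a read/write open depends on the operating system and the share.

```python
import time

def wait_until(is_ready, timeout=30.0, interval=0.5,
               sleep=time.sleep, clock=time.monotonic):
    """Poll `is_ready()` until it returns True or `timeout` seconds pass."""
    deadline = clock() + timeout
    while True:
        if is_ready():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)

def file_is_released(path):
    """Assumption: a file still held open elsewhere fails a read/write open."""
    try:
        with open(path, "rb+"):
            return True
    except OSError:
        return False

# Example: simulate a file that becomes available on the third check.
checks = []
def fake_ready():
    checks.append(1)
    return len(checks) >= 3

ready = wait_until(fake_ready, timeout=5.0, interval=0.0)
```

A fixed delay in the Configuration screen would be simpler; polling with a timeout avoids waiting longer than necessary.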

In the past I was able to use a CReW tool (Wait a Second) in conjunction with the Block Until Done tool to add the delay in manually. But I have since converted all of my workflows over to Control Containers. Since then half of the times the workflow has run I encounter the following errors.

Currently there is a function in Alteryx called FindString() that finds the first occurrence of your target in a string. However, sometimes we want to find the nth occurrence of our target in a string.

FindString("Hello World", "o") returns 4 as the 0-indexed count of characters until the first "o" in the string. But what if we want to find the location of the second "o" in the text? This gets messy with nested find statements and unworkable beyond looking for the second or third instance of something.

I would like a function added such that

FindNth("Hello World", "o", 2) Would return 7 as the 0-indexed count of characters until the second instance of "o" in my string.
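The requested behavior can be sketched in Python for comparison. `find_nth` below is a hypothetical helper, not an Alteryx function; it returns -1 when there are fewer than `n` occurrences, which mirrors Python's `str.find` and is an assumption about what FindNth should do in that case.

```python
def find_nth(text, target, n):
    """Return the 0-indexed position of the n-th occurrence of `target`.

    `n` is 1-based; returns -1 if there are fewer than n occurrences.
    """
    if n < 1 or not target:
        return -1
    position = -1
    for _ in range(n):
        # Resume the search just after the previous match.
        position = text.find(target, position + 1)
        if position == -1:
            return -1
    return position

first = find_nth("Hello World", "o", 1)   # 4, same as FindString
second = find_nth("Hello World", "o", 2)  # 7
third = find_nth("Hello World", "o", 3)   # -1, there is no third "o"
```

Because the search resumes one character after the previous match, overlapping occurrences are counted; a built-in function would need to state which convention it follows.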

I would like to propose three feature enhancements for the Cross Tab tool under the Transform tool category.

1. Bringing Concat Unique functionality, which is an idea that is currently in Coming Soon status.

2. Adding Start and End in addition to Separator, similar to the Concatenate Properties found in the Summarize tool.

3. Changing the Default Size from 2048 to 1073741823 (max V_WString size). It is common for especially new users to ignore the truncation errors and potentially miss important data that may need to be processed downstream.

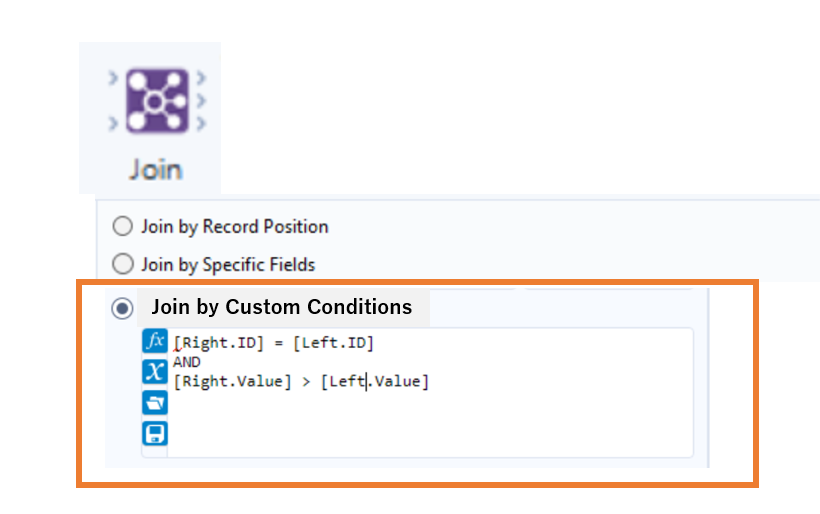

I would like a feature that enables joining on custom conditions. Currently, the Join tool only allows equality conditions on specific fields or record position; in SQL, however, we can join data on much more flexible conditions, such as:

SELECT TableA.id FROM TableA INNER JOIN TableB ON TableA.id = TableB.id AND TableA.value > TableB.value

Of course, this can be achieved by combining the Append Fields and Filter tools, but Append Fields is quite an expensive operation computationally, and it generates many unnecessary records, which becomes a problem when handling a huge dataset.

I suppose this kind of flexible condition could be specified with the expression editor, so the configuration window for this feature would look like the image below: one more radio button option, plus an expression editor similar to the one used in the Filter tool.
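To illustrate why a conditional join can be cheaper than appending every record and then filtering, here is a minimal Python sketch. It is not how the Join tool works internally; `join_on_condition` is a hypothetical function and the rows are plain dictionaries. The equality part of the condition is handled with a lookup on `id`, and the extra condition is only evaluated for rows that already share a key, so the full cross product is never built.

```python
from collections import defaultdict

def join_on_condition(left, right, key, condition):
    """Inner join on `key`, keeping only pairs where `condition(l, r)` holds."""
    index = defaultdict(list)
    for row in right:
        index[row[key]].append(row)
    return [
        (l, r)
        for l in left
        for r in index.get(l[key], [])  # only rows with a matching key
        if condition(l, r)
    ]

table_a = [{"id": 1, "value": 10}, {"id": 2, "value": 5}, {"id": 3, "value": 7}]
table_b = [{"id": 1, "value": 3}, {"id": 2, "value": 9}, {"id": 4, "value": 1}]

# Equivalent of: ON TableA.id = TableB.id AND TableA.value > TableB.value
pairs = join_on_condition(table_a, table_b, "id",
                          lambda a, b: a["value"] > b["value"])
matched_ids = [a["id"] for a, _ in pairs]
```

A condition without any equality part (for example, only `TableA.value > TableB.value`) would still need a different strategy, such as sorting both sides first.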

Any positive/negative feedback on my idea would be appreciated. Thank you for your attention!

Hello --

Many times, I want to summarize data by grouping it, but to really reduce the number of rows, some data needs to be concatenated.

The problem is that some of the grouped data is repeated, so concatenating it doubles or triples the values, or otherwise produces a very large concatenated field.

As an example:

| Name | State |
|---|---|
| A | New York |
| A | New York |
| A | New Jersey |
| B | Florida |
| B | Florida |
| B | Florida |

The above, if we concatenate by State would look like:

| Name | State |
|---|---|
| A | New York, New York, New Jersey |
| B | Florida, Florida, Florida |

What I propose is a new option called Concatenate Unique so I would get:

| Name | State |
|---|---|
| A | New York, New Jersey |
| B | Florida |

This would prevent us from having to use a Regex formula to make the column unique.
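The proposed option can be expressed as a short Python sketch using the example data above. `concat_unique` is a hypothetical name; the sketch keeps the first occurrence of each value per group and preserves the original order, which is an assumption about how Concatenate Unique should behave.

```python
def concat_unique(rows, separator=", "):
    """Group (name, state) rows by name and join the distinct states."""
    groups = {}
    for name, state in rows:
        seen = groups.setdefault(name, [])
        if state not in seen:  # keep only the first occurrence
            seen.append(state)
    return {name: separator.join(states) for name, states in groups.items()}

rows = [
    ("A", "New York"), ("A", "New York"), ("A", "New Jersey"),
    ("B", "Florida"), ("B", "Florida"), ("B", "Florida"),
]
result = concat_unique(rows)
# {"A": "New York, New Jersey", "B": "Florida"}
```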

Thanks,

Seth

For companies that have migrated to OneDrive/Teams for data storage, employees need to be able to dynamically input and output data within their workflows in order to schedule a workflow on Alteryx Server and avoid building batch macros.

With many organizations migrating to OneDrive, a Dynamic Input/Output tool for OneDrive and SharePoint is needed.

- The existing Directory and Dynamic Input tools only work with UNC paths and cannot be leveraged for OneDrive or SharePoint.

- The existing OneDrive and SharePoint tools do not have a dynamic input or output component.

- Users have to build workarounds and custom macros for a common problem/challenge.

- Users have to map the OneDrive folders to their machine (and server if published to the Gallery)

- This option generates a lot of maintenance, especially on Server, to free up space consumed by the local version when outputting the data.

The enhancement should have the following components:

OneDrive/SharePoint Directory Tool

- Ability to read either one folder with the option to include/exclude subfolders within OneDrive

- Ability to retrieve Creation Date

- Ability to retrieve Last Modified Date

- Ability to identify file type (e.g. .xlsx)

- Ability to read Author

- Ability to read last modified by

- Ability to generate the specific web path for the files

OneDrive/SharePoint Dynamic Input Tool

- Receive the input from the OneDrive/SharePoint Directory Tool and retrieve the data.

Dynamic OneDrive/SharePoint Output Tool

- Dynamically write the output from the workflow as individual files in a specific directory

- Dynamically write the output to multiple tabs on the same file within the directory.

- Dynamically write the output to a new folder within the directory

Hello all,

Apache Doris ( https://doris.apache.org/ ) is a modern data warehouse with a lot of ambition. It's probably the next big thing.

You can read the full doc here https://doris.apache.org/docs/get-starting/what-is-apache-doris but to sum it up, it aims to be THE reference solution for OLAP, claiming even better performance than ClickHouse, DuckDB or MonetDB. Even benchmarks from the ClickHouse team seem to agree.

Best regards,

Simon

Ability to color the connector lines to symbolize a path or data. This would help when you have multiple sources into a Join to determine that a path is still the same set of data when you have multiple paths created.

Hello,

As of today, we can't choose the exact file format for Hadoop when writing/creating a table. There are several file formats, each with its own specifics.

Therefore, I suggest adding the ability to choose this file format:

- by default, on the connection (in-db connection or in-memory alias)

- on the writing tool itself.

Best regards,

Simon

When the Source data stream has no records, the Append Fields tool issues a Warning that reads something like this:

Append Fields (823) There are no records present in the source.

I can imagine many situations when this issue should be flagged as a Warning. However, I have use cases when both the Source and Target data streams are expected to be empty. Because it is a common, expected scenario, I do not want it flagged as a Warning for the user.

My Idea: provide another option to suppress warnings for this situation.

Perhaps it could be a standalone checkbox, for example:

[x] Suppress Warning when both source and target streams are empty

Alternatively, the tool currently has 3 options to manage warnings or errors related to "too many" records. Perhaps this could be added as a 4th option to the dropdown list, although that would necessitate changing the label slightly.

Hello all,

As of today, you must set which database (e.g. Snowflake, Vertica...) you connect to in your in-db connection alias. This is fine, but I think we should also be able to define the version (the release) of the database. Databases gain many new features that Alteryx could use to improve user experience, performance and security (e.g. in Hive 3.0, there is a catalog that could be used in the Visual Query Builder instead of slowly querying each schema).

I am thinking of a menu with the following choices:

- default (legacy), stating which version Alteryx uses by default for that database

- autodetect (with a query launched every time you run the workflow, when possible). If the detected version is newer than the last supported version, show a warning message and run with the settings of the last supported version.

- manually setting a release (to avoid launching the version query every time). The choices would be every version supported by Alteryx.
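The three choices above amount to a small decision rule, sketched here in Python. Everything in it is hypothetical: the function name `resolve_version`, the representation of versions as tuples, and the fallback to the default settings when detection fails are assumptions for illustration, not Alteryx behavior.

```python
def resolve_version(mode, supported, default, detected=None, manual=None):
    """Pick the database release whose settings should be used.

    Returns (version, warning); `warning` is None when nothing is unusual.
    Versions are tuples such as (3, 0) so they compare naturally.
    """
    if mode == "default":
        return default, None
    if mode == "manual":
        if manual not in supported:
            raise ValueError(f"unsupported release: {manual}")
        return manual, None
    if mode == "autodetect":
        # Highest supported release that is not newer than the detected one.
        candidates = [v for v in supported
                      if detected is not None and v <= detected]
        if not candidates:
            return default, "version not detected or too old; using default settings"
        chosen = max(candidates)
        warning = None
        if detected > max(supported):
            warning = f"detected {detected} is newer than last supported {chosen}"
        return chosen, warning
    raise ValueError(f"unknown mode: {mode}")

supported = [(2, 0), (3, 0)]
version, warning = resolve_version("autodetect", supported,
                                   default=(2, 0), detected=(4, 0))
# version is (3, 0) and a warning is returned
```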

Best regards,

Simon

Hello all,

As of now, there are two very distinct kinds of connection:

- in-memory alias

- in-database alias

Every single time I use an in-database alias, I have to create the same one for in-memory, since some operations cannot be done in-database (such as pre-SQL or interface tools).

What does that mean for us:

- more complex setup, operations, training and tests

- inefficient workflows that have to deal with two kinds of alias.

What I propose:

- a single "connection alias" that can be used for either in-db or in-memory,

- one place to configure it

- whether it runs in-db or in-memory depends on the tools you use

Best regards,

Simon
