Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by displaying the dimensional constraints next to each of the pre-trained models;

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images);

> and, finally, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).

Question: do you plan to let the user choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will allow more people to understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Tools within a workflow need to be able to run in parallel wherever applicable.

For example: extracting 10 million rows from one source and 12 million rows from a different source in order to blend them.

Currently, tools execute in the order in which they were dragged onto the canvas. Hence Source1 runs first, Source2 second, and then the Join.

Here Source1 and Source2 are completely independent, so they could run in parallel, which would reduce the workflow execution time.

Execution time is crucial when you have a tight data-loading window.

Hopefully Alteryx considers this in the next release!
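A minimal sketch of how a scheduler could find tools that are safe to run side by side, assuming the workflow is available as a vector of tool names plus a table of connections. The `execution_levels()` function and this data layout are hypothetical, not Alteryx internals. It is Kahn's topological sort processed level by level: every tool in one wave depends only on tools from earlier waves.

```r
# Hypothetical sketch: group workflow tools into "waves". Every tool in a wave depends
# only on tools from earlier waves, so tools within one wave could run in parallel.
# This is Kahn's topological sort, processed one level at a time.
execution_levels <- function(nodes, edges) {
  from <- as.character(edges$from)   # upstream tool of each connection
  to   <- as.character(edges$to)     # downstream tool of each connection
  # Number of unfinished upstream tools for every tool
  indegree <- setNames(integer(length(nodes)), nodes)
  for (tool in to) indegree[[tool]] <- indegree[[tool]] + 1L
  levels <- list()
  remaining <- nodes
  while (length(remaining) > 0) {
    ready <- remaining[indegree[remaining] == 0L]
    if (length(ready) == 0) stop("the workflow graph contains a cycle")
    levels[[length(levels) + 1L]] <- ready
    remaining <- setdiff(remaining, ready)
    # Finishing the ready tools releases one dependency of each downstream tool
    for (tool in to[from %in% ready]) indegree[[tool]] <- indegree[[tool]] - 1L
  }
  levels
}

nodes <- c("Source1", "Source2", "Join", "Output")
edges <- data.frame(from = c("Source1", "Source2", "Join"),
                    to   = c("Join",    "Join",    "Output"))
lv <- execution_levels(nodes, edges)
str(lv)  # wave 1: Source1 and Source2; wave 2: Join; wave 3: Output
```

Each wave could then be handed to a worker pool (for example with `parallel::parLapply`), while the waves themselves still run one after another.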

When I'm organizing my workflow, I sometimes want to move a whole tool container on the canvas. Currently, the only way to do this is to find the header, then select and drag it. When the ends of the container are off screen, it can be hard to judge how far I need to move the container to get it where I want relative to the tools around it. It would be nice to be able to select anywhere on the tool container and drag it around (possibly by holding the right mouse button while dragging, so that the current tool selection behaviour isn't affected).

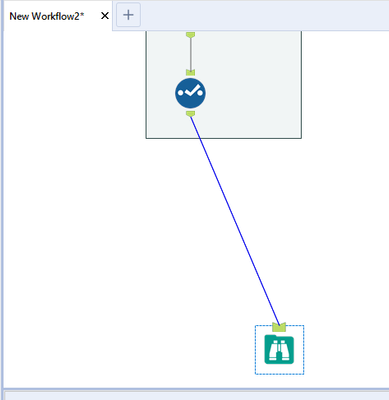

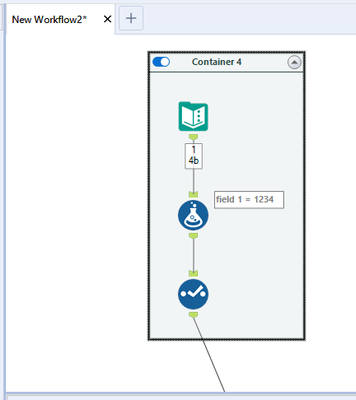

In the (simplified) images below, you'll see that I want my tool container to vertically align just above the browse tool:

I can't currently see the top of the tool container to move it, though, so I must first navigate to that part of the workflow to select the header.

I noticed in the ODBC driver log that Alteryx ignores the type of database I specify. It tests every kind of database to find the right one and only THEN runs the queries that retrieve the metadata.

Here is an example. I have chosen a Hive In-DB connection. In the Simba logs, I find these lines:

```text
Mar 01 11:37:21.318 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select USER(), APPLICATION_ID() from system.iota
Mar 01 11:37:22.863 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select USER as USER_NAME from SYSIBM.SYSDUMMY1
Mar 01 11:37:23.454 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from rdb$relations
Mar 01 11:37:23.546 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select first 1 dbinfo('version', 'full') from systables
Mar 01 11:37:23.707 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select #01/01/01# as AccessDate
Mar 01 11:37:23.868 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: exec sp_server_info 1
Mar 01 11:37:24.093 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select top (0) * from INFORMATION_SCHEMA.INDEXES
Mar 01 11:37:24.219 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: SELECT SERVERPROPERTY('edition')
Mar 01 11:37:24.423 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select DATABASE() as `database`, VERSION() as `version`
Mar 01 11:37:24.635 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from sys.V_$VERSION at where RowNum<2
Mar 01 11:37:25.230 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select cast(version() as char(10)), (select 1 from pg_catalog.pg_class) as t
Mar 01 11:37:25.415 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select NAME from sqlite_master
Mar 01 11:37:25.756 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select xp_msver('CompanyName')
Mar 01 11:37:26.156 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select @@version
Mar 01 11:37:26.376 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: select * from dbc.dbcinfo
Mar 01 11:37:26.522 INFO 5264 HardyDataEngine::Prepare: Incoming SQL: SELECT @@VERSION;
```

I can understand that Alteryx tries everything when it doesn't know the database type (e.g. in the in-memory Visual Query Builder), but here I have selected the Hive database, and I lose more than 5 seconds for nothing.
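A hypothetical sketch of the behaviour being asked for: probe only when the database type is unknown. `detect_database()` and `fake_run()` are illustrative stand-ins, and the mapping of four of the logged probe queries to database types is my own reading of the log, not documented Alteryx or Simba driver behaviour.

```r
# Hypothetical sketch: skip type detection when the user has already chosen the database.
# `probes` maps a database type to a query that only succeeds on that type;
# `run_query` stands in for whatever sends SQL over ODBC and returns TRUE on success.
detect_database <- function(selected_type, probes, run_query) {
  # The connection already says which database this is: no probe queries at all
  if (!is.na(selected_type)) return(selected_type)
  # Otherwise try each probe in turn; the first one that succeeds identifies the database
  for (type in names(probes)) {
    if (isTRUE(run_query(probes[[type]]))) return(type)
  }
  NA_character_
}

# Four probe queries copied from the log above (type labels are an assumption)
probes <- list(
  oracle     = "select * from sys.V_$VERSION at where RowNum<2",
  postgresql = "select cast(version() as char(10)), (select 1 from pg_catalog.pg_class) as t",
  sqlite     = "select NAME from sqlite_master",
  teradata   = "select * from dbc.dbcinfo"
)

calls <- 0L
fake_run <- function(sql) {
  calls <<- calls + 1L                        # count round trips to the "database"
  grepl("sqlite_master", sql, fixed = TRUE)   # pretend only the SQLite probe succeeds
}
detect_database("hive", probes, fake_run)         # "hive", without sending any probe
detect_database(NA_character_, probes, fake_run)  # "sqlite", after 3 probe queries
```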

This has probably been mentioned before, but in case it hasn't....

The Dynamic Input tool is useful for bringing in multiple files / tabs, but quickly stops being fit for purpose if schemas / fields differ even slightly. The common solution is to put a Dynamic Input tool inside a batch macro and set this macro to 'Auto Configure by Name', so that it waits until all files have been read and can then output knowing what it has received.

It's a pain to create these batch macros for relatively straightforward and regular processes - would it be possible to have this 'Auto Configure by Name' as an option directly in the dynamic input tool, relieving the need for a batch macro?
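To show roughly what 'Auto Configure by Name' achieves, here is an R equivalent, assuming each file has already been read into a data frame: columns are matched by name, and any column a file lacks is filled with NA. `union_by_name()` is a hypothetical helper, not an Alteryx function.

```r
# Hypothetical helper: stack data frames whose columns differ, matching columns by name.
union_by_name <- function(tables) {
  all_cols <- unique(unlist(lapply(tables, names)))   # every column seen in any file
  aligned <- lapply(tables, function(df) {
    # Add each missing column, filled with NA
    for (col in setdiff(all_cols, names(df))) df[[col]] <- rep(NA, nrow(df))
    df[all_cols]                                       # same columns, same order everywhere
  })
  do.call(rbind, aligned)
}

# Illustrative data: the February file has an extra "region" column
jan <- data.frame(id = 1:2, amount = c(10, 20))
feb <- data.frame(id = 3L, amount = 5, region = "North")
combined <- union_by_name(list(jan, feb))
print(combined)
```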

Thanks,

Andy

Can you put a check box on the container title bar to make it easier to enable/disable containers in the process window? And can you make the minimize/maximize option for a container separate from enable/disable?

Hi all,

When debugging an error, we need to check the workflow tool by tool, in sequence, to understand what is really going on.

Sometimes the tools are miles away from each other. Imagine a gigantic workflow with a lot of connections going back and forth and wireless connections everywhere to help the workflow organization. Here is an example with more than 1300 tools:

My idea is to have a shortcut showing all the previous/next tools and by selecting the previous/next one you go directly to them.

Something like this:

What do you guys think about that?
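Under the hood, the list such a shortcut would show is simply the other end of each connection. A minimal sketch, assuming the workflow's connections (wired and wireless alike) are available as a from/to table. `tool_neighbours()` and the tool labels are hypothetical.

```r
# Hypothetical sketch: a tool's previous/next tools are the other ends of its incoming
# and outgoing connections; wireless connections count like any other wire.
tool_neighbours <- function(tool, connections) {
  list(previous  = unique(connections$from[connections$to == tool]),
       following = unique(connections$to[connections$from == tool]))
}

connections <- data.frame(
  from = c("Input (1)", "Input (2)", "Join (3)",   "Join (3)"),
  to   = c("Join (3)",  "Join (3)",  "Filter (4)", "Summarize (5)")
)
nb <- tool_neighbours("Join (3)", connections)
nb$previous   # "Input (1)" "Input (2)"
nb$following  # "Filter (4)" "Summarize (5)"
```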

Best,

Fernando Vizcaino

The following idea might not be as valuable as some of @SeanAdams' posts, but it would save this user precious fractions of a second. To add a tool, I leave the canvas with my mouse (point A), go up to the palette, select the tool and drag it down to the canvas. Sometimes I right-click and go through the menus to add the next tool, but generally I only go to that trouble when I'm inserting the tool in-stream. So here is my idea:

Double-click your NEXT tool and it "Alteryx-ly" appears on your canvas next to the highlighted (last) tool. Better yet, connect it! Now I can move from the palette directly to the configuration panel without having to move my mouse down to the canvas and then over to the configuration panel.

Hopefully, my friend @Hollingsworth will find this time-saving idea worthy of a star. Speed demons like @NicoleJohnson and @BenMoss might not need this turbo boost, but at my age it is worth the ask.

Cheers,

Mark

For in-DB use, please provide a Data Cleansing Tool.
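As a rough illustration of what an in-DB Data Cleansing tool might push down to the database, the hypothetical `cleanse_sql()` below builds a SELECT that trims text fields and replaces NULLs (blank for text, 0 for numbers), mirroring two of the in-memory tool's options. It assumes standard `TRIM`/`COALESCE` support and unquoted column names; real SQL dialects differ (Oracle, for instance, treats '' as NULL).

```r
# Hypothetical sketch of SQL an in-DB Data Cleansing tool could generate:
# trim text fields, replace NULLs (blank for text, 0 for numbers), pass other fields through.
cleanse_sql <- function(table, text_cols = character(0), numeric_cols = character(0),
                        other_cols = character(0)) {
  exprs <- c(
    other_cols,
    sprintf("COALESCE(TRIM(%s), '') AS %s", text_cols, text_cols),
    sprintf("COALESCE(%s, 0) AS %s", numeric_cols, numeric_cols)
  )
  sprintf("SELECT %s FROM %s", paste(exprs, collapse = ", "), table)
}

cat(cleanse_sql("customers", text_cols = "name", numeric_cols = "amount", other_cols = "id"))
# SELECT id, COALESCE(TRIM(name), '') AS name, COALESCE(amount, 0) AS amount FROM customers
```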

At our organization we are required to change our passwords every few months, which forces a change to my Tableau Server password. How does this relate to Alteryx? Well, every 90 days I have to change my password in the "Publish to Tableau Server Tool" for every one of my workflows. This is quite a cumbersome process that could be eliminated with AD (Active Directory) authentication.

If you dislike manually changing your password in each workflow that uses this tool, then "star" this post!

Alteryx hosting CRAN

Installing R packages in Alteryx has been a tricky issue with many posts over the years and it fundamentally boils down to the way the install.packages() function is used; I've made a detailed post on the subject. There is a way that Alteryx can help remedy the compatibility challenge between their updates of Predictive Tools and the ever-changing landscape that is open-source development. That way is for Alteryx to host their own CRAN!

The current version of Alteryx runs R 4.1.3, which is considered an 'old release', and there are over 18,000 packages on CRAN for this version of R. By the time you read this post, there is likely a newer version of one of these packages that its author has submitted to the R Foundation's CRAN, and there is a good chance that the newer version isn't compatible with an Alteryx tool that uses R. What if you need that package for a macro you've downloaded? How do you get the old, compatible version? This is where an Alteryx-hosted CRAN comes into its own.

Alteryx can host their own CRAN, one that is not updated by the many package authors over time, so the packages will remain unchanged and compatible with the released version of Predictive Tools. All we need to do as Alteryx users is point install.packages() at the Alteryx CRAN to get our new packages, like so:

```r
install.packages(pkg_name, repos = "https://cran.alteryx.com")
```

There is an R package for creating a CRAN-style repository, so Alteryx can get R to do the legwork for them. Here is one way of using the miniCRAN package:

```r
library(miniCRAN)
library(tools)

path2CRAN <- "/local/path/to/CRAN"
ver <- paste(R.version$major, strsplit(R.version$minor, "\\.")[[1]][1], sep = ".") # ver = 4.1
repo <- "https://cran.r-project.org" # R Foundation's CRAN

m <- available.packages(repos = repo) # a matrix of all packages and their metadata from repo
pkgs4CRAN <- m[, "Package"] # character vector of all packages from repo

# makes the local repo (binaries built for the R version running this script)
makeRepo(pkgs = pkgs4CRAN, path = path2CRAN, type = c("win.binary", "source"), repos = repo)

write_PACKAGES(paste(path2CRAN, "bin/windows/contrib", ver, sep = "/"), type = "win.binary") # creates the PACKAGES file for package binaries
write_PACKAGES(paste(path2CRAN, "src/contrib", sep = "/"), type = "source") # creates the PACKAGES files for package sources
```

It will create a directory structure that replicates the R Foundation's CRAN, but only for the R version that Alteryx uses (4.1/).

Alteryx can create the CRAN, host it somewhere meaningful (like https://cran.alteryx.com), update Predictive Tools to use the packages downloaded with the script above, and then release the new version of Predictive Tools and announce the CRAN. Users like you and me just need to tell the R Tool (for example) to install from the Alteryx repo rather than any others, which may have package dependency conflicts.
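A small sketch of the user side, assuming the repository really were published at the hypothetical https://cran.alteryx.com address proposed in this idea: setting the `repos` option once makes every later `install.packages()` call in the session use it, and base R's `contrib.url()` shows the sub-folders R will look in, which are the ones the `write_PACKAGES()` calls in the script fill.

```r
# Hypothetical repository URL taken from this idea; it is not a live CRAN mirror.
old <- options(repos = c(ALTERYX = "https://cran.alteryx.com"))

# Folders R derives from the repo URL for each package type
src_url <- contrib.url(getOption("repos"), type = "source")
bin_url <- contrib.url(getOption("repos"), type = "win.binary")
print(src_url)  # "https://cran.alteryx.com/src/contrib"
print(bin_url)  # ".../bin/windows/contrib/" followed by the running R's major.minor, e.g. 4.1

# install.packages(pkg_name)  # would now resolve against the Alteryx repo only
# options(old)                # restores the previous repos setting
```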

This is future-proof too. Let's say Alteryx decide to release a new version of Designer and Predictive Tools based on R 4.2.2. What do they do? Download R 4.2.2, run the above script, it'll create a new directory called 4.2/, update Predictive Tools to work with R 4.2.2 and the packages in their CRAN, host the 4.2/ directory to their CRAN and then release the new version of Designer and Predictive Tools.

Simple!

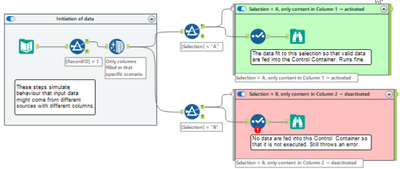

Sometimes, Control Containers produce error messages even if they are deactivated by feeding an empty table into their input connection.

(Note that this is a made up example of something which can happen if input tables might be from different sources and have different columns so that they need separated treatment.)

According to the product team, this is expected behaviour, since a selection does not allow zero columns to be selected. This may be true (though I have some doubts), but it is at least counter-intuitive. If this behaviour cannot be avoided entirely, I have a proposal that would improve the user experience without changing the entire workflow validation logic.

(The support engineer understands the point and has raised a defect.)

Instead of writing messages inside Control Containers directly to the log output (on screen, in logfile) and to mark the workflow as erroneous, I propose to introduce a message (message, warning, error) stack for tools inside Control Containers:

- When the configuration validation is executed:
  - Messages (messages, warnings, errors) produced outside of Control Containers are output to the screen log and to the log files (as today).
  - Messages (messages, warnings, errors) produced inside of Control Containers are not yet output but stored in a message stack.
- At the moment when it is decided whether a Control Container is activated or deactivated:
  - If the Control Container is activated: write the previously stored message stack for this Control Container to the screen and to the log output, and increase the error and warning counts accordingly.
  - If the Control Container is deactivated: delete the message stack for this Control Container (without reporting anything to the log and without increasing the error and warning counts).
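The proposal above can be sketched in a few lines of R. Everything here (`new_message_log()`, `report()`, `resolve_container()`, the container names and message texts) is hypothetical and only illustrates the buffering logic, not how the Alteryx engine is built.

```r
# Hypothetical sketch of the proposed message stack; none of these functions exist in Alteryx.
new_message_log <- function() {
  ml <- new.env()
  ml$log <- character(0)                   # what would appear on screen / in the log file
  ml$pending <- list()                     # held-back messages, one list per Control Container
  ml$counts <- c(error = 0L, warning = 0L)
  ml
}

# Write one message to the visible log and update the error/warning counts
emit <- function(ml, level, msg) {
  ml$log <- c(ml$log, paste0(level, ": ", msg))
  if (level %in% names(ml$counts)) ml$counts[[level]] <- ml$counts[[level]] + 1L
}

# Messages outside a Control Container are emitted at once; inside one they are stacked
report <- function(ml, level, msg, container = NA_character_) {
  if (is.na(container)) {
    emit(ml, level, msg)
  } else {
    ml$pending[[container]] <- c(ml$pending[[container]], list(c(level = level, msg = msg)))
  }
}

# Called once it is known whether the container is activated
resolve_container <- function(ml, container, activated) {
  if (activated) {
    for (m in ml$pending[[container]]) emit(ml, m[["level"]], m[["msg"]])
  }
  ml$pending[[container]] <- NULL          # the stack is discarded either way
}

ml <- new_message_log()
report(ml, "warning", "example warning outside any container")
report(ml, "error",   "example error inside CC1", container = "CC1")
report(ml, "warning", "example warning inside CC2", container = "CC2")
resolve_container(ml, "CC1", activated = FALSE)  # empty input: CC1's error is dropped
resolve_container(ml, "CC2", activated = TRUE)   # CC2 runs: its warning is reported now
print(ml$log)
print(ml$counts)
```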

This would result in a different sequence of messages than today (because everything inside activated Control Containers would be reported later than today). Since there’s no logical order of messages anyway, this would not matter. And it would avoid the apparently illogical case of deactivated Control Containers producing errors.

Hello,

In data science, Levenshtein and Jaro-Winkler distances are used to quantify the similarity between two strings.

Here are the Wikipedia pages:

https://en.wikipedia.org/wiki/Levenshtein_distance

https://en.wikipedia.org/wiki/Jaro%E2%80%93Winkler_distance

Note 1: the Levenshtein and Jaro distances are already used in the Fuzzy Match tool, so it shouldn't be a huge amount of work to include them in the Formula tool.

Note 2: there is a useful macro on the Gallery: https://gallery.alteryx.com/#!app/LevenshteinDistance/5c54701f826fd30988f02779

Note 3: some products already implement them, such as Apache Hive or Qlik Sense.
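For reference, both measures are short enough to sketch in R. These functions are my own illustrations, not the Fuzzy Match tool's implementation. Jaro-Winkler uses the common defaults of a 0.1 prefix scale and a prefix capped at 4 characters, without the optional boost threshold some variants apply. Base R's `utils::adist()` already computes the (generalized) Levenshtein distance, which gives a quick cross-check.

```r
# Levenshtein distance by dynamic programming: d[i + 1, j + 1] is the minimum number of
# single-character insertions, deletions and substitutions turning a[1..i] into b[1..j].
levenshtein <- function(a, b) {
  x <- strsplit(a, "")[[1]]
  y <- strsplit(b, "")[[1]]
  d <- matrix(0L, length(x) + 1L, length(y) + 1L)
  d[, 1] <- 0:length(x)
  d[1, ] <- 0:length(y)
  for (i in seq_along(x)) {
    for (j in seq_along(y)) {
      cost <- if (x[i] == y[j]) 0L else 1L
      d[i + 1L, j + 1L] <- min(d[i, j + 1L] + 1L,   # deletion
                               d[i + 1L, j] + 1L,   # insertion
                               d[i, j] + cost)      # substitution (or match)
    }
  }
  d[length(x) + 1L, length(y) + 1L]
}

# Jaro-Winkler similarity (1 = identical strings, 0 = nothing in common)
jaro_winkler <- function(a, b, p = 0.1) {
  x <- strsplit(a, "")[[1]]
  y <- strsplit(b, "")[[1]]
  n1 <- length(x); n2 <- length(y)
  if (n1 == 0 || n2 == 0) return(as.numeric(n1 == n2))
  window <- max(0L, max(n1, n2) %/% 2L - 1L)    # how far apart matching characters may be
  x_match <- logical(n1); y_match <- logical(n2)
  for (i in seq_len(n1)) {
    lo <- max(1L, i - window); hi <- min(n2, i + window)
    if (lo > hi) next
    for (j in lo:hi) {
      if (!y_match[j] && x[i] == y[j]) { x_match[i] <- TRUE; y_match[j] <- TRUE; break }
    }
  }
  m <- sum(x_match)
  if (m == 0) return(0)
  # Half the number of matched characters that appear in a different order
  half_transpositions <- sum(x[x_match] != y[y_match]) / 2
  jaro <- (m / n1 + m / n2 + (m - half_transpositions) / m) / 3
  l <- 0L                                        # common prefix length, capped at 4
  while (l < min(4L, n1, n2) && x[l + 1L] == y[l + 1L]) l <- l + 1L
  jaro + l * p * (1 - jaro)
}

levenshtein("kitten", "sitting")             # 3
utils::adist("kitten", "sitting")            # base R agrees: 3
round(jaro_winkler("MARTHA", "MARHTA"), 4)   # 0.9611
```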

Best regards,

Simon

Hi GUI Gang

At the moment, I have a beautifully formatted XLS with corporate branding, logos, filled cells, borders etc. The data from the Alteryx output needs to start in cell B6. I have tried outputting to this named range, but Alteryx destroys all the Excel cell formatting in the data block.

As a workaround suggested on the forums, many Alteryx users write to a hidden "Output" tab and then reference it with =Output!A1 in the formatted sheet. This looks messy to the users, who then go hunting for the hidden tab. Personally, I end up writing the workflow output to a temporary CSV file, opening that in Excel, selecting all, and pasting the values into the pretty Excel file.

This is fine for one file, but I need to split the output report block by a country field and do this hundreds of times at each month end.

Please can we have an output tool that does the same as my workaround: output directly from a workflow to a range in Excel without destroying the workbook's formatting.

Jay

Hello All,

I'm using the dynamic input tool for SQL requests in my Workflow (WF).

I'm using the "Replace a Specific String" option to replace elements in the SQL statement dynamically, depending on the results of previous tools, user input, etc.

So the statement looks like this:

```sql
select * from Schema_Name_xx where invoice_number = 'invoice_number_xx'
```

Since Schema_Name_xx is not a valid schema in the database, the statement (and therefore the validation) fails. It only works once Schema_Name_xx is replaced by e.g. Invoice_Data_Current; the same goes for the invoice number, where invoice_number_xx is replaced by e.g. 4711.

Therefore, validation makes no sense and will never succeed: the correct schema is only inserted into the SQL statement by the "Replace a Specific String" function when the WF is running.
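A minimal sketch of the replacement step and of a "validate only when the SQL is complete" rule, assuming placeholders are plain literal strings. `fill_placeholders()` and `should_validate()` are hypothetical helpers, not Designer settings.

```r
# Hypothetical helpers: fill literal placeholders the way "Replace a Specific String" does,
# and only attempt validation once no placeholder is left in the SQL.
fill_placeholders <- function(sql, replacements) {
  for (ph in names(replacements)) {
    sql <- gsub(ph, replacements[[ph]], sql, fixed = TRUE)   # literal, not regex
  }
  sql
}

should_validate <- function(sql, placeholders) {
  # TRUE only when none of the placeholder strings still appears in the statement
  !any(vapply(placeholders, grepl, logical(1), x = sql, fixed = TRUE))
}

template <- "select * from Schema_Name_xx where invoice_number = 'invoice_number_xx'"
placeholders <- c("Schema_Name_xx", "invoice_number_xx")
should_validate(template, placeholders)   # FALSE: skip validation at design time
sql <- fill_placeholders(template, c(Schema_Name_xx = "Invoice_Data_Current",
                                     invoice_number_xx = "4711"))
sql                                       # "select * from Invoice_Data_Current where invoice_number = '4711'"
should_validate(sql, placeholders)        # TRUE: the statement is now complete
```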

It would be great to be able to disable validation in the user settings or somewhere else in Designer; changing a config file would also be fine :-)

Please note: I'm not thinking about changing or disabling anything in the Alteryx Server settings (I'm not allowed to anyway ;-)).

Reason:

1. Speed: Validating a WF with SQL statements that don't work takes time (every time I save it), and sometimes I even get a timeout...

2. WF error entries: Each upload with a failed validation creates an entry in the WF result list, which makes it harder to separate them from the "real" WF errors...

Thanks & Best Regards,

Thomas

When using the Output Data tool, it would save me (and my cluttered organizational skills) a lot of effort if the workflow that wrote the file were saved as part of the yxdb metadata.

I've often had to search for the workflow that created a yxdb. I tend to use naming conventions to help me, but it would be easier if the workflow's file name and/or path were easy to find.

cheers,

mark

Please update the Render tool to allow users to name the Excel sheet for the output. Alteryx currently errors when using the same naming convention that works in the normal Output tool.

We see canvases every day where dozens of fields are brought into a canvas or a macro but never used, and this just creates slowness for no benefit.

Given that one of the selling features of Alteryx is the speed of processing - could we look at three improvements to the Alteryx engine & designer:

- Easiest: Keep track of every field brought in / created, and if a field is neither used by any tool nor part of an output, throw a warning at the end of the execution process.
  - For example: you bring in fields a, b and c, and you create fields d and e during the flow in Formula tools.
    - Field d is never used as an input to any filter or formula, and it doesn't appear in any output, so it's just waste.
    - Fields a and b are part of the output, so they are fine.
    - Field c is never used at all, so that's just waste.
    - Field e is used to filter the records before output, so this one is fine.
  - So we've immediately found 2 fields that we can eliminate to make this canvas faster.
- Medium: Ignore the unused fields in the execution engine.
- Hardest: Tell users in Alteryx Designer that a field is unused by doing a lineage analysis of the tools, just as software environments like Visual Studio do. This may require a change to the engine and to Designer, because each tool would need to capture the full detail of the fields it knows about in its configuration in order to do this trace.
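A minimal sketch of the "easiest" option, assuming each tool can report which fields it reads and which it creates. The `steps` structure and `find_unused_fields()` are hypothetical. It reproduces the example above and flags c and d. It treats any read as a use, so a field read only to build another wasted field would still count as used; catching that transitively is what the lineage analysis in the "Hardest" option would add.

```r
# Hypothetical sketch: a field is unused if no tool reads it and it is not in any output.
find_unused_fields <- function(input_fields, steps, output_fields) {
  created <- unlist(lapply(steps, function(s) s$creates))   # fields made inside the flow
  read    <- unlist(lapply(steps, function(s) s$reads))     # fields any tool consumes
  all_fields <- unique(c(input_fields, created))
  setdiff(all_fields, c(read, output_fields))
}

# The example from the idea: a, b, c come in; d and e are created by Formula tools
steps <- list(
  list(tool = "Formula 1", reads = "a", creates = "d"),   # d is never used afterwards
  list(tool = "Formula 2", reads = "b", creates = "e"),
  list(tool = "Filter",    reads = "e", creates = NULL)   # e is only used to filter records
)
find_unused_fields(c("a", "b", "c"), steps, output_fields = c("a", "b"))   # "c" "d"
```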

This has probably been mentioned before, but in case it hasn't....

Right now, if the Dynamic Input tool skips a file (which it often does!), it just shows a warning and continues processing. Whilst continuing is still useful, could the tool offer an option such as 'Error if files are skipped'?

Right now it is easy to miss that this is happening, and in production / on Server you may want the process to stop.

Thanks,

Andy

Dear Alteryx team and community,

All the best for 2021!

Thank you very much for enhancing the output option from Alteryx Designer to Excel keeping the format.

For a lot of my use cases this is very helpful!

Still, some use cases remain. When I want to overwrite a calculated/linked number (e.g. a calculated prediction) with the actual number, it would be very helpful to be able to write into those cells as well. At the moment Alteryx does the job, but I receive a lot of Excel errors (XML errors) and a corrupt Excel file when overwriting calculated/linked fields.

Is there a chance to extend the current setup for all of those cases?

Thanks and best regards

Christoph

I would love a tool to be created for looking up a value in a table based on a condition. It could be called "Lookup." One input to the tool would be the lookup list, the other is the main database. Inside the tool you could enter functions that can query the lookup table and return the results either as an overwrite of an existing field in the main DB or as a new field in the main DB, similar to the options in the Multi-Row Formula tool.

Here is a link to my post in Community that explains the problem. The solution, in a nutshell, was to create a Join (which resulted in millions of additional rows), run the conditional formula, then filter to get rid of the millions of rows that were created by the Join so only those that met the condition remained (the original database rows).

Here is the text of my Community post describing my project (slightly modified for clarity):

Table 1: A list of Pay Dates (the lookup table)

Table 2: Daily timekeeper data with Week Start and Week End Date fields.

The goal: To find the Pay Date in Table 1 that is greater than the Week Start Date in Table 2 and no more than 13 days after the Week End Date in Table 2.

```
[Table 2: Week Start Date] < [Table 1: Pay Date]
and [Table 2: Week End Date] < [Table 1: Pay Date]
and DateTimeDiff([Table 1: Pay Date], [Table 2: Week End Date], 'Days') <= 13
```

There are many different flows I could use this type of tool for that would save time and simplify the flow.
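For comparison, the same conditional lookup can be done without a Join that multiplies rows. A minimal sketch in R, assuming the earliest qualifying pay date is the one wanted: sort the pay dates once and binary-search (`findInterval`) for the first pay date after each week end, then check the remaining conditions. `lookup_pay_date()` and the dates below are made up for illustration.

```r
# Hypothetical sketch: for each timekeeper row, find the earliest pay date that is after
# the week end and at most `max_days` days later, using a binary search on the sorted
# pay dates instead of joining every row to every pay date.
lookup_pay_date <- function(week_start, week_end, pay_dates, max_days = 13) {
  pay_dates <- sort(pay_dates)
  # Index of the first pay date strictly after each week end
  idx <- findInterval(as.numeric(week_end), as.numeric(pay_dates)) + 1L
  idx[idx > length(pay_dates)] <- NA_integer_      # no pay date after this week end
  candidate <- pay_dates[idx]
  # Keep the candidate only if it meets all three conditions from the post
  ok <- !is.na(candidate) &
    candidate > week_start &
    as.numeric(candidate - week_end) <= max_days   # difference in days
  candidate[!ok] <- NA
  candidate
}

# Made-up sample data
pay_dates  <- as.Date(c("2024-01-19", "2024-01-05", "2024-02-02"))
week_start <- as.Date(c("2024-01-01", "2024-01-08", "2024-01-22", "2024-02-05"))
week_end   <- week_start + 6
lookup_pay_date(week_start, week_end, pay_dates)
# "2024-01-19" "2024-01-19" "2024-02-02" NA
```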

Thanks!

- New Idea 316

- Accepting Votes 1,790

- Comments Requested 22

- Under Review 171

- Accepted 54

- Ongoing 8

- Coming Soon 7

- Implemented 539

- Not Planned 110

- Revisit 57

- Partner Dependent 4

- Inactive 674

- Admin Settings 21
- AMP Engine 27
- API 11
- API SDK 223
- Category Address 13
- Category Apps 113
- Category Behavior Analysis 5
- Category Calgary 21
- Category Connectors 247
- Category Data Investigation 79
- Category Demographic Analysis 2
- Category Developer 212
- Category Documentation 80
- Category In Database 215
- Category Input Output 646
- Category Interface 242
- Category Join 105
- Category Machine Learning 3
- Category Macros 154
- Category Parse 76
- Category Predictive 79
- Category Preparation 398
- Category Prescriptive 1
- Category Reporting 199
- Category Spatial 82
- Category Text Mining 23
- Category Time Series 22
- Category Transform 91
- Configuration 1
- Content 1
- Data Connectors 969
- Data Products 3
- Desktop Experience 1,568
- Documentation 64
- Engine 129
- Enhancement 361
- Feature Request 213
- General 307
- General Suggestion 6
- Insights Dataset 2
- Installation 25
- Licenses and Activation 15
- Licensing 14
- Localization 8
- Location Intelligence 81
- Machine Learning 13
- My Alteryx 1
- New Request 211
- New Tool 32
- Permissions 1
- Runtime 28
- Scheduler 25
- SDK 10
- Setup & Configuration 58
- Tool Improvement 210
- User Experience Design 165
- User Settings 82
- UX 223
- XML 7

