Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equal number of images),

> and finally, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
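The balanced split asked for in the second point could work roughly like this Python sketch. It is an illustration only, not part of the Image Recognition tool: the `balanced_split` name, the (path, label) input format, and the choice to downsample every label to the size of the smallest one are all assumptions.

```python
import random
from collections import defaultdict


def balanced_split(samples, train_fraction=0.8, seed=0):
    """Split (image_path, label) pairs so that every label contributes the same
    number of images to the training set and to the validation set.

    Labels with more images are randomly downsampled to the size of the
    smallest label before splitting (an assumed strategy, for illustration).
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)
    if not by_label:
        return [], []

    # The smallest label sets the per-label budget.
    per_label = min(len(paths) for paths in by_label.values())
    n_train = int(per_label * train_fraction)

    rng = random.Random(seed)  # fixed seed -> reproducible split
    train, validation = [], []
    for label in sorted(by_label):
        paths = list(by_label[label])
        rng.shuffle(paths)
        chosen = paths[:per_label]
        train.extend((p, label) for p in chosen[:n_train])
        validation.extend((p, label) for p in chosen[n_train:])
    return train, validation
```

For example, with 10 "cat" images, 4 "dog" images and `train_fraction=0.75`, each label keeps 4 images: 3 go to training and 1 to validation.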

Question: will you allow users to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help many more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


Additional formatting functionality would be great to see in the Interface Designer.

First off, I want to acknowledge other submitted ideas (vote for them too!):

- Allow Drag and Drop in Interface Designer - Alteryx Community

- Alteryx Interface Designer - Place Element Where S... - Alteryx Community

Both of these are great suggestions, and I want to show my support for them as well!

To take it a step further than targeted placement or drag-and-drop, I would also like to see new objects included in the ADD menu. We have Groupings, but I'd like to see horizontally split groupings. Meaning, I want the ability to place two Date Inputs next to each other, or to lay out short prompts horizontally instead of listing them vertically.

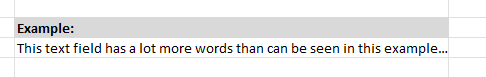

Example:

Why this matters: If Alteryx aspires to be a bona fide contender in the Analytic Application space (which I think it does), then we need added functionality that puts a greater emphasis on the user-experience side of things, because, as we know, user acceptance, ease of use, and adoption all depend on a clean presentation of the elements users interact with.

If you agree, your "thumbs up" of support is only one click away!

Add a search box to tools such as the Formula tool that present a drop-down list of all columns to choose from. Finding a specific column in the Formula tool can take quite a while when there are hundreds of columns in a data stream. This is especially important when we work with raw columns coming directly from an SAP extractor.

The constant [Engine.GuiInteraction] can be used to determine whether a workflow was run in the Designer or the Gallery. Currently, there's no way to also find out whether a workflow run in the Gallery was initiated by a schedule or manually. This information is available in the Gallery but is not passed into the workflow.

Please introduce a new variable [Engine.ScheduledRun] (or similar) which determines whether the workflow was initiated by a schedule (value "true" if boolean or "schedule" if string type) or manually (value "false" or "manual").

Alteryx does not seem to be tested with data that isn't on the local hard drive. If my data is located locally, Alteryx works great. If my data is located on a shared server, OMG, it takes forever to do anything. Simply clicking off a tool onto the canvas can cause a 30-60 second freeze. I literally spend about 1-3 hours per DAY waiting for Alteryx to load a tool view. 2024.2 is the worst so far; I have to wait for it to do anything.

Alteryx seems to be getting worse and worse at processing non-database data that isn't stored on the local hard drive. My idea is for it to get better at this.

Hello,

Right now you can write a file into SharePoint. However, sometimes you just want to upload a file. There is already the ability to download (with SharePoint Input). I would like the same for uploading a file (based on a path or on workflow dependencies).

Best regards,

Simon

Hi

Currently, the Date Time Now input outputs data only in string format. It would be useful to have an option to output the data in Date or DateTime format.

We have a feature to limit the number of records, so I thought: why not have a column limit as well?

Columns take up a lot of space and processing; the more columns we have, the more everything slows down. If we could declare up front that only the first 20 columns should always be imported, any new or unwanted columns in the Excel file would be ignored.
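A minimal sketch of the requested behavior, shown on CSV text because Python's standard library has no Excel reader; the same per-row slice would apply to rows read from a sheet. The `read_first_columns` name and its parameter are illustrative, not an existing Alteryx option; the default of 20 just mirrors the example in the idea.

```python
import csv


def read_first_columns(lines, max_columns=20):
    """Yield each CSV row truncated to its first `max_columns` fields.

    Columns to the right of the limit (for example, new or unwanted columns
    added to the source later) are silently dropped.
    """
    for row in csv.reader(lines):
        yield row[:max_columns]
```

Because the limit is positional, this only protects against columns appended on the right; a column inserted in the middle of the sheet would still shift everything after it.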

When building join operations in Alteryx, it can be time-consuming to manually scroll through long lists of fields to find the right one to join on, especially when working with large datasets or unfamiliar schemas.

It would be great to have a search-as-you-type filter in the Join tool’s field selection interface. Similar to the existing field selector search, this feature would allow users to start typing a field name and instantly see a filtered list of partial matches. This would significantly speed up the process of identifying and selecting the correct join fields and reduce the risk of selecting incorrect fields due to visual clutter.
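Both this request and the Formula tool search box described earlier come down to re-filtering a field list on each keystroke. A minimal Python sketch of that filtering, assuming case-insensitive substring matching with prefix matches ranked first (the ranking rule and the `filter_fields` name are illustrative choices, not current Alteryx behavior):

```python
def filter_fields(field_names, query):
    """Return the field names containing `query` (case-insensitive),
    listing names that start with `query` first.

    Within each group the original field order is kept, because
    sorted() is stable.
    """
    needle = query.strip().casefold()
    if not needle:
        return list(field_names)  # empty box: show every field
    matches = [name for name in field_names if needle in name.casefold()]
    # False sorts before True, so prefix matches come first.
    return sorted(matches, key=lambda name: not name.casefold().startswith(needle))
```

Calling this on every change to the search box gives the search-as-you-type effect; typing "cust" against `["Order_Customer", "CustomerID", "Region", "customer_name"]` would leave only the three customer fields, with the two that start with "cust" on top.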

I would like the parse tools (RegEx, split to columns, ...) not to point at any column by default.

The parse tools need to be pointed at a column, but by default they configure themselves to point at the first column. Every time I use them, I enter the other configuration options, such as a regular expression, and then hit Run. After hitting Run, my output column is populated with only null values, yet I receive no error.

The reason for this is 100% EBKAC (error between keyboard and chair): I have forgotten to point the tool at the correct column, so it is looking at the default (the first column) instead.

If the default option didn't exist, or were blank, the tool would raise an error telling me to think about what I'm doing and point it at the correct column.

I believe this change in the tools' default behaviour would save hours of debugging time spent wondering why my regex statement isn't working, when in fact I'm just looking at the wrong column.

When working within the Table Tool, there are many options to help users format the width of their columns (i.e. Automatic, Fixed, or Percentage).

It would be nice to see an option added to disable word-wrapping, meaning each column expands to encompass the header or data within the field so that every row has a uniform height, regardless of the width option:

Fixed: the rest of the data would simply be masked, like in Excel.

Percentage: same as Fixed (above), but relative to the variable width.

Automatic: resize to the required width, regardless.

- It would also be nice to have options under automatic, akin to constraints

- Automatic, but with a maximum width of...

- Automatic, but with a minimum width of....

- But regardless without word-wrapping

Why this matters: When producing automation, especially for finalized outputs such as reports and tables, having maximum control over the output format is vital to ensuring that downstream users don't have to keep manipulating the output to suit their needs. Maybe this isn't best practice, but when have customer demands ever taken a backseat to best practices! 😉

Idea: “Create THEN Append” Output Mode for Files and Databases

When outputting data in Alteryx—whether to an Excel file or a database table—the standard practice is:

First run: Set the output tool to “Create New Sheet” or “Create New Table.”

Subsequent runs: Manually change the setting to “Append to Existing.”

This works fine, but it’s very easy to forget to switch from "Create" to "Append" after the first run—especially in iterative development or when building workflows for others.

Suggested Enhancement:

Add a new option to the Output Data tool called:

“Create THEN Append”

Behavior:

On the first run, it creates the file/sheet or table.

On future runs, it automatically switches to append mode without needing manual intervention.

Why This Matters:

Prevents data loss from accidentally overwriting files/tables.

Improves automation and reusability.

Makes workflows more reliable when shared with others.

Mirrors functionality found in many ETL tools that allow dynamic "upsert-like" behavior.

Applies To:

Excel outputs (new sheet creation vs. append)

Database outputs (new table vs. append to existing)

CSV or flat file outputs where structure remains consistent
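A minimal sketch of the proposed behavior for two of the targets listed above (a database table and a flat file), written with Python's standard `sqlite3` and `csv` modules rather than Alteryx itself. The function names are hypothetical, and table and column names are assumed to come from a trusted source because they are interpolated into the SQL text.

```python
import csv
import os
import sqlite3


def create_then_append_table(conn, table, columns, rows):
    """Create `table` on the first run; on later runs, just append `rows`.

    `table` and `columns` are quoted but not otherwise sanitized, so they
    must come from a trusted source.
    """
    col_list = ", ".join(f'"{c}"' for c in columns)
    # First run creates the table; later runs find it and skip this step.
    conn.execute(f'CREATE TABLE IF NOT EXISTS "{table}" ({col_list})')
    placeholders = ", ".join("?" for _ in columns)
    conn.executemany(
        f'INSERT INTO "{table}" ({col_list}) VALUES ({placeholders})', rows
    )
    conn.commit()


def create_then_append_csv(path, header, rows):
    """Write the header only when the file is new or empty; otherwise append."""
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="") as f:
        writer = csv.writer(f)
        if is_new:
            writer.writerow(header)
        writer.writerows(rows)
```

Like the proposal, this relies on the structure staying consistent: `CREATE TABLE IF NOT EXISTS` does not check that an existing table has the same columns, and the CSV branch never rewrites an existing header.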

In the Table tool, is there a way to set the bar graph's maximum and minimum values with a formula based on table values, rather than a fixed value?

For example, the automatic selection may choose bounds of 0 and 3324539 to include all values. Realistically, though, 100% needs to correspond to a specific value from the table, and in batch reports that value changes from one report to the next.

The current update notification in Alteryx Designer can feel overly persistent and disruptive — especially for enterprise users whose Designer must stay compatible with an Alteryx Server version. Users often see repeated prompts to upgrade Designer to the newest version, even when doing so would break compatibility with their organization’s Server, which can cause errors, confusion, or rework.

Proposed Solution:

Server Version Awareness:

When Designer is connected to Alteryx Server, automatically check the Server version and suppress any upgrade prompt that would lead to a version mismatch.

Flexible Dismissal:

Allow users to snooze or permanently dismiss the update notification for the current version cycle (or dismiss it for longer than the current maximum of 30 days), rather than seeing the prompt again at each launch.

Impact:

Prevents accidental incompatibility between Designer and Server

Reduces user frustration with repetitive prompts

Why This Matters:

Many organizations cannot upgrade Designer independently of Server, and compatibility mismatches lead to support tickets and lost productivity. A smarter, quieter update experience respects these realities and makes version management more reliable for everyone.
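The two proposed rules (server version awareness and flexible dismissal) could combine into a single check along the lines of this Python sketch. It is not how Designer decides today: the function names are hypothetical, versions are assumed to be purely numeric dotted strings, and the rule that Designer and Server must share their first two version components (e.g. 2024.2) is an assumption for illustration, not Alteryx's published compatibility policy.

```python
from datetime import date


def parse_version(text):
    """Turn a dotted version such as '2024.2.1' into (2024, 2, 1)."""
    return tuple(int(part) for part in text.split("."))


def should_prompt_upgrade(installed, latest, server=None, snoozed_until=None, today=None):
    """Decide whether to show the update notification.

    Assumption: Designer and Server are compatible only when their first
    two version components match.
    """
    today = today or date.today()
    if snoozed_until is not None and today < snoozed_until:
        return False  # user snoozed the prompt
    if parse_version(latest) <= parse_version(installed):
        return False  # nothing newer to offer
    if server is not None and parse_version(latest)[:2] != parse_version(server)[:2]:
        return False  # upgrading would break Server compatibility
    return True
```

In this model, dismissing the prompt for longer than 30 days is simply a matter of storing a later `snoozed_until` date.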

Currently, the Dynamic Select tool lets you choose which fields to select. However, it would be useful to have other Select tool features as well, e.g., changing the data type or field size. This can be done with the Multi-Field Formula tool, but it would be useful if it could be done via formulas or by selecting a specific data type.

In complex Alteryx workflows, it can be hard to navigate between different tool containers - especially when there are dozens spread across in a large canvas.

I would love a feature where users could create a 'table of contents' using clickable text or bookmarks at the top of the workflow. For example, clicking on a text label named 'Output Calculation' would automatically scroll the view to the Tool Container named 'Output Calculation'.

Suggested implementation ideas:

- Text boxes or Comment Tools support clickable links that scroll the canvas to a specific container or tool.

- Add a new 'Bookmark' or 'Jump To' action tied to container names or Tool IDs.

- Right click on a text label or container and choose 'Link to' or 'Scroll to this container'.

- Use CTRL + Click or ALT + Click behavior on a text comment to jump.

This would massively improve usability and workflow navigation, especially for large teams or workflows that are shared across departments.

Inspiration:

Tools like Power BI and Tableau allow similar dashboard- or bookmark-style navigation. Implementing this in Alteryx Designer would be a game changer.

Hello,

The Data Sources window offers several kinds of connections, such as Quick Connect, ODBC, etc. However, their order is not consistent, and this is confusing.

Best regards,

Simon

Currently, this option is available in the SharePoint Input tool: it outputs only a list of the files/items found in the specified directory. This is helpful when we need to add comparison logic to avoid reading a file that has already been processed (e.g., in a data-copy scenario). However, this feature was not included in the other connectors (Azure Data Lake File Input, OneDrive Input, Box Input, etc.).

Additionally, an optional input anchor to feed in a list of files to read would be extremely helpful, similar to the Dynamic Input tool, and would avoid the need to create a batch macro for this operation.

Currently, the Simulation Sampling tool doesn't accept model objects from Time Series tools as a model input. It would be beneficial if it did, so that one could run simulations from Time Series output (or if a new tool were built to offer this functionality).

The global constants, specifically the user-defined ones (within the Workflow configuration) are a great tool for making quick changes. I would love to be able to include the value of these constants directly within a comment: the Comment tool, the captions for Tool & Control Containers, or the Annotation of individual tools. My immediate use-case would be to clearly show what the constants are set to, directly on the canvas, though there are certainly a lot of other uses as well.

It would be great if Run Until Selected Tool could be used on Browse tools. In complex workflows, this is useful for testing certain parts and investigating all records in the Browse tool, instead of only the subset previewed in the tool leading up to it.
