Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening!

Submitting an Idea? Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> at the very least, by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
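As an interim workaround for the last two points, the data could be prepared before it reaches the tool. The following is only a minimal sketch under stated assumptions: `balance_by_label` and `gray_to_rgb` are hypothetical helper names, images are represented as plain nested lists, and nothing here reflects how the Image Recognition Tool works internally.

```python
import random
from collections import defaultdict


def balance_by_label(samples, seed=0):
    """Downsample (item, label) pairs so every label keeps the same count.

    Hypothetical helper: the count is set by the least frequent label.
    """
    by_label = defaultdict(list)
    for item, label in samples:
        by_label[label].append(item)
    smallest = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # fixed seed so the split is repeatable
    balanced = []
    for label, items in by_label.items():
        for item in rng.sample(items, smallest):
            balanced.append((item, label))
    return balanced


def gray_to_rgb(image):
    """Turn a 2-D grid of grayscale values into a grid of (R, G, B) triples.

    Each grayscale value is copied into all three channels.
    """
    return [[(value, value, value) for value in row] for row in image]
```

Copying the single channel three times keeps the picture visually identical while satisfying an RGB-only input check, which is a common way to feed datasets such as MNIST to models that expect colour images.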

Question: will you allow users to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))


Current In-DB connections to SAP HANA via ODBC don't extract the Field Descriptions in addition to the technical field names. This forces users to manually rename each field within the workflow or create a secondary, In-DB connection to HANA _SYS_BI tables and dynamically rename. This second option only works if the Descriptions are maintained in the BI tables (which is not always the case).
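The dynamic-rename work-around described above, including its weakness when descriptions are not maintained, can be sketched in a few lines. This is an illustration only: `rename_fields` is a hypothetical helper, the field names in the usage note are made up, and the description lookup is assumed to have been read already from wherever the descriptions are stored.

```python
def rename_fields(technical_names, descriptions):
    """Map technical field names to descriptions, keeping the technical
    name whenever no (non-empty) description is available.

    `descriptions` is an assumed dict of technical name -> description.
    """
    renamed = []
    for name in technical_names:
        label = descriptions.get(name)
        renamed.append(label if label else name)
    return renamed
```

For example, `rename_fields(["FIELD_A", "FIELD_B"], {"FIELD_A": "Material"})` returns `["Material", "FIELD_B"]`: the second field keeps its technical name because no description was maintained for it.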

I have posted the work-around solution on the community but a standard fix would be welcome. DVW and Tableau both offer solutions that seamlessly handle this issue.

I have not found this function or a workaround, other than "recent connections", which are normally not saved on virtual machines.

This would save the time it takes to find the path/folder where Calgary DBs are saved.

If this has been proposed or fixed already, please delete this idea!

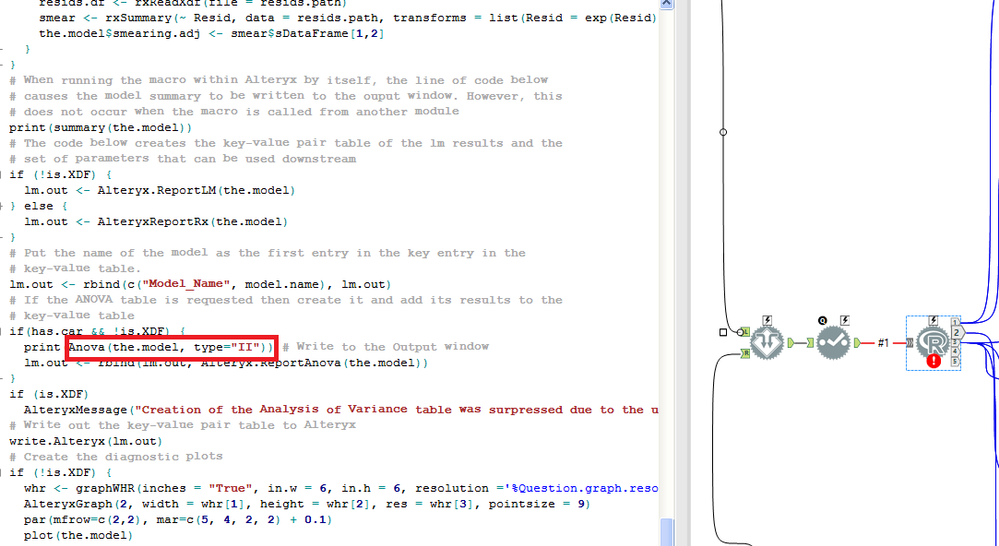

We don't have a separate ANOVA tool in Alteryx. Is there any reason for that?

It's not raw data or row blended data but insights gathered that's important:

Linear Regression Tool has a report for Type II ANOVA based on the model table we provide.

But both type II and other types are not available as standalone statistics tools...

Here is a list of the different types of ANOVA that may be useful:

| ANOVA models | Definitions |
|---|---|
| t-tests | Comparison of means between two groups; if independent groups, then independent samples t-test. If not independent, then paired samples t-test. If comparing one group against a fixed value, then a one-sample t-test. |
| One-way ANOVA | Comparison of means of three or more independent groups. |
| One-way repeated measures ANOVA | Comparison of means of three or more within-subject variables. |
| Factorial ANOVA | Comparison of cell means for two or more between-subject IVs. |
| Mixed ANOVA (SPANOVA) | Comparison of cell means for one or more between-subjects IVs and one or more within-subjects IVs. |
| ANCOVA | Any ANOVA model with a covariate. |
| MANOVA | Any ANOVA model with multiple DVs. Provides omnibus F and separate Fs. |
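To make the one-way ANOVA row concrete, here is a minimal sketch of the F statistic for three or more independent groups, written in plain Python. The function name `one_way_anova_f` is hypothetical, and this is the textbook between-groups / within-groups calculation, not a description of any Alteryx tool.

```python
def one_way_anova_f(groups):
    """Return the one-way ANOVA F statistic for a list of numeric groups."""
    k = len(groups)                       # number of groups
    n = sum(len(g) for g in groups)       # total number of observations
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]

    # Variation of the group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Variation of the observations around their own group mean
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, means) for x in g)

    ms_between = ss_between / (k - 1)     # between-groups mean square
    ms_within = ss_within / (n - k)       # within-groups mean square
    return ms_between / ms_within
```

For the groups `[1, 2, 3]`, `[2, 3, 4]` and `[3, 4, 5]`, both sums of squares equal 6, so the mean squares are 3 and 1 and the function returns an F of 3.0. The sketch assumes the groups are not all constant, since a within-groups sum of squares of zero would divide by zero.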

Looking forward to the addition of ANOVA tools to the Data Investigation toolbox...

In the Gallery, the File Browse tool returns the file location on the server where the file was uploaded. This allows the file to then be read in as input to a workflow.

If you need the file path of the original file location, you have to add a Text Input for the user to manually add it.

In my case (#00293302), I used a chained app to populate a list box for the user to select the Sheet Names they would like to process through the application. Unfortunately, since I was not able to capture the original file location the application errored out. This is due to the second app using the file location on the server where the file was uploaded, which is provided by the first workflow. This file location (from the Browse tool) is a temporary file location, where inputs are immediately deleted after processing.

Want to test this out? Create an application where you Output the file path from a File Browse tool.

I know... grrr, this doesn't match your original file location!

Thank you,

Mark

Do you often present your workflows to others within Alteryx, as opposed to just printing a PDF? Wouldn't it be nice to have a formatted heading that describes your workflow?

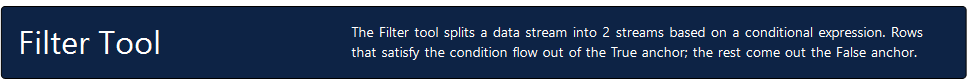

I'm sure you may already be adding and formatting nice headers, like many of our Alteryx tools. For example, the Filter tool:

However, once you begin to scroll down the headers disappear. I recommend we add the ability to freeze the canvas. In this scenario, I would want to view this Header no matter how far I scroll down or right. This would be extremely beneficial when presenting to stakeholders. Additionally, it would be nice to have this repeat on each page, when printed!

Thanks,

Mark

When developing and/or troubleshooting workflows, I frequently disable the outputs using the checkbox in the Runtime configuration settings to speed up the workflow and prevent sending emails and/or overwriting data in the output sources... however, 9/10 times I forget to turn off this checkbox when I save my workflow back up to the Gallery. This results in countless emails from users to the tune of "I ran the workflow successfully, but there was no output?" 🙂

Would love love love to see some sort of warning notification (similar to the ones that are already shown for data sources etc.) when saving to the Gallery if the "Disable All Tools that Write Output" option is selected in the Runtime settings.

Thank you!!

NJ

The current (version 7.0.x) Google Analytics connector in the Analytics Gallery is not very robust. I had issues maintaining credentials and was forced to frequently reconnect and reconfigure the GA tools in my flow. I ended up resorting to other means of downloading the data from GA.

It would be helpful if Alteryx created a "first-class" data connector as exists for other cloud-based data sources such as Salesforce.

Many software & hardware companies take a very quantitative approach to driving their product innovation so that they can show an improvement over time on a standard baseline of how the product is used today; and then compare this to the way it can solve the problem in the new version and measure the improvement.

For example:

- Database vendors have been doing this for years using TPC benchmarks (http://www.tpc.org/), where a FIXED set of tasks is agreed as a benchmark and the vendors then iterate year over year to improve performance against it

- Graphics card companies or GPU companies have used benchmarks for years (e.g. TimeSpy; Cinebench etc).

How could this translate for Alteryx?

- Every year at Inspire - we hear the stats that say that 90-95% of the time taken is data preparation

- We also know that the reason for buying Alteryx is to reduce the time & skill level required to achieve these outcomes - again, as reinforced by the message that we're driving towards self-service analytics & Citizen-data-analytics.

The dream:

Wouldn't it be great if Alteryx could say: "In the 2019.3 release - we have taken 10% off the benchmark of common tasks as measured by time taken to complete" - and show a 25% reduction year over year in the time to complete this battery of data preparation tasks?

One proposed method:

- Take an agreed benchmark set of tasks / data / problems / outcomes, based on a standard data set - these should include all of the common data preparation problems that people face like date normalization; joining; filtering; table sync (incremental sync as well as dump-and-load); etc.

- Measure the time it takes users to complete these data-prep/ data movement/ data cleanup tasks on the benchmark data & problem set using the latest innovations and tools

- This time then becomes the measure - if it takes an average user 20 mins to complete these data prep tasks today; and in the 2019.3 release it takes 18 mins, then we've taken 10% off the cost of the largest piece of the data analytics pipeline.
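The arithmetic behind the proposed measure is simple enough to sketch. The helper names below are hypothetical, and the per-user timings in the usage note are invented purely to reproduce the 20-minute and 18-minute averages quoted above.

```python
def average_minutes(times_in_minutes):
    """Average time taken by a group of users to finish the benchmark tasks."""
    return sum(times_in_minutes) / len(times_in_minutes)


def percent_reduction(baseline_minutes, new_minutes):
    """Percentage of time saved relative to the baseline release."""
    return 100 * (baseline_minutes - new_minutes) / baseline_minutes
```

With invented timings of `[19, 20, 21]` minutes today and `[17, 18, 19]` minutes in the new release, the averages are 20 and 18 minutes, and `percent_reduction(20, 18)` gives 10.0, the 10% improvement described in the list above.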

What would this give Alteryx?

This could be very simple to administer; and if done well it could give Alteryx:

- A clear and unambiguous marketing message that they are super-focussed on solving for the 90-95% of your time that is NOT being spent on analytics, but rather on data prep

- It would also provide focus to drive the platform in the direction of the biggest pain points - all the teams across the platform can then rally around a really deep focus on the user and accelerating their "time from raw data to analytics".

- A competitive differentiation - invite your competitors to take part too just like TPC.org or any of the other benchmarks

What this is / is NOT:

- This is not a run-time measure - i.e. this is not measuring transactions or rows per second

- This should be focussed on "Given this problem; and raw data - what is the time it takes you, and the number of clicks and mouse moves etc - to get to the point where you can take raw data, and get it prepped and clean enough to do the analysis".

- This should NOT be a test of "Once you've got clean data - how quickly can you do machine learning; or decision trees; or predictive analytics" - as we have said above, that is not the big problem - the big problem is the 90-95% of the time which is spent on data prep / transport / and cleanup.

Loads of ways that this could be administered - starting point is to agree to drive this quantitatively on a fixed benchmark of tasks and data

@LDuane ; @SteveA ; @jpoz ; @AshleyK ; @AJacobson ; @DerekK ; @Cimmel ; @TuvyL ; @KatieH ; @TomSt ; @AdamR_AYX ; @apolly

Now

File Specification: Specify the type of files to return.

- *.*: Return all file types in the specified directory. The default value.

- *.csv: Return all csv files in the specified directory.

- temp*.*: Return all files that begin with temp in the file name: temp1.txt and temp_file.yxdb

Idea

At the moment, the file specification option accepts only a single pattern or all files.

Would it be possible to specify multiple file types, for example *.csv or *.xlsx?
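One way the requested behaviour could look is sketched below with Python's standard `fnmatch` module. Using `|` to separate patterns and matching case-insensitively are assumptions made for this illustration, and `filter_files` is a hypothetical name; none of this describes the existing Directory tool.

```python
from fnmatch import fnmatchcase


def filter_files(filenames, spec):
    """Keep the file names that match at least one wildcard pattern.

    `spec` holds one or more patterns separated by "|" (an assumed
    convention), e.g. "*.csv|*.xlsx". Matching ignores case.
    """
    patterns = [p.strip().lower() for p in spec.split("|") if p.strip()]
    return [
        name for name in filenames
        if any(fnmatchcase(name.lower(), pattern) for pattern in patterns)
    ]
```

For example, `filter_files(["a.csv", "b.XLSX", "c.txt"], "*.csv|*.xlsx")` keeps `a.csv` and `b.XLSX` and drops `c.txt`, while a single pattern such as `"temp*.*"` behaves like the current single-pattern option.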

Similar to how pip can be used through ayxinstallPackages, there needs to be a way to upgrade Python itself. Important packages such as keras have errors in Python 3.6 that are not present in 3.7, so it should really be up to the user to decide which Python version to use. Another solution could be to allow the user to point to their own local installation of Python, so that the user can maintain consistency between their own local site-packages and the one that Alteryx has.

With an increasing number of different projects, involving different machine learning models, it's becoming difficult to manage different package versions across workflows. Currently, the Python tool has a single virtual environment, so we need to develop models in different projects always using the same Python and package versions as the Python tool venv. While this doesn't bother the code itself too much, it becomes a problem as soon as we store and load pickled models, which are sensitive to even minor changes in packages.
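Until environments can be managed per workflow, the pickle problem can at least be made visible by storing version information next to the model. The sketch below uses only the standard library; `dump_with_versions` and `load_checked` are hypothetical helpers, and the caller is assumed to supply the package versions as a plain dict.

```python
import pickle
import sys


def dump_with_versions(model, package_versions):
    """Pickle a model together with the Python and package versions in use."""
    payload = {
        "python": sys.version_info[:2],        # e.g. (3, 6)
        "packages": dict(package_versions),    # assumed: name -> version string
        "model": model,
    }
    return pickle.dumps(payload)


def load_checked(data, current_versions):
    """Unpickle a payload and report every version that differs.

    Returns (model, mismatches), where mismatches maps a name to a
    (saved_version, current_version) pair.
    """
    payload = pickle.loads(data)
    mismatches = {}
    if tuple(payload["python"]) != sys.version_info[:2]:
        mismatches["python"] = (tuple(payload["python"]), sys.version_info[:2])
    for name, saved in payload["packages"].items():
        current = current_versions.get(name)
        if current != saved:
            mismatches[name] = (saved, current)
    return payload["model"], mismatches
```

This does not make a pickle portable across versions. It only turns a silent incompatibility into an explicit report that a workflow can act on, for example by refusing to score with a model saved under a different package version.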

This is even more so a problem when we are working on the Alteryx server, where different teams might use different packages. Currently, there is only the server admin who can install packages on the server and there can only be one version per package.

So, a more robust venv management in the Python tool would be much appreciated!

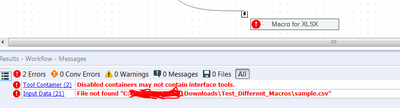

A disabled container throws errors if it contains any Interface tools. It should not throw any errors, because the user is disabling the container intentionally.

In the output window, numbers should always be right-aligned by default. The font should also be fixed-width, so that an 8 and a 1 take equal width and we don't see numbers as below.

11111111

88888888

1. It instantly tells the user that its data type is numeric without having to check metadata.

2. Readability of the values is greatly increased.
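The requested display can be illustrated with plain string padding, which lines up correctly only in a fixed-width font. This is a minimal sketch; `right_align` is a hypothetical name and has nothing to do with how the results window is implemented.

```python
def right_align(values):
    """Pad each number on the left so all of them end in the same column."""
    texts = [str(value) for value in values]
    width = max(len(text) for text in texts)
    return [text.rjust(width) for text in texts]
```

Printing `right_align([1, 250, 88888888])` line by line places the units digits under one another, so differences in magnitude are visible at a glance.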

The current Power BI Connector can only publish to Power BI in the cloud, not to a local Power BI server. I would like to suggest improving this connector and configuring it in a similar way to the Publish to Tableau Server connector. The connector should allow publishing to a local URL. See attachment.

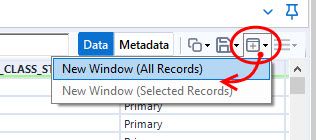

Love the new updates to the Browse tool in 2019.2! However, if you choose the option Open results in new window, which I do often so I can see my whole dataset, the search/filter/sort functionality goes away. Would be great if that new functionality also worked in the new window. Thanks!

Can't wait for the new base maps!

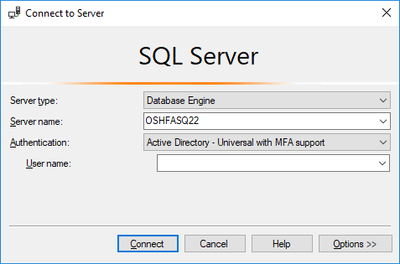

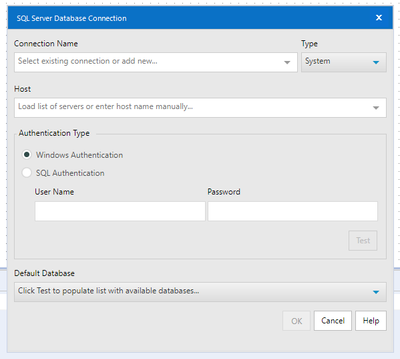

Add an Authentication Type for Multi-Factor Authentication (MFA) to the SQL Server Database Connection. This would allow connecting to databases that require 2-step authentication (a separate token key each time the user connects).

The above added to

I have a workflow that accepts 4 different sets of inputs.

It also has four different outputs. However, I can easily output to separate files in four containers and just close the containers that don't pertain to the input.

On the input side, I keep having to delete the connections and connect the desired input.

I would like to connect multiple files to one tool and have the tool work only if exactly one of the inputs is live. Through the use of containers you would then choose which input was live.

The method of saving the results of one app to be read in by a follow-on app seems very clunky to me. Can we develop a method to use the results within a workflow to feed drop-down lists in later stages of the same workflow? That way an app can stand on its own without having to save files out and chain further apps to read them again.

It seems this only works for selecting fields to include in the output but not for list of values to feed to a drop down list.

It has become clear that the Jupyter Notebook integration caches code and does not appropriately clear when there are changes made - resulting in "saved" workflows that do not contain updated code. This happens when two people are using a "shared" workflow (emailed back and forth or from a shared drive) if one person does not completely shut down out of Designer Desktop if they had previously had the workflow open at any point. This has been confirmed by Alteryx Support and is not just my hunch.

This also happens sometimes with a single user - where the Jupyter Notebook save button has been pressed multiple times and the workflow has been saved, but the changes do not make it to the file.

The integration is a step in the right direction for sure and is great to use - but my idea is that the cache should be attached to the workflows, not the entire session of Designer. Not knowing if changes were actually saved, and discovering that some were not is extremely frustrating.
