Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by displaying the image-dimension constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that each label has an equivalent number of images),

> and, at the very least, by allowing the tool to use black & white (grayscale) images (I wanted to test it on MNIST, but the tool says it requires RGB images).
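The post doesn't describe how the Image Recognition tool splits or loads data, so here is only a hedged sketch in plain Python of the two requests: a per-label (stratified) split, with optional down-sampling so every label has the same number of images, and a helper that turns a grayscale image into RGB by repeating its single channel. The function names and the `(image_path, label)` input format are assumptions for illustration.

```python
import random
from collections import defaultdict


def stratified_split(samples, test_fraction=0.2, balance=False, seed=0):
    """Split (image_path, label) pairs per label so each label keeps the same test share.

    With balance=True, every label is first down-sampled to the size of the
    smallest label, so all labels end up with the same number of images.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)
    if not by_label:
        return [], []

    rng = random.Random(seed)  # fixed seed -> reproducible split
    smallest = min(len(paths) for paths in by_label.values())
    train, test = [], []
    for label in sorted(by_label):
        paths = by_label[label][:]  # copy before shuffling
        rng.shuffle(paths)
        if balance:
            paths = paths[:smallest]
        n_test = int(len(paths) * test_fraction)
        test.extend((p, label) for p in paths[:n_test])
        train.extend((p, label) for p in paths[n_test:])
    return train, test


def gray_to_rgb(pixels):
    """Turn a grayscale image (rows of 0-255 values) into RGB by repeating each value."""
    return [[(v, v, v) for v in row] for row in pixels]
```

Splitting inside each label, rather than over the whole list, is what keeps rare labels from vanishing out of the validation set.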

Question: will you allow users to choose between CPU and GPU processing in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help many more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


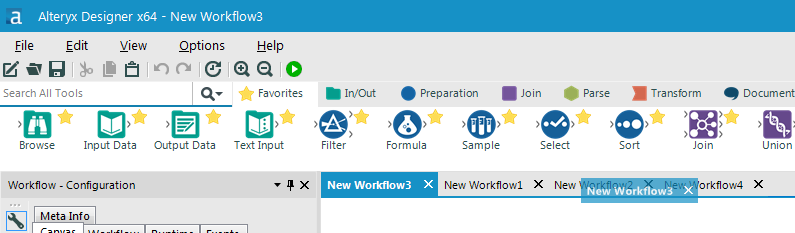

When you have multiple workflows open in tabs and want to rearrange them, it's hard to tell where a dragged tab will land. In Firefox and Chrome, for example, the tabs move around as you drag: if you drag a tab left, the tab next to it slides to the right. In Tableau, a line with an arrow appears where the tab will be dropped. In the Alteryx interface, however, the tab's new location is not so obvious. A line or some other marker at the destination point would be great!

Chaos reigns within. Repent, reflect and reboot. Order shall return.

For those of us who are really old-school, this would be a novel Easter egg to add.


It would be great if there was an option in the configuration of the Output Tool to create the output directory if it doesn't already exist. Maybe also an option to append instead of overwrite for all file types?
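This is not the Output Tool's configuration; it's a small standard-library Python sketch of the two requested behaviours. `Path.mkdir(parents=True, exist_ok=True)` creates any missing folders without failing when they already exist, and opening with mode `"a"` appends instead of overwriting. The `write_output` name and its flags are hypothetical.

```python
from pathlib import Path


def write_output(path, text, create_dirs=True, append=False):
    """Write text to path, optionally creating missing folders and appending."""
    target = Path(path)
    if create_dirs:
        # Create every missing folder on the way; no error if it already exists.
        target.parent.mkdir(parents=True, exist_ok=True)
    mode = "a" if append else "w"  # "a" appends to an existing file, "w" overwrites it
    with open(target, mode, encoding="utf-8") as f:
        f.write(text)
    return target
```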

It would help to have a workflow-level feature that checks whether the entire logic of an Alteryx workflow can be converted into the database's native language. That could improve performance by avoiding the back-and-forth between the application (here, Alteryx) and the database.

For example, if I am using Oracle as both my source and target connection, and the transformations done in Alteryx can be pushed down to a DML statement (or a loop with a commit interval set) that executes within Oracle itself, we might get performance improvements, because the overhead of pulling data from Oracle into Alteryx and pushing it back from Alteryx to Oracle would be avoided.
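To make the push-down idea concrete, here is a hedged sketch that uses Python's built-in `sqlite3` as a stand-in for Oracle (the `sales` and `sales_summary` tables are hypothetical). The first function expresses a filter-plus-summarize as a single `INSERT ... SELECT`, so the database does all the work; the second does the same job by pulling rows to the client and writing the result back, which is the round trip the idea wants to avoid.

```python
import sqlite3


def pushdown_summarize(conn, min_amount):
    """Filter and aggregate entirely inside the database with one statement."""
    conn.execute(
        """
        INSERT INTO sales_summary (region, total)
        SELECT region, SUM(amount)
        FROM sales
        WHERE amount >= ?
        GROUP BY region
        """,
        (min_amount,),
    )
    conn.commit()


def pull_and_push(conn, min_amount):
    """Client-side equivalent: fetch every row, transform locally, write back."""
    totals = {}
    for region, amount in conn.execute("SELECT region, amount FROM sales"):
        if amount >= min_amount:
            totals[region] = totals.get(region, 0) + amount
    conn.executemany(
        "INSERT INTO sales_summary (region, total) VALUES (?, ?)", totals.items()
    )
    conn.commit()
```

Both produce the same rows; only the first keeps the data inside the database.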

Hi,

I have a suggestion for selecting multiple tools. Currently, the user can hold down Ctrl and select multiple tools one at a time. Could you take it a step further and allow the user to hold Ctrl, click and drag over several tools, then move to another area while still holding Ctrl and click and drag to select more tools?

For example, I would first click and drag over these tools:

Then hold down Ctrl and click and drag over these tools (I’m trying to select the 5 tools without grabbing the Filter tool and its 2 Browse tools):

Please ignore this post! I just discovered the amazing dynamic input tool can accomplish exactly this 🙂

This is no longer a "good future idea" but a solution Alteryx already provides! Thanks, Alteryx!

Hello everyone. I think it would be incredibly helpful if all input tools (database, CSV files, etc.) had an input stream for data processing.

This input stream would cause the tool to fire if and only if at least 1 row of "data" was passed into it. This would work similar to the Email tool, as the input would simply not fire if no records in the workflow were passed into it.

By making this change, Alteryx could control the flow of execution when reading from and writing to database tables and files without having to bring in macros or chained applications.

The problem with having to use batch macros and Block Until Done to solve this problem is that it obfuscates your workflow logic, making it much harder for another developer to look at the workflow and understand what is happening. Below is an extremely simple example of where this is necessary.

Example: any standard dimensional warehouse ETL.

Step #1: Write data to a table with an auto-incremented Key

Step #2: Read back from that table to get the Key that you just generated

Step #3: Use that key as a foreign key in your primary fact table.

Without this enhancement you need at least 2 different workflows (chained apps or macros) to accomplish the task above; with this simple enhancement, it could be accomplished in 3 tools.

This task is the basic building block of almost all ETLs, so this enhancement would be very useful in my opinion.
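Outside Alteryx, the three steps run in strict order simply because they are sequential statements. This hedged sketch uses Python's `sqlite3` as a stand-in for the warehouse database (the `dim_customer` and `fact_sales` tables and their columns are hypothetical). It shows the dependency the proposed input-stream trigger would express: step 3 cannot run until step 2 has read back the key written in step 1.

```python
import sqlite3


def create_schema(conn):
    """Hypothetical dimension and fact tables for the example."""
    conn.execute(
        "CREATE TABLE dim_customer ("
        "customer_key INTEGER PRIMARY KEY AUTOINCREMENT, name TEXT UNIQUE)"
    )
    conn.execute(
        "CREATE TABLE fact_sales ("
        "customer_key INTEGER REFERENCES dim_customer (customer_key), amount REAL)"
    )


def load_sale(conn, customer_name, amount):
    # Step 1: write the dimension row; the database assigns the auto-incremented key.
    conn.execute("INSERT INTO dim_customer (name) VALUES (?)", (customer_name,))
    # Step 2: read back the key that was just generated.
    (customer_key,) = conn.execute(
        "SELECT customer_key FROM dim_customer WHERE name = ?", (customer_name,)
    ).fetchone()
    # Step 3: use it as the foreign key in the fact table.
    conn.execute(
        "INSERT INTO fact_sales (customer_key, amount) VALUES (?, ?)",
        (customer_key, amount),
    )
    conn.commit()
    return customer_key
```

The `UNIQUE` constraint on `name` is what makes the read-back in step 2 unambiguous.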

Hi,

I am wondering if it is possible to add a search function to the Browse tool / preview results. It would make it easier to locate keywords, rather than having to add a Filter tool and re-run the workflow several extra times.

Another thing is about the connectors: is it possible to allow manual adjustment of the connectors or of the positions of the tool containers? It would be great to be able to adjust the lines when handling complex workflows.

Thanks.

Kenneth

In addition to the existing functionality, it would be good if the following functionality could also be provided.

1) Pattern Analysis

This would help profile the data better, help conform data to a standard/particular pattern, and help identify outliers so that the necessary corrective action can be taken.

A sample would be, for emails, translating 'abc@gmail.com' to 'nnn@nnnnn.nnn', so the outliers might be values where '@' or '.' is not present.

Another example might be phone numbers: 12345-678910 getting translated to 99999-999999, 123-456-78910 getting translated to 999-999-99999, (123)-(456):78910 getting translated to (999)-(999):99999, etc.

It would also help to have the Pattern Frequency Distribution alongside.

So from the above example we can see that phone numbers exist in 3 different patterns, which might call for relevant standardization rules.
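A minimal sketch of the proposed pattern translation and frequency distribution, following the convention in the examples above (letters become `n`, digits become `9`, punctuation is kept). The function names are hypothetical; this is not an existing Alteryx profiling option.

```python
from collections import Counter


def to_pattern(value):
    """Map letters to 'n' and digits to '9'; keep every other character as-is."""
    out = []
    for ch in value:
        if ch.isdigit():
            out.append("9")
        elif ch.isalpha():
            out.append("n")
        else:
            out.append(ch)
    return "".join(out)


def pattern_frequency(values):
    """Pattern Frequency Distribution: (pattern, count) pairs, most common first."""
    return Counter(to_pattern(v) for v in values).most_common()
```

Rare patterns at the bottom of the distribution are the outlier candidates the idea describes.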

2) More granular control of profiling

It would be good if, in the tool, the profiling options (like Unique, Histogram, Percentile25, etc.) could be selected separately for each field.

A sub-idea here might also be to check data against external third-party data providers, e.g. USPS ZIP validation, but that would be meaningful only for selected address fields; hence granular control over the type of profiling for each individual field would make sense.

Note: when implementing the granular control, we would also need to figure out how to present the final report in a more user-friendly format, as it might not conform to a standard table-like definition.

3) Uniqueness

Given the ongoing importance of identifying duplicates so that analytic results are valid, more uniqueness profiling could be added.

For example, Soundex, which is based on how similar or different two values sound.

Distance, which is based on how many changes are needed to turn one value into another, etc.

So alongside the Unique counts, we could also have counts where uniqueness factors in Soundex, Distance and other related algorithms.

For example if the First Name field is having the following data -

Jerry

Jery

Nick

Greg

Gregg

The number of unique records would be 5, whereas the number of Soundex-unique values might be only 3, which would open up more data exploration opportunities to see whether Jerry/Jery and Greg/Gregg are really the same person/customer.
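To make the Soundex and distance counts concrete, here is a hedged Python sketch of the standard American Soundex coding and the Levenshtein edit distance. Levenshtein is one common choice for "Distance"; the post does not name a specific algorithm. The function names are illustrative, not an Alteryx API.

```python
# American Soundex letter codes; vowels, y, h and w get no digit.
SOUNDEX_CODES = {
    ch: digit
    for letters, digit in [
        ("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
        ("l", "4"), ("mn", "5"), ("r", "6"),
    ]
    for ch in letters
}


def soundex(name):
    """Return the 4-character American Soundex code, e.g. 'Robert' -> 'R163'."""
    letters = [c for c in name.lower() if c.isalpha()]
    if not letters:
        return ""
    result = letters[0].upper()
    prev = SOUNDEX_CODES.get(letters[0], "")
    for ch in letters[1:]:
        if ch in "hw":
            continue  # h and w do not separate letters with the same code
        code = SOUNDEX_CODES.get(ch, "")
        if code and code != prev:
            result += code
            if len(result) == 4:
                break
        prev = code  # a vowel resets prev, so a repeated code after it is kept
    return result.ljust(4, "0")


def levenshtein(a, b):
    """Minimum number of single-character inserts, deletes or substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


names = ["Jerry", "Jery", "Nick", "Greg", "Gregg"]
print(len(set(names)))                   # 5 exact-unique values
print(len({soundex(n) for n in names}))  # 3 Soundex-unique values
print(levenshtein("Greg", "Gregg"))      # 1 edit apart
```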

4) Custom Rule Conformance

I think it would also be good if some functionality similar to the Multi-Row Formula could be provided, where we can check conformance to custom business rules.

For example, it might be more helpful to check how many Age Units (Days/Months/Years) are blank/null where the related Age Number (1, 10, 50, etc.) is populated, rather than having a vanilla count of nulls and non-nulls for individual (but related) columns.
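A hedged sketch of what a cross-field conformance check could look like, with the business rule written as a plain predicate over a record. The `AgeNumber` and `AgeUnit` field names and both helpers are hypothetical; blank is taken to mean null or whitespace-only text.

```python
def is_blank(value):
    """Treat None and whitespace-only strings as blank."""
    return value is None or (isinstance(value, str) and value.strip() == "")


def age_unit_rule(row):
    """Business rule: if AgeNumber is populated, AgeUnit must be populated too."""
    return is_blank(row.get("AgeNumber")) or not is_blank(row.get("AgeUnit"))


def count_violations(rows, rule):
    """Count records that fail the given rule."""
    return sum(1 for row in rows if not rule(row))
```

Because the rule is just a function, other related-column checks can be counted the same way.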

Thanks,

Rohit

We primarily work on tracking people through a store or any physical space and extract shopping trip information such as areas visited, dwell time, etc. This shopper track information consists of a series of X,Y coordinates which are also used to plot heat maps based on density of points in a given area.

I have been using a workaround because the spatial tools lack Cartesian coordinate support: converting Cartesian values to Lat./Long. by dividing the X,Y values by 10,000. This approach works fine for finding spatial matches between points and polygons. However, a tool like the Heat Map tool requires Grid Size and Max Distance in miles/kilometers, and since smaller units of distance are not supported, you cannot generate a heat map data file.

In addition to adding support for Cartesian coordinates, it would also be helpful to provide an option to load in a Floorplan/Image as Base Map when browsing Spatial tool results.
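Counting points per grid cell in native floor-plan units sidesteps the miles/kilometers restriction altogether. Below is a hedged Python sketch of both the workaround and a unit-agnostic grid count, the basic input to a density heat map. Mapping X to longitude and Y to latitude is an assumption (the post doesn't say), and `grid_density` is a hypothetical helper, not the Heat Map tool's algorithm.

```python
from collections import Counter
from math import floor


def to_pseudo_latlon(x, y, scale=10_000):
    """The workaround: divide X,Y by 10,000 (assumes X -> longitude, Y -> latitude)."""
    return y / scale, x / scale  # (lat, lon)


def grid_density(points, cell_size):
    """Count points per square grid cell, in the same units as the X,Y data."""
    counts = Counter()
    for x, y in points:
        counts[(floor(x / cell_size), floor(y / cell_size))] += 1
    return counts
```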

When attempting to connect to an Oracle database, if the connection is unsuccessful nothing happens, so you just sit, stare and wait for the SQL window to pop up. It would be helpful to have an error message saying that the connection was unsuccessful.

Once you get the hang of it, Alteryx is great at producing reports. Unfortunately, each item has its own XML parameters, like font size, colors, table defaults, etc. As it stands now, if I want to make a change across the course of a complex, multi-page report, I have to update the settings in each table and chart.

I'd love it if I could have a 'Styles' module that I could set, then copy and paste and insert after each table. From that point, I suppose you could make parameters out of style options and build them into a bigger module. For now, though, some kind of reusable style would be invaluable.

We frequently report on our data by week. However, the DateTimeTrim function (in the Formula Tool and others) does not support this trim type.

Some workarounds have been posted that involve calculating the day of the week and then subtracting it out:

http://community.alteryx.com/t5/Data-Preparation-Blending/Summarize-data-by-the-week/td-p/6002

It would be very helpful to update DateTimeTrim as follows:

- Add a <trim type> of 'week'

- Add an optional parameter for <start of week>

  - Default value: 0 (Week beginning Sunday)

  - Other values: 1 (Week beginning Monday), 2 (Week beginning Tuesday), etc.

I often have many tool containers, which I have to constantly manage locations of so they do not overlap, etc. It would be much easier to have the option to have a tabbed tool container.

When building a big workflow, I often find that I need to cancel the run in order to tweak something, but I still want to know which tool was making the workflow slow or crash.

What would be useful in this circumstance is for performance profiling to work even on partially finished (e.g. cancelled) workflows. It could simply display a message reminding the user that the percentages cover only what has run so far.

Currently, if a user has multiple connections in a workflow that connect to a password-protected source and that password changes, the user's account will be locked out by the login attempts Alteryx makes as it validates each connection.

Today I had to manually edit the XML of another user's workflow in order to remove references to their server, so they could correct their password without locking the account for a third time today.

While I understand that aliases are a good workaround to this problem, the issue still has potential to occur.

Having an option to load a workflow in a "SECURE" or "SAFE" mode, where it would not validate a query until runtime or until the metadata is refreshed manually, would significantly reduce lockouts and improve the usability of the tool.

Currently, a password containing a ; or a | injects into (and breaks) the OLEDB/ODBC connection string for a server that requires a password. Other characters may cause this as well; these are the ones our company has found so far.

This lowers the security of passwords for our other systems by limiting which characters we can use.
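For reference, the ODBC connection-string grammar already lets a value containing `;` pass intact by enclosing it in braces, and some drivers (Microsoft's SQL Server ODBC driver, for example) expect a `}` inside a braced value to be doubled. The hedged Python sketch below shows that quoting. The helper names are hypothetical, driver support for doubled braces varies, and the `|` case depends on how Alteryx itself splits its connection strings, which this sketch does not address.

```python
# Characters the ODBC grammar says should not appear in an unbraced value.
ODBC_SPECIAL = set("[]{}(),;?*=!@")


def odbc_quote(value):
    """Wrap a value in braces if needed, doubling any closing brace inside it."""
    if not any(ch in ODBC_SPECIAL for ch in value):
        return value
    return "{" + value.replace("}", "}}") + "}"


def build_connection_string(attrs):
    """Join attributes as KEY=value pairs separated by semicolons."""
    return ";".join(f"{key}={odbc_quote(val)}" for key, val in attrs.items())
```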

I find the Run Command tool to be counter-intuitive: rather than supplying a required I/O parameter (in at least one of "Write Source" and/or "Read Results"), I would rather just use a "Block Until Done" approach to 1. write file, 2. issue custom system command, 3. read file. An even simpler example is the case where I don't need I/O to/from the system command... in that case, I just want to issue the command, nothing more. But the current tool will require me to specify a dummy file, which is counter-intuitive and also leaves that unnecessary file somewhere.

To fix this up without breaking existing user implementations, the "idea" is:

- Do not require either "Write Source" or "Read Result" ... allow both to be blank.

- Allow (but don't require) any of "Command," "Command Arguments," and "Working Directory" to be dynamically populated from fields in the data streamed into the tool.

So... any existing user implementation should be unaffected... but these changes would allow users to implement system commands in a more intuitive manner, and even allow for very dynamic system commands based on the workflow.
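A hedged Python sketch of the proposed behaviour, not the Run Command tool's implementation: the command, its arguments and the working directory are read from fields of one record, nothing is written to or read from a file, and only the command itself is required. The field names mirror the tool's configuration labels; `run_command_from_record` is hypothetical.

```python
import shlex
import subprocess


def run_command_from_record(record):
    """Run a command built from one record; arguments and directory are optional."""
    cmd = [record["Command"]]
    args = record.get("Command Arguments")
    if args:
        # POSIX-style splitting; Windows paths with backslashes would need care.
        cmd += shlex.split(args)
    completed = subprocess.run(
        cmd,
        cwd=record.get("Working Directory") or None,  # None = current directory
        capture_output=True,
        text=True,
        check=False,
    )
    return completed.returncode, completed.stdout
```

Running this once per incoming record is what would make the commands fully data-driven.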

Thanks!

There are cases where a batch macro is used for multiple tasks where not all of the control parameter inputs are available all of the time. To use a batch macro control parameter, it must be populated with a field, so sometimes a field containing a dummy value has to be created to work around this requirement.

It would be useful if control parameter inputs could be made optional, passing a NULL value when a field hasn't been provided and handling any NULLs passed in within the batch macro.

In version 10.5, if the taskbar is auto-hidden and Alteryx is the active window, you cannot access the taskbar by moving the mouse to the bottom of the screen.

You have to use the Windows key or switch to another application window.
