Alteryx Designer Desktop Ideas

Share your Designer Desktop product ideas - we're listening! Submitting an Idea?

Be sure to review our Idea Submission Guidelines for more information!


Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think it could be improved:

> by showing the dimensional constraints next to each of the pre-trained models,

> by adding a proper tool to split the training data correctly (so that there is an equal number of images for each label),

> and at least by allowing the tool to use black & white images (I wanted to test it on MNIST, but the tool tells me it requires RGB images).
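To make the "equal number of images per label" request concrete, here is a minimal Python sketch of one way to do it: downsample every label to the size of the smallest one, then split each label into train/test with the same proportion. This is not how the Image Recognition tool works internally; `balanced_split` and its parameters are hypothetical, and downsampling is only one of several balancing strategies.

```python
import random
from collections import defaultdict


def balanced_split(samples, test_fraction=0.2, seed=0):
    """Split (path, label) pairs so every label contributes the same number of images.

    Each label is downsampled to the size of the smallest label, then the
    same fraction of each label is held out for testing.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    n_per_label = min(len(paths) for paths in by_label.values())
    n_test = int(n_per_label * test_fraction)

    train, test = [], []
    for label, paths in sorted(by_label.items()):
        chosen = rng.sample(paths, n_per_label)  # downsample to the smallest label
        test.extend((p, label) for p in chosen[:n_test])
        train.extend((p, label) for p in chosen[n_test:])
    return train, test
```

For example, with 10 "cat" images and 6 "dog" images and `test_fraction=0.5`, each label ends up with 3 training and 3 test images; the 4 surplus cat images are left out.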

Question: will you, in the future, allow the user to choose between CPU and GPU usage?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think it will help more people understand the many use cases that image recognition makes possible.

Thank you again

Kévin VANCAPPEL (France ;-))


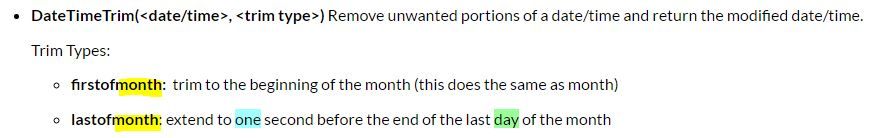

It was great to find the DateTimeTrim function when trying to identify future periods in my data set.

It would be even better if, in addition to "firstofmonth" and "lastofmonth", there were "firstofyear" and "lastofyear" options that would return, for instance, Jan 1 xxxx 00:00:00 and Dec 31 xxxx 23:59:59 (one second before the next Jan 1), respectively.

I'm not sure precision down to the second is even needed; precision down to the day would be enough.
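The requested behaviour can be sketched in Python as follows, assuming "firstofyear"/"lastofyear" would follow the same convention as "firstofmonth"/"lastofmonth" (start of the period at 00:00:00, end one second before the next period). These are not existing DateTimeTrim options, and the function names are hypothetical.

```python
from datetime import datetime, timedelta


def first_of_year(dt: datetime) -> datetime:
    """Jan 1 of dt's year at 00:00:00."""
    return datetime(dt.year, 1, 1)


def last_of_year(dt: datetime) -> datetime:
    """Dec 31 of dt's year at 23:59:59, i.e. one second before the next Jan 1."""
    return datetime(dt.year + 1, 1, 1) - timedelta(seconds=1)
```

Computing the end of the year as "next Jan 1 minus one second" avoids hard-coding the time of day and stays correct for any year.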

The overview window is useful with large workflows. Clicking once centers the canvas on the selected location. I think it would be great if you could double-click to center on that location and also zoom to the "Normal" zoom level. Quite often when working with large workflows you are zoomed out, and it would be helpful to jump quickly to a section of the workflow and automatically land at a zoom level where you can see the connection progress (without having to zoom in several times).

I'm using Alteryx with a Hadoop cluster, so I use lots of In-DB tools to build my workflows with the Simba Hive ODBC Driver.

My Hadoop administrator has set some default properties in order to share the cluster's resources among many users.

But some intensive workflow requests need to override some of these properties. For instance, I must adjust the size of the Tez container by setting specific values for hive.tez.container.size and hive.tez.java.opts.

A workaround is to set those properties in the Server Side Properties panel of the ODBC Data Source Administrator, but if I have many different configurations, I end up with a lot of ODBC data sources, which is not ideal.

It would be nice if I could set those properties directly in the Connect In-DB tool.
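Until the Connect In-DB tool supports this, one possible way to avoid a separate DSN per configuration is to keep a single DSN and override the properties in the connection string. The sketch below assumes the Simba Hive ODBC driver's `SSP_<property>=<value>` convention for server-side properties and assumes your In-DB connection accepts a full ODBC connection string; check both against the driver's installation guide. The helper name is hypothetical and the values in the test are examples, not recommendations.

```python
def build_hive_connection_string(dsn, server_side_props):
    """Build an ODBC connection string that overrides Hive properties per connection.

    Assumption: the driver reads keys prefixed with SSP_ as server-side
    properties. Values containing ';' or '=' would need extra quoting,
    which this sketch does not handle.
    """
    parts = [f"DSN={dsn}"]
    for key, value in server_side_props.items():
        parts.append(f"SSP_{key}={value}")
    return ";".join(parts) + ";"
```

This keeps one DSN and moves the per-workflow tuning (such as hive.tez.container.size and hive.tez.java.opts) into the connection itself.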

I find this page very helpful to understand how each tool works: Alteryx Tools

It would be really great if Alteryx also provided a working example for each tool, for an effective hands-on experience!

Hi All,

While using the Join tool, I have run across the following, which I believe would be helpful if included in the standard Join tool:

1) Joining two data sets on Null values should be optional (or removed). Null generally means the value is unknown, so treating two unknown values as equal seems like a logical error unless specified otherwise.

2) Compress whitespace: I have come across data sets from two entities that are identical except for whitespace, so an option to compress multiple whitespace characters into one would help.

3) Case sensitivity/insensitivity: users commonly convert the join columns to upper or lower case, but IT developers end up writing more code and sometimes creating new fields just for joining.

4) Null matches non-null: sometimes the requirement is that, when the join succeeds on a particular key column, a null and a non-null value should be considered a match (but not two unequal non-null values).

5) Removal of junk characters: there should be a way to remove junk characters from the columns used in the join.

Any or all of the above could be made available as additional options alongside the settings available today.
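The five options above amount to normalizing each key before comparing it, plus explicit rules for nulls. A minimal Python sketch of that logic follows; it is an illustration of the proposal, not the Join tool's actual behaviour. The option names are hypothetical, and "junk" is assumed here to mean any character that is neither a word character nor whitespace.

```python
import re

_JUNK = re.compile(r"[^\w\s]")  # assumed definition of "junk" characters
_SPACES = re.compile(r"\s+")


def normalize_key(value, *, compress_whitespace=True, case_insensitive=True, strip_junk=False):
    """Apply options 2, 3 and 5 to a single key value; None stays None."""
    if value is None:
        return None
    s = str(value)
    if strip_junk:
        s = _JUNK.sub("", s)
    if compress_whitespace:
        s = _SPACES.sub(" ", s).strip()  # also trims leading/trailing spaces
    if case_insensitive:
        s = s.casefold()
    return s


def keys_match(left, right, *, null_matches_null=False, null_matches_non_null=False, **opts):
    """Decide whether two key values match, with explicit null rules (options 1 and 4)."""
    a, b = normalize_key(left, **opts), normalize_key(right, **opts)
    if a is None and b is None:
        return null_matches_null
    if a is None or b is None:
        return null_matches_non_null
    return a == b
```

Junk removal runs before whitespace compression so that removing a character cannot leave a double space behind; trimming the ends is a design choice that a real option might expose separately.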

Thanks,

Rohit Bajaj

Hi All,

It is a given that IT will have invested time and effort building workflows and other components with tools that have since been deprecated in the latest versions.

It is good that the deprecated versions are still available for backward compatibility, but there should also be an option to promote a deprecated tool to its new replacement without impacting the workflow.

The benefits of this approach are:

1) IT teams can take advantage of the new tools over the existing, deprecated ones. For example, I use the Salesforce connectors extensively, and I believe that, unlike the existing ones, the new ones use the Bulk API and are therefore much faster.

2) It will save IT from reconfiguring or recoding existing workflows, which would save considerable time.

3) As the product moves forward, it might make sense to remove some of the deprecated tool versions (I assume the plan is not to keep, say, 5 working sets of Salesforce Input connectors, including deprecated ones). With a promote option in place, IT would be comfortable with the removal of deprecated connectors, since they could promote without impacting existing workflows, so the change would ideally take minimal time.

In addition, if some configuration settings have changed with the new tools (ideally only minor ones), those changes can be published, and IT can be given the option to configure them as part of the promotion.

Thanks,

Rohit Bajaj

So, best practice is to add annotations. But how many times have you seen an annotation that says

"This filters for column y" when the filter has since been changed to something else?

Alteryx provides some default annotations, but wouldn't it be amazing if the tool's metadata fields were easy to include in your own annotations?

I imagine this working like so:

On an input tool:

"The file {filename} comes in from Joe in accounts every Tuesday and is currently {rows} big."

Filter tool

"This filters out the salaries above 100k with the formula {expression}"

Join

"I join using the {fields} fields rather than the typical key because the key isn't well populated"

Union

"I combine {IncomingNames} sets"

Formula

"The expression {expression1} calculates the exposure then {expression2} converts to bps."

Input, SQL Server

"The data source {databasename} is owned by Joe Soap in Investment"

Suddenly it makes sense to use annotations: they don't lie (as easily) or mislead you into believing something other than the actual logic, and you keep the flexibility to choose which parts of the tool's metadata make sense to you.

Alteryx must already have this functionality under the hood for auto-annotation; this just makes it more flexible.
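The templating itself is simple; here is a minimal Python sketch of the idea. It is not an existing Alteryx feature: `render_annotation` is hypothetical, and the metadata dictionary stands in for whatever configuration values a tool would expose. Unknown placeholders are left visible rather than raising an error, so a stale template is easy to spot.

```python
class _KeepMissing(dict):
    """Mapping that leaves unknown {placeholders} untouched instead of raising KeyError."""

    def __missing__(self, key):
        return "{" + key + "}"


def render_annotation(template, metadata):
    """Fill {field} placeholders in an annotation from a tool's (hypothetical) metadata."""
    return template.format_map(_KeepMissing(metadata))
```

For example, `render_annotation("This filters out the salaries above 100k with the formula {expression}", {"expression": "[Salary] > 100000"})` fills in the current filter expression. The sketch only handles plain `{name}` placeholders, not format specifications such as `{rows:,}`.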

In SQL, obtaining partial sums in a grouped aggregation is as simple as adding "WITH ROLLUP" to the GROUP BY clause.

Could we get a "WITH ROLLUP" checkbox in the Summary tool's configuration panel in order to produce partial sums?

(This was initiated as a question here.)

Here is an example, in SSMS, of a query without rollup vs. with rollup:

Replace "NULL" with a phrase such as "(any)", and we have a very useful set of partial sums.
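Here is a minimal Python sketch of what such a checkbox would compute, mirroring SQL's `GROUP BY a, b WITH ROLLUP`: for each row, add its value to the full group and to every group obtained by replacing trailing grouping fields with "(any)". The function and field names are hypothetical.

```python
from collections import defaultdict

ANY = "(any)"  # label for a rolled-up level, in place of SQL's NULL


def summarize_with_rollup(rows, group_fields, sum_field):
    """Sum sum_field per group plus hierarchical subtotals, like GROUP BY ... WITH ROLLUP.

    For group_fields (a, b) the result has keys (a, b), (a, ANY) and (ANY, ANY).
    """
    totals = defaultdict(int)
    n = len(group_fields)
    for row in rows:
        key = tuple(row[f] for f in group_fields)
        # level n is the full group; each lower level rolls up one more trailing field
        for level in range(n, -1, -1):
            rolled = key[:level] + (ANY,) * (n - level)
            totals[rolled] += row[sum_field]
    return dict(totals)
```

Like ROLLUP (and unlike CUBE), this only rolls up trailing fields, so there is no `(ANY, b)` subtotal. Using a text label instead of NULL means a real data value of "(any)" would collide with the subtotal rows.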

I'm currently creating an app that uses interface tools to control multiple workflows. It would be nice if I didn't have to physically drag each interface tool connection to the receiving node. For example, right now I can click on the Left input of a Join tool and get the option to make the connections into or out of that tool wireless. It would be nice if I could also right-click and select from a list of incoming interface tool connections.

Aliases should work with the Google Sheets Input/Output tools, so that I can enter API keys in one place and use them across multiple tools. I know I can do this via macros, but I also know it's best practice to avoid nesting macros.

We are enjoying the new ability to point the Input Data tool at a .zip file and read .csv files without having to unzip it manually. We process a lot of SQLite files, so having that as an option would be great. Several of our clients also supply zipped flat fixed-length files with 100+ fields that we have already mapped, so being able to use the Flat File Layout tool and import the existing mapping file would also be very helpful. The current workaround is to read the file as a CSV with \0 as the delimiter, then use substrings to parse and rename each field.
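For comparison, here is a minimal Python sketch of the fixed-length case: read a layout mapping once, then slice each line of a file inside a .zip archive by position. The mapping format (a CSV with `name,start,length` and 1-based start positions) is an assumption for illustration, not the Flat File Layout tool's format, and all function names are hypothetical.

```python
import csv
import io
import zipfile


def read_layout(layout_csv_text):
    """Parse a hypothetical mapping file: CSV with columns name,start,length (start is 1-based)."""
    reader = csv.DictReader(io.StringIO(layout_csv_text))
    return [(r["name"], int(r["start"]) - 1, int(r["length"])) for r in reader]


def parse_fixed_width(line, layout):
    """Slice one record into named, whitespace-trimmed fields using 0-based offsets."""
    return {name: line[start:start + length].strip() for name, start, length in layout}


def read_zipped_fixed_width(zip_file, member, layout, encoding="utf-8"):
    """Yield parsed records from a fixed-width text file stored inside a .zip archive.

    zip_file can be a path or a binary file-like object; nothing is extracted to disk.
    """
    with zipfile.ZipFile(zip_file) as zf:
        with zf.open(member) as raw:
            for line in io.TextIOWrapper(raw, encoding=encoding):
                yield parse_fixed_width(line.rstrip("\r\n"), layout)
```

Because the layout is data rather than code, the 100+ field mapping only has to be maintained in one file, instead of one substring expression per field.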

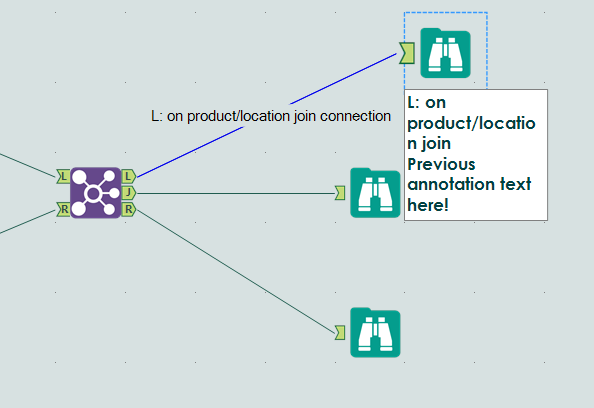

I find that when I'm using Alteryx, I'm constantly renaming the tool connectors. Here's my logic, most of the time:

I have something like a Join and 3 browses.

- I name the L join something like "L: on product/location join"

- I then copy that descriptor and paste it into the Annotation field

- I then copy that descriptor again, select the connector wire, and paste it into the connection configuration

MY VISION:

Have a setting where I could select the following options:

- Automatically annotate based on tool rename

- Automatically rename incoming connector based on tool rename

If I rename a tool and "Automatically annotate based on tool rename" is enabled, the new name is inserted at the top of the annotation field; any existing text in that field is shifted down. If I rename a tool and "Automatically rename incoming connector..." is enabled, the connection coming into it gets [name string]+' connection' in its name field. I included a picture of the end result I'm after.

Thanks for your ear!

It would be nice if, when Alteryx crashes, an autosave capability showed you the list of autosaved workflows when you reopen Alteryx and gave you the immediate option to select which ones to keep, similar to what Excel does.

I know about the current autosave feature, but I would still like it to pop up (or have the option to make it pop up) so I can select which workflows to keep and which to discard.

As I'm sure many users do, we schedule our workflows to run outside business hours, overnight and over the weekend. Our primary data source (Input tool) is a remotely hosted database that our organization doesn't maintain (and therefore cannot monitor). If the database were to time out, or if our query were to overload its resources, our scheduled Alteryx workflow would (attempt to) keep running for an unknown amount of time. To prevent this, we would like the ability to cancel a scheduled workflow once it has been running for a set amount of time.
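Outside the Scheduler, the same "cancel after N seconds" rule can be sketched with Python's standard library. The command being launched is a placeholder for however you start the workflow from a script; nothing here is an Alteryx API, and `run_with_timeout` is a hypothetical helper.

```python
import subprocess
import sys


def run_with_timeout(command, timeout_seconds):
    """Run a command and kill it if it runs longer than timeout_seconds.

    Returns the exit code, or None if the run was cancelled for exceeding the limit.
    """
    try:
        completed = subprocess.run(command, timeout=timeout_seconds)
        return completed.returncode
    except subprocess.TimeoutExpired:
        # subprocess.run has already killed and reaped the child process at this point
        return None


if __name__ == "__main__":
    # Demo: a 5-second sleep is cancelled after 1 second, so this prints None.
    slow = [sys.executable, "-c", "import time; time.sleep(5)"]
    print(run_with_timeout(slow, 1))
```

Note that killing the local process does not necessarily stop a query that is already running on the remote database; that may still need a server-side timeout.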

I was trying to check the validity of multiple URLs by connecting the Download tool to a parsing tool, so that I could check the download status and filter the records into good and bad based on the HTTP status codes. To my surprise, the tool allows a maximum of 2 errors and then stops checking the remaining records, which is not at all useful for me. I asked whether someone could help, and as @JordanB said, this is the default behavior of the tool and can't be changed at the moment. I hope you add error handling in a future release.
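The behaviour being asked for (record every status and keep going) looks roughly like this in Python using only the standard library. This is an illustration, not the Download tool; `check_urls` is hypothetical, and treating 2xx/3xx as "good" is an assumption you may want to change.

```python
import urllib.error
import urllib.request


def check_urls(urls, timeout=10):
    """Return (good, bad) lists of (url, status), checking every URL even after errors."""
    good, bad = [], []
    for url in urls:
        try:
            with urllib.request.urlopen(url, timeout=timeout) as resp:
                status = resp.status
        except urllib.error.HTTPError as err:  # server answered with 4xx/5xx
            status = err.code
        except (urllib.error.URLError, ValueError, OSError) as err:  # DNS failure, bad URL, timeout
            status = f"error: {err}"
        is_ok = isinstance(status, int) and 200 <= status < 400
        (good if is_ok else bad).append((url, status))
    return good, bad
```

Each failure is caught per URL and recorded as data, so one bad record never stops the rest of the list from being checked.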

When testing, I often need to check a single number (or a handful of numbers) throughout the workflow, and I have to click on each Browse tool to check them. A tool that displayed the values of multiple fields from across the workflow would let me see at a glance whether I was dropping rows or miscalculating.

For instance, say that at the beginning of a workflow the row count was 15,951 and the cohort size was 328, the sum of profit for the cohort was £1,934,402, and the count of sales was 1,584. Remember those? Not if you have to click on Browse tools over and over all the way through a large workflow to make sure these figures stay intact. Copying the data out into Excel or popping out the Browse data are the only options, and each is fiddly when you're trying to change things quickly.

A resizable output window, like the Explorer window, would be uber-useful.
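As a rough Python illustration of the "control totals" idea: record a few figures at named stages, then list only the ones that changed relative to a baseline stage. The class, stage names and metrics are hypothetical and only show the comparison logic such a window would need.

```python
class Checkpoints:
    """Record control totals at named stages and report which ones drift from a baseline."""

    def __init__(self):
        self.stages = {}

    def record(self, stage, rows, **totals):
        """Store the row count plus any named totals, each computed by a function of the rows."""
        self.stages[stage] = {"rows": len(rows), **{name: fn(rows) for name, fn in totals.items()}}

    def drift(self, baseline, stage):
        """Return {metric: (baseline_value, stage_value)} for metrics that changed."""
        base, cur = self.stages[baseline], self.stages[stage]
        return {k: (base[k], cur.get(k)) for k in base if base[k] != cur.get(k)}
```

Comparing against a baseline, rather than showing every figure at every stage, keeps the output focused on the places where rows were dropped or totals changed.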

Thanks

I'm running into an issue when using the keyboard to make a selection in some dropdown lists in tool configurations. I've replicated it in the Join, Join Multiple, and Filter tools (the Sort tool worked as expected). Running version 10.6.

- Join tool configuration.

- From "Left" dropdown start typing to select a column.

- Click off the "Left" dropdown (into "Right" for example).

- Notice that your selection in "Left" is maintained.

- Now click on another tool or on the canvas.

- Click back to the Join tool. Notice that the "Left" selection has reverted to the original field and your typed selection is lost.

- Note: you can "commit" your selection by hitting "Enter" after typing, but I don't think this extra step should be required (as evidenced by the Sort tool).

This small misstep can have a SIGNIFICANT impact if developers assume their join field selection was set correctly when in fact it changed without them noticing, resulting in incorrect joins.

See attached video for quick walkthrough.

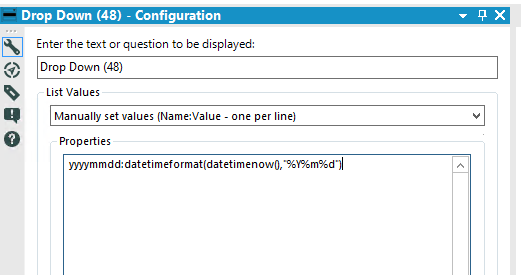

Please add the ability to use formulas with the "Manually set values" option in the Drop Down tool.

Screenshot below shows an example:

When users who don't know a field's API Name try to pull data from Salesforce, it can be problematic. A simple solution would be to add the Field Label to the query window, so users can pick fields by either API Name or Field Label.

When pulling data from Oracle and pushing it to Salesforce, we often have an ID field in Oracle and the same ID stored in Salesforce in what is called an external ID field. Allowing us to match against those external ID fields would save us a lot of time and spare us from querying the entire Salesforce object just to pull out the IDs of the records we need.


