Alteryx Designer Desktop Ideas

Featured Ideas

Hello,

After using the new "Image Recognition Tool" for a few days, I think you could improve it:

> by adding the dimensional constraints in front of each of the pre-trained models,

> by adding a true tool to divide the training data correctly (in order to have an equivalent number of images for each of the labels)

> by at least allowing the tool to use black-and-white images (I wanted to test it on MNIST, but the tool tells me that it requires RGB images).
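The balanced split asked for in the second point could look roughly like the sketch below. It is plain Python, not part of the Image Recognition tool; the function name `balanced_sample` and the `(path, label)` pair layout are assumptions made for illustration.

```python
import random
from collections import defaultdict

def balanced_sample(items, seed=0):
    """Downsample (path, label) pairs so every label keeps the same number of images."""
    by_label = defaultdict(list)
    for path, label in items:
        by_label[label].append(path)
    # The smallest class decides how many images each label keeps.
    per_label = min(len(paths) for paths in by_label.values())
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    balanced = []
    for label in sorted(by_label):
        for path in rng.sample(by_label[label], per_label):
            balanced.append((path, label))
    return balanced
```

Downsampling to the smallest class is only one way to balance; oversampling the rare labels is an alternative when images are scarce.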

Question: will you allow the user to choose between CPU and GPU usage in the future?

In any case, thank you again for this new tool. It can certainly be improved, but it is very simple to use, and I sincerely think that it will help a greater number of people understand the many use cases made possible by image recognition.

Thank you again

Kévin VANCAPPEL (France ;-))

It would be incredibly helpful if Alteryx canvases auto-populated some metadata about each canvas to track its origination and updates.

The metadata fields I'm specifically thinking about are:

-Author

-Date Created

-Date Last Updated

Labels: API SDK, Category Developer

Hello gurus -

Pretty much every coding framework supports transaction control. If we really want Alteryx to embrace no-code, we've got to have some ability to control commits and rollbacks across transactions. As it stands currently, it is pretty easy to write out parent records, fail to write out the children, and wind up with a database state that makes the end users very sad.
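For readers less familiar with the idea, here is a minimal sketch of the commit / rollback behaviour being requested, written with Python's built-in `sqlite3` module. The `parent` and `child` tables and both function names are made up for the example; this is not how Alteryx writes to databases.

```python
import sqlite3

def make_demo_db():
    """Create an in-memory database with a hypothetical parent/child schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE parent (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    conn.execute(
        "CREATE TABLE child (id INTEGER PRIMARY KEY, "
        "parent_id INTEGER NOT NULL, name TEXT NOT NULL)"
    )
    conn.commit()
    return conn

def write_parent_and_children(conn, parent_name, child_names):
    """Insert a parent and its children atomically: all rows commit or none do."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            cur = conn.execute("INSERT INTO parent (name) VALUES (?)", (parent_name,))
            conn.executemany(
                "INSERT INTO child (parent_id, name) VALUES (?, ?)",
                [(cur.lastrowid, name) for name in child_names],
            )
        return True
    except sqlite3.IntegrityError:
        return False
```

If any child insert fails, the parent insert is rolled back with it, so no orphaned parent record is left behind.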

Thanks!

brian

Hello AlteryxDevs -

Back when I used to do more coding, some of the ORMs could return the natively generated primary key for newly created rows. This could be really useful in situations where you want or need to create a parent / child relationship, or need to pass the value on to another process for some reason.

As it stands now, the mechanism to achieve this in Alteryx is kind of clunky; all I have been able to figure out is the following:

1) Block until done 1.

1a) Create parent record. Hopefully it has an identifying characteristic that can be attached to.

2) Block until done 2.

2a) Use a dynamic select to go get the parent record and get the id generated by the database.

3) Block until done 3.

3a) Append your primary key found in 2a. Create your children records.

I mean it works. But it is clunky, not graceful, and does not give you any control over the transaction, though that is kind of a more complicated feature request.
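As a point of comparison, this is roughly what "give me back the generated key" looks like in code. The sketch uses Python's built-in `sqlite3` module, where the cursor exposes the new key as `lastrowid`; the table names and the `insert_parent` helper are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE parent (id INTEGER PRIMARY KEY AUTOINCREMENT, name TEXT)")
conn.execute(
    "CREATE TABLE child (id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "parent_id INTEGER, name TEXT)"
)

def insert_parent(conn, name):
    """Insert one parent row and return the key the database generated for it."""
    cur = conn.execute("INSERT INTO parent (name) VALUES (?)", (name,))
    return cur.lastrowid

# The returned key is used directly for the children; no second lookup is needed.
parent_id = insert_parent(conn, "invoice")
conn.executemany(
    "INSERT INTO child (parent_id, name) VALUES (?, ?)",
    [(parent_id, "line 1"), (parent_id, "line 2")],
)
conn.commit()
```

This replaces steps 2 and 2a above: the key comes back from the insert itself rather than from a follow-up select.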

Thanks!

When training people on the use of action tools, something that I always have to hit on is that when you are telling the tool which piece of the XML that you are adjusting, it's sort of difficult to tell what you have selected, and super easy to accidentally select something else.

Example:

When you initially select the action to take it's this nice Blue Color. However, it still doesn't feel exactly like you have actually selected anything or told the Action Tool what to do, since it's so easy to just select any other one of these actions.

A slightly different problem is that if you are selecting an action that has been previously configured, it is just this light grey color. So it can be easy to accidentally change your settings because you may not realize it's actually set up.

Here is a recent community post that sort of outlines a few of these problems.

The Download tool allows for encrypted SFTP connections, but I recently discovered (the hard way) that the Alteryx capabilities are incomplete and the algorithms not fully up to date. Just adding an additional updated algorithm or two to the 4 available for message authentication would bring it up to date.

As back story, our firm has onboarded a new SFTP server, and all of a sudden my Alteryx SFTP workflows didn't work when I pointed them at the new server. After going back and forth extensively with the helpful folks at Alteryx, we discovered there's a gap in Alteryx's current capabilities.

Basically, the Alteryx download tool can use the old encryption algorithm and half of the new version, and half of the new version is like having half a bridge.

Up until 2017, SHA-1 was the most common hash used for cryptographic signing. Since then it has slowly been supplanted by SHA-2.

Alteryx can use SHA-2 for key exchanges, but not for message authentication (the HMAC algorithm). The internet seems to swear up and down that the old SHA-1 algorithm works just fine for message authentication, but I don't have the luxury of caring about that. All I know is that as of March 2019 the SFTP server I have to connect with has deprecated Alteryx's SHA-1 algorithm as being too out of date and only allows the new SHA-2 message authentication.

Alteryx CAN use the up to date SHA-2 for key exchange (GOOD, halfway there!) but can only use (old) ways of doing message authentication that do NOT include SHA-2 (NOT GOOD!). Please add updated SHA-2 algorithms (hmac-sha2-512, hmac-sha2-256) to the HMAC mix too!
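To illustrate what "message authentication" means here, the sketch below computes an HMAC tag with SHA-1 and with the SHA-2 family using Python's standard `hmac` module. It is not an SSH implementation and says nothing about how the Download tool negotiates algorithms; the helper names are made up for the example.

```python
import hmac

def message_tag(key: bytes, message: bytes, algorithm: str = "sha256") -> str:
    """Return the hex HMAC of `message` under `key` using the named hash."""
    return hmac.new(key, message, digestmod=algorithm).hexdigest()

def verify(key: bytes, message: bytes, tag: str, algorithm: str = "sha256") -> bool:
    """Check a tag in constant time to avoid leaking where a mismatch occurs."""
    return hmac.compare_digest(message_tag(key, message, algorithm), tag)

key, packet = b"session-key", b"sftp packet payload"
tag_sha1 = message_tag(key, packet, "sha1")      # 160-bit tag (the older option)
tag_sha256 = message_tag(key, packet, "sha256")  # hash used by hmac-sha2-256
tag_sha512 = message_tag(key, packet, "sha512")  # hash used by hmac-sha2-512
```

The construction is the same in all three cases; only the underlying hash changes, which is why a server can insist on the SHA-2 variants without changing anything else about the protocol.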

Many thanks,

Josiah

Labels: API SDK, Category Developer

Similar to how there is functionality to use pip through the ayxinstallPackages, there needs to be a way to upgrade Python itself. There are important packages such as keras that have errors in Python 3.6 that are not present with 3.7, so it should really be up to the user which Python version to use. Another solution could be to let the user point to their own local installation of Python, so that they can keep their local site-packages consistent with the one that Alteryx has.

Labels: API SDK, Category Developer

With an increasing number of different projects, involving different machine learning models, it's becoming difficult to manage different package versions across workflows. Currently, the Python tool has a single virtual environment, so we need to develop models in different projects always using the same Python and package versions as the Python tool venv. While this doesn't bother the code itself too much, it becomes a problem as soon as we store and load pickled models, which are sensitive to even minor changes in packages.

This is even more so a problem when we are working on the Alteryx server, where different teams might use different packages. Currently, there is only the server admin who can install packages on the server and there can only be one version per package.

So, a more robust venv management in the Python tool would be much appreciated!
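Until something like that exists, one possible stopgap is to record package versions next to the pickled model and refuse to load it when they differ. The sketch below assumes Python 3.8 or later (for `importlib.metadata`); all three function names are hypothetical, and it is not a feature of the Python tool.

```python
import pickle
from importlib import metadata

def installed_versions(packages):
    """Map each package name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions

def dump_with_versions(model, packages):
    """Pickle the model together with the versions it was built against."""
    return pickle.dumps({"versions": installed_versions(packages), "model": model})

def load_checked(blob, current=None):
    """Unpickle only if the recorded versions match the current environment."""
    payload = pickle.loads(blob)
    saved = payload["versions"]
    current = installed_versions(saved) if current is None else current
    mismatches = {
        name: (version, current.get(name))
        for name, version in saved.items()
        if current.get(name) != version
    }
    if mismatches:
        raise RuntimeError(f"package versions changed since pickling: {mismatches}")
    return payload["model"]
```

This does not solve the underlying problem of a single shared environment, but it turns a silent incompatibility into an explicit error.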

Labels: API SDK, Category Developer

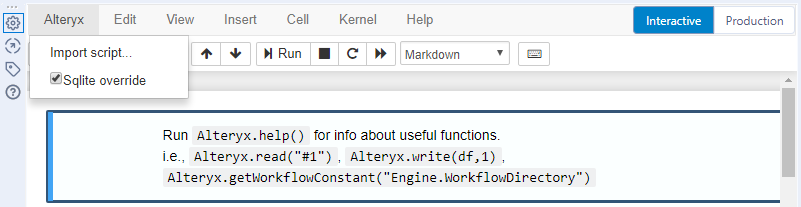

When reading and writing large data frames to/from a python script in Alteryx it seems that there are limitations to the SQLite component of the tool. Given that this selection is recommended only when the user is having issues in the python tool why is the option selected by default? A colleague and I spent a couple of hours trying to work through an issue with importing a data frame larger than 1000x1000 and once we found this option (SQLite override) and unchecked it the data was written back to Alteryx without any problems.

Hint provided by the tool, "This changes the intermediate data format between Alteryx and Jupyter from yxdb to SQLite. Use only if running into issues. See help for more details."

Error message provided by the tool

After unchecking the option the workflow ran without any errors.

Recommendation: the python tool should default to SQLite override unchecked

Labels: API SDK, Category Developer, Tool Improvement

Why doesn't Alteryx build a "Designer extended edition"?

An Alteryx designer download that contains every available

- R package and

- Python package available in the universe to date of release...

Labels: API SDK, Category Developer

In a scenario where I pass multiple files to the Download tool (to upload to an FTP server), the data in the workflow gets cut off with the message "ended by a downstream tool". This leads to only one file being uploaded (out of the two I am trying to upload).

Based on community posts, this seems to be because I am not outputting anything in the workflow or do not have any Browse tools (Browses are automatically turned off because I am using Crew Macro's List Runner).

BUG: The Download tool should count as an output tool because it is indeed outputting.

BUG 2: If Browses are disabled but they exist in the workflow, the workflow should still create all the records.

Labels: API SDK, Category Developer

Hi - I think it would be great to only have to open one debug window, so that when I add to my workflow, the debug view automatically updates to include the new parts of the workflow.

As it is now, I believe I have to open a new debug window whenever I add new components to my workflow.

Labels: API SDK, Category Developer

1) Add cURL Options support from within the Download Tool

The Download tool allows adding Header and Payload (data), which are key components of the cURL command structure. However, there is no avenue to include any of the cURL options in the Download tool. Most of the 'solutions' found have been to abandon the use of the Download tool and run the cURL EXE through the Run Command tool. However, that introduces many other issues such as sending passwords to the Run Command tool, etc. etc.

With the variation and volume of connection requests that are being funneled through the Download tool, users are really looking for it to have the flexibility to do what they need it to do with the Options that they need to send to cURL.

2) Update cURL version used by Alteryx Download tool to a recent version that allows passing host keys

cURL added the option to pass host keys starting in version 7.17.1 (available 10/29/2007). The option is --hostpubmd5 <md5>:

https://curl.haxx.se/docs/manpage.html

And it appears that the cURL shipped with Alteryx is 7.15.1 (from 12/7/2005):

PS C:\Program Files\Alteryx\bin\RuntimeData\Analytic_Apps> .\curl -V

curl 7.15.1 (i586-pc-mingw32msvc) libcurl/7.15.1 zlib/1.2.2

Protocols: tftp ftp gopher telnet dict ldap http file

Features: Largefile NTLM SSPI libz
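The version check above can be automated. This is a small sketch that parses the first line of `curl -V` output and compares it with 7.17.1, the release cited in this post for `--hostpubmd5`; the function names are made up for the example.

```python
import re

def curl_version(banner: str):
    """Extract (major, minor, patch) from the first line of `curl -V` output."""
    match = re.match(r"curl (\d+)\.(\d+)\.(\d+)", banner)
    if match is None:
        raise ValueError("not a curl version banner")
    return tuple(int(part) for part in match.groups())

def supports_hostpubmd5(banner: str) -> bool:
    """True when the reported version is at least 7.17.1 (the release cited above)."""
    return curl_version(banner) >= (7, 17, 1)

banner = "curl 7.15.1 (i586-pc-mingw32msvc) libcurl/7.15.1 zlib/1.2.2"
shipped_is_new_enough = supports_hostpubmd5(banner)
```

Comparing integer tuples rather than strings matters here: as strings, "7.9.0" would sort after "7.17.1".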

Thanks for considering!

Cameron

The Python tool has been a tremendous boon in being able to add capability that is not yet available in the Alteryx platform.

It would make the Python tool much more usable and useful if you could define the inputs explicitly, rather than relying on the good behaviour of both the user and the Python code that reads the inbound data (Alteryx.Read('#1')).

This is not something that the Jupyter notebook code interface can handle directly (because the Jupyter notebook has no privileged knowledge of the workflow outside it), so it may be best handled by the container itself.

The key here is that if my python app requires 2 inputs - it should be possible to define these explicitly so that we can test; and also so that we can prevent errors and make this more bullet-proof.

The same would apply on the outbound nodes for the Python tool.
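In the meantime, a script can declare its expected inputs itself and fail early when they are missing. The sketch below is one way to do that; `load_declared_inputs` is a hypothetical helper, and the `read` argument stands in for whatever function the tool provides for reading a connection, so nothing here assumes a particular Alteryx API.

```python
def load_declared_inputs(read, declared):
    """Read every declared connection and fail early with one clear message.

    read     -- callable taking a connection name such as "#1"
    declared -- dict mapping connection name to the columns it must provide
    """
    frames, problems = {}, []
    for name, required_columns in declared.items():
        try:
            frame = read(name)
        except Exception as exc:  # the real reader's exception type is not assumed
            problems.append(f"{name}: could not be read ({exc!r})")
            continue
        # `in` tests column membership for both dicts and pandas DataFrames.
        missing = [col for col in required_columns if col not in frame]
        if missing:
            problems.append(f"{name}: missing columns {missing}")
        frames[name] = frame
    if problems:
        raise ValueError("; ".join(problems))
    return frames
```

Placing a call like this at the top of the script gives a single, readable error listing every missing connection or column, instead of a failure somewhere in the middle of the code.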

Labels: API SDK, Category Developer, Feature Request, General

One of the common things that we need to do, is to take a delta-copy of a file or a DB table into the staging area of the analytical database.

This always looks very similar - so it would be useful to make this a wizard based process so that teams can easily build these very quickly rather than having to hand wrap:

Process:

- Check which primary keys exist - fill the gaps where they don't

- Are there any rows that update over time (or is this insert-only) - if they update over time, which column is the "updated date" column so that we can spot updates - if there is no update date; then we need to do a column by column check of some kind (like a hash or a checksum)

- Do you want to sync deletes?

- Do you want to keep updates?

Outputs:

- Target table in staging area which is now updated compared to the source

- Logging done (similar to what Kimball recommends in the ETL Handbook) with the run date/time; summary stats; and any errors

- Errors table for any errors that arose with row numbers

- Tables in target created (with history table if requested)
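The core of the process above (key comparison plus a checksum when there is no "updated date" column) can be sketched in a few lines of Python. The function names, the `id` key column, and the use of SHA-256 are illustrative assumptions, not a description of any existing Alteryx feature.

```python
import hashlib

def row_hash(row: dict) -> str:
    """Checksum of a row's values, used when there is no "updated date" column."""
    canonical = "|".join(f"{key}={row[key]!r}" for key in sorted(row))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def plan_delta(source_rows, target_rows, key="id"):
    """Classify primary keys into inserts, updates and deletes."""
    source = {row[key]: row_hash(row) for row in source_rows}
    target = {row[key]: row_hash(row) for row in target_rows}
    return {
        "insert": sorted(k for k in source if k not in target),
        "update": sorted(k for k in source if k in target and source[k] != target[k]),
        "delete": sorted(k for k in target if k not in source),
    }

source = [{"id": 1, "v": "a"}, {"id": 2, "v": "b2"}, {"id": 4, "v": "d"}]
target = [{"id": 1, "v": "a"}, {"id": 2, "v": "b"}, {"id": 3, "v": "c"}]
plan = plan_delta(source, target)
```

Whether the "delete" list is applied corresponds to the "sync deletes?" question, and whether replaced rows are copied to a history table first corresponds to "keep updates?".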

Hello All,

We are new to Alteryx, and we can see that the supported data sources from IBM are as follows:

- IBM DB2

- IBM Netezza/Pure Data Systems

- IBM SPSS

How about adding IBM Sterling to this?

We would like Alteryx to support connections to IBM Sterling OMS, which would help us meet our business requirements.

Can anyone post some suggestions on this? How can we connect to Sterling?

Thanks,

Praveen C

When passing a data connection to the Dynamic Input Tool as a string and using the 'Change Entire File Path' option, the password parameter of the connection string is not encrypted and is displayed in the metadata source information.

We have since changed our macro that was using this method, but wanted to raise awareness of this situation. I suggest that the same procedure used to encrypt the password in all other connection methods be called if the workflow is configured to pass a password through the input as a string.
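As a rough illustration of the display-side part of the problem, the sketch below masks the password parameter of a connection string before it is shown. Masking is not encryption, the `pwd` / `password` parameter names are an assumption about the string's format, and values that themselves contain a semicolon are not handled.

```python
import re

# Matches pwd=... or password=... up to the next semicolon, case-insensitively.
_PASSWORD = re.compile(r"(?i)\b(pwd|password)\s*=\s*[^;]*")

def mask_password(connection_string: str) -> str:
    """Replace the value of any pwd= or password= parameter before display."""
    return _PASSWORD.sub(lambda m: f"{m.group(1)}=****", connection_string)

masked = mask_password("odbc:DSN=Sales;UID=me;PWD=hunter2;")
```

The example connection string is invented; real strings should be checked against whatever format the data source actually uses.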

Labels: API SDK, Category Developer

Per my initial community posting, it seems that in environments where the firewall blocks pip the YXI installation process takes longer than it needs. My experience was 9:15 minutes for a 'simple' custom tool (one dependency wheel included in the YXI).

Given the helpful explanation of the YXI installation process, it seems the --upgrade pip and setuptools is causing the delay. Disconnecting from the internet entirely causes the custom YXI to install in 1:29 minutes.

My 'Idea' is to provide a configuration option to install the YXI files 'offline'. That is, to skip the pip install --upgrade steps, and perhaps specify the --find-links and --no-index options with the pip install -r requirements.txt command. The --no-index option would assume that the developer has included the dependency wheel files in the YXI package. If possible, a second config option to add the path to the dependencies for the --find-links option would help companies that have a central location for storing their dependencies.
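A sketch of the command such an 'offline' option might build is shown below. `--no-index`, `--find-links` and `-r` are standard pip options; the function name is hypothetical, and `sys.executable` is only a stand-in, since which interpreter the YXI installer actually invokes is not assumed here.

```python
import sys

def offline_install_command(requirements, wheel_dirs):
    """Build a pip command that resolves only from local wheel directories."""
    # --no-index stops pip from contacting a package index at all.
    command = [sys.executable, "-m", "pip", "install", "--no-index"]
    for directory in wheel_dirs:
        # Each --find-links directory is searched for wheel files.
        command += ["--find-links", directory]
    command += ["-r", requirements]
    return command

command = offline_install_command("requirements.txt", ["wheels", "shared_wheels"])
```

The resulting list can be passed to `subprocess.run(command, check=True)`. Accepting several directories covers both the wheels bundled in the YXI and a central company location.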

Labels: API SDK, Category Developer

Currently pip is the package manager in place within the Designer. Unfortunately this is something that doesn't fit our requirements as Data Scientists. We prefer using conda due to the following reasons:

- conda also manages non-Python library dependencies. This way conda can be used to manage R packages as well, which comes in quite handy (even though not all packages from the CRAN repository are available)

- conda provides a very simple way of creating conda envs (similar to virtualenv but with conda one can also install and manage pip packages --> virtualenv cannot install conda packages!) to isolate required packages (with specific versions) used in a workflow (e.g. for a Python Tool in Designer).

So I would like to have conda instead of, or in addition to, pip, and would like to create my own conda envs where I install the packages I need for a specific task within my workflow. Moreover, if you are thinking about offering an R Jupyter notebook capability (like the Python Tool), it could be beneficial to change from pip to conda for managing packages in both worlds.

Labels: API SDK, Category Developer

We need some way (unless one exists that I am unaware of - beyond disabling all but the Container I want to run) to fire off containers in particular order. Run Container "Step1" then Run Container "Step2" and so on.

Labels: API SDK, Category Developer

Dynamic Input is a fantastic tool when it works. Today I tried to use it to bring in 200 Excel files. The files were all of the same report and they all have the same fields. Still, I got back many errors saying that certain files have "a different schema than the 1st file." I got this error because in some of my files, a whole column was filled with null data. So instead of seeing these columns as V_Strings, Alteryx interpreted these blank columns as having a Double datatype.

It would be nice if Alteryx could check whether this is the case and simply cast the empty column as a V_String to match the previous files. Maybe make it an option and just have Alteryx give a warning if it has to do this.

An even simpler option would be to add the ability to bring in all columns as strings.

Instead, the current solution (without relying on outside macros) is to tick the checkbox in the Dynamic Input tool that says "First Row Contains Data." This then puts all of the field titles into the data 200 times. This makes it work because all of the columns are now interpreted as strings. Then a Dynamic Rename is used to bring the first row up to rename the columns. A Filter is used to remove the other 199 rows that just contained copies of the field names. Then it's time to clean up all of the fields' datatypes. (And this workaround assumes that all of the field names contain at least one non-numeric character. Otherwise the field gets read as Double and you're back at square one.)
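The requested behaviour amounts to treating an all-null column as "type unknown" rather than as a conflict. Here is a minimal sketch of that rule, with each file's schema represented as a plain `{column: type}` dict and `None` marking an all-null column; the function name and this representation are assumptions for illustration, not how Dynamic Input works internally.

```python
def reconcile_schemas(schemas):
    """Merge per-file {column: type} maps, letting all-null columns adopt a known type.

    A type of None marks a column whose values were all null in that file.
    """
    merged = {}
    for schema in schemas:
        for column, col_type in schema.items():
            known = merged.get(column)
            if known is None:
                # First sighting, or only all-null sightings so far.
                merged[column] = col_type
            elif col_type is not None and col_type != known:
                # Two files disagree on a real type: still a genuine mismatch.
                raise TypeError(f"{column}: {known} vs {col_type}")
    # Columns that were null in every file fall back to a string type.
    return {column: col_type or "V_String" for column, col_type in merged.items()}

merged = reconcile_schemas([
    {"Name": "V_String", "Notes": "V_String"},
    {"Name": "V_String", "Notes": None},  # Notes was empty in this file
])
```

Genuine type conflicts still raise an error, so the relaxed rule only applies to the empty-column case described above.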

Labels: API SDK, Category Developer, Feature Request, General
