We recently released a Microsoft Kit that included a text analytics tool. This tool uses a Cortana Analytics Gallery text analytics API to provide sentiment analysis and key phrase extraction. The tool has received positive feedback, but it is limited to 10,000 records per month before you have to pay a monthly fee. Given this backdrop, I wanted to compare the Microsoft sentiment analysis capability to a couple of the open source algorithms available.

The Sentiment Tool

The first open source package I identified to try out was the R package "sentiment". The package has long been archived on CRAN but is still available for download. It was not too difficult to leverage this package inside of Alteryx - a few lines of code in the R tool were all that was needed.

The second package came to my attention via a Microsoft blog post. The Stanford CoreNLP project is an expansive "set of natural language analysis tools", even though (for now) I'm only interested in sentiment analysis. I was able to utilize this package in Alteryx via the Run Command tool. Whereas the "sentiment" package gives a total score for an entire block of text, the Stanford package parses each sentence and gives a separate score for each. For the sake of this tool, I averaged these scores together to give a single score for the entire text.
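The averaging step is simple enough to sketch in a few lines of Python. This is only an illustration, not the code inside the tool: the function name `average_sentiment` is made up, and the example scores assume CoreNLP's five sentiment classes are encoded as 0 (very negative) through 4 (very positive).

```python
from statistics import mean


def average_sentiment(sentence_scores):
    """Collapse per-sentence sentiment scores into one score for the whole text.

    Hypothetical helper for illustration; the tool itself may do this differently.
    """
    if not sentence_scores:
        return None  # no sentences were scored, so leave the text unscored
    return mean(sentence_scores)


# Assumed example: three sentences scored on a 0-4 scale
# (positive, negative, neutral).
overall = average_sentiment([3, 1, 2])
print(overall)
```

One consequence of averaging is that a text with one strongly positive and one strongly negative sentence lands in the neutral range, which may or may not be what you want for short texts like tweets.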

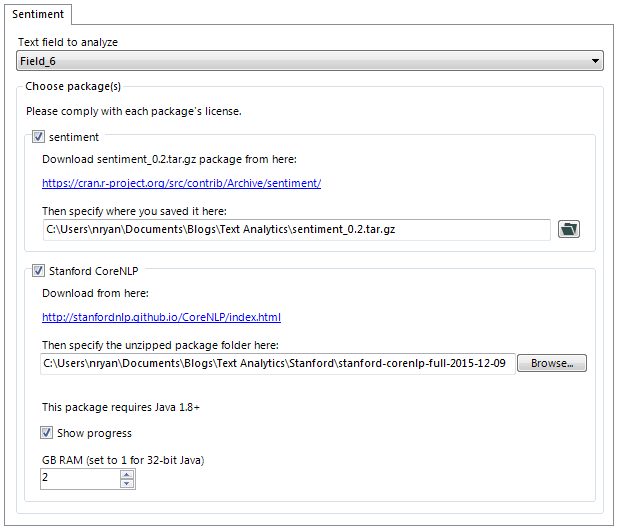

To use the tool you'll need to download each of these packages and point to where you've downloaded them as per the instructions laid out in the tool's interface. (I'm not a lawyer, but I think by forcing you to download the packages yourself it absolves me of all liability of you violating the packages' license terms.) Here's how it looks after I've configured mine:

The Analysis

I'm using Sentiment140 data for the analysis. Basically it's Twitter data that's been pre-scored according to emoticons - if a tweet contained a smiley, it's positive; a frowny, negative. (Be careful if you want to use this data: it's Twitter, so it's probably NSFW.) In order to do this for free (see the Microsoft API record limit above), I'm limiting the analysis to a random subset of 10,000 tweets.
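If you want to draw a similar subset outside of Alteryx, here is a minimal Python sketch. It assumes the Sentiment140 CSV layout as I understand it (no header row, polarity in the first column with 0 = negative and 4 = positive, tweet text in the sixth column, Latin-1 encoding), so check that against the file you actually download. The function names, the file path in the usage comment, and the seed are all hypothetical.

```python
import csv
import random

# Assumed polarity encoding; rows with any other value are skipped.
LABELS = {"0": "negative", "4": "positive"}


def read_sentiment140(csv_path):
    """Yield (label, text) pairs from a Sentiment140-style CSV file."""
    with open(csv_path, newline="", encoding="latin-1") as f:
        for row in csv.reader(f):
            label = LABELS.get(row[0])
            if label is not None:
                yield label, row[5]


def random_subset(records, n=10_000, seed=0):
    """Return n records sampled without replacement (all of them if fewer exist)."""
    records = list(records)
    return random.Random(seed).sample(records, min(n, len(records)))


# Usage (the path is a placeholder):
# subset = random_subset(read_sentiment140("sentiment140.csv"))
```

Fixing the seed makes the subset reproducible, which helps if you want to rerun all three algorithms on exactly the same tweets.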

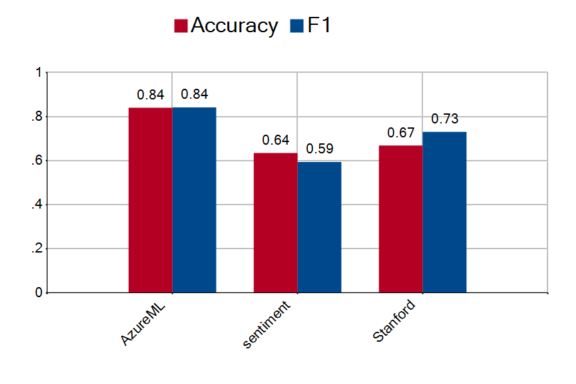

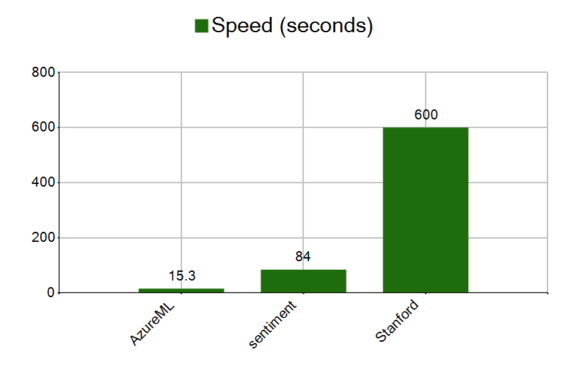

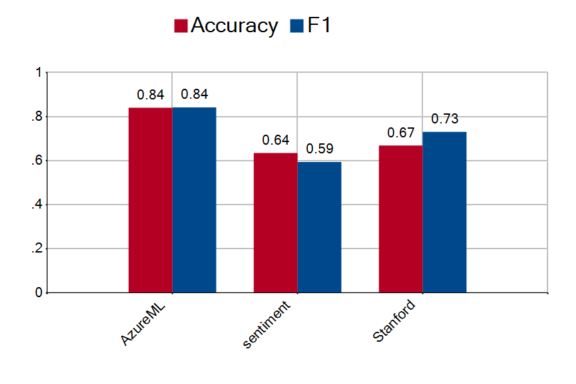

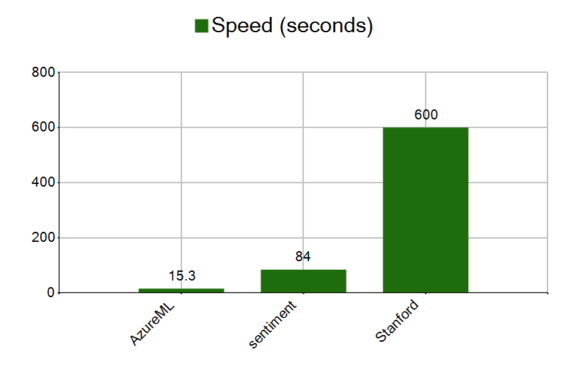

The Microsoft algorithm came out on top for accuracy. Interestingly, it was also the fastest, even though it was leveraging an API over the web.
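If you want to replicate the comparison, one common way to measure accuracy is the share of tweets where an algorithm's predicted label matches the emoticon-based label. The sketch below assumes that definition and assumes each algorithm's output has already been converted to a "positive" or "negative" label; the function name and the sample labels are made up for illustration.

```python
def accuracy(predicted, actual):
    """Fraction of items where the predicted label equals the reference label."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must be the same length")
    if not actual:
        raise ValueError("cannot compute accuracy on an empty data set")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


# Made-up labels for four tweets: three of the four predictions match.
predicted = ["positive", "negative", "positive", "negative"]
actual = ["positive", "negative", "negative", "negative"]
print(accuracy(predicted, actual))
```

How you turn a numeric score into a label (for example, where you put the cutoff between positive and negative) will affect the result, so it's worth keeping that choice consistent across the algorithms you compare.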

I've attached the Sentiment tool - feel free to tweak it to see if you can improve the accuracy or performance. I also encourage you to try to replicate my results, either with the Sentiment140 data or some other data source. I'll attach my analysis in the comments upon request.