Image Recognition in Python with Keras

Computer Vision!

Computer vision isn't just for PhDs and R&D folks anymore. Open source libraries like TensorFlow, Keras, and OpenCV are making it more accessible and easier to implement. Combined with advances in algorithms like deep neural nets, it just keeps getting easier!

In this post we'll walk you through building a deep neural net that can identify things contained within an image and show you how to deploy it into a web app.

Our model

The model we'll be using comes from an academic paper that details how you can use deep neural nets for image recognition. Included in the paper is some Python code that you can use to actually load and execute the model--Hooray reproducibility!

I'm not going to go into the details of the paper (since it's way over my head), but if you're interested, click the link below to check it out. It also contains links to the source code and model files we'll be using.

Very Deep Convolutional Networks for Large-Scale Image Recognition - K. Simonyan, A. Zisserman

Quick primer on Keras

As you can probably see, the model is using a new (and awesome) Python library called Keras. Keras allows you to build deep neural nets using multiple backends (such as Theano and TensorFlow). It provides a fairly high-level API that's easy to work with and has fairly intuitive function/class names--always helpful :).

You can use Keras for doing things like image recognition (as we are here), natural language processing, and time series analysis. For more info, check out the docs or read through some of the tutorials.

The Data

Since we're making an image recognition model, you can probably guess what data we're going to be using: images! We'll need a "mathy" version of the image that's represented in matrix form. For that, we'll be using cv2. Take the following example:

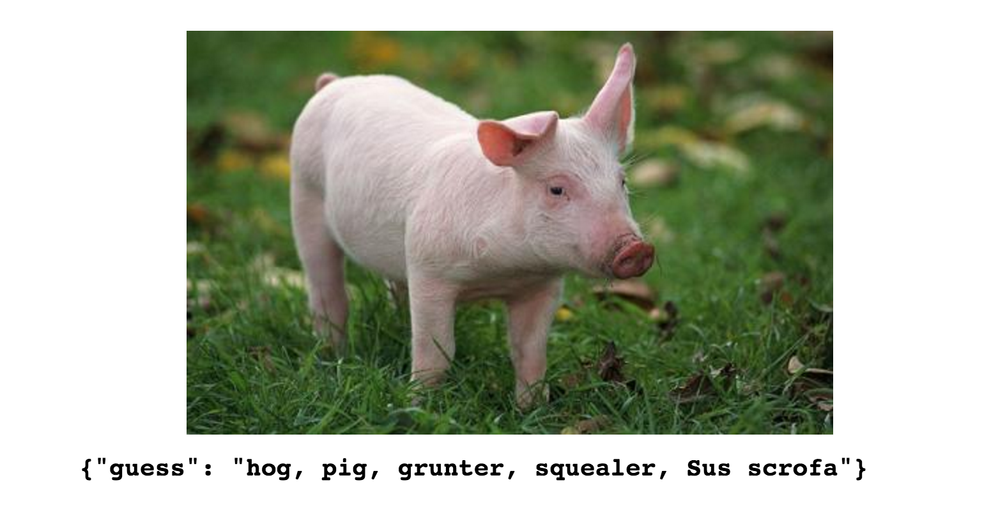

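import cv2

# load the image from disk as a NumPy array
pig = cv2.imread('images/pig.jpg')
# cv2.imshow needs a window name as its first argument
cv2.imshow('pig', pig)
# keep the window open until a key is pressed
cv2.waitKey(0)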
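To make the "matrix form" a bit more concrete: `cv2.imread` hands back a NumPy array of shape (height, width, 3) holding 8-bit values, with the channels in blue-green-red order. The sketch below builds a tiny stand-in array by hand instead of reading `images/pig.jpg`, so the pixel values (and the name `fake_image`) are made up purely for illustration. You could inspect `pig` in exactly the same way.

```python
import numpy as np

# A hypothetical 2x3 "image": 2 rows, 3 columns, 3 channels (B, G, R),
# standing in for the kind of array cv2.imread returns.
fake_image = np.zeros((2, 3, 3), dtype=np.uint8)
fake_image[0, 0] = [255, 0, 0]   # top-left pixel: pure blue in BGR order
fake_image[1, 2] = [0, 0, 255]   # bottom-right pixel: pure red in BGR order

height, width, channels = fake_image.shape
blue_channel = fake_image[:, :, 0]  # each channel is a plain 2-D matrix

print(fake_image.shape)   # (2, 3, 3)
print(fake_image.dtype)   # uint8
print(blue_channel)
```

One thing worth knowing: if the path is wrong, `cv2.imread` returns `None` rather than raising an error, so checking the result before using it can save some head-scratching.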

Our model is going to take this image and figure out what it is. Asked what's in this picture, most humans will look at it and say "It's a pig!" Not too surprising (or impressive), as many of us have been doing this since we could pull the lever on that toy that made barnyard noises and told you what each animal said.

The VGG model (Visual Geometry Group at Oxford) was trained on the open source ILSVRC dataset. According to the findings...

Using dense single-scale evaluation (the smallest image side rescaled to 384), the top-5 classification error on the validation set of ILSVRC-2012 is 8.1% (see Table 3 in the arXiv paper).

Sounds pretty good to me! But what does this really mean? Well, it means that we can feed just about any (that's right, ANY) image to our model and it will tell us which of its 1000 categories it thinks best matches the image. Sounds like science fiction, but as you'll soon learn, it's fairly easy to do!
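If "top-5 classification error" is new to you, here's a rough sketch of how that kind of metric can be computed: a prediction counts as correct when the true label shows up anywhere among the model's k highest-scoring categories. The function name `top_k_error`, the six-class setup, and all of the scores below are invented for illustration; they are not taken from the paper or from the VGG model.

```python
import numpy as np

def top_k_error(scores, true_labels, k=5):
    """Fraction of rows whose true label is NOT among the k highest scores."""
    scores = np.asarray(scores)
    true_labels = np.asarray(true_labels)
    # indices of the k largest scores in each row (order within the k is irrelevant)
    top_k = np.argsort(scores, axis=1)[:, -k:]
    # a row is a hit if its true label appears anywhere in its top k
    hits = (top_k == true_labels[:, None]).any(axis=1)
    return 1.0 - hits.mean()

# Made-up scores for 4 images over 6 classes (not real model output).
scores = np.array([
    [0.04, 0.11, 0.50, 0.20, 0.09, 0.06],
    [0.30, 0.04, 0.06, 0.40, 0.12, 0.08],
    [0.02, 0.03, 0.05, 0.10, 0.30, 0.50],
    [0.60, 0.20, 0.10, 0.05, 0.03, 0.02],
])
true_labels = np.array([2, 4, 0, 0])

print(top_k_error(scores, true_labels, k=1))
print(top_k_error(scores, true_labels, k=3))
```

With these made-up numbers the top-1 error is 0.5 and the top-3 error is 0.25: giving the model more guesses can only help, which is why top-5 numbers always look better than top-1.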

The model

Ok so enough background, let's get down to business (🎶to defeat, the Huns!🎶). I'm going to take the easy route here and just copy and paste the model code directly from the paper into my Python session. It's a little lengthy but don't be intimidated. Most of it is just setting up the neural net, and while it's explicit (and a bit long), I think this is a good thing. Neural nets are vague and strange enough on their own; the last thing we need is a library that abstracts too much away and makes them even more nebulous.

Ok so I've got this VGG_16 function, now I need to call it to load my model. In order to do that I need a "weights file". In this case that's actually the pre-built model from our paper. You can download the vgg16_weights.h5 file from Google Drive, ahem G Suite.

Sweet! Ok, the last thing we need to do is specify the optimizer we'll be using; in this case it'll be SGD (Stochastic Gradient Descent). Load that puppy in and then run the model.compile function.

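from keras.optimizers import SGD

# build the network and load the pre-trained weights
model = VGG_16('data/vgg16_weights.h5')
# the optimizer Keras will be compiled with
sgd = SGD(lr=0.1, decay=1e-6, momentum=0.9, nesterov=True)
model.compile(optimizer=sgd, loss='categorical_crossentropy')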
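Since we're only making predictions with pre-trained weights, the optimizer settings shouldn't change what the model guesses. Still, if you're curious what `lr`, `decay`, `momentum`, and `nesterov` control, here's a rough NumPy sketch of a single update step. It follows the update rule as I understand it from older Keras releases, so treat it as an illustration under that assumption and not as the library's actual code. The function name `sgd_step` and the toy problem are made up.

```python
import numpy as np

def sgd_step(param, grad, velocity, iteration,
             lr=0.1, decay=1e-6, momentum=0.9, nesterov=True):
    """One SGD update with time-based decay and (optionally Nesterov) momentum."""
    # the learning rate shrinks slowly as the iteration count grows
    lr_t = lr / (1.0 + decay * iteration)
    # velocity is a running blend of the previous velocity and the new gradient
    velocity = momentum * velocity - lr_t * grad
    if nesterov:
        # Nesterov variant: apply the momentum "look ahead" on top of the plain step
        param = param + momentum * velocity - lr_t * grad
    else:
        param = param + velocity
    return param, velocity

# Toy problem (hypothetical): minimise f(w) = w^2, whose gradient is 2w.
w = np.array([5.0])
v = np.zeros_like(w)
for i in range(200):
    w, v = sgd_step(w, 2.0 * w, v, i)

print(w)  # ends up very close to 0, the minimum of w^2
```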

Making predictions

We've got our data, we've got our model, now let's put it to use! Executing Keras models is simple: just use the predict function to generate (you guessed it) predictions. predict outputs a probability for each of the 1000 categories the model can detect. Watch what happens when we run it on Piglet:

out = model.predict(im)
pred = dict(zip(labels, model.predict_proba(im)[0]))

...other guesses go here...

best_guess = labels[np.argmax(out)]

Not too shabby!
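If you want to see the runner-up guesses too, one way is to sort the probabilities and keep the top few. Here's a minimal sketch; the helper name `top_guesses` is made up, and the three-label list and the probabilities are stand-ins (the real `labels` has 1000 entries and the real `out` comes from `model.predict`).

```python
import numpy as np

def top_guesses(probabilities, labels, k=5):
    """Return the k most probable (label, probability) pairs, best first."""
    probabilities = np.asarray(probabilities)
    # argsort is ascending, so reverse it and keep the first k indices
    order = np.argsort(probabilities)[::-1][:k]
    return [(labels[i], float(probabilities[i])) for i in order]

# Stand-in data: three labels and one row of made-up probabilities.
labels = [
    "hog, pig, grunter, squealer, Sus scrofa",
    "flagpole, flagstaff",
    "jeep, landrover",
]
out = np.array([[0.91, 0.02, 0.07]])  # same (1, n_categories) layout as model.predict

for label, prob in top_guesses(out[0], labels, k=2):
    print("%.2f  %s" % (prob, label))
```

The first entry of the result is the same label you'd get from `labels[np.argmax(out)]`.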

Deployment

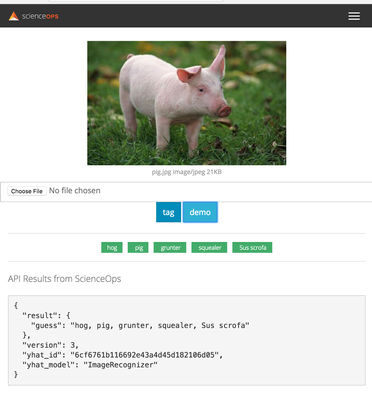

Ok last step. Since we're making an app, we need to deploy this model somewhere. As you might've guessed, we're going to use Yhat's own ScienceOps product to do this. There's not much to it: import Yhat, copy/paste the code from above into the execute function, and run deploy().

from yhat import Yhat, YhatModel
from PIL import Image
from io import BytesIO
import base64
import numpy as np
import cv2

def image_from_base64(img64):
    # decode the base64 string back into raw image bytes, then into an array
    binaryimg = base64.b64decode(img64)
    pilImage = Image.open(BytesIO(binaryimg))
    return np.array(pilImage)

class ImageRecognizer(YhatModel):
    REQUIREMENTS = ["opencv"]

    def execute(self, data):
        img64 = data['image64']
        image = image_from_base64(img64)
        # PIL decodes to RGB; the mean values below are in BGR order (what cv2.imread returns)
        image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)
        # VGG specific stuff
        resized_image = cv2.resize(image, (224, 224)).astype(np.float32)
        resized_image[:,:,0] -= 103.939
        resized_image[:,:,1] -= 116.779
        resized_image[:,:,2] -= 123.68
        resized_image = resized_image.transpose((2,0,1))
        resized_image = np.expand_dims(resized_image, axis=0)
        # make a prediction and our best guess
        out = model.predict(resized_image)
        pred = dict(zip(labels, model.predict_proba(resized_image)[0]))
        best_guess = labels[np.argmax(out)]
        # some verbose logging
        print("It's a %s" % best_guess)
        return { "guess": best_guess }

Bam! We've got an API that you can send an image to and get a reply back with our model's best guess as to what's in the image. Pretty slick!

{"guess": "hog, pig, grunter, squealer, Sus scrofa"}

{"guess": "flagpole, flagstaff"}

{"guess": "jeep, landrover"}
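On the sending side, the image has to arrive as a base64 string under the `image64` key, since that's what `execute` reads. Here's a small sketch of building that payload and of the decoding step the server performs first. The helper name `build_payload` and the stand-in bytes are made up; in practice you'd read real bytes with something like `open('images/pig.jpg', 'rb').read()`. How the payload actually gets posted to ScienceOps (URL, credentials) isn't covered here.

```python
import base64
import json

def build_payload(image_bytes):
    """Wrap raw image bytes in the {"image64": ...} shape that execute() reads."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return json.dumps({"image64": encoded})

# Stand-in bytes so the example runs without an image file on disk.
fake_image_bytes = b"\xff\xd8\xff\xe0 not a real JPEG"
payload = build_payload(fake_image_bytes)

# Server side: the first thing image_from_base64 does is reverse the encoding.
decoded = base64.b64decode(json.loads(payload)["image64"])

print(decoded == fake_image_bytes)  # True
```

Base64 is used because JSON can't carry raw binary data; the encoded string is roughly a third larger than the original file, which is usually fine for a single photo.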

App

To bring everything together, I've rigged up a simple web app (just some JavaScript and HTML) that you can upload images to. After the image is uploaded, it'll automatically use our model's API on ScienceOps to try to detect what's in the picture.

Visit the app here and upload your own images! It works great on your phone too. You can take a picture and upload it directly from the app.

If you like what you see, you can also play around with the credit modeling, product recommender, image classification, and lead scoring apps we've rigged up on our app demo page. Each app includes the technical details (e.g. POST/cURL requests, GitHub links to the full code repo) and a non-technical explanation.

Conclusion

There you have it! We've built an app that can look at an arbitrary image and do its best to figure out what's in it. Not too shabby for <250 lines of code.

Want to learn more about image recognition, Keras, or cv2? Check out these resources:
