Engine Works

Under the hood of Alteryx: tips, tricks and how-tos.- Community

- :

- Community

- :

- Learn

- :

- Blogs

- :

- Engine Works

- :

- Web Scraping in Alteryx

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Notify Moderator

Are you looking for a dataset to analyze and train the model but can't find it anywhere? or

You are not a coder but want to get the data without using Python or R? or

You can't find any API to extract the data you want?

If the answer is Yes, then you could consider choosing Alteryx to extract data. In the previous article, I introduced web scraping definition, the format of an HTML page with tags, and showed how to extract data from a webpage in Python through 5 steps. If you haven't read it yet, you can read my notebook - Web Scraping in Python here. Please feel free to leave any comments.

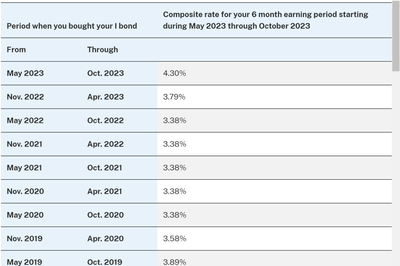

In this article, I will do web scraping in Alteryx. The data source in this article is about Saving Bonds from the U.S. Department of the Treasury. I would like to get the history of the composite rate of I-bond from 1998 until now. Extracting data from this webpage is for learning purposes.

Data source: https://www.treasurydirect.gov/savings-bonds/i-bonds/i-bonds-interest-rates/

Part 1: Understand the structure of HTML page

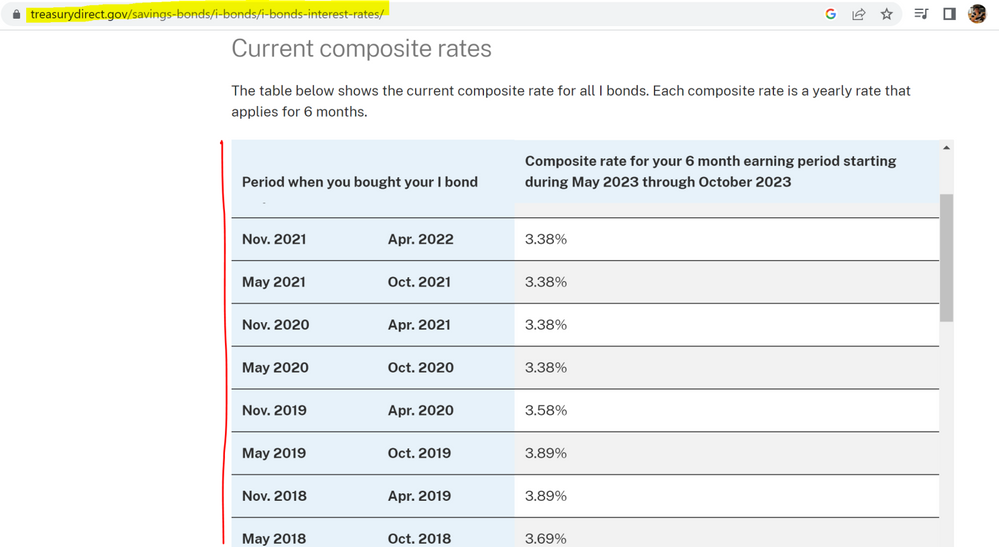

Before getting started to extract the data from the webpage, let's preview the structure of that HTML page first. There are 5 tables on that webpage. In this article, I only want to extract data from the 5th table (at the bottom of the page). (Image 1)

Image 1: Last table of the webpage

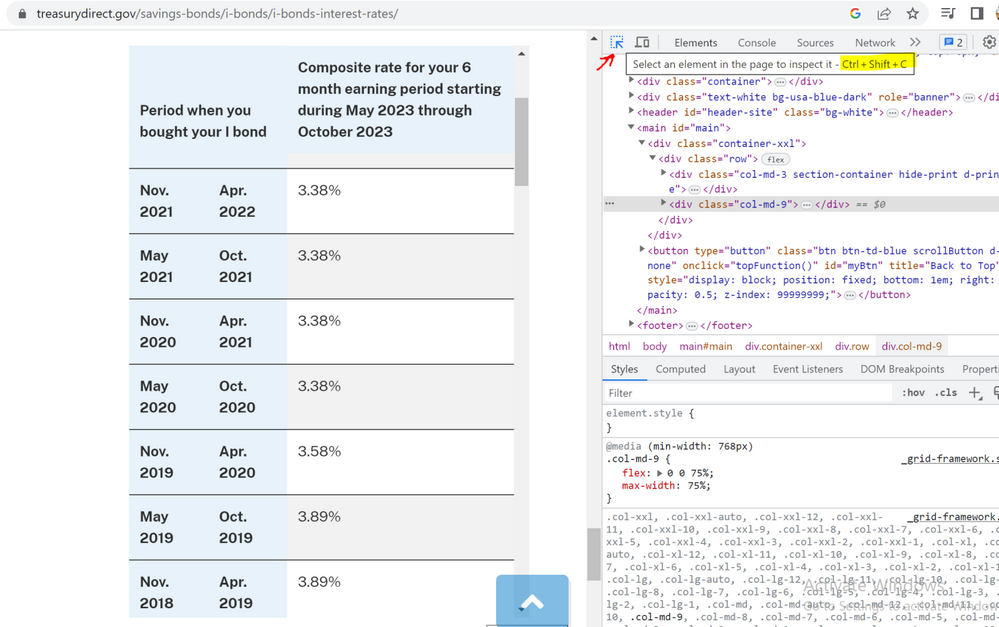

If you are using Google Chrome Browser, right-click on the webpage. Then, choose Inspect. Another window appears on the right side. Click on the icon as the (Image 2) below or hold Ctrl+Shift+C buttons.

Image 2: Inspect the webpage

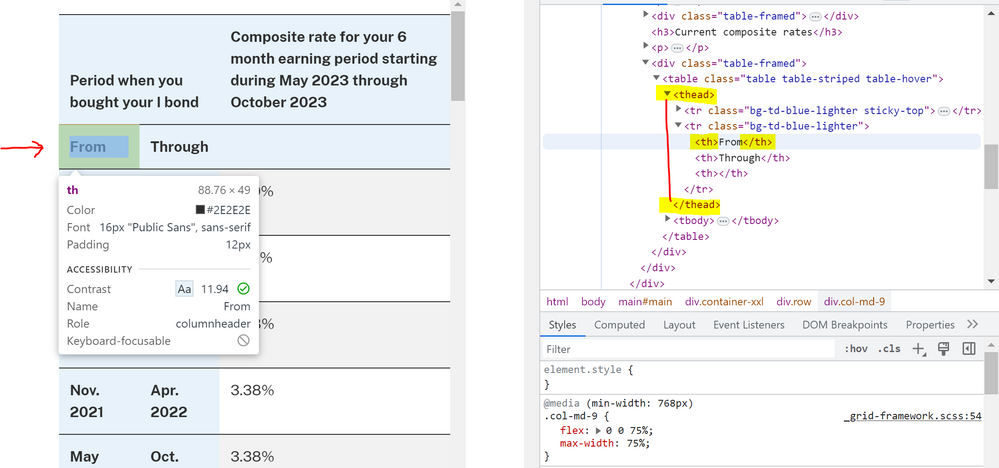

After clicking on that button, hover the mouse on any parts of the table on the left window. It will show you the HTML code on the right window. For example, I hover the mouse on From header. On the right window, it shows that the header is in <th> and </th> tags. But the parents of that tag are <thead> and </thead> tags (Image 3a).

Image 3a: Header of each column in <thead> and </thead> tags

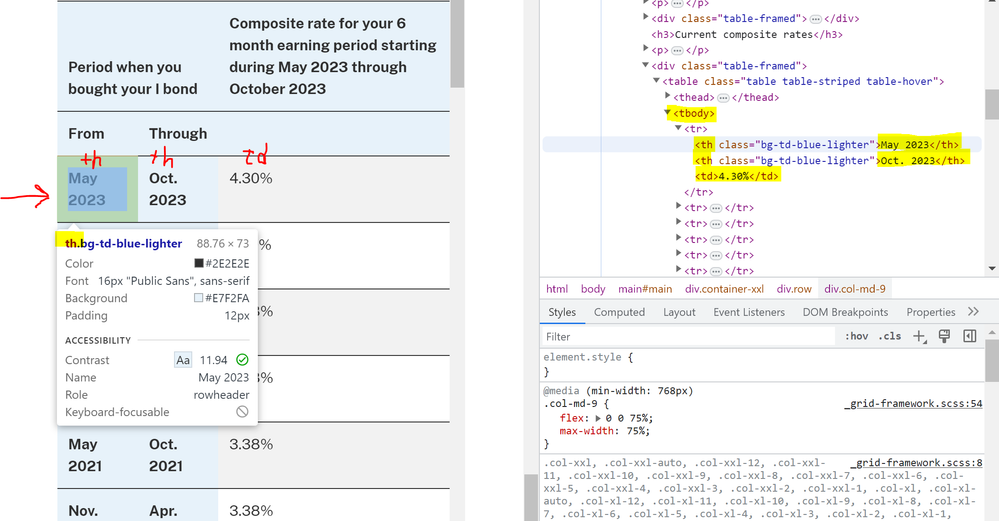

In the image below (Image 3b), when I hover the mouse over any parts in the table, it will show the tag and position of that row on the right window. Note that all the rows below the <thead> header tag above are now in <tbody> and </tbody> tags. In each row, there are 2 pairs of <th> and </th> tags and 1 pair of <td> and </td> tags. All the rows below are in the same structure.

Image 3b: Data I want to extract

Now, I understand the structure of that HTML page. I'm ready to extract data with Alteryx. Here is the result that I want:

Are you ready? Let's get started!

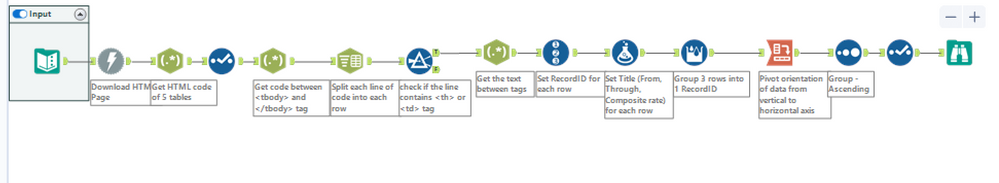

Part 2: Extract Data from HTML page in Alteryx

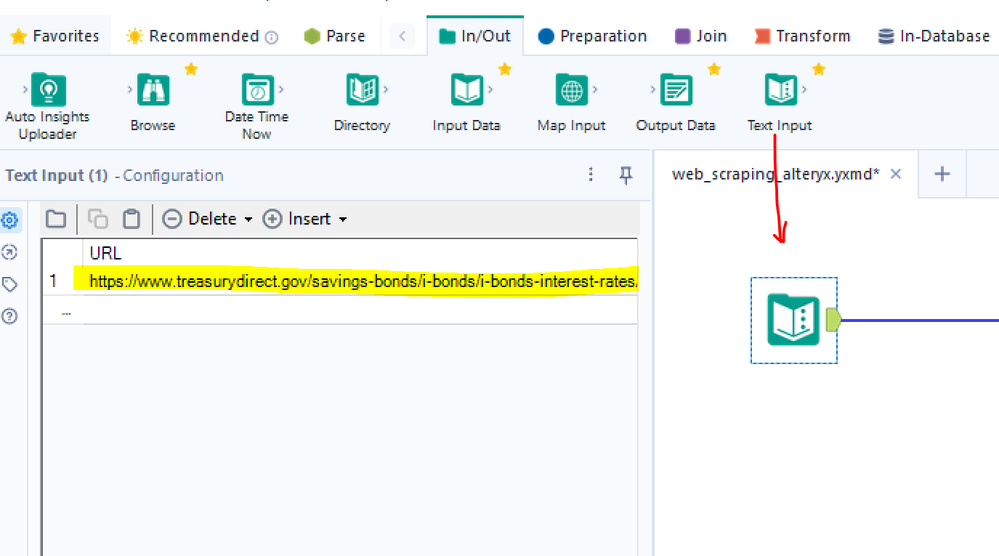

Step 1: Drag the Text Input tool to the canvas. Copy the URL above into the cell and name the column URL (Image 4).

Image 4: Drag Text Input and insert URL

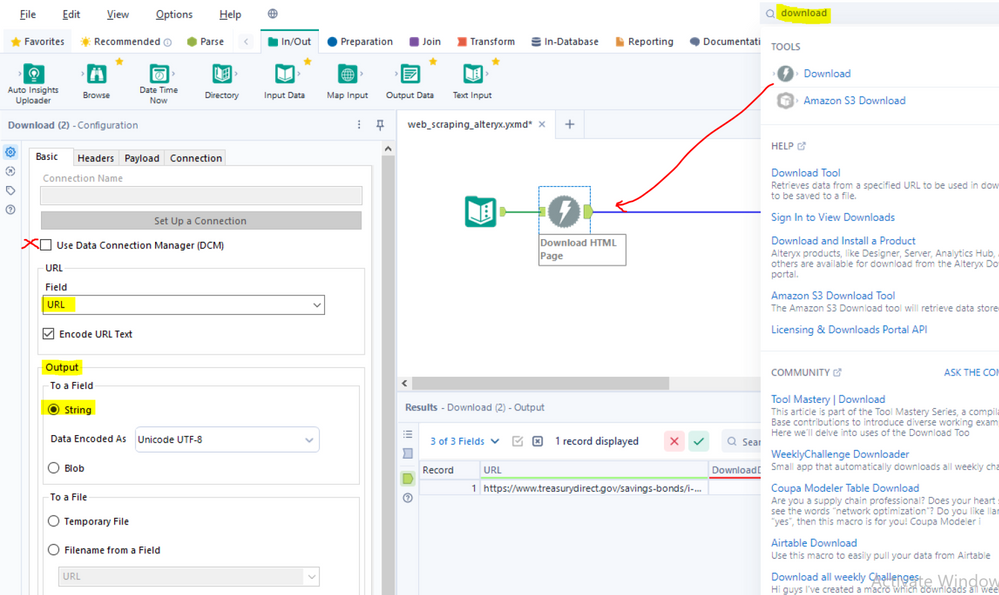

Step 2: Drag the Download tool from the Search box on the top right side. Uncheck Use Data Connection Manager (DCM). In the URL field, choose the URL column and output as a string with Unicode UTF-8 (Image 5).

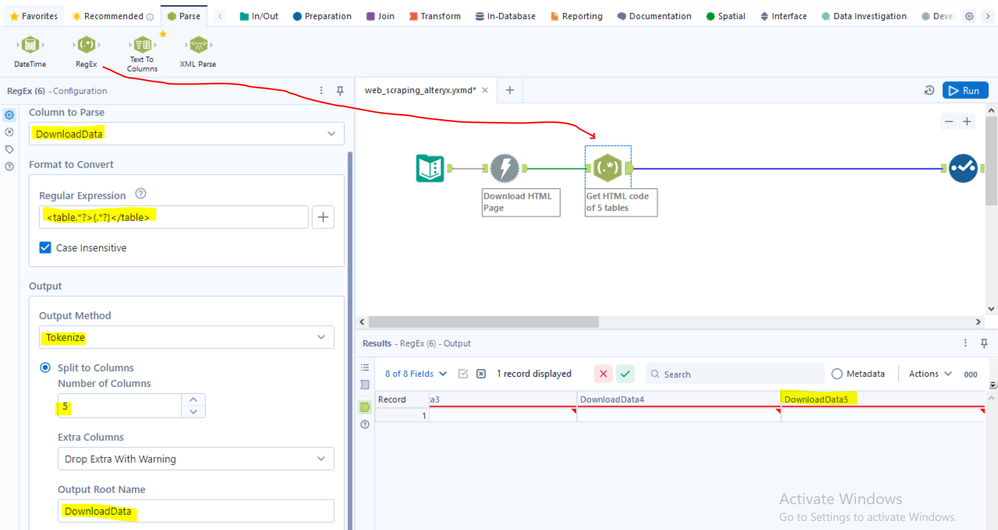

Step 3: Use the RegEx tool to extract the HTML code of 5 tables in the webpage. Connect the RegEx tool to connect with the Download tool. In the Configuration window, I choose the DownloadData column to Parse. I choose Tokenize Output Method because I would like to use the matched Regular Expression to split 5 tables into 5 separate columns. I have a notebook of RegEx here. I use expression: <table.*?>(.*?)</table>. It means getting all text between <table...> and </table> tags. From Part 1, I know there are 5 tables. So I choose to split 5 columns. But my goal is only extracting data from table 5 (Image 6).

Image 6: Use RegEx tool to get HTML code of 5 tables in 5 serparate columns

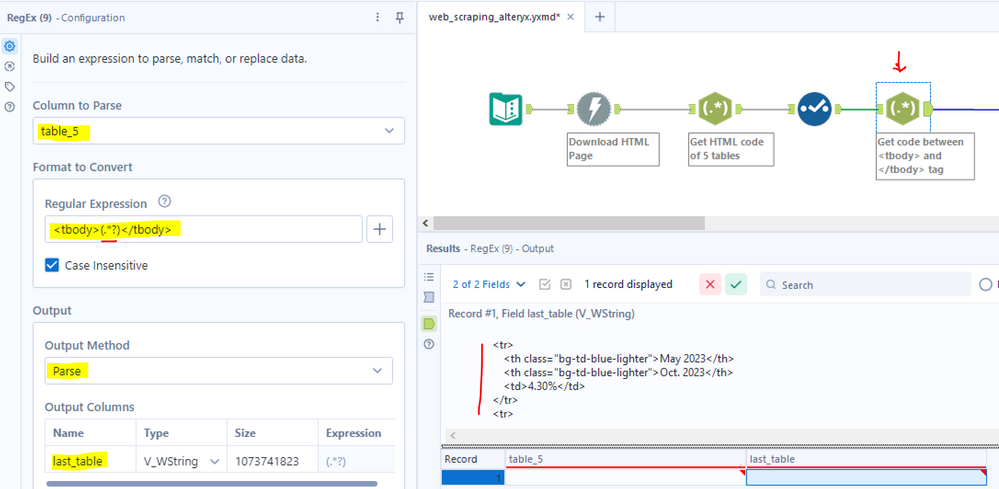

Step 4: Use the RegEx tool to get all the lines between <tbody> and </tbody> tags. In this step, I use the Parse method because I don't need to split any columns/ rows. The Regular Expression is the same structure as above but in <tbody> tag. So it would be <tbody>(.*?)</tbody>. I put the name for the output column as last_table (Image 7).

Image 7: Use RegEx tool to get all the code between <tbody> and </tbody> tag

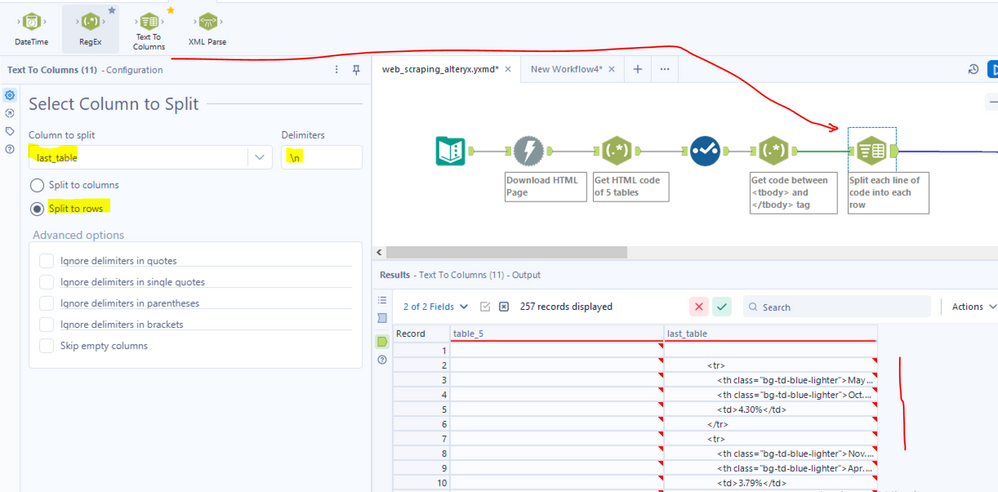

Step 5: Use the Text to Columns tool to split each line from the last_table column into rows. The delimiter is "\n". It means if the system meets the new line (\n), then split another row (Image 8).

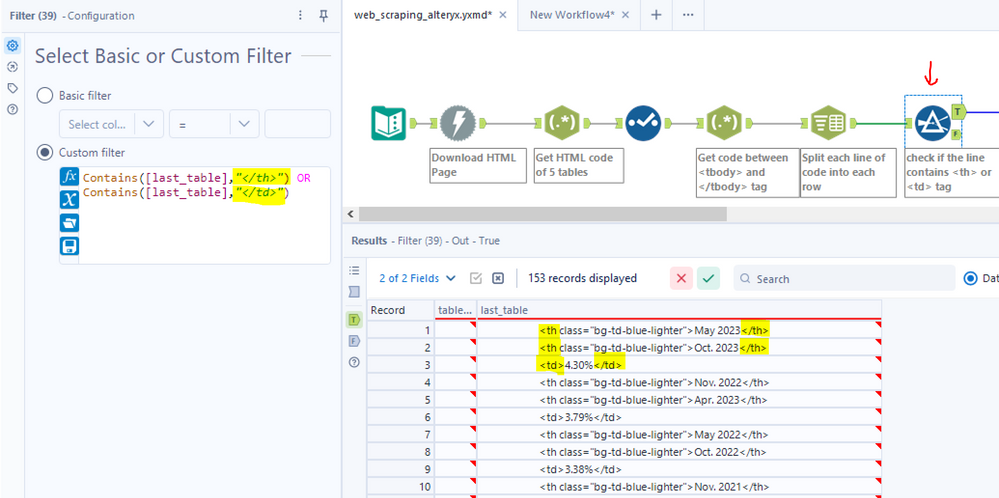

Step 6: Use the Filter tool to filter out rows that have <th> or <td> tags. As I analyzed the structure of the HTML page in part 1 above. I need to get 2 pairs of <th> tags and 1 pair of <td> tags. The condition is each row in the last_table contains </th> or </td> tag (Image 9).

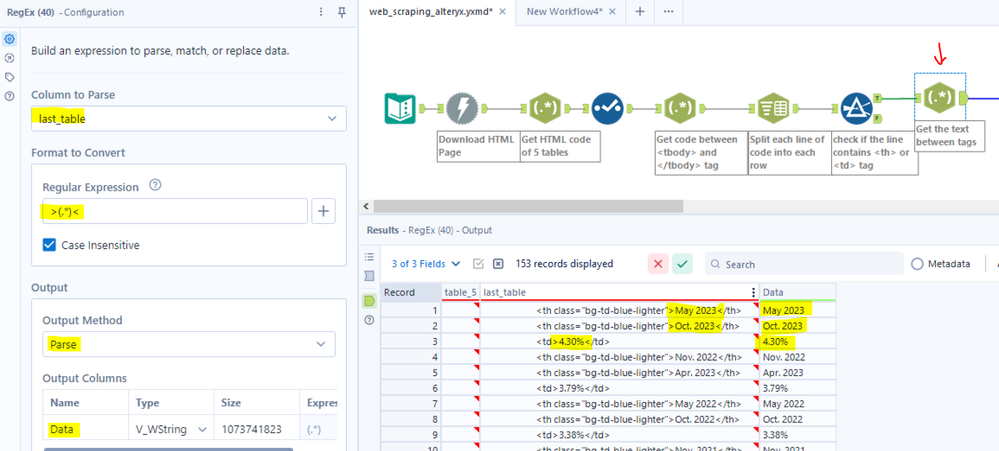

</th> or </td> tagStep 7: Use RegEx to get the text between pair of tags on each line. In the configuration window, I choose the last_table column to Parse. The Regular Expression is >(.*)<. It means get any characters between ">" and "<" characters (Image 10).

Image 10: Use RegEx to get the text between tags

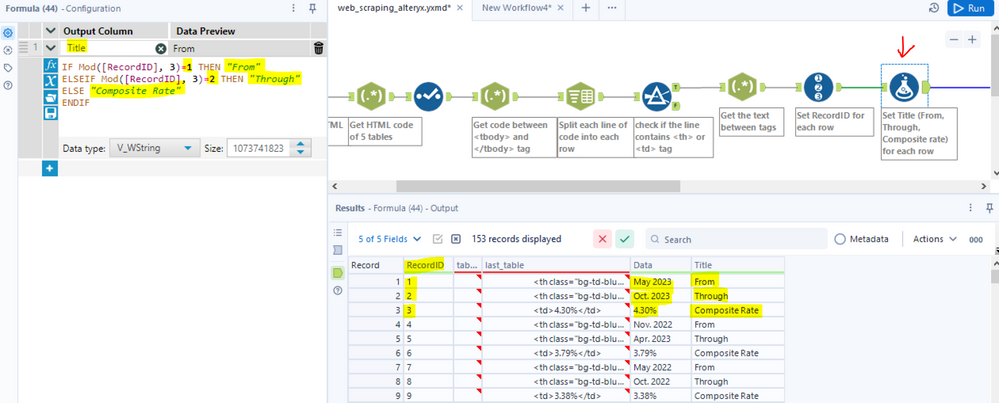

Step 8: Generate RecordID for each row and Set Title for each row. Use the RecordID tool to generate the recordID. It would be helpful to set the Title. Then, use the Formula tool to set the title for each row.

If RecordID%3 = 1 Then return "From". If RecordID%3=2 Then return "Through". If RecordID%3=0 Then return "Composite Rate" (Image 11).

Image 11: Use RecordID and Formula tools to set the title for each row

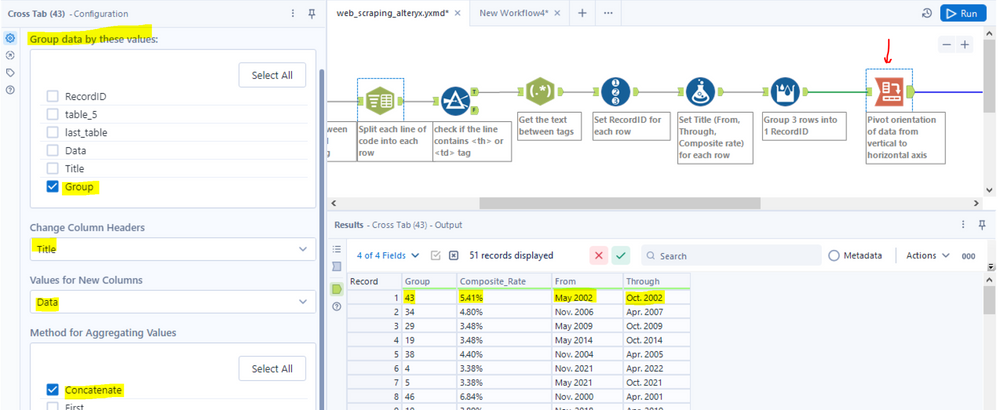

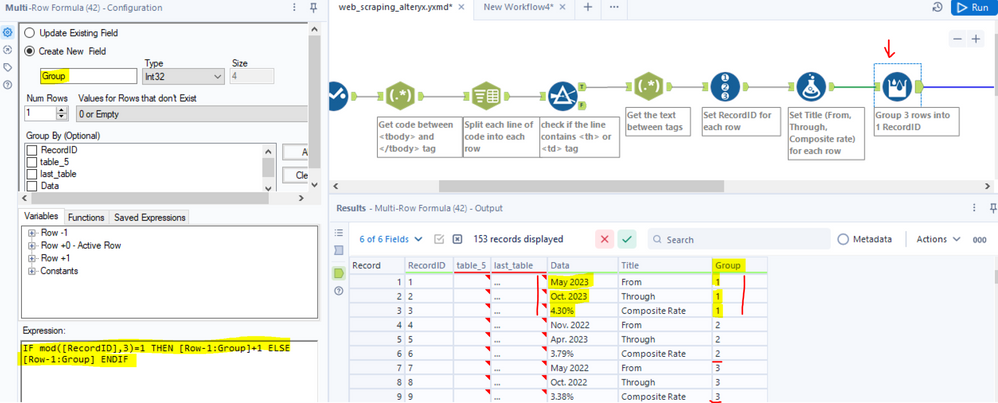

Step 9: I already got the title of 3 columns. Now, I would like to group 3 rows into 1 RecordID. Use the Multi-row Formula tool to connect with the previous Formula tool. In Configuration, create a new column Group. The first cell in the Group column starts at 1.

The expression is:

IF mod([RecordID],3) =1

THEN [Row-1:Group] + 1

ELSE [Row-1:Group]

ENDIF

That expression means if RecordID in the current row mod 3 =1, then my Group value at the current row = Group value in the previous row +1. Otherwise, keep Group value in the previous row (Image 12).

For example:

* At the row RecordID=1:

Group value starts at 1. It doesn't have the previous cell of Group value. So Group = 1

* At the row RecordID=2:

RecordID =2 mod 3 = 2. It doesn't satisfy my condition that mod([RecordID],3)=1. Therefore, it will do statement in ELSE. It means keep the Group value in previous cell. So, at the row RecordID=2, the Group value =1.

* At the row RecordID=3:

RecordID = 3 mod 3 = 0. It doesn't satisfy my condition. It will do ELSE statement. So at the row RecordID=3, the Group value = 1.

* At the row RecordID=4:

RecordID = 4 mod 3 = 1. At this time, it satisfied my condition. Therefore, it will do THEN statement. The current Group Value = Group value in previous cell + 1. But my Group value in previous cell is 1. So at RecordID=4, my Group value is 1+1=2.

Step 10: Use the Cross Tab tool to pivot the orientation of data from vertical to horizontal axis group by the Group column. The header of each column is the Title field. The value for each cell is the Data field. The method for Aggregating Values is Concatenate (Image 13).

Image 13: Use Cross Tab tool to pivot orientation of data

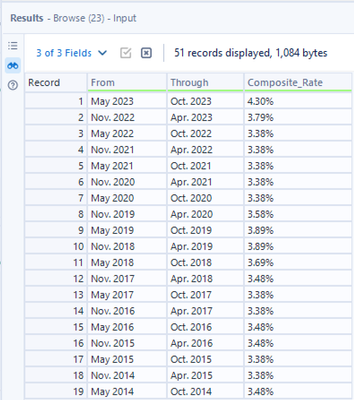

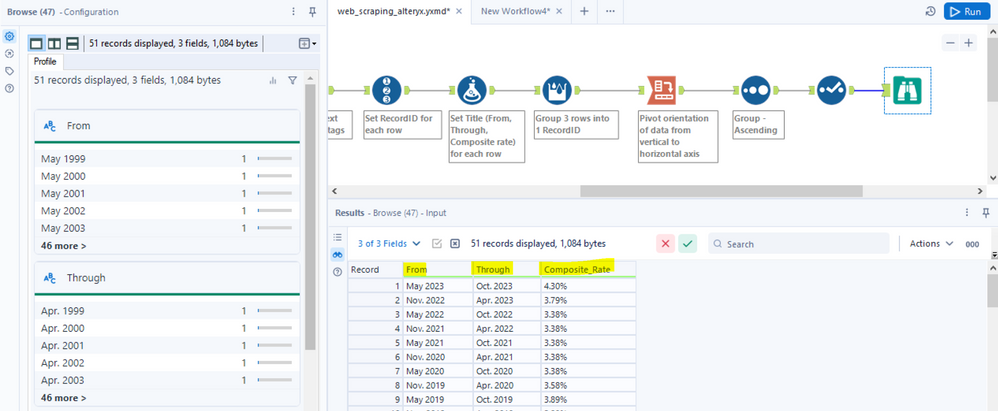

Step 11: In this last step, I will sort the Group field by ascending. Use the Select tool to organize the order of each column. Then use Browse Tool to get the last result (Image 14).

My workflow

Congratulations!!! Now we got the table same as the table on the webpage. Thank you very much for reading my article until now. The HTML table that you want to extract data from the webpage could be different from this HTML table on the treasuredirect.gov page. After reading this article, I hope you know the structure of the HTML table that I explained in Part 1 and how to extract the data based on that structure in Alteryx (Part 2).

Finally, I hope you enjoy my article about Web Scraping in Alteryx. I would love to hear your feedback. I am also open to discussing if you have any questions. Please feel free to comment or send direct messages to me. See you in the next article!

>(.*)< Happy Learning! >(.*)<

This article was originally published on LinkedIn.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.