Data Science

Machine learning & data science for beginners and experts alike.


Word2vec for the Alteryx Community


Word embeddings are vector representations of words in which similar words have nearby locations in vector space. Word2vec, first developed by a team of researchers at Google led by Tomas Mikolov and described in the paper Efficient Estimation of Word Representations in Vector Space, is a popular group of models that produce word embeddings by training shallow neural networks. Word2vec has many diverse applications, including traditional NLP tasks like machine translation or sentiment analysis, as well as more novel (less language-driven) applications like song recommendations on Spotify or listing recommendations on Airbnb.

Word2vec is based on the simple concept that you can derive the meaning of a word from the company it keeps (or, as my middle school English teacher would say, using context clues!). To learn the word vectors, word2vec trains a shallow neural network. The input layer has as many neurons as there are words in the vocabulary being learned. The hidden layer has a pre-specified number of nodes, set by how many dimensions you want in the resulting word vectors (e.g., if you want 100-dimension word vectors, the hidden layer will have 100 nodes). This is because the word vectors learned by word2vec are the weights between the input layer and the hidden layer of the trained network: each word's vector is its row in that weight matrix.
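To make that last point concrete, here is a minimal pure-Python sketch (not Gensim's implementation) using a made-up three-word vocabulary and a hypothetical input-to-hidden weight matrix `W` with two hidden nodes. Because the input is one-hot, multiplying it by `W` just selects one row, which is why the rows of the trained `W` can be read off as the word vectors.

```python
# Hypothetical toy vocabulary and input-to-hidden weights (one row per word, one column per hidden node)
vocab = ["alteryx", "server", "python"]
W = [
    [0.1, 0.4],   # row for "alteryx"
    [0.7, -0.2],  # row for "server"
    [-0.3, 0.9],  # row for "python"
]


def one_hot(word, vocab):
    """Return a one-hot list with a 1 at the word's vocabulary index."""
    return [1 if w == word else 0 for w in vocab]


def hidden_layer(x, W):
    """Compute x @ W for a row vector x; word2vec's hidden layer applies no activation."""
    n_hidden = len(W[0])
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(n_hidden)]


h = hidden_layer(one_hot("server", vocab), W)
print(h)  # [0.7, -0.2], i.e. the "server" row of W
```

In practice no multiplication is needed at all: libraries store `W` and look up the row for a word directly.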

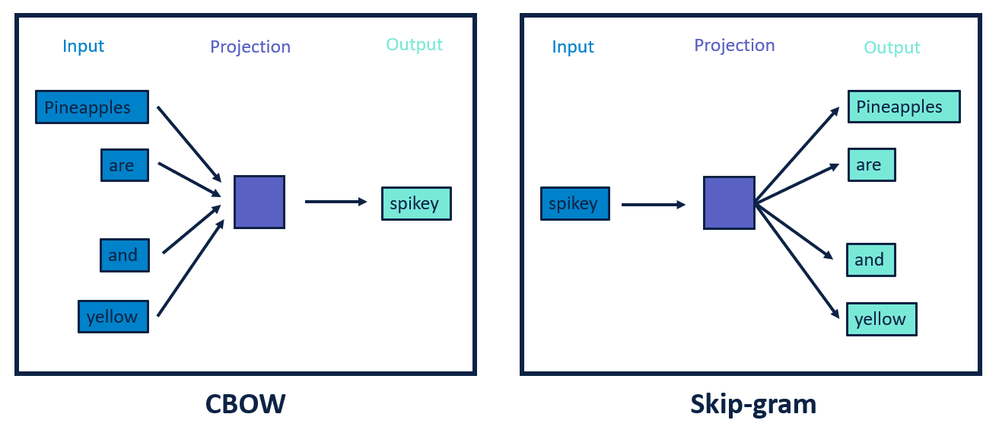

There are two varieties of word2vec: the Continuous Bag-of-Words (CBOW) model and the Continuous Skip-gram model. CBOW learns word embeddings by predicting the current word from its context. Skip-gram learns word embeddings by predicting the context (surrounding words) from the current word.

Example adapted from Mikolov et al., 2013
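The difference between the two varieties shows up in how training examples are built from a sentence. The sketch below is a simplified illustration that assumes a fixed context window and ignores details such as Gensim's random window shrinking and frequent-word subsampling; `training_pairs` is a hypothetical helper, not a Gensim function.

```python
def training_pairs(tokens, window=2, mode="skipgram"):
    """Generate word2vec-style training examples from one tokenized sentence.

    skipgram: one (center_word, context_word) pair per context word.
    cbow:     one (context_words, center_word) pair per position.
    """
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        context = tokens[start:i] + tokens[i + 1:i + 1 + window]
        if mode == "skipgram":
            # Predict each surrounding word from the current word
            pairs.extend((center, c) for c in context)
        elif mode == "cbow":
            # Predict the current word from all surrounding words together
            pairs.append((context, center))
        else:
            raise ValueError("mode must be 'skipgram' or 'cbow'")
    return pairs


sentence = ["the", "quick", "brown", "fox"]
print(training_pairs(sentence, window=1, mode="cbow"))
print(training_pairs(sentence, window=1, mode="skipgram"))
```

CBOW produces one example per position, while skip-gram produces one example per (word, neighbor) pair, which is part of why skip-gram is slower to train.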

There is a plethora of fantastic blog posts on how word2vec works, so if you are even slightly interested in going deeper, I recommend checking them out. TensorFlow's Vector Representations of Words article gets into the underlying math of word2vec and provides working examples of implementing a word2vec model with TensorFlow in Python. Chris McCormick's Word2vec Tutorial – The Skip-Gram Model includes a series of very helpful diagrams (his blog is excellent in general, and he even has a post dedicated to Word2vec Resources). Deep Learning Weekly's Demystifying Word2vec explores how and why word2vec works. Towards Data Science has a post called Light on Math Machine Learning: Intuitive Guide to Understanding Word2vec, which is descriptive without getting too deep into the underlying mechanics. Finally, the morning paper has a nice write-up called The amazing power of word vectors, with links to related academic papers and some fun hand-drawn figures. This is a very abbreviated selection of what the internet has to offer on word2vec; if these articles don't do it for you, I am sure some light Googling will find one that does.

Why Word Embeddings?

Word embeddings learned from word2vec are particularly cool because they can capture both syntactic and semantic relationships between words in vector space.

One of the most popular (and somewhat obligatory) demonstrations of this comes from the first Mikolov et al., 2013 paper:

"Somewhat surprisingly, it was found that the similarity of word representations goes beyond simple syntactic regularities. Using a word offset technique where simple algebraic operations are performed on the word vectors, it was shown for the example that vector("King") - vector("Man") + vector("Woman") results in a vector that is closest to the vector representation of the word Queen." -Mikolov et al., 2013

That's right! Word2vec allows for (meaningful) word-math.
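A minimal sketch of this word offset technique, assuming hand-made three-dimensional toy vectors (real word2vec dimensions carry no labels like these): compute vector(b) - vector(a) + vector(c), then return the remaining word with the highest cosine similarity to the result. The `analogy` helper is hypothetical, not a Gensim function.

```python
import math


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the vector lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(a, b, c, vectors):
    """Answer 'a is to b as c is to ?' using vector(b) - vector(a) + vector(c)."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]  # exclude the query words
    return max(candidates, key=lambda w: cosine(vectors[w], target))


# Hypothetical hand-made vectors: [royalty, maleness, femaleness]
vectors = {
    "king": [1.0, 1.0, 0.0],
    "queen": [1.0, 0.0, 1.0],
    "man": [0.0, 1.0, 0.0],
    "woman": [0.0, 0.0, 1.0],
    "apple": [0.2, 0.1, 0.1],
}
print(analogy("man", "king", "woman", vectors))  # queen
```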

These mathematical word analogies work for both syntactic (apparent is to apparently as rapid is to rapidly) and semantic (Athens is to Greece as Oslo is to Norway) relationships (Mikolov et al., 2013). This demonstrates that both location and direction have meaning for word embeddings mapped in vector space.

The following figure, taken from Mikolov et al.'s follow-up paper Distributed Representations of Words and Phrases and their Compositionality, is a two-dimensional PCA projection of 1000-dimensional Skip-gram vectors of countries and their capital cities; it shows a roughly consistent linear relationship between each country and its capital.

In this follow-up work, Mikolov et al. also found that simple addition of word vectors can produce meaningful results. For example, adding the vector for Russia to the vector for river produced a vector closest to that of Volga River.

After learning about word2vec, the thing I was most excited about was seeing what would happen when a word2vec model is applied to the Alteryx Community corpus (NLP talk for a collection of texts).

Applying Word2vec to the Alteryx Community(!)

My first step was to download all of the posts and content currently on the Community to a directory on my computer. Luckily for me, this was an incredibly easy process thanks to API genius @MattD, who has developed a stash of macros that connect to the Community. Running a simple workflow with a couple of these macros downloaded a large dataset of text for me to play with. In this workflow, I also performed some very light preprocessing on the texts: removing extra whitespace and performing a find/replace for HTML character entities included in the post bodies (again, thank you Matt).
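The cleanup described above was done in an Alteryx workflow. For readers working in Python, a rough equivalent might look like the following; this is a hypothetical sketch using the standard library's `html.unescape` and a whitespace regex, not the workflow that was actually run.

```python
import html
import re


def light_clean(text):
    """Decode HTML character entities (e.g. &amp;, &nbsp;) and collapse runs of whitespace."""
    text = html.unescape(text)  # "&amp;" -> "&", "&nbsp;" -> non-breaking space
    # \s also matches Unicode whitespace such as the non-breaking space
    return re.sub(r"\s+", " ", text).strip()


print(light_clean("Use the  Formula&nbsp;tool &amp; the\n\nRegEx tool"))
```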

After obtaining my dataset, I did some research on how I could go about applying a word2vec model to the text. There are a few different R and Python packages that implement word2vec. I ultimately decided to use the Python package Gensim. One of the (many) cool things about Gensim is that it is built on NumPy, SciPy, and Cython, and is designed to handle large text collections using data streaming and incremental algorithms. With Gensim models, the data does not all need to be held in memory at once, which allows large bodies of text to be processed.

To take advantage of streaming, one of the first things I did was define a Sentences class that streams in the Community corpus one post at a time. The class also performs some additional preprocessing as the words are read: .lower() converts all text to lowercase, and regular expressions strip punctuation and replace hyphens with underscores.

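import os
import re

class Sentences(object):
    """Stream the corpus line by line, yielding a list of cleaned, lowercased tokens."""

    def __init__(self, dirname):
        self.dirname = dirname

    def __iter__(self):
        for fname in os.listdir(self.dirname):
            with open(os.path.join(self.dirname, fname), encoding="latin-1") as f:
                for line in f:
                    # Lowercase and strip punctuation, then turn hyphens into underscores
                    cleaned = re.sub(r'[.,:@#?!$"%&()*+=><^~`{};/]', '', line.lower())
                    cleaned = re.sub(r'-', '_', cleaned)
                    # Drop tokens that were only a hyphen (now "_")
                    yield [x for x in cleaned.split() if x != "_"]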

Now that I've defined this class, I can use it to read in the Community corpus by pointing it to the folder where the text files are saved.

sentences = Sentences("...UpdatedCommunityPosts\\only_community")

In Mikolov et al.'s follow-up paper Distributed Representations of Words and Phrases and their Compositionality, the authors also discuss the limitation of the Skip-gram model in representing idiomatic phrases that are not compositions of their individual words (e.g., "Colorado Avalanche" does not mean the same thing as the combined meanings of "Colorado" and "Avalanche"). To work around this, they propose training phrases as individual tokens (like words) in a word2vec model, and they found that word2vec learned good vector representations for the phrases they identified.

With this in mind, I decided to train a Phrases model using Gensim to create bigrams. Bigrams are single ideas or concepts represented by two adjacent words. I felt that this step was important for the words used on the Community because of phrases like Alteryx Server and SQL Server. Without bigrams, the word server would be embedded as the same vector in both cases, even though it represents different ideas.

The default equation used to determine bigrams in the Gensim Phrases() function is the same one Mikolov et al. proposed in their paper Distributed Representations of Words and Phrases and their Compositionality.
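In that paper, a candidate bigram is scored as shown below, where δ is a discounting coefficient that keeps very infrequent words from forming phrases. Gensim's default scorer uses `min_count` as δ and also multiplies the score by the vocabulary size; a pair is kept as a phrase when its score is greater than `threshold`. The Python function is a small sketch of that calculation with made-up counts; `phrase_score` is a hypothetical name.

$$\mathrm{score}(w_i, w_j) = \frac{\mathrm{count}(w_i w_j) - \delta}{\mathrm{count}(w_i) \times \mathrm{count}(w_j)}$$

```python
def phrase_score(bigram_count, count_a, count_b, vocab_size, min_count=5):
    """Score a candidate bigram: (count(ab) - delta) / (count(a) * count(b)), scaled by vocab size.

    Following Gensim's default scorer, min_count plays the role of delta.
    """
    return (bigram_count - min_count) / count_a / count_b * vocab_size


# Hypothetical counts: "alteryx server" together 50 times, "alteryx" 100 times, "server" 200 times
score = phrase_score(50, 100, 200, vocab_size=10_000)
threshold = 10  # default threshold of Gensim's Phrases
print(round(score, 2), score > threshold)  # roughly 22.5, so this pair would become alteryx_server
```

The subtraction of δ means a pair seen only a handful of times scores near zero (or below), no matter how rare its individual words are.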

For a first pass, I chose to leave most of the arguments of Phrases() at their defaults. The min_count argument sets the minimum number of times a pair of words needs to occur together to be considered a potential bigram; its default value is 5. The threshold argument sets the score a candidate must exceed to be selected as a bigram; its default value is 10. The one argument I did change is common_terms. The common_terms argument accepts a list of words, and words on this list do not affect the frequency counts in the bigram calculation. common_terms is effectively a stopwords argument: it prevents phrases like “I have” or “and the” from being created, while still allowing phrases like “Attila the Hun” or “Isle of Skye”.

from gensim.models.phrases import Phrases

# Connector words: they can sit inside a phrase (e.g. "isle_of_skye") but don't count toward its score
common_terms = ["of", "with", "without", "and", "or", "the", "a", "in", "to", "is", "but", "i",
                "you", "be", "for", "we", "are", "on", "as", "it", "can", "would", "do", "this", "all", "me", "if",
                "so", "that", "your", "an", "there", "was", "from", "has", "have", "at", "am", "than", "i'm",
                "they", "my", "does", "any", "did", "into", "i'd", "it's", "hi", "let", "hello", "sure", "like", "want",
                "need", "make", "use", "no"]

# Gensim 3.x argument name; Gensim 4.0+ renamed common_terms to connector_words
bigram = Phrases(sentences, common_terms=common_terms)

After running this initial bigram model, I printed out the top bigram results (sorted by frequency in the corpus and filtered to phrases containing at least one underscore). The results looked like this:

This is exactly what I was hoping to capture with the bigrams: tool names, in-db, and the difference between Alteryx Server and SQL Server. Yay!
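One way to produce a frequency-sorted listing like this is to count every token that contains an underscore. The sketch below assumes the corpus has already been passed through the phrase model (for example, `bigram[sentences]`); `top_phrases` is a hypothetical helper and the toy token lists are invented. Note that hyphenated words such as in-db also contain underscores after the preprocessing step, so they show up too.

```python
from collections import Counter


def top_phrases(token_lists, min_underscores=1, n=10):
    """Count tokens with at least `min_underscores` underscores and return the n most common."""
    counts = Counter(
        tok for tokens in token_lists for tok in tokens if tok.count("_") >= min_underscores
    )
    return counts.most_common(n)


# Toy stand-in for bigram[sentences]: token lists with phrases already merged (hypothetical data)
docs = [
    ["the", "alteryx_server", "and", "sql_server"],
    ["restart", "alteryx_server"],
    ["use", "the", "multi_row_formula", "tool"],
]
print(top_phrases(docs, min_underscores=1, n=2))
print(top_phrases(docs, min_underscores=2))
```

Passing `min_underscores=2` gives a trigram-style listing (phrases with two or more underscores).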

So… what about trigrams? As you may have guessed, trigrams are the same concept as bigrams, but with three words instead of two. I decided they were worth looking into. To create trigrams, you train a second Phrases model on the output of the bigram model, so that a bigram token can be joined with a neighboring word (this could go on indefinitely, but would get silly).

trigram = Phrases(bigram[sentences], common_terms=common_terms)

Next, I printed out the most common trigram results (phrases with two or more underscores, sorted by frequency).

There are phrases here I am excited about as well, such as run_command_tool, multi_row_formula, dynamic_input_tool, publish_to_tableau, and new_to_alteryx (these last two were probably generated in the bigram phase, as “to” was included as one of our common terms). Further down the list, highlights include block_until_done and text_input_tool.

There are also some phrases I am less excited about: hope_this_helps, should_be_able, thanks_in_advance, create_a_new, take_a_look, and when_i_try. It seems that some of these trigrams were actually generated in the bigram training phase. I experimented with changing the thresholds and minimum counts to remove phrases like these, but found it ineffective. Many people close their Community posts with “hope_this_helps” or “thanks_in_advance”, so it doesn’t seem unfair to treat these phrases as trigrams.

Overall, I like the trigrams. I felt it was good that the model would distinguish between an input_tool, a text_input_tool, and a dynamic_input_tool, so I decided to keep them for my word2vec model.

Preprocessing is an iterative process and is really the most important step in any model development. After developing the Sentences class and spending some time refining bigrams and trigrams, I felt ready to train an initial word2vec model. By default, Gensim's Word2Vec trains a CBOW model; this can be switched to a skip-gram model with the argument sg=1. For this iteration of model building, I am going to leave it as CBOW. The size argument sets the dimensionality of the output vectors, which I set to 100. The max_vocab_size argument caps the vocabulary during vocabulary building, pruning the less frequently occurring words if the cap is exceeded; it is intended to limit memory use. I set this value to None, thereby enforcing no limit. Separately, the min_count argument sets the number of times a word must occur for an embedding to be created for it. This prevents infrequent words from being given a vector based on only a few observations. I set this value to 5.

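import gensim

# CBOW (sg=0, the default) with 100-dimensional vectors, no vocabulary cap, and a minimum word count of 5.
# Apply both phrase models so that bigrams and trigrams are merged before training.
# (Gensim 3.x argument names; in Gensim 4.0+, size is called vector_size.)
model = gensim.models.Word2Vec(trigram[bigram[sentences]], min_count=5, max_vocab_size=None, size=100, sg=0)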
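To see what min_count does, here is a simplified sketch of the vocabulary-building step: count every token and drop those seen fewer than `min_count` times, so they never get a vector. `build_vocab` is a hypothetical helper and the corpus is a toy; Gensim performs this step internally when the model is built.

```python
from collections import Counter


def build_vocab(token_lists, min_count=5):
    """Keep only words seen at least min_count times; rarer words get no vector."""
    counts = Counter(tok for tokens in token_lists for tok in tokens)
    return {word: c for word, c in counts.items() if c >= min_count}


# Toy corpus: "ceo" and "dean_stoecker" appear 6 times each, "cco" only twice
corpus = [["ceo", "dean_stoecker"]] * 6 + [["cco"]] * 2
vocab = build_vocab(corpus, min_count=5)
print(sorted(vocab))  # ['ceo', 'dean_stoecker']; "cco" is dropped
```

A word that falls below the cutoff is simply absent from the trained model, so querying it raises a "not in vocabulary" error rather than returning a low-quality vector.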

With a model trained, we can start playing with some evaluation methods. Evaluation of word2vec models is typically tailored to the intended use of the word vectors. Because we do not have a specific use in mind yet, we will just do some basic exploration of the relationships between our trained word vectors in vector space.

First, we can run some basic similarity queries. For example, which words are the closest (in vector space) to the word python? In the Gensim library, the function most_similar() will return a list of words with the highest cosine similarities to the word(s) you provide, based on each word's vector.

print(model.wv.most_similar('python', topn=5))

As a result, we get:

| Word | Similarity |
|---|---|
| r | 0.8407 |
| r_tool | 0.7369 |
| spark | 0.7333 |
| java | 0.7299 |
| sdk | 0.7250 |

Given that the Python SDK and Python tool are both relatively recent additions to Alteryx (2018.2 and 2018.3, wooo!) and we are likely still building up content around Python, these results are promising. R, another open-source programming language, is logically one of the closest things to Python discussed on the Alteryx Community. The R tool is likely very close to R in vector space, and therefore also close to Python. Spark is a cluster computing framework, integrated with Alteryx in version 2018.1, and is used with Python, R, and Scala. All of these vectors occurring close to the word vector for Python make sense.
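The rankings in these tables come from cosine similarity: the dot product of two vectors divided by the product of their lengths, which measures direction rather than magnitude. A brute-force sketch of a most_similar-style query over a few hypothetical 2-d vectors might look like this; Gensim performs roughly the same ranking with vectorized NumPy operations over the whole vocabulary.

```python
import math


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the vector lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def most_similar(word, vectors, topn=3):
    """Rank every other word by cosine similarity to `word` (brute force)."""
    query = vectors[word]
    scores = [(w, cosine(query, v)) for w, v in vectors.items() if w != word]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:topn]


# Hypothetical 2-d vectors, invented for illustration
vectors = {
    "python": [0.9, 0.1],
    "r": [0.8, 0.2],
    "java": [0.6, 0.4],
    "scheduler": [0.1, 0.9],
}
for w, s in most_similar("python", vectors):
    print(f"{w}: {s:.4f}")
```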

When we try running most_similar() on one of the bigram phrases we created, alteryx_server, the top five similarity matches are:

| Word | Similarity |
|---|---|
| scheduler | 0.8319 |
| server | 0.8277 |
| alteryx_designer | 0.8203 |
| designer | 0.7817 |
| desktop | 0.7441 |

In contrast, most_similar() for sql_server returns:

| Word | Similarity |
|---|---|
| oracle | 0.9259 |
| odbc | 0.9094 |
| teradata | 0.8841 |
| hive | 0.8824 |
| odbc_driver | 0.8649 |

For me, the distinction between Alteryx Server and SQL Server feels like a huge win. Alteryx Server is matching more with other applications like scheduler and Alteryx Designer, whereas SQL Server is matching with other databases.

Next test: what happens when we run doesnt_match() queries?

The doesnt_match() method takes a list of words and identifies the one whose vector is least similar to the others in the set.

When we provide formula_tool, select_tool, sample_tool, and decision_tree, the model correctly identifies that the predictive model, decision_tree, is the phrase that doesn’t belong.

print(model.wv.doesnt_match(['formula_tool', 'select_tool', 'sample_tool', 'decision_tree']))

When we try R, Python, html, and Alteryx, html is identified as the outlier.

print(model.wv.doesnt_match(['r', 'python', 'html', 'alteryx']))
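As a rough sketch of the idea (similar in spirit to Gensim's approach, but not its code): normalize each vector to unit length, average them, and return the word whose vector has the lowest cosine similarity to that average. The 2-d vectors below are hypothetical.

```python
import math


def unit(v):
    """Scale a vector to length 1."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def doesnt_match(words, vectors):
    """Return the word whose unit vector is least similar to the mean of all the unit vectors."""
    units = [unit(vectors[w]) for w in words]
    mean = unit([sum(col) / len(units) for col in zip(*units)])
    sims = [sum(a * b for a, b in zip(u, mean)) for u in units]
    return words[sims.index(min(sims))]


# Hypothetical 2-d vectors: three Designer tools and one predictive model
vectors = {
    "formula_tool": [0.9, 0.1],
    "select_tool": [0.8, 0.2],
    "sample_tool": [0.85, 0.15],
    "decision_tree": [0.1, 0.9],
}
print(doesnt_match(list(vectors), vectors))  # decision_tree
```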

So far, this all seems to be tracking pretty well. Now, let's try some word math! The most_similar() method accepts a positive= argument listing words to add together and a negative= argument listing words to subtract.

To start exploring simple word addition, we add the vectors for regex and formula together:

print(model.wv.most_similar(positive=['regex', 'formula'], topn=5))

As a result, we see the following words have the closest vectors to the new vector created by adding the vectors for regex and formula together:

| Word | Similarity |
|---|---|
| formula_tool | 0.8484 |
| regex_tool | 0.8438 |
| expression | 0.8064 |
| regular_expression | 0.7985 |
| filter_tool | 0.7745 |

filter_tool seems like a bit of an outlier, but the other four words make sense: you can execute regex (regular expressions) in either the Formula tool or the Regex tool.

When we add the vectors for spatial_data and drivetime together:

print(model.wv.most_similar(positive=['spatial_data', 'drivetime'], topn=5))

The closest word vectors are:

| Word | Similarity |
|---|---|
| demographic | 0.8739 |
| demographic_data | 0.8333 |
| tomtom | 0.8214 |
| terabytes | 0.8017 |
| geographic | 0.8766 |

The appearance of tomtom in these results is exciting, because TomTom provides the majority of the drivetime data included with a Spatial Data license.

Now, we can try out a few analogies by performing addition and subtraction with our word vectors.
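Under the hood, Gensim does not simply add and subtract the raw vectors. Roughly, each word's unit-length vector is weighted +1 (positive) or -1 (negative), the weighted vectors are averaged, and the rest of the vocabulary is ranked by cosine similarity to that average, excluding the query words. The sketch below mimics that procedure with hypothetical 3-d toy vectors; the helper names are illustrative, not Gensim's API.

```python
import math


def unit(v):
    """Scale a vector to length 1."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def combine(positive, negative, vectors):
    """Mean of unit vectors, weighted +1 for positive words and -1 for negative words, re-normalized."""
    weighted = [unit(vectors[w]) for w in positive]
    weighted += [[-x for x in unit(vectors[w])] for w in negative]
    return unit([sum(col) / len(weighted) for col in zip(*weighted)])


def most_similar(positive, vectors, negative=(), topn=3):
    """Rank words (excluding the query words) by cosine similarity to the combined query vector."""
    query = combine(positive, negative, vectors)
    skip = set(positive) | set(negative)
    scores = [
        (w, sum(a * b for a, b in zip(unit(v), query)))
        for w, v in vectors.items()
        if w not in skip
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:topn]


# Hypothetical 3-d vectors, invented for illustration
vectors = {
    "filter": [1.0, 0.0, 0.0],
    "filter_tool": [1.0, 1.0, 0.0],
    "formula": [0.0, 0.0, 1.0],
    "formula_tool": [0.0, 1.0, 1.0],
    "union": [0.5, 0.0, 0.5],
}
# filter is to filter_tool as formula is to ?
result = most_similar(["filter_tool", "formula"], vectors, negative=["filter"], topn=1)
print(result)
```

Normalizing first means frequent words with long vectors don't dominate the combined query.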

First, let's try: CEO is to Dean Stoecker as CTO is to ?

To execute this analogy, we add the word vectors for dean_stoecker and cto together, and subtract the word vector for ceo.

print(model.wv.most_similar(positive=['dean_stoecker', 'cto'], negative=['ceo']))

The results are:

| Word | Similarity |
|---|---|
| jarrod | 0.8906 |
| laurenu | 0.8906 |
| jona | 0.8828 |
| mendelson | 0.8827 |
| alexk | 0.8824 |

... this is not quite what I was hoping for. The expected answer, ned_harding, is down in 9th place, with a cosine similarity of 0.8801. Clearly, this is not a relationship the word2vec model was able to capture from the Community corpus. (As a side note, ned_harding does show up as the top result when adding the vectors for dean_stoecker and co_founder and subtracting the vector for olivia_duane.)

Trying CEO is to Dean Stoecker as CCO is to ? (looking for our Chief Customer Officer, Libby Duane Adams) returns an error that "cco" is not in the word2vec vocabulary, indicating that the word cco occurs fewer than five times in the Community corpus.

Let's try another semantic analogy, and then a syntactic one.

filter is to filter_tool as formula is to ?

print(model.wv.most_similar(positive=['filter_tool', 'formula'], negative=['filter'], topn=5))

Success!

| Word | Similarity |
|---|---|
| formula_tool | 0.8834 |
| regex_tool | 0.8088 |
| expression | 0.8047 |
| datetime_tool | 0.7553 |
| regular_expression | 0.7525 |

And for our syntactic test: help is to helps as thank is to ?

print(model.wv.most_similar(positive=['help', 'thank'], negative=['helps'], topn=5))

The results look good, but the cosine similarities are lower than in the earlier queries.

| Word | Similarity |
|---|---|
| thanks | 0.5865 |
| thanks_again | 0.5441 |
| reply | 0.4880 |
| thank_you_so_much | 0.4788 |
| exactly_what | 0.4786 |

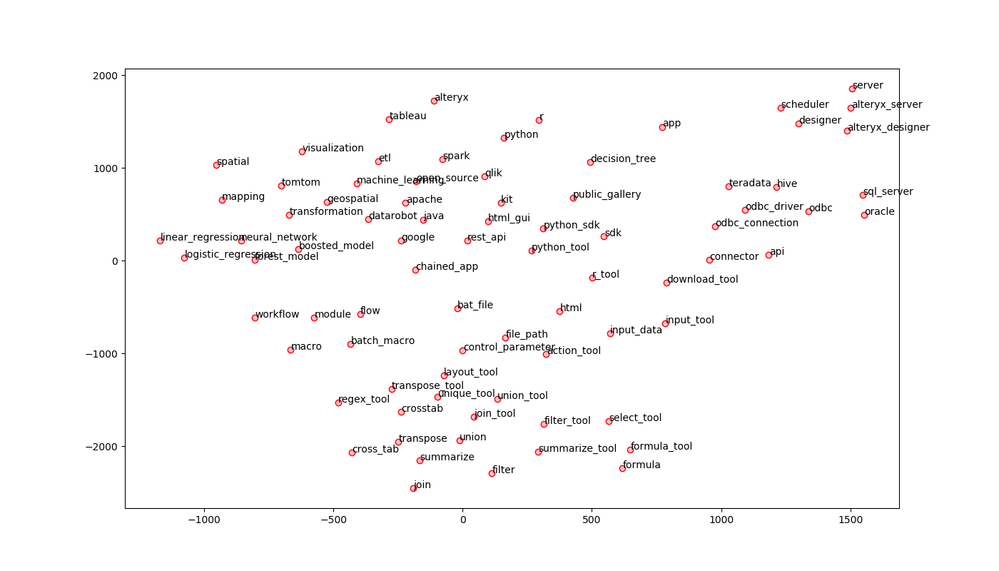

If you've stuck with me through all of the word math, I will wrap up this post with a plot of a subset of the word vectors. Word vectors learned by word2vec models can be visualized using t-SNE (t-distributed stochastic neighbor embedding), a machine learning algorithm that reduces high-dimensional data (e.g., 100-dimension word vectors) to two or three dimensions. Kaggle has a great tutorial on using t-SNE to plot word vectors.

Points close together suggest that the corresponding word vectors are close, indicating that the words are more related. In the top right corner of the plot, we can see a nice group of Alteryx products. The word vectors for Alteryx and Tableau are plotted relatively close to one another, and the offset from formula to formula_tool points in a similar direction to the offsets from filter to filter_tool and from union to union_tool.
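The similar-offset observation can also be checked numerically. Because t-SNE preserves local neighborhoods rather than exact distances and directions, comparing offsets in the original vector space is a more direct test. A minimal sketch with hypothetical 2-d vectors: compute each word-to-tool offset and measure its cosine similarity to the formula-to-formula_tool offset, where values near 1 mean the offsets point the same way.

```python
import math


def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the vector lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def offset(vectors, a, b):
    """Vector pointing from word a to word b."""
    return [y - x for x, y in zip(vectors[a], vectors[b])]


# Hypothetical 2-d vectors, invented for illustration
vectors = {
    "formula": [0.2, 0.1], "formula_tool": [0.5, 0.6],
    "filter": [0.7, 0.0], "filter_tool": [1.0, 0.5],
    "union": [0.1, 0.8], "union_tool": [0.45, 1.25],
}
base = offset(vectors, "formula", "formula_tool")
sims = {w: cosine(base, offset(vectors, w, w + "_tool")) for w in ("filter", "union")}
for w, s in sims.items():
    print(w, round(s, 3))
```

With a trained model, the same comparison could be run on the real 100-dimensional vectors instead of toy values.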

Although many of these results are promising, there are gaps in this iteration of the Community word2vec model. One likely factor is that the Community corpus is on the small side for training a word2vec model. You can help by continuing to post questions, answers, and new content 🙂 In addition to increasing the corpus size, a few parameters could be tweaked during training to potentially improve results, such as the size of the trained vectors, or using Skip-gram instead of CBOW. Creating any robust model is an iterative and experimental process, and this will be no exception. On the whole, for a phase-one product, I'm pretty excited about these embeddings. Stay tuned for updates or potential applications of these handy word vectors.

A geographer by training and a data geek at heart, Sydney joined the Alteryx team as a Customer Support Engineer in 2017. She strongly believes that data and knowledge are most valuable when they can be clearly communicated and understood. She currently manages a team of data scientists that bring new innovations to the Alteryx Platform.

