Data Science

Machine learning & data science for beginners and experts alike.- Community

- :

- Community

- :

- Learn

- :

- Blogs

- :

- Data Science

- :

- Data Wrangling 101: Using Python to Fetch, Manipul...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Notify Moderator

This is a basic tutorial using pandas and a few other packages to build a simple datapipe for getting NBA data. Even though this tutorial is done using NBA data, you don't need to be an NBA fan to follow along. The same concepts and techniques can be applied to any project of your choosing.

This is meant to be used as a general tutorial for beginners with some experience in Python or R.

Step One: What data do we need?

The first step to any data project is getting an idea of what you want. We're going to focus on getting NBA data at a team level on a game by game basis. From my experience, these team level stats usually exist in different places, making them harder to compare across games.

Our goal is to build box scores across a team level to easily compare them against each other. Hopefully this will give some insight as to how a team's play has changed over the course of the season or make it easier to do any other type of analysis.

On a high level, this might look something like:

Game | Days Rest | Total Passes | Total Assists | Passes/Assist | EFG | Outcome

Next step: Where is the data coming from?

stats.nba.com has all the NBA data that's out there, but the harder part is finding a quick way to fetch and manipulate it into the form that's needed (and what most of this tutorial will be about).

Analytics is fun, but everything around it can be tough.

We're going to use the nba_py package

Huge shoutout to https://github.com/seemethere for putting this together.

This is going to focus on team stats, so lets play around a little bit to get a sense of what we're working with.

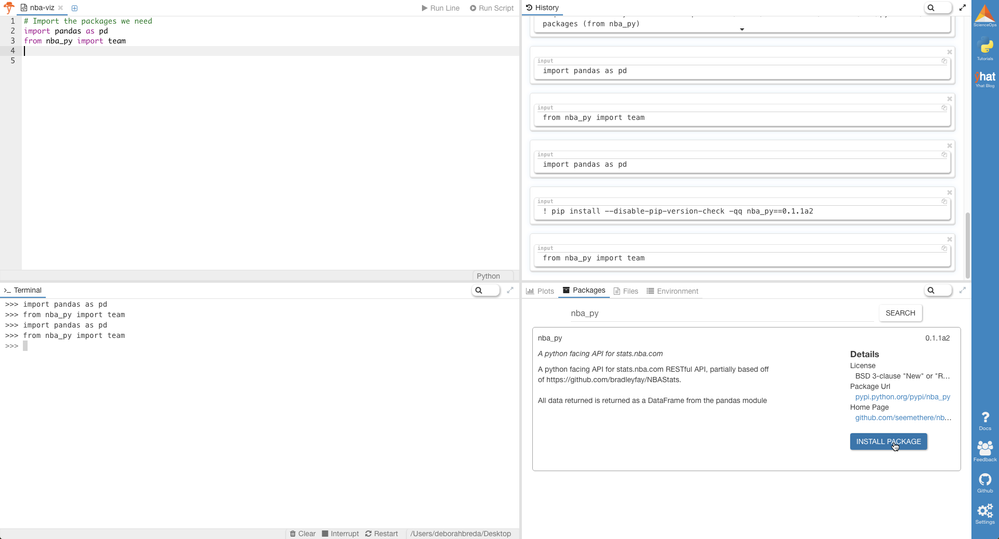

Start by importing the packages we'll need:

import pandas as pd

from nba_py import team

If you're using jupyter notebooks notebooks you can pip-install any packages you don't have straight from the notebook using:

%%bash

pip install nba_py

If you're using Yhat's Python IDE, Rodeo you can install nba_py in the packages tab.

So referring to the docs, it looks like we'll need some sort of roster id to get data for each team. This api hits an endpoint on the NBA"s website, so the IDs are most likely in the URL:

(Unapologetic Knicks bias) Looking at the team page for the on stats.nba.com, here's the url: http://stats.nba.com/team/#!/1610612752/

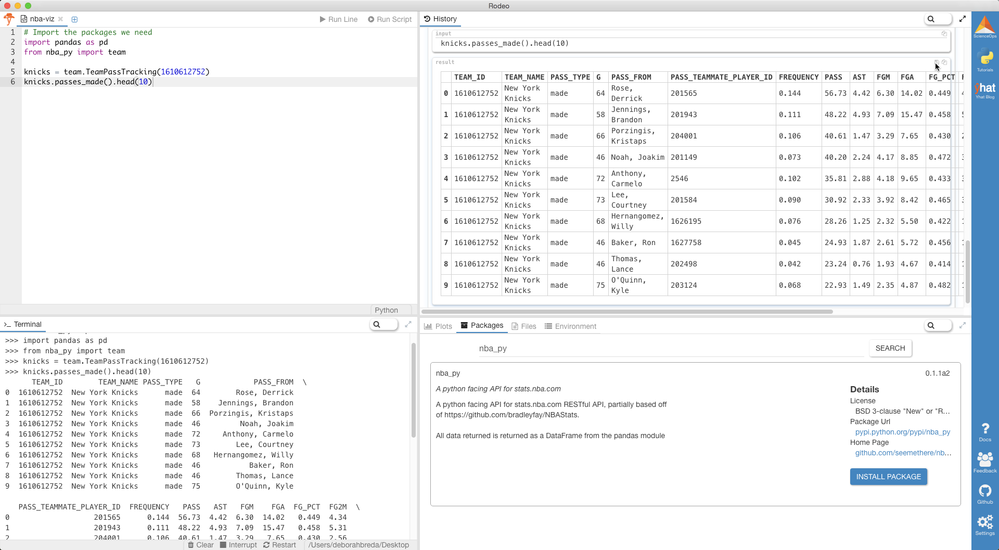

That number at the end looks like a team ID. Let's see how the passing data works:

class nba_py.team.TeamPassTracking(team_id, measure_type='Base', per_mode='PerGame', plus_minus='N', pace_adjust='N', rank='N', league_id='00', season='2016-17', season_type='Regular Season', po_round='0', outcome='', location='', month='0', season_segment='', date_from='', date_to='', opponent_team_id='0', vs_conference='', vs_division='', game_segment='', period='0', shot_clock_range='', last_n_games='0')

passes_made() passes_recieved()

knicks = team.TeamPassTracking(1610612752)

All the info is stored in the knicks object:

#the dataframe.head(N) command returns the first N rows of a dataframe

knicks.passes_made().head(10)

| TEAM_ID | TEAM_NAME | PASS_TYPE | G | PASS_FROM | PASS_TEAMMATE_PLAYER_ID | FREQUENCY | PASS | AST | FGM | FGA | FG_PCT | FG2M | FG2A | FG2_PCT | FG3M | FG3A | FG3_PCT | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1610612752 | New York Knicks | made | 64 | Rose, Derrick | 201565 | 0.144 | 56.73 | 4.42 | 6.30 | 14.02 | 0.449 | 4.34 | 8.64 | 0.503 | 1.95 | 5.38 | 0.363 |

| 1 | 1610612752 | New York Knicks | made | 58 | Jennings, Brandon | 201943 | 0.111 | 48.22 | 4.93 | 7.09 | 15.47 | 0.458 | 5.31 | 10.50 | 0.506 | 1.78 | 4.97 | 0.358 |

| 2 | 1610612752 | New York Knicks | made | 66 | Porzingis, Kristaps | 204001 | 0.106 | 40.61 | 1.47 | 3.29 | 7.65 | 0.430 | 2.56 | 5.50 | 0.466 | 0.73 | 2.15 | 0.338 |

| 3 | 1610612752 | New York Knicks | made | 46 | Noah, Joakim | 201149 | 0.073 | 40.20 | 2.24 | 4.17 | 8.85 | 0.472 | 3.43 | 6.93 | 0.495 | 0.74 | 1.91 | 0.386 |

| 4 | 1610612752 | New York Knicks | made | 72 | Anthony, Carmelo | 2546 | 0.102 | 35.83 | 2.88 | 4.18 | 9.65 | 0.433 | 3.13 | 6.99 | 0.447 | 1.06 | 2.67 | 0.396 |

| 5 | 1610612752 | New York Knicks | made | 73 | Lee, Courtney | 201584 | 0.090 | 30.92 | 2.33 | 3.92 | 8.42 | 0.465 | 3.01 | 5.97 | 0.505 | 0.90 | 2.45 | 0.369 |

| 6 | 1610612752 | New York Knicks | made | 68 | Hernangomez, Willy | 1626195 | 0.076 | 28.26 | 1.25 | 2.32 | 5.50 | 0.422 | 1.74 | 3.93 | 0.442 | 0.59 | 1.57 | 0.374 |

| 7 | 1610612752 | New York Knicks | made | 46 | Baker, Ron | 1627758 | 0.045 | 24.93 | 1.87 | 2.61 | 5.72 | 0.456 | 1.93 | 3.80 | 0.509 | 0.67 | 1.91 | 0.352 |

| 8 | 1610612752 | New York Knicks | made | 46 | Thomas, Lance | 202498 | 0.042 | 23.24 | 0.76 | 1.93 | 4.67 | 0.414 | 1.70 | 3.78 | 0.448 | 0.24 | 0.89 | 0.268 |

| 9 | 1610612752 | New York Knicks | made | 75 | O'Quinn, Kyle | 203124 | 0.068 | 22.93 | 1.49 | 2.35 | 4.87 | 0.482 | 1.93 | 3.63 | 0.533 | 0.41 | 1.24 | 0.333 |

Referring back to the docs, this looks like per game averages for passes. Definitely a lot that can be done with this, but let's try to get it for a specific game. Referring to the docs:

knicks_last_game = team.TeamPassTracking(1610612752, last_n_games = 1)

knicks_last_game.passes_made().head(10)

| TEAM_ID | TEAM_NAME | PASS_TYPE | G | PASS_FROM | PASS_TEAMMATE_PLAYER_ID | FREQUENCY | PASS | AST | FGM | FGA | FG_PCT | FG2M | FG2A | FG2_PCT | FG3M | FG3A | FG3_PCT | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1610612752 | New York Knicks | made | 1 | Baker, Ron | 1627758 | 0.212 | 72.0 | 6.0 | 7.0 | 15.0 | 0.467 | 7.0 | 11.0 | 0.636 | 0.0 | 4.0 | 0.000 |

| 1 | 1610612752 | New York Knicks | made | 1 | Ndour, Maurice | 1626254 | 0.135 | 46.0 | 1.0 | 3.0 | 9.0 | 0.333 | 3.0 | 4.0 | 0.750 | 0.0 | 5.0 | 0.000 |

| 2 | 1610612752 | New York Knicks | made | 1 | Anthony, Carmelo | 2546 | 0.126 | 43.0 | 2.0 | 5.0 | 16.0 | 0.313 | 4.0 | 13.0 | 0.308 | 1.0 | 3.0 | 0.333 |

| 3 | 1610612752 | New York Knicks | made | 1 | O'Quinn, Kyle | 203124 | 0.118 | 40.0 | 5.0 | 5.0 | 6.0 | 0.833 | 4.0 | 4.0 | 1.000 | 1.0 | 2.0 | 0.500 |

| 4 | 1610612752 | New York Knicks | made | 1 | Lee, Courtney | 201584 | 0.118 | 40.0 | 3.0 | 6.0 | 8.0 | 0.750 | 2.0 | 4.0 | 0.500 | 4.0 | 4.0 | 1.000 |

| 5 | 1610612752 | New York Knicks | made | 1 | Hernangomez, Willy | 1626195 | 0.082 | 28.0 | 3.0 | 4.0 | 8.0 | 0.500 | 4.0 | 6.0 | 0.667 | 0.0 | 2.0 | 0.000 |

| 6 | 1610612752 | New York Knicks | made | 1 | Holiday, Justin | 203200 | 0.071 | 24.0 | 3.0 | 4.0 | 7.0 | 0.571 | 4.0 | 6.0 | 0.667 | 0.0 | 1.0 | 0.000 |

| 7 | 1610612752 | New York Knicks | made | 1 | Kuzminskas, Mindaugas | 1627851 | 0.059 | 20.0 | 2.0 | 2.0 | 6.0 | 0.333 | 2.0 | 5.0 | 0.400 | 0.0 | 1.0 | 0.000 |

| 8 | 1610612752 | New York Knicks | made | 1 | Randle, Chasson | 1626184 | 0.044 | 15.0 | 0.0 | 0.0 | 1.0 | 0.000 | 0.0 | 1.0 | 0.000 | 0.0 | 0.0 | NaN |

| 9 | 1610612752 | New York Knicks | made | 1 | Vujacic, Sasha | 2756 | 0.035 | 12.0 | 1.0 | 2.0 | 3.0 | 0.667 | 2.0 | 2.0 | 1.000 | 0.0 | 1.0 | 0.000 |

This looks clean enough to be wrangled into a form that can be worked with.

If we're trying to create a team level box score, we're more than likely going to need to join tables together down the line, just something to keep in mind.

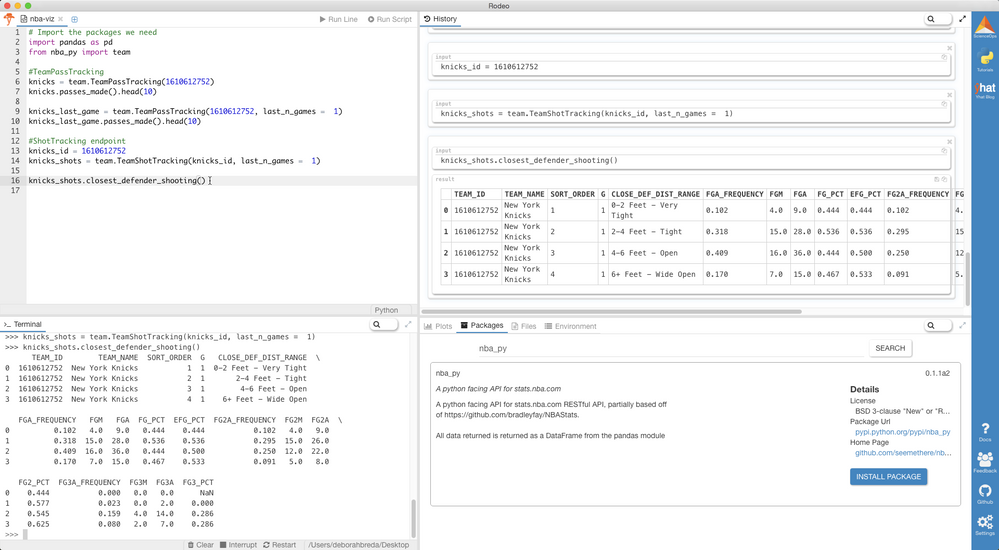

Hitting the ShotTracking endpoint looks interesting:

knicks_id = 1610612752

knicks_shots = team.TeamShotTracking(knicks_id, last_n_games = 1)

knicks_shots.closest_defender_shooting()

| TEAM_ID | TEAM_NAME | SORT_ORDER | G | CLOSE_DEF_DIST_RANGE | FGA_FREQUENCY | FGM | FGA | FG_PCT | EFG_PCT | FG2A_FREQUENCY | FG2M | FG2A | FG2_PCT | FG3A_FREQUENCY | FG3M | FG3A | FG3_PCT | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1610612752 | New York Knicks | 1 | 1 | 0-2 Feet - Very Tight | 0.091 | 4.0 | 8.0 | 0.500 | 0.500 | 0.091 | 4.0 | 8.0 | 0.500 | 0.000 | 0.0 | 0.0 | NaN |

| 1 | 1610612752 | New York Knicks | 2 | 1 | 2-4 Feet - Tight | 0.318 | 15.0 | 28.0 | 0.536 | 0.536 | 0.295 | 15.0 | 26.0 | 0.577 | 0.023 | 0.0 | 2.0 | 0.000 |

| 2 | 1610612752 | New York Knicks | 3 | 1 | 4-6 Feet - Open | 0.409 | 16.0 | 36.0 | 0.444 | 0.500 | 0.250 | 12.0 | 22.0 | 0.545 | 0.159 | 4.0 | 14.0 | 0.286 |

| 3 | 1610612752 | New York Knicks | 4 | 1 | 6+ Feet - Wide Open | 0.182 | 7.0 | 16.0 | 0.438 | 0.500 | 0.102 | 5.0 | 9.0 | 0.556 | 0.080 | 2.0 | 7.0 | 0.286 |

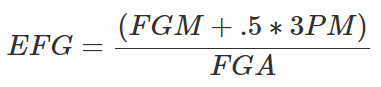

This looks interesting! We wanted EFG% (effective field goal percentage) in our original table, but it looks like we can get EFG% for open and covered shots. Let's group 'Open' and 'Wide Open' together, along with 'Tight' and 'Very Tight.'

Effective field goal percentage is a statistic that adjusts field goal percentage to account for the fact that three-point field goals count for three points while field goals only count for two points:

This might help answer questions like "Do teams hit more open shots when they win?"

df_grouped = knicks_shots.closest_defender_shooting()

df_grouped['OPEN'] = df_grouped['CLOSE_DEF_DIST_RANGE'].map(lambda x : True if 'Open' in x else False)

##This creates a new column OPEN, mapped from the 'CLOSE_DEF_DIST_RANGE' column.

##http://pandas.pydata.org/pandas-docs/stable/generated/pandas.Series.map.html

df_grouped

| TEAM_ID | TEAM_NAME | SORT_ORDER | G | CLOSE_DEF_DIST_RANGE | FGA_FREQUENCY | FGM | FGA | FG_PCT | EFG_PCT | FG2A_FREQUENCY | FG2M | FG2A | FG2_PCT | FG3A_FREQUENCY | FG3M | FG3A | FG3_PCT | OPEN | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1610612752 | New York Knicks | 1 | 1 | 0-2 Feet - Very Tight | 0.091 | 4.0 | 8.0 | 0.500 | 0.500 | 0.091 | 4.0 | 8.0 | 0.500 | 0.000 | 0.0 | 0.0 | NaN | False |

| 1 | 1610612752 | New York Knicks | 2 | 1 | 2-4 Feet - Tight | 0.318 | 15.0 | 28.0 | 0.536 | 0.536 | 0.295 | 15.0 | 26.0 | 0.577 | 0.023 | 0.0 | 2.0 | 0.000 | False |

| 2 | 1610612752 | New York Knicks | 3 | 1 | 4-6 Feet - Open | 0.409 | 16.0 | 36.0 | 0.444 | 0.500 | 0.250 | 12.0 | 22.0 | 0.545 | 0.159 | 4.0 | 14.0 | 0.286 | True |

| 3 | 1610612752 | New York Knicks | 4 | 1 | 6+ Feet - Wide Open | 0.182 | 7.0 | 16.0 | 0.438 | 0.500 | 0.102 | 5.0 | 9.0 | 0.556 | 0.080 | 2.0 | 7.0 | 0.286 | True |

The last column 'OPEN' gives us the information we need. Now we can aggregate based off of it. Let's get the total number of open shots.

total_open_shots = df_grouped.loc[df_grouped['OPEN'] == True, 'FGA'].sum()

print total_open_shots

52.0

That looks like it worked. Similarly, we can get the total number of "covered" shots taken (looks like it's a lot higher...nothing surprising there.)

Keep in mind, this is a bit misleading, as layups and other shots near the basket are more likely to have a nearby defender.

Referring to the definition for EFG%:

We definitely have all the information we need to compute this for open and covered shots:

#Mapping the formula above into a column:

open_efg = (df_grouped.loc[df_grouped['OPEN']== True, 'FGM'].sum() + (.5 * df_grouped.loc[df_grouped['OPEN']== True, 'FG3M'].sum()))/(df_grouped.loc[df_grouped['OPEN']== True, 'FGA'].sum())

covered_efg = (df_grouped.loc[df_grouped['OPEN']== False, 'FGM'].sum() + (.5 * df_grouped.loc[df_grouped['OPEN']== False, 'FG3M'].sum()))/(df_grouped.loc[df_grouped['OPEN']== False, 'FGA'].sum())

print open_efg

print covered_efg

0.5

0.527777777778

Interesting... shooting better when there's a defender nearby makes it look like there's more to the story. Then again, nothing about the Knicks ever seems to makes sense.

Referring back to the original plan, it looks like we have most of the stats we set out to get. However, we still haven't addressed:

1) Who was the game against? Who won?

2) How many days rest did each team have?

3) How are we going to get all this data together?

From the looks of it, there isn't anything in the nba_py team modules we're using that can be directly used as an identifier.

However, it looks like we can get stats for date ranges. To test this, let's look at a single game the Knicks played on Sunday, January 29th:

date = '2017-01-29'

knicks_jan = team.TeamShotTracking(knicks_id, date_from = date, date_to = date)

knicks_jan_shots = knicks_jan.closest_defender_shooting()

knicks_jan_shots

| TEAM_ID | TEAM_NAME | SORT_ORDER | G | CLOSE_DEF_DIST_RANGE | FGA_FREQUENCY | FGM | FGA | FG_PCT | EFG_PCT | FG2A_FREQUENCY | FG2M | FG2A | FG2_PCT | FG3A_FREQUENCY | FG3M | FG3A | FG3_PCT | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1610612752 | New York Knicks | 1 | 1 | 0-2 Feet - Very Tight | 0.156 | 6.0 | 20.0 | 0.300 | 0.300 | 0.148 | 6.0 | 19.0 | 0.316 | 0.008 | 0.0 | 1.0 | 0.000 |

| 1 | 1610612752 | New York Knicks | 2 | 1 | 2-4 Feet - Tight | 0.344 | 23.0 | 44.0 | 0.523 | 0.591 | 0.258 | 17.0 | 33.0 | 0.515 | 0.086 | 6.0 | 11.0 | 0.545 |

| 2 | 1610612752 | New York Knicks | 3 | 1 | 4-6 Feet - Open | 0.320 | 13.0 | 41.0 | 0.317 | 0.390 | 0.156 | 7.0 | 20.0 | 0.350 | 0.164 | 6.0 | 21.0 | 0.286 |

| 3 | 1610612752 | New York Knicks | 4 | 1 | 6+ Feet - Wide Open | 0.180 | 9.0 | 23.0 | 0.391 | 0.522 | 0.039 | 3.0 | 5.0 | 0.600 | 0.141 | 6.0 | 18.0 | 0.333 |

A quick check of the box score confirms that the Knicks shot a total of 128, so it looks like adding a date field will work out. We'll just need to figure out which dates to pass in:

We still don't know what the outcome was, so let's jump back into the docs to see if another module will help out.

#Hitting another endpoint

knicks_log = team.TeamGameLogs(knicks_id)

knicks_log.info()

| Team_ID | Game_ID | GAME_DATE | MATCHUP | WL | W | L | W_PCT | MIN | FGM | ... | FT_PCT | OREB | DREB | REB | AST | STL | BLK | TOV | PF | PTS | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1610612752 | 0021601160 | APR 04, 2017 | NYK vs. CHI | W | 30 | 48 | 0.385 | 240 | 42 | ... | 0.625 | 16 | 37 | 53 | 26 | 5 | 7 | 15 | 22 | 100 |

| 1 | 1610612752 | 0021601145 | APR 02, 2017 | NYK vs. BOS | L | 29 | 48 | 0.377 | 240 | 33 | ... | 0.840 | 8 | 24 | 32 | 20 | 12 | 2 | 11 | 20 | 94 |

| 2 | 1610612752 | 0021601133 | MAR 31, 2017 | NYK @ MIA | W | 29 | 47 | 0.382 | 240 | 38 | ... | 0.941 | 8 | 31 | 39 | 25 | 9 | 5 | 14 | 18 | 98 |

| 3 | 1610612752 | 0021601115 | MAR 29, 2017 | NYK vs. MIA | L | 28 | 47 | 0.373 | 240 | 33 | ... | 0.810 | 17 | 35 | 52 | 19 | 2 | 6 | 14 | 16 | 88 |

| 4 | 1610612752 | 0021601098 | MAR 27, 2017 | NYK vs. DET | W | 28 | 46 | 0.378 | 240 | 45 | ... | 0.923 | 4 | 33 | 37 | 26 | 13 | 5 | 12 | 16 | 109 |

| 5 | 1610612752 | 0021601085 | MAR 25, 2017 | NYK @ SAS | L | 27 | 46 | 0.370 | 240 | 41 | ... | 0.867 | 12 | 33 | 45 | 24 | 6 | 5 | 16 | 16 | 98 |

| 6 | 1610612752 | 0021601071 | MAR 23, 2017 | NYK @ POR | L | 27 | 45 | 0.375 | 240 | 36 | ... | 0.900 | 9 | 31 | 40 | 23 | 5 | 9 | 11 | 20 | 95 |

| 7 | 1610612752 | 0021601066 | MAR 22, 2017 | NYK @ UTA | L | 27 | 44 | 0.380 | 240 | 38 | ... | 0.889 | 9 | 27 | 36 | 19 | 5 | 1 | 11 | 26 | 101 |

| 8 | 1610612752 | 0021601050 | MAR 20, 2017 | NYK @ LAC | L | 27 | 43 | 0.386 | 240 | 40 | ... | 0.792 | 14 | 34 | 48 | 24 | 6 | 1 | 12 | 19 | 105 |

| 9 | 1610612752 | 0021601016 | MAR 16, 2017 | NYK vs. BKN | L | 27 | 42 | 0.391 | 240 | 41 | ... | 0.962 | 5 | 29 | 34 | 20 | 6 | 4 | 7 | 26 | 110 |

| 10 | 1610612752 | 0021601001 | MAR 14, 2017 | NYK vs. IND | W | 27 | 41 | 0.397 | 240 | 35 | ... | 0.615 | 11 | 41 | 52 | 21 | 8 | 4 | 14 | 15 | 87 |

| 11 | 1610612752 | 0021600986 | MAR 12, 2017 | NYK @ BKN | L | 26 | 41 | 0.388 | 240 | 39 | ... | 0.813 | 11 | 32 | 43 | 22 | 5 | 8 | 9 | 20 | 112 |

| 12 | 1610612752 | 0021600975 | MAR 11, 2017 | NYK @ DET | L | 26 | 40 | 0.394 | 240 | 36 | ... | 0.636 | 8 | 36 | 44 | 26 | 4 | 7 | 18 | 18 | 92 |

| 13 | 1610612752 | 0021600952 | MAR 08, 2017 | NYK @ MIL | L | 26 | 39 | 0.400 | 240 | 39 | ... | 0.667 | 10 | 33 | 43 | 22 | 4 | 6 | 15 | 20 | 93 |

| 14 | 1610612752 | 0021600935 | MAR 06, 2017 | NYK @ ORL | W | 26 | 38 | 0.406 | 240 | 40 | ... | 0.964 | 12 | 33 | 45 | 26 | 6 | 1 | 9 | 23 | 113 |

| 15 | 1610612752 | 0021600928 | MAR 05, 2017 | NYK vs. GSW | L | 25 | 38 | 0.397 | 240 | 39 | ... | 0.800 | 12 | 35 | 47 | 18 | 5 | 6 | 15 | 20 | 105 |

| 16 | 1610612752 | 0021600909 | MAR 03, 2017 | NYK @ PHI | L | 25 | 37 | 0.403 | 240 | 33 | ... | 0.879 | 9 | 32 | 41 | 14 | 10 | 3 | 10 | 20 | 102 |

| 17 | 1610612752 | 0021600895 | MAR 01, 2017 | NYK @ ORL | W | 25 | 36 | 0.410 | 240 | 34 | ... | 0.806 | 13 | 37 | 50 | 21 | 9 | 3 | 11 | 16 | 101 |

| 18 | 1610612752 | 0021600882 | FEB 27, 2017 | NYK vs. TOR | L | 24 | 36 | 0.400 | 240 | 33 | ... | 0.842 | 8 | 32 | 40 | 17 | 10 | 6 | 17 | 19 | 91 |

| 19 | 1610612752 | 0021600868 | FEB 25, 2017 | NYK vs. PHI | W | 24 | 35 | 0.407 | 240 | 43 | ... | 0.783 | 10 | 34 | 44 | 21 | 6 | 7 | 11 | 22 | 110 |

| 20 | 1610612752 | 0021600853 | FEB 23, 2017 | NYK @ CLE | L | 23 | 35 | 0.397 | 240 | 42 | ... | 0.706 | 16 | 34 | 50 | 24 | 4 | 7 | 12 | 19 | 104 |

| 21 | 1610612752 | 0021600845 | FEB 15, 2017 | NYK @ OKC | L | 23 | 34 | 0.404 | 240 | 41 | ... | 0.857 | 6 | 33 | 39 | 19 | 8 | 12 | 15 | 21 | 105 |

| 22 | 1610612752 | 0021600817 | FEB 12, 2017 | NYK vs. SAS | W | 23 | 33 | 0.411 | 240 | 34 | ... | 0.810 | 5 | 39 | 44 | 18 | 5 | 8 | 19 | 19 | 94 |

| 23 | 1610612752 | 0021600800 | FEB 10, 2017 | NYK vs. DEN | L | 22 | 33 | 0.400 | 240 | 52 | ... | 0.600 | 10 | 23 | 33 | 36 | 10 | 5 | 10 | 14 | 123 |

| 24 | 1610612752 | 0021600791 | FEB 08, 2017 | NYK vs. LAC | L | 22 | 32 | 0.407 | 240 | 46 | ... | 0.833 | 12 | 29 | 41 | 25 | 9 | 5 | 11 | 22 | 115 |

| 25 | 1610612752 | 0021600768 | FEB 06, 2017 | NYK vs. LAL | L | 22 | 31 | 0.415 | 240 | 37 | ... | 0.788 | 6 | 34 | 40 | 16 | 4 | 4 | 16 | 24 | 107 |

| 26 | 1610612752 | 0021600759 | FEB 04, 2017 | NYK vs. CLE | L | 22 | 30 | 0.423 | 240 | 39 | ... | 0.500 | 13 | 29 | 42 | 23 | 9 | 7 | 10 | 20 | 104 |

| 27 | 1610612752 | 0021600733 | FEB 01, 2017 | NYK @ BKN | W | 22 | 29 | 0.431 | 240 | 35 | ... | 0.613 | 21 | 37 | 58 | 23 | 16 | 7 | 13 | 18 | 95 |

| 28 | 1610612752 | 0021600724 | JAN 31, 2017 | NYK @ WAS | L | 21 | 29 | 0.420 | 240 | 34 | ... | 0.800 | 22 | 29 | 51 | 18 | 8 | 2 | 12 | 17 | 101 |

| 29 | 1610612752 | 0021600711 | JAN 29, 2017 | NYK @ ATL | L | 21 | 28 | 0.429 | 340 | 51 | ... | 0.826 | 15 | 48 | 63 | 32 | 9 | 11 | 12 | 39 | 139 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 48 | 1610612752 | 0021600456 | DEC 25, 2016 | NYK vs. BOS | L | 16 | 14 | 0.533 | 240 | 41 | ... | 0.889 | 17 | 32 | 49 | 11 | 5 | 6 | 17 | 23 | 114 |

| 49 | 1610612752 | 0021600438 | DEC 22, 2016 | NYK vs. ORL | W | 16 | 13 | 0.552 | 240 | 41 | ... | 0.882 | 18 | 34 | 52 | 26 | 9 | 9 | 15 | 18 | 106 |

| 50 | 1610612752 | 0021600421 | DEC 20, 2016 | NYK vs. IND | W | 15 | 13 | 0.536 | 240 | 44 | ... | 0.810 | 4 | 41 | 45 | 24 | 6 | 8 | 14 | 18 | 118 |

| 51 | 1610612752 | 0021600404 | DEC 17, 2016 | NYK @ DEN | L | 14 | 13 | 0.519 | 240 | 35 | ... | 0.923 | 9 | 26 | 35 | 18 | 6 | 4 | 11 | 27 | 114 |

| 52 | 1610612752 | 0021600388 | DEC 15, 2016 | NYK @ GSW | L | 14 | 12 | 0.538 | 240 | 38 | ... | 0.474 | 14 | 35 | 49 | 19 | 10 | 5 | 11 | 10 | 90 |

| 53 | 1610612752 | 0021600372 | DEC 13, 2016 | NYK @ PHX | L | 14 | 11 | 0.560 | 265 | 38 | ... | 0.737 | 11 | 32 | 43 | 23 | 10 | 4 | 13 | 27 | 111 |

| 54 | 1610612752 | 0021600360 | DEC 11, 2016 | NYK @ LAL | W | 14 | 10 | 0.583 | 240 | 41 | ... | 0.839 | 8 | 36 | 44 | 21 | 9 | 11 | 10 | 15 | 118 |

| 55 | 1610612752 | 0021600345 | DEC 09, 2016 | NYK @ SAC | W | 13 | 10 | 0.565 | 240 | 36 | ... | 0.840 | 12 | 42 | 54 | 22 | 3 | 6 | 16 | 25 | 103 |

| 56 | 1610612752 | 0021600327 | DEC 07, 2016 | NYK vs. CLE | L | 12 | 10 | 0.545 | 240 | 35 | ... | 0.867 | 13 | 30 | 43 | 22 | 6 | 3 | 16 | 22 | 94 |

| 57 | 1610612752 | 0021600316 | DEC 06, 2016 | NYK @ MIA | W | 12 | 9 | 0.571 | 240 | 48 | ... | 0.688 | 18 | 35 | 53 | 22 | 6 | 6 | 10 | 18 | 114 |

| 58 | 1610612752 | 0021600302 | DEC 04, 2016 | NYK vs. SAC | W | 11 | 9 | 0.550 | 240 | 39 | ... | 0.708 | 14 | 44 | 58 | 20 | 5 | 10 | 18 | 25 | 106 |

| 59 | 1610612752 | 0021600285 | DEC 02, 2016 | NYK vs. MIN | W | 10 | 9 | 0.526 | 240 | 41 | ... | 0.800 | 11 | 32 | 43 | 26 | 9 | 6 | 15 | 18 | 118 |

| 60 | 1610612752 | 0021600271 | NOV 30, 2016 | NYK @ MIN | W | 9 | 9 | 0.500 | 240 | 41 | ... | 0.733 | 12 | 27 | 39 | 24 | 8 | 3 | 13 | 26 | 106 |

| 61 | 1610612752 | 0021600255 | NOV 28, 2016 | NYK vs. OKC | L | 8 | 9 | 0.471 | 240 | 36 | ... | 0.893 | 12 | 28 | 40 | 20 | 9 | 11 | 5 | 16 | 103 |

| 62 | 1610612752 | 0021600241 | NOV 26, 2016 | NYK @ CHA | L | 8 | 8 | 0.500 | 240 | 37 | ... | 0.800 | 11 | 36 | 47 | 26 | 9 | 6 | 8 | 27 | 102 |

| 63 | 1610612752 | 0021600228 | NOV 25, 2016 | NYK vs. CHA | W | 8 | 7 | 0.533 | 265 | 45 | ... | 0.923 | 13 | 42 | 55 | 26 | 9 | 8 | 16 | 21 | 113 |

| 64 | 1610612752 | 0021600208 | NOV 22, 2016 | NYK vs. POR | W | 7 | 7 | 0.500 | 240 | 45 | ... | 1.000 | 10 | 33 | 43 | 26 | 8 | 5 | 13 | 23 | 107 |

| 65 | 1610612752 | 0021600193 | NOV 20, 2016 | NYK vs. ATL | W | 6 | 7 | 0.462 | 240 | 42 | ... | 0.714 | 11 | 39 | 50 | 21 | 8 | 1 | 15 | 23 | 104 |

| 66 | 1610612752 | 0021600169 | NOV 17, 2016 | NYK @ WAS | L | 5 | 7 | 0.417 | 240 | 41 | ... | 0.900 | 10 | 26 | 36 | 23 | 9 | 1 | 13 | 20 | 112 |

| 67 | 1610612752 | 0021600162 | NOV 16, 2016 | NYK vs. DET | W | 5 | 6 | 0.455 | 240 | 42 | ... | 0.632 | 19 | 33 | 52 | 24 | 8 | 9 | 9 | 11 | 105 |

| 68 | 1610612752 | 0021600146 | NOV 14, 2016 | NYK vs. DAL | W | 4 | 6 | 0.400 | 240 | 34 | ... | 0.889 | 14 | 37 | 51 | 18 | 5 | 5 | 17 | 16 | 93 |

| 69 | 1610612752 | 0021600131 | NOV 12, 2016 | NYK @ TOR | L | 3 | 6 | 0.333 | 240 | 44 | ... | 0.750 | 17 | 32 | 49 | 19 | 3 | 2 | 16 | 23 | 107 |

| 70 | 1610612752 | 0021600125 | NOV 11, 2016 | NYK @ BOS | L | 3 | 5 | 0.375 | 240 | 33 | ... | 0.882 | 21 | 36 | 57 | 19 | 7 | 11 | 25 | 26 | 87 |

| 71 | 1610612752 | 0021600106 | NOV 09, 2016 | NYK vs. BKN | W | 3 | 4 | 0.429 | 240 | 44 | ... | 0.706 | 9 | 41 | 50 | 25 | 11 | 5 | 14 | 21 | 110 |

| 72 | 1610612752 | 0021600087 | NOV 06, 2016 | NYK vs. UTA | L | 2 | 4 | 0.333 | 240 | 42 | ... | 0.895 | 10 | 29 | 39 | 18 | 8 | 5 | 12 | 26 | 109 |

| 73 | 1610612752 | 0021600073 | NOV 04, 2016 | NYK @ CHI | W | 2 | 3 | 0.400 | 240 | 46 | ... | 0.762 | 11 | 29 | 40 | 32 | 7 | 2 | 5 | 23 | 117 |

| 74 | 1610612752 | 0021600058 | NOV 02, 2016 | NYK vs. HOU | L | 1 | 3 | 0.250 | 240 | 37 | ... | 0.680 | 7 | 27 | 34 | 18 | 10 | 6 | 16 | 22 | 99 |

| 75 | 1610612752 | 0021600050 | NOV 01, 2016 | NYK @ DET | L | 1 | 2 | 0.333 | 240 | 35 | ... | 0.800 | 8 | 35 | 43 | 18 | 6 | 9 | 11 | 20 | 89 |

| 76 | 1610612752 | 0021600028 | OCT 29, 2016 | NYK vs. MEM | W | 1 | 1 | 0.500 | 240 | 40 | ... | 0.641 | 6 | 35 | 41 | 24 | 4 | 4 | 12 | 25 | 111 |

| 77 | 1610612752 | 0021600001 | OCT 25, 2016 | NYK @ CLE | L | 0 | 1 | 0.000 | 240 | 32 | ... | 0.750 | 13 | 29 | 42 | 17 | 6 | 6 | 18 | 22 | 88 |

78 rows × 27 columns

Looks like this can be manipulated to get rest days:

df_game_log = knicks_log.info()

df_game_log['GAME_DATE']=pd.to_datetime(df_game_log['GAME_DATE']) ##converting the columns datatype

df_game_log['DAYS_REST'] = df_game_log['GAME_DATE']- df_game_log['GAME_DATE'].shift(-1)

df_game_log.head()

| Team_ID | Game_ID | GAME_DATE | MATCHUP | WL | W | L | W_PCT | MIN | FGM | ... | OREB | DREB | REB | AST | STL | BLK | TOV | PF | PTS | DAYS_REST | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1610612752 | 0021601160 | 2017-04-04 | NYK vs. CHI | W | 30 | 48 | 0.385 | 240 | 42 | ... | 16 | 37 | 53 | 26 | 5 | 7 | 15 | 22 | 100 | 2 days |

| 1 | 1610612752 | 0021601145 | 2017-04-02 | NYK vs. BOS | L | 29 | 48 | 0.377 | 240 | 33 | ... | 8 | 24 | 32 | 20 | 12 | 2 | 11 | 20 | 94 | 2 days |

| 2 | 1610612752 | 0021601133 | 2017-03-31 | NYK @ MIA | W | 29 | 47 | 0.382 | 240 | 38 | ... | 8 | 31 | 39 | 25 | 9 | 5 | 14 | 18 | 98 | 2 days |

| 3 | 1610612752 | 0021601115 | 2017-03-29 | NYK vs. MIA | L | 28 | 47 | 0.373 | 240 | 33 | ... | 17 | 35 | 52 | 19 | 2 | 6 | 14 | 16 | 88 | 2 days |

| 4 | 1610612752 | 0021601098 | 2017-03-27 | NYK vs. DET | W | 28 | 46 | 0.378 | 240 | 45 | ... | 4 | 33 | 37 | 26 | 13 | 5 | 12 | 16 | 109 | 2 days |

5 rows × 28 columns

df_game_log.dtypes

Team_ID int64

Game_ID object

GAME_DATE datetime64[ns]

MATCHUP object

WL object

W int64

L int64

W_PCT float64

MIN int64

FGM int64

FGA int64

FG_PCT float64

FG3M int64

FG3A int64

FG3_PCT float64

FTM int64

FTA int64

FT_PCT float64

OREB int64

DREB int64

REB int64

AST int64

STL int64

BLK int64

TOV int64

PF int64

PTS int64

DAYS_REST timedelta64[ns]

dtype: object

#We have the information we need, but it's not the right data type. To switch it back:

df_game_log['DAYS_REST'] = df_game_log['DAYS_REST'].astype('timedelta64[D]')

df_game_log.dtypes

Team_ID int64

Game_ID object

GAME_DATE datetime64[ns]

MATCHUP object

WL object

W int64

L int64

W_PCT float64

MIN int64

FGM int64

FGA int64

FG_PCT float64

FG3M int64

FG3A int64

FG3_PCT float64

FTM int64

FTA int64

FT_PCT float64

OREB int64

DREB int64

REB int64

AST int64

STL int64

BLK int64

TOV int64

PF int64

PTS int64

DAYS_REST float64

dtype: objectThis looks like we'll get all the info for all games. We'll start by appending the information for a single game and then try to do it for all dates:

df_game_log.head()

| Team_ID | Game_ID | GAME_DATE | MATCHUP | WL | W | L | W_PCT | MIN | FGM | ... | OREB | DREB | REB | AST | STL | BLK | TOV | PF | PTS | DAYS_REST | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1610612752 | 0021601160 | 2017-04-04 | NYK vs. CHI | W | 30 | 48 | 0.385 | 240 | 42 | ... | 16 | 37 | 53 | 26 | 5 | 7 | 15 | 22 | 100 | 2.0 |

| 1 | 1610612752 | 0021601145 | 2017-04-02 | NYK vs. BOS | L | 29 | 48 | 0.377 | 240 | 33 | ... | 8 | 24 | 32 | 20 | 12 | 2 | 11 | 20 | 94 | 2.0 |

| 2 | 1610612752 | 0021601133 | 2017-03-31 | NYK @ MIA | W | 29 | 47 | 0.382 | 240 | 38 | ... | 8 | 31 | 39 | 25 | 9 | 5 | 14 | 18 | 98 | 2.0 |

| 3 | 1610612752 | 0021601115 | 2017-03-29 | NYK vs. MIA | L | 28 | 47 | 0.373 | 240 | 33 | ... | 17 | 35 | 52 | 19 | 2 | 6 | 14 | 16 | 88 | 2.0 |

| 4 | 1610612752 | 0021601098 | 2017-03-27 | NYK vs. DET | W | 28 | 46 | 0.378 | 240 | 45 | ... | 4 | 33 | 37 | 26 | 13 | 5 | 12 | 16 | 109 | 2.0 |

5 rows × 28 columns

#Get the dates from the game logs and pass them into the other functions:

dates = df_game_log['GAME_DATE']

print len(dates)

78

#We have the first date, so now to get the relevant passing and shot info we need:

date = dates[2] ##picking a random date

game_info = team.TeamPassTracking(knicks_id, date_from =date, date_to = date).passes_made()

game_info['GAME_DATE'] = date

game_info

| TEAM_ID | TEAM_NAME | PASS_TYPE | G | PASS_FROM | PASS_TEAMMATE_PLAYER_ID | FREQUENCY | PASS | AST | FGM | FGA | FG_PCT | FG2M | FG2A | FG2_PCT | FG3M | FG3A | FG3_PCT | GAME_DATE | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1610612752 | New York Knicks | made | 1 | Baker, Ron | 1627758 | 0.238 | 67.0 | 6.0 | 7.0 | 18.0 | 0.389 | 5.0 | 14.0 | 0.357 | 2.0 | 4.0 | 0.5 | 2017-03-31 |

| 1 | 1610612752 | New York Knicks | made | 1 | Vujacic, Sasha | 2756 | 0.149 | 42.0 | 7.0 | 8.0 | 15.0 | 0.533 | 5.0 | 9.0 | 0.556 | 3.0 | 6.0 | 0.5 | 2017-03-31 |

| 2 | 1610612752 | New York Knicks | made | 1 | Hernangomez, Willy | 1626195 | 0.131 | 37.0 | 2.0 | 3.0 | 5.0 | 0.600 | 3.0 | 5.0 | 0.600 | 0.0 | 0.0 | NaN | 2017-03-31 |

| 3 | 1610612752 | New York Knicks | made | 1 | Porzingis, Kristaps | 204001 | 0.113 | 32.0 | 3.0 | 4.0 | 9.0 | 0.444 | 4.0 | 7.0 | 0.571 | 0.0 | 2.0 | 0.0 | 2017-03-31 |

| 4 | 1610612752 | New York Knicks | made | 1 | Lee, Courtney | 201584 | 0.106 | 30.0 | 1.0 | 4.0 | 6.0 | 0.667 | 4.0 | 5.0 | 0.800 | 0.0 | 1.0 | 0.0 | 2017-03-31 |

| 5 | 1610612752 | New York Knicks | made | 1 | O'Quinn, Kyle | 203124 | 0.096 | 27.0 | 3.0 | 3.0 | 5.0 | 0.600 | 3.0 | 5.0 | 0.600 | 0.0 | 0.0 | NaN | 2017-03-31 |

| 6 | 1610612752 | New York Knicks | made | 1 | Holiday, Justin | 203200 | 0.082 | 23.0 | 2.0 | 4.0 | 5.0 | 0.800 | 3.0 | 3.0 | 1.000 | 1.0 | 2.0 | 0.5 | 2017-03-31 |

| 7 | 1610612752 | New York Knicks | made | 1 | Randle, Chasson | 1626184 | 0.057 | 16.0 | 0.0 | 0.0 | 2.0 | 0.000 | 0.0 | 2.0 | 0.000 | 0.0 | 0.0 | NaN | 2017-03-31 |

| 8 | 1610612752 | New York Knicks | made | 1 | Ndour, Maurice | 1626254 | 0.028 | 8.0 | 1.0 | 1.0 | 2.0 | 0.500 | 1.0 | 2.0 | 0.500 | 0.0 | 0.0 | NaN | 2017-03-31 |

##Sum everything by GAME_DATE, similar to SQL-style aggregation/groupby:

df_sum = game_info.groupby(['GAME_DATE']).sum()

df_sum.reset_index(level = 0, inplace = True)

df_sum

| GAME_DATE | TEAM_ID | G | PASS_TEAMMATE_PLAYER_ID | FREQUENCY | PASS | AST | FGM | FGA | FG_PCT | FG2M | FG2A | FG2_PCT | FG3M | FG3A | FG3_PCT | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2017-03-31 | 14495514768 | 9 | 7321056 | 1.0 | 282.0 | 25.0 | 34.0 | 67.0 | 4.533 | 28.0 | 52.0 | 4.984 | 6.0 | 15.0 | 1.5 |

When we merge this row back up to the bigger dataframe, we can drop the columns we don't need.

shot_info = team.TeamShotTracking(knicks_id, date_from = date, date_to = date).closest_defender_shooting()

shot_info

| TEAM_ID | TEAM_NAME | SORT_ORDER | G | CLOSE_DEF_DIST_RANGE | FGA_FREQUENCY | FGM | FGA | FG_PCT | EFG_PCT | FG2A_FREQUENCY | FG2M | FG2A | FG2_PCT | FG3A_FREQUENCY | FG3M | FG3A | FG3_PCT | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1610612752 | New York Knicks | 1 | 1 | 0-2 Feet - Very Tight | 0.200 | 8.0 | 16.0 | 0.500 | 0.500 | 0.200 | 8.0 | 16.0 | 0.500 | 0.000 | 0.0 | 0.0 | NaN |

| 1 | 1610612752 | New York Knicks | 2 | 1 | 2-4 Feet - Tight | 0.450 | 15.0 | 36.0 | 0.417 | 0.417 | 0.425 | 15.0 | 34.0 | 0.441 | 0.025 | 0.0 | 2.0 | 0.0 |

| 2 | 1610612752 | New York Knicks | 3 | 1 | 4-6 Feet - Open | 0.263 | 11.0 | 21.0 | 0.524 | 0.619 | 0.138 | 7.0 | 11.0 | 0.636 | 0.125 | 4.0 | 10.0 | 0.4 |

| 3 | 1610612752 | New York Knicks | 4 | 1 | 6+ Feet - Wide Open | 0.088 | 4.0 | 7.0 | 0.571 | 0.714 | 0.038 | 2.0 | 3.0 | 0.667 | 0.050 | 2.0 | 4.0 | 0.5 |

#From earlier

shot_info['OPEN'] = shot_info['CLOSE_DEF_DIST_RANGE'].map(lambda x: True if 'Open' in x else False)

df_sum['OPEN_SHOTS'] = shot_info.loc[shot_info['OPEN']== True, 'FGA'].sum()

df_sum['OPEN_EFG'] = (shot_info.loc[shot_info['OPEN']== True, 'FGM'].sum() + (.5 * shot_info.loc[shot_info['OPEN']== True, 'FG3M'].sum()))/(shot_info.loc[shot_info['OPEN']== True, 'FGA'].sum())

df_sum['COVERED_EFG']= (shot_info.loc[shot_info['OPEN']== False, 'FGM'].sum() + (.5 * shot_info.loc[shot_info['OPEN']== False, 'FG3M'].sum()))/(shot_info.loc[shot_info['OPEN']== False, 'FGA'].sum())

df_sum

| GAME_DATE | TEAM_ID | G | PASS_TEAMMATE_PLAYER_ID | FREQUENCY | PASS | AST | FGM | FGA | FG_PCT | FG2M | FG2A | FG2_PCT | FG3M | FG3A | FG3_PCT | OPEN_SHOTS | OPEN_EFG | COVERED_EFG | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2017-03-31 | 14495514768 | 9 | 7321056 | 1.0 | 282.0 | 25.0 | 34.0 | 67.0 | 4.533 | 28.0 | 52.0 | 4.984 | 6.0 | 15.0 | 1.5 | 28.0 | 0.642857 | 0.442308 |

Now to append the columns we need back up. This is going to work like a SQL left-join.

df_custom_boxscore = pd.merge(df_game_log, df_sum[['PASS', 'FG2M', 'OPEN_SHOTS' ,'OPEN_EFG', 'COVERED_EFG']], how = 'left', left_on = df_game_log['GAME_DATE'], right_on = df_sum['GAME_DATE'])

df_custom_boxscore.head(10)

| Team_ID | Game_ID | GAME_DATE | MATCHUP | WL | W | L | W_PCT | MIN | FGM | ... | BLK | TOV | PF | PTS | DAYS_REST | PASS | FG2M | OPEN_SHOTS | OPEN_EFG | COVERED_EFG | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1610612752 | 0021601160 | 2017-04-04 | NYK vs. CHI | W | 30 | 48 | 0.385 | 240 | 42 | ... | 7 | 15 | 22 | 100 | 2.0 | NaN | NaN | NaN | NaN | NaN |

| 1 | 1610612752 | 0021601145 | 2017-04-02 | NYK vs. BOS | L | 29 | 48 | 0.377 | 240 | 33 | ... | 2 | 11 | 20 | 94 | 2.0 | NaN | NaN | NaN | NaN | NaN |

| 2 | 1610612752 | 0021601133 | 2017-03-31 | NYK @ MIA | W | 29 | 47 | 0.382 | 240 | 38 | ... | 5 | 14 | 18 | 98 | 2.0 | 282.0 | 28.0 | 28.0 | 0.642857 | 0.442308 |

| 3 | 1610612752 | 0021601115 | 2017-03-29 | NYK vs. MIA | L | 28 | 47 | 0.373 | 240 | 33 | ... | 6 | 14 | 16 | 88 | 2.0 | NaN | NaN | NaN | NaN | NaN |

| 4 | 1610612752 | 0021601098 | 2017-03-27 | NYK vs. DET | W | 28 | 46 | 0.378 | 240 | 45 | ... | 5 | 12 | 16 | 109 | 2.0 | NaN | NaN | NaN | NaN | NaN |

| 5 | 1610612752 | 0021601085 | 2017-03-25 | NYK @ SAS | L | 27 | 46 | 0.370 | 240 | 41 | ... | 5 | 16 | 16 | 98 | 2.0 | NaN | NaN | NaN | NaN | NaN |

| 6 | 1610612752 | 0021601071 | 2017-03-23 | NYK @ POR | L | 27 | 45 | 0.375 | 240 | 36 | ... | 9 | 11 | 20 | 95 | 1.0 | NaN | NaN | NaN | NaN | NaN |

| 7 | 1610612752 | 0021601066 | 2017-03-22 | NYK @ UTA | L | 27 | 44 | 0.380 | 240 | 38 | ... | 1 | 11 | 26 | 101 | 2.0 | NaN | NaN | NaN | NaN | NaN |

| 8 | 1610612752 | 0021601050 | 2017-03-20 | NYK @ LAC | L | 27 | 43 | 0.386 | 240 | 40 | ... | 1 | 12 | 19 | 105 | 4.0 | NaN | NaN | NaN | NaN | NaN |

| 9 | 1610612752 | 0021601016 | 2017-03-16 | NYK vs. BKN | L | 27 | 42 | 0.391 | 240 | 41 | ... | 4 | 7 | 26 | 110 | 2.0 | NaN | NaN | NaN | NaN | NaN |

10 rows × 33 columns

Looks like everything joined correctly for exactly the date we chose. Let's make some modifications and then work on a script to join the rest of the dates.

df_custom_boxscore['PASS_AST'] = df_custom_boxscore['PASS'] / df_custom_boxscore['AST']

#It's easier to work with dichotomos variables as binaries instead of letters

df_custom_boxscore['RESULT'] = df_custom_boxscore['WL'].map(lambda x: 1 if 'W' in x else 0)We should be good to go!

Put all the steps above into a function:

def custom_boxscore(roster_id😞

game_logs = team.TeamGameLogs(roster_id)

df_game_logs = game_logs.info()

df_game_logs['GAME_DATE'] = pd.to_datetime(df_game_logs['GAME_DATE'])

df_game_logs['DAYS_REST'] = df_game_logs['GAME_DATE'] - df_game_logs['GAME_DATE'].shift(-1)

df_game_logs['DAYS_REST'] = df_game_logs['DAYS_REST'].astype('timedelta64[D]')

##Just like before, that should get us the gamelogs we need and the rest days column

##Now to loop through the list of dates for our other stats

##This will build up a dataframe of the custom stats and join that to the gamelogs

df_all =pd.DataFrame() ##blank dataframe

dates = df_game_logs['GAME_DATE']

for date in dates:

game_info = team.TeamPassTracking(roster_id, date_from=date, date_to=date).passes_made()

game_info['GAME_DATE'] = date ## We need to append the date to this so we can join back

temp_df = game_info.groupby(['GAME_DATE']).sum()

temp_df.reset_index(level = 0, inplace = True)

##now to get the shot info. For the most part, we're just reusing code we've already written

open_info = team.TeamShotTracking(roster_id,date_from =date, date_to = date).closest_defender_shooting()

open_info['OPEN'] = open_info['CLOSE_DEF_DIST_RANGE'].map(lambda x: True if 'Open' in x else False)

temp_df['OPEN_SHOTS'] = open_info.loc[open_info['OPEN'] == True, 'FGA'].sum()

temp_df['OPEN_EFG']= (open_info.loc[open_info['OPEN']== True, 'FGM'].sum() + (.5 * open_info.loc[open_info['OPEN']== True, 'FG3M'].sum()))/(open_info.loc[open_info['OPEN']== True, 'FGA'].sum())

temp_df['COVERED_EFG']= (open_info.loc[open_info['OPEN']== False, 'FGM'].sum() + (.5 * open_info.loc[open_info['OPEN']== False, 'FG3M'].sum()))/(open_info.loc[open_info['OPEN']== False, 'FGA'].sum())

##append this to our bigger dataframe

df_all = df_all.append(temp_df)

df_boxscore = pd.merge(df_game_logs, df_all[['PASS', 'FG2M', 'FG2_PCT', 'OPEN_SHOTS', 'OPEN_EFG', 'COVERED_EFG']], how = 'left', left_on = df_game_logs['GAME_DATE'], right_on = df_all['GAME_DATE'])

df_boxscore['PASS_AST'] = df_boxscore['PASS'] / df_boxscore['AST']

df_boxscore['RESULT'] = df_boxscore['WL'].map(lambda x: 1 if 'W' in x else 0 )

return df_boxscoreLet's see if this worked:

df_knicks_box_scores = custom_boxscore(knicks_id)

df_knicks_box_scores.head(10)

| Team_ID | Game_ID | GAME_DATE | MATCHUP | WL | W | L | W_PCT | MIN | FGM | ... | PTS | DAYS_REST | PASS | FG2M | FG2_PCT | OPEN_SHOTS | OPEN_EFG | COVERED_EFG | PASS/ASSIST | RESULT | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1610612752 | 0021600845 | 2017-02-15 | NYK @ OKC | L | 23 | 34 | 0.404 | 240 | 41 | ... | 105 | 3.0 | 339.0 | 26.0 | 4.070 | 38.0 | 0.500000 | 0.572917 | 17.842105 | 0 |

| 1 | 1610612752 | 0021600817 | 2017-02-12 | NYK vs. SAS | W | 23 | 33 | 0.411 | 240 | 34 | ... | 94 | 2.0 | 261.0 | 22.0 | 4.882 | 28.0 | 0.750000 | 0.437500 | 14.500000 | 1 |

| 2 | 1610612752 | 0021600800 | 2017-02-10 | NYK vs. DEN | L | 22 | 33 | 0.400 | 240 | 52 | ... | 123 | 2.0 | 313.0 | 31.0 | 5.733 | 46.0 | 0.652174 | 0.638298 | 8.694444 | 0 |

| 3 | 1610612752 | 0021600791 | 2017-02-08 | NYK vs. LAC | L | 22 | 32 | 0.407 | 240 | 46 | ... | 115 | 2.0 | 336.0 | 36.0 | 4.981 | 47.0 | 0.542553 | 0.544444 | 13.440000 | 0 |

| 4 | 1610612752 | 0021600768 | 2017-02-06 | NYK vs. LAL | L | 22 | 31 | 0.415 | 240 | 37 | ... | 107 | 2.0 | 316.0 | 30.0 | 4.501 | 35.0 | 0.571429 | 0.445652 | 19.750000 | 0 |

| 5 | 1610612752 | 0021600759 | 2017-02-04 | NYK vs. CLE | L | 22 | 30 | 0.423 | 240 | 39 | ... | 104 | 3.0 | 308.0 | 21.0 | 3.457 | 46.0 | 0.510870 | 0.486842 | 13.391304 | 0 |

| 6 | 1610612752 | 0021600733 | 2017-02-01 | NYK @ BKN | W | 22 | 29 | 0.431 | 240 | 35 | ... | 95 | 1.0 | 305.0 | 23.0 | 4.150 | 37.0 | 0.297297 | 0.435484 | 13.260870 | 1 |

| 7 | 1610612752 | 0021600724 | 2017-01-31 | NYK @ WAS | L | 21 | 29 | 0.420 | 240 | 34 | ... | 101 | 2.0 | 293.0 | 24.0 | 5.245 | 41.0 | 0.317073 | 0.460784 | 16.277778 | 0 |

| 8 | 1610612752 | 0021600711 | 2017-01-29 | NYK @ ATL | L | 21 | 28 | 0.429 | 340 | 51 | ... | 139 | 2.0 | 479.0 | 32.0 | 4.137 | 64.0 | 0.437500 | 0.500000 | 14.968750 | 0 |

| 9 | 1610612752 | 0021600699 | 2017-01-27 | NYK vs. CHA | W | 21 | 27 | 0.438 | 240 | 46 | ... | 110 | 2.0 | 350.0 | 31.0 | 6.167 | 37.0 | 0.594595 | 0.483051 | 15.909091 | 1 |

10 rows × 36 columns

I'm going to throw in a safeguard against divide by 0 errors just in case. This is a really janky, ugly fix, but it'll get the job done for the time being:

def custom_boxscore(roster_id😞

game_logs = team.TeamGameLogs(roster_id)

df_game_logs = game_logs.info()

df_game_logs['GAME_DATE'] = pd.to_datetime(df_game_logs['GAME_DATE'])

df_game_logs['days_rest'] = df_game_logs['GAME_DATE'] - df_game_logs['GAME_DATE'].shift(-1)

df_game_logs['days_rest'] = df_game_logs['days_rest'].astype('timedelta64[D]')

##Just like before, that should get us the gamelogs we need and the rest days column

##Now to loop through the list of dates for our other stats

##Build up a dataframe of our custom stats and join that to the gamelogs instead of joining each individual row

df_all =pd.DataFrame() ##blank dataframe

dates = df_game_logs['GAME_DATE']

for date in dates:

game_info = team.TeamPassTracking(roster_id, date_from=date, date_to=date).passes_made()

game_info['GAME_DATE'] = date ## We need to append the date to this so we can join back

temp_df = game_info.groupby(['GAME_DATE']).sum()

temp_df.reset_index(level = 0, inplace = True)

##now to get the shot info. For the most part, we're just reusing code we've already written

open_info = team.TeamShotTracking(roster_id,date_from =date, date_to = date).closest_defender_shooting()

open_info['OPEN'] = open_info['CLOSE_DEF_DIST_RANGE'].map(lambda x: True if 'Open' in x else False)

temp_df['OPEN_SHOTS'] = open_info.loc[open_info['OPEN'] == True, 'FGA'].sum()

temp_df['COVERED_SHOTS'] = open_info.loc[open_info['OPEN'] == False, 'FGA'].sum()

if open_info.loc[open_info['OPEN']== True, 'FGA'].sum() > 0:

temp_df['OPEN_EFG']= (open_info.loc[open_info['OPEN']== True, 'FGM'].sum() + (.5 * open_info.loc[open_info['OPEN']== True, 'FG3M'].sum()))/(open_info.loc[open_info['OPEN']== True, 'FGA'].sum())

else:

temp_df['OPEN_EFG'] = 0

if open_info.loc[open_info['OPEN']== False, 'FGA'].sum() > 0:

temp_df['COVER_EFG']= (open_info.loc[open_info['OPEN']== False, 'FGM'].sum() + (.5 * open_info.loc[open_info['OPEN']== False, 'FG3M'].sum()))/(open_info.loc[open_info['OPEN']== False, 'FGA'].sum())

else:

temp_df['COVER_EFG'] = 0

##append this to our bigger dataframe

df_all = df_all.append(temp_df)

df_boxscore = pd.merge(df_game_logs, df_all[['PASS', 'FG2M', 'FG2_PCT', 'OPEN_SHOTS','COVERED_SHOTS', 'OPEN_EFG', 'COVER_EFG']], how = 'left', left_on = df_game_logs['GAME_DATE'], right_on = df_all['GAME_DATE'])

df_boxscore['PASS_ASSIST'] = df_boxscore['PASS'] / df_boxscore['AST']

df_boxscore['RESULT'] = df_boxscore['WL'].map(lambda x: 1 if 'W' in x else 0 )

return df_boxscore

df_knicks_box_scores = custom_boxscore(knicks_id)Awesome! Looks like everything came out okay. With a team_id, we can do this with every team. We just need a get a list of team_ids and team names.

From the documentation:

http://nba-py.readthedocs.io/en/0.1a2/nba_py/

import nba_py

teams = nba_py.Scoreboard()

df_teams = pd.concat([teams.east_conf_standings_by_day()[['TEAM','TEAM_ID']], teams.west_conf_standings_by_day()[['TEAM','TEAM_ID']]])

df_teams.head()

| TEAM | TEAM_ID | |

|---|---|---|

| 0 | Boston | 1610612738 |

| 1 | Cleveland | 1610612739 |

| 2 | Toronto | 1610612761 |

| 3 | Washington | 1610612764 |

| 4 | Milwaukee | 1610612749 |

Now we can pass in the team IDs to create custom boxscores for all teams.

from nba_py import team

import time

def game_summary_teams(roster_ids, team_names😞

print roster_ids, team_names

for i in range (0, len(roster_ids)):

time.sleep(35)

print roster_ids[i]

print team_names[i]

info = team.TeamGameLogs(roster_ids[i])

df = info.info()

df['GAME_DATE'] = pd.to_datetime(df['GAME_DATE'])

df['DAYS_REST'] = df['GAME_DATE'] - df['GAME_DATE'].shift(-1) ##this gives us our days rest column

df['DAYS_REST']= df['DAYS_REST'].astype('timedelta64[D]')

vals = df['GAME_DATE']

df_passes = pd.DataFrame()

for v in vals:

time.sleep(.5)

##these values are being over-written each time

print 'GAME FOR' + team_names[i] + ' for ' + str(v)

game_info = team.TeamPassTracking(roster_ids[i], date_from =v, date_to = v).passes_made()

time.sleep(5)

game_info['EVENT_DATE'] = v

df_sum = game_info.groupby(['EVENT_DATE']).sum()

df_sum.reset_index(level = 0, inplace = True)

open_info = team.TeamShotTracking(roster_ids[i], date_from =v, date_to = v).closest_defender_shooting()

time.sleep(5)

open_info['OPEN'] = open_info['CLOSE_DEF_DIST_RANGE'].map(lambda x: True if 'Open' in x else False)

df_sum['OPEN_SHOTS'] = open_info.loc[open_info['OPEN']== True, 'FGA'].sum()

df_sum['COVERED_SHOTS'] = open_info.loc[open_info['OPEN']== False, 'FGA'].sum()

##gotta figure out a better divide by 0 fix.

if (open_info.loc[open_info['OPEN']== True, 'FGA'].sum() > 0😞

df_sum['OPEN_EFG']= (open_info.loc[open_info['OPEN']== True, 'FGM'].sum() + (.5 * open_info.loc[open_info['OPEN']== True, 'FG3M'].sum()))/(open_info.loc[open_info['OPEN']== True, 'FGA'].sum())

else:

df_sum['OPEN_EFG'] = 0

if (open_info.loc[open_info['OPEN']== False, 'FGA'].sum() > 0😞

df_sum['COVERED_EFG']= (open_info.loc[open_info['OPEN']== False, 'FGM'].sum() + (.5 * open_info.loc[open_info['OPEN']== False, 'FG3M'].sum()))/(open_info.loc[open_info['OPEN']== False, 'FGA'].sum())

else:

df_sum['COVERED_EFG']=0

df_passes = df_passes.append(df_sum)

info = pd.merge(df, df_passes[['PASS', 'FG2M', 'FG2_PCT','OPEN_SHOTS', 'OPEN_EFG','COVERED_SHOTS', 'COVERED_EFG']], how = 'left',left_on = df['GAME_DATE'], right_on = df_passes['EVENT_DATE'])

info['PASS_AST'] = info['PASS']/info['AST']

info['RESULT'] = info['WL'].map(lambda x: 1 if 'W' in x else 0 )

file_name = team_names[i]

info.to_csv(file_name, index = False)

teams = df_teams['TEAM']

roster_ids = df_teams['TEAM_ID']

Just feed in these two arrays into the function and we should be good to go.

I went ahead and did this for a few teams. The NBA's website cuts you off if you make too many requests too quickly (hence all the sleep statements above).

After fiddling with it for a while, I was finally able to get the data for each team. You might have to run the code above piece by piece, or just use the CSVs here:

Visualization

Let's see if we can visually represent anything about each team's offense:

These visualizations are going to be done in Plotly because I think it's the best vizualiation library out there for quickly and easily making graphs that are both visually appleaing and interactive, but feel free to use something else (although I can't imagine why you would).

This is going to break my heart a bit...but lets compare some of the stats fetched between the Knicks and teams that aren't the Knicks.

Something about not counting another man's money right?

knicks = pd.read_csv('New York.csv')

spurs = pd.read_csv('San Antonio.csv')

warriors = pd.read_csv('Golden State.csv')

thunder = pd.read_csv('Oklahoma City.csv')

celtics = pd.read_csv('Boston.csv')

import plotly.plotly as py

import plotly.graph_objs as go

trace0 = go.Box(

y=knicks['PASS'],

name='Knicks',

boxmean='sd'

)

trace1 = go.Box(

y=spurs['PASS'],

name='Spurs',

boxmean='sd'

)

trace2 = go.Box(

y=warriors['PASS'],

name='Warriors',

boxmean='sd'

)

trace3 = go.Box(

y=thunder['PASS'],

name='Thunder',

boxmean='sd'

)

trace4 = go.Box(

y=celtics['PASS'],

name='Celtics',

boxmean='sd'

)

layout = go.Layout(

title='Passing Box Plot',

)

data = [trace0, trace1, trace2, trace3, trace4]

fig = go.Figure(data=data, layout=layout)

py.iplot(fig)Ignoring the outlier from the 4OT Knicks-Hawks game, this graph is pretty telling. Obviously this isn't the full story, but it looks like the Spurs and Thunder play pretty consistent but different offenses. What's really interesting is that despite the Spurs and Warriors having coaches and systems that emphasize ball movement, they throw FEWER passes than a team like the Knicks.

Let's look at if those passes translate to assists:

import plotly.plotly as py

import plotly.graph_objs as go

trace0 = go.Box(

y=knicks['PASS_AST'],

name='Knicks',

boxmean='sd'

)

trace1 = go.Box(

y=spurs['PASS_AST'],

name='Spurs',

boxmean='sd'

)

trace2 = go.Box(

y=warriors['PASS_AST'],

name='Warriors',

boxmean='sd'

)

trace3 = go.Box(

y=thunder['PASS_AST'],

name='Thunder',

boxmean='sd'

)

trace4 = go.Box(

y=celtics['PASS_AST'],

name='Celtics',

boxmean='sd'

)

data = [trace0, trace1, trace2, trace3, trace4]

layout = go.Layout(

title='Passes per Assist',

)

fig = go.Figure(data=data, layout=layout)

py.iplot(fig)These two graphs in conjunction are pretty telling. On average, it takes the Knicks almost 2 more passes than the Spurs, and 5 more than the Warriors to get an assist.

Going by this graph every, ~10th pass the Warriors make results in an assist. From the previous graph, we see that they make an average of 313 passes a game. This almost lines up with their season average of roughly 31 assists/game.

The standard deviation of the above graph can be interpreted in a few ways. On one hand, it's a loose metric of playstyle consistency; teams that play the same way through the entire game are probably going to have a lower standard deviation than teams who pass the ball for 3 quarters and forget to in the 4th (cough cough, New York, cough cough). On the other hand, teams might have different playstyles depending on the lineups they have on the floor, resulting in a higher standard deviation (Spurs).

The Thunder probably fall into this, most likely due to Russell Westbrook averaging over 10 of the team's total 20 assists per game.

Obviously, there's a lot more to the story. How many passes led to FTs? Is there any correlation between passes per assist and wins? If anything, stats like these tell you more about what kind of offense a team runs, not how effectively they run it.

Now let's see if there's any noticeable difference in wins vs losses:

import plotly.plotly as py

import plotly.graph_objs as go

trace0 = go.Box(

y=knicks.loc[knicks['WL'] == 'W']['PASS_AST'],

name='Knicks Wins',

boxmean='sd'

)

trace1 = go.Box(

y=knicks.loc[knicks['WL'] == 'L']['PASS_AST'],

name='Knicks Loss',

boxmean='sd'

)

trace2 = go.Box(

y=spurs.loc[spurs['WL'] == 'W']['PASS_AST'],

name='Spurs Wins',

boxmean='sd'

)

trace3 = go.Box(

y=spurs.loc[spurs['WL'] == 'L']['PASS_AST'],

name='Spurs Loss',

boxmean='sd'

)

trace4 = go.Box(

y=warriors.loc[warriors['WL'] == 'W']['PASS_AST'],

name='Warriors Wins',

boxmean='sd'

)

trace5 = go.Box(

y=warriors.loc[warriors['WL'] == 'L']['PASS_AST'],

name='Warriors Losses',

boxmean='sd'

)

trace6 = go.Box(

y=thunder.loc[thunder['WL'] == 'W']['PASS_AST'],

name='Thunder Wins',

boxmean='sd'

)

trace7 = go.Box(

y=thunder.loc[thunder['WL'] == 'L']['PASS_AST'],

name='Thunder Losses',

boxmean='sd'

)

trace8 = go.Box(

y=celtics.loc[celtics['WL'] == 'W']['PASS_AST'],

name='Celtics Wins',

boxmean='sd'

)

trace9 = go.Box(

y=celtics.loc[celtics['WL'] == 'L']['PASS_AST'],

name='Celtics Lossses',

boxmean='sd'

)

layout = go.Layout(

title='Passes per Assist in Wins vs Losses',

)

data = [trace0, trace1, trace2, trace3, trace4, trace5, trace6, trace7, trace8, trace9]

fig = go.Figure(data=data, layout=layout)

py.iplot(fig)Apart from the Celtics, every team had to make more at least 1 more pass to get an assist in games they lost compared to games they lost. In one way, it's almost like they have to "work harder" for assists.

From the looks of this graph, the Warriors offense when its firing on all cylinders is a in a league of its own.

Just to reiterate once again, the purpose of the visualizations above is to ask, not answer questions.

But now, let's see if we can get any team specific insights from any of this:

The Clippers have played without Chris Paul and Blake Griffin, two of the best passers at their position in the league.

Do the boxscores show how their offense has had to adjust?

clippers = pd.read_csv('LA Clippers.csv')

clippers = clippers.sort(['GAME_DATE'], ascending = True)

clippers.head()

/home/virajparekh/anaconda2/lib/python2.7/site-packages/ipykernel/__main__.py:2: FutureWarning:

sort(columns=....) is deprecated, use sort_values(by=.....)

| Unnamed: 0 | Team_ID | Game_ID | GAME_DATE | MATCHUP | WL | W | L | W_PCT | MIN | ... | DAYS_REST | PASS | FG2M | FG2_PCT | OPEN_SHOTS | COVERED_SHOTS | OPEN_EFG | COVERED_EFG | PASS_AST | RESULT | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 77 | 53.0 | 1610612746 | 21600017 | 2016-10-27 | LAC @ POR | W | 1 | 0 | 1.00 | 240 | ... | NaN | 301.0 | 21.0 | 4.047 | 41.0 | 50.0 | 0.463415 | 0.440000 | 25.083333 | 1.0 |

| 76 | 52.0 | 1610612746 | 21600035 | 2016-10-30 | LAC vs. UTA | W | 2 | 0 | 1.00 | 240 | ... | 3.0 | 275.0 | 22.0 | 4.757 | 34.0 | 48.0 | 0.558824 | 0.375000 | 16.176471 | 1.0 |

| 75 | 51.0 | 1610612746 | 21600045 | 2016-10-31 | LAC vs. PHX | W | 3 | 0 | 1.00 | 240 | ... | 1.0 | 276.0 | 28.0 | 4.795 | 38.0 | 42.0 | 0.565789 | 0.511905 | 13.142857 | 1.0 |

| 74 | 50.0 | 1610612746 | 21600064 | 2016-11-02 | LAC vs. OKC | L | 3 | 1 | 0.75 | 240 | ... | 2.0 | 302.0 | 24.0 | 2.893 | 33.0 | 54.0 | 0.424242 | 0.435185 | 13.727273 | 0.0 |

| 73 | 49.0 | 1610612746 | 21600074 | 2016-11-04 | LAC @ MEM | W | 4 | 1 | 0.80 | 240 | ... | 2.0 | 300.0 | 17.0 | 2.681 | 41.0 | 44.0 | 0.451220 | 0.397727 | 15.789474 | 1.0 |

5 rows × 38 columns

clippers_rolling = clippers[['GAME_DATE','PTS','TOV','PASS', 'OPEN_SHOTS', 'OPEN_EFG', 'AST', 'PASS_AST']].rolling(5).mean()

clippers_rolling.head(10)

| GAME_DATE | PTS | TOV | PASS | OPEN_SHOTS | OPEN_EFG | AST | PASS_AST | |

|---|---|---|---|---|---|---|---|---|

| 77 | 2016-10-27 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 76 | 2016-10-30 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 75 | 2016-10-31 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 74 | 2016-11-02 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 73 | 2016-11-04 | 100.0 | 12.8 | 290.8 | 37.4 | 0.492698 | 18.2 | 16.783881 |

| 72 | 2016-11-05 | 100.4 | 13.0 | 293.6 | 37.4 | 0.514649 | 20.6 | 14.392215 |

| 71 | 2016-11-07 | 105.6 | 12.4 | 302.4 | 39.2 | 0.544745 | 22.8 | 13.435492 |

| 70 | 2016-11-09 | 104.6 | 10.6 | 303.0 | 38.8 | 0.537143 | 23.4 | 13.131921 |

| 69 | 2016-11-11 | 110.0 | 9.2 | 308.8 | 41.2 | 0.563405 | 22.6 | 14.064244 |

| 68 | 2016-11-12 | 114.0 | 9.8 | 306.6 | 40.8 | 0.591110 | 23.8 | 13.218349 |

If we want to see all of this on the same graph, we need to normalize it. This means we're going to scale each value by subtracting it from the mean, and dividing by standard deviation.

This is a bit of a janky way to do so because it relies on the columns being in the same order.

clippers_norm = clippers[['GAME_DATE', 'PTS','TOV','PASS', 'OPEN_SHOTS', 'OPEN_EFG', 'AST', 'PASS_AST']]

for c in clippers_norm.columns[1:]:

clippers_rolling[c] = (clippers_rolling[c] - clippers_norm[c].mean())/clippers_norm[c].std()

clippers_rolling.head(10)

| GAME_DATE | PTS | TOV | PASS | OPEN_SHOTS | OPEN_EFG | AST | PASS_AST | |

|---|---|---|---|---|---|---|---|---|

| 77 | 2016-10-27 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 76 | 2016-10-30 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 75 | 2016-10-31 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 74 | 2016-11-02 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 73 | 2016-11-04 | -0.705388 | 0.033424 | -0.403622 | -0.504550 | -0.714018 | -0.878286 | 0.739290 |

| 72 | 2016-11-05 | -0.672092 | 0.088895 | -0.284434 | -0.504550 | -0.480817 | -0.376102 | 0.083865 |

| 71 | 2016-11-07 | -0.239256 | -0.077516 | 0.090155 | -0.202852 | -0.161093 | 0.084234 | -0.178321 |

| 70 | 2016-11-09 | -0.322493 | -0.576750 | 0.115695 | -0.269896 | -0.241857 | 0.209780 | -0.261513 |

| 69 | 2016-11-11 | 0.126991 | -0.965042 | 0.362583 | 0.132369 | 0.037146 | 0.042385 | -0.006014 |

| 68 | 2016-11-12 | 0.459943 | -0.798631 | 0.268936 | 0.065325 | 0.331470 | 0.293477 | -0.237828 |

import plotly.plotly as py

import plotly.graph_objs as go

trace = go.Scatter(

x = clippers_rolling['GAME_DATE'],

y = clippers_rolling['PASS'],

name = 'Pass to Assist'

)

trace1 = go.Scatter(

x = clippers_rolling['GAME_DATE'],

y = clippers_rolling['PTS'],

name = 'Points'

)

trace2 = go.Scatter(

x = clippers_rolling['GAME_DATE'],

y = clippers_rolling['AST'],

name = 'Assists'

)

trace3 = go.Scatter(

x = clippers_rolling['GAME_DATE'],

y = clippers_rolling['PASS_AST'],

name = 'PASSES PER ASSIST'

)

data = [trace1,trace2 ,trace3]

layout = go.Layout(

title = 'Clippers Offense',

showlegend=True,

annotations=[

dict(

x='2016-12-20',

y=-1,

xref='x',

yref='y',

text='Blake Griffin Injury',

showarrow=True,

arrowhead=7,

ax=0,

ay=-330

),

dict(

x='2017-01-19',

y=-1,

xref='x',

yref='y',

text='Chris Paul Injury',

showarrow=True,

arrowhead=7,

ax=0,

ay=-275

),

dict(

x='2017-01-24',

y=-1,

xref='x',

yref='y',

text='Blake Griffin Returns',

showarrow=True,

arrowhead=7,

ax=0,

ay=-330

),

dict(

x='2017-02-24',

y=-1,

xref='x',

yref='y',

text='Chris Paul Returns',

showarrow=True,

arrowhead=7,

ax=0,

ay=-330

)

]

)

fig = go.Figure(data=data, layout=layout)

py.iplot(fig)According to this, Blake's abscence definitely had an effect on how the team runs their offense. Passes per assist went up just as total points went down after Blake's injury.

From the looks of it, the Clippers were starting to adjust to Blake being out just as CP3 got hurt, but for the most part, there's too much noise here.

Blake gets injured almost every year, so I wonder how this graph looks for 2015-2016 data.....

This is just the tip of the iceburg. Exploratory analysis like this is about finding interesting questions, not answering them.

Building the Pipe:

Now that we can what we can do with the data, let's get everything piping into a database.

If you see yourself doing some of your own analysis or just want some more experience moving and cleaning data, this section is a high level overview of how to do that:

I stored the output from the previous function in CSVs in the same directory as this notebook so they can be easily imported.

Pandas has a built in to_sql function that works with sqlalchemy:

http://pandas.pydata.org/pandas-docs/stable/generated/pandas.DataFrame.to_sql.html

import glob, os

import time

files = []

os.chdir("YOUR_DIRECTORY_HERE")

for file in glob.glob("*.csv"😞

files.append(file)

from sqlalchemy import *

for f in files:

team_name = str(f)

team_name = team_name.partition('.csv')[0].replace(' ','_').replace('.','').lower()

df = pd.read_csv(f)

eng = create_engine("YOUR_CONNECTION_STRING_HERE")

df.to_sql(con = eng, index = False, name = team_name, schema = 'nba', if_exists = 'append')

time.sleep(10)The python sqlalchemy package supports most databases, so refer to the documentation for each db's connection string:

What would really make analysis easier is if information updated after every game. Let's try using the info we have to update one of the CSVs, and then use that to do it for all the other files:

def update_info(file😞

file_name = str(file)

name = file_name.partition('.csv')[0].replace(' ','_').replace('.','').lower()

old_info = pd.read_csv(file_name)

team_id = old_info['Team_ID'].max()

new_logs = team.TeamGameLogs(team_id).info()

old_info['GAME_DATE'] = pd.to_datetime(old_info['GAME_DATE'])

order = old_info.columns

new_logs['GAME_DATE'] = pd.to_datetime(new_logs['GAME_DATE'])

## If there's no new games for the team, return:

if max(new_logs['GAME_DATE']) == max(old_info['GAME_DATE']):

return

new_logs['DAYS_REST']= new_logs['GAME_DATE'] - new_logs['GAME_DATE'].shift(-1) ##this gives us our days rest column

new_logs['DAYS_REST']= new_logs['DAYS_REST'].astype('timedelta64[D]')

##keeping datatypes consistent

new_logs['Game_ID'] = new_logs['Game_ID'].astype(str).astype(int)

##Append the info from the previously saved CSV and append it to the new game logs

info = pd.concat([old_info, new_logs], ignore_index = True)

##Drop the duplicates

info = info.drop_duplicates(['Game_ID'], keep = 'first')

## Sort by date

info = info.sort(['GAME_DATE'], ascending = [0])

##Reset the axis

info = info.reset_index(drop = True)

##Find the dates where there's no values for any of the stats we fetched. We can make requests for only those dates

updates = info.loc[np.isnan(info['COVERED_EFG'])]

##If the team's boxscore is up to date, return.

if len(updates) == 0:

return

dates = updates['GAME_DATE']

df_passes = pd.DataFrame()

for d in dates:

##All exactly the same as before

game_info = team.TeamPassTracking(team_id, date_from =d, date_to = d).passes_made()

game_info['EVENT_DATE'] = d

df_sum = game_info.groupby(['EVENT_DATE']).sum()

df_sum.reset_index(level = 0, inplace = True)

open_info = team.TeamShotTracking(team_id, date_from =d, date_to = d).closest_defender_shooting()

open_info['OPEN'] = open_info['CLOSE_DEF_DIST_RANGE'].map(lambda x: True if 'Open' in x else False)

df_sum['OPEN_SHOTS'] = open_info.loc[open_info['OPEN']== True, 'FGA'].sum()

df_sum['COVERED_SHOTS'] = open_info.loc[open_info['OPEN']== False, 'FGA'].sum()

if (open_info.loc[open_info['OPEN']== True, 'FGA'].sum() > 0😞

df_sum['OPEN_EFG']= (open_info.loc[open_info['OPEN']== True, 'FGM'].sum() + (.5 * open_info.loc[open_info['OPEN']== True, 'FG3M'].sum()))/(open_info.loc[open_info['OPEN']== True, 'FGA'].sum())

else:

df_sum['OPEN_EFG'] = 0

if (open_info.loc[open_info['OPEN']== False, 'FGA'].sum() > 0😞

df_sum['COVERED_EFG']= (open_info.loc[open_info['OPEN']== False, 'FGM'].sum() + (.5 * open_info.loc[open_info['OPEN']== False, 'FG3M'].sum()))/(open_info.loc[open_info['OPEN']== False, 'FGA'].sum())

else:

df_sum['COVERED_EFG']=0

df_passes = df_passes.append(df_sum)

df_passes = df_passes.reset_index(drop = True)

##Join the new stats with the old information.

info.update(df_passes[['PASS', 'FG2M', 'FG2_PCT', 'OPEN_SHOTS', 'OPEN_EFG', 'COVERED_EFG','COVERED_SHOTS']])

##Calculate these two post join in case there were any stat corrections

info['PASS_AST'] = info['PASS'] / info['AST']

info['RESULT'] = info['WL'].map(lambda x: 1 if 'W' in x else 0 )

##Reorder the columns in the dataframe

info = info[order]

##Save to csv

info.to_csv(file_name, index = False)

##Upload, and replace the information already there. Since we're working with a relatively small volume of data,

##upserts isn't worth the time.

info.to_sql(con = eng, index = False, name = name, schema = 'nba', if_exists = 'replace')

print name

return infoYou can put the script above in a seperate .py file in the same directory as all of the team CSVs and schedule it via crontab to run automatically so your database and CSVs will always contain the latest information (assuming stats.nba.com doesn't change anything on their end).

Here's a great tutorial on how to do so: https://www.youtube.com/watch?v=hDJ3XQzW8nk

Wrapping it all up

That should be everything you need to get started.

If this was interesting to you and you want to test what you learned, here are a few excercises (in relatively increasing difficulty):

1) The current boxscores only have a team_id in each table. Find a way to insert a column for a team name.

2) Throw in some data about conested/unconested rebounding. This might be interesting when looking at different factors that contribute to wins.

3) There's no player specific data in any of this. Try throwing in a column in each team's boxscores with each game's leading scorer.

4) Compare data across seasons! Is it possible to visualize changes to the Thunder offense after KD left? How different is Tom Thibideau's offense from Sam Mitchell's scheme in Minnesota?

5) Do some data science! I'd love to see what sort of interesting models can be drummed up using this infastructure.

Thanks for reading! Send your suggestions, solutions, or anything else to viraj@astronomer.io

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.