Alteryx Designer Desktop Discussions

Dynamic profiling tool

Just arrived in the Alteryx community - Happy to be here!

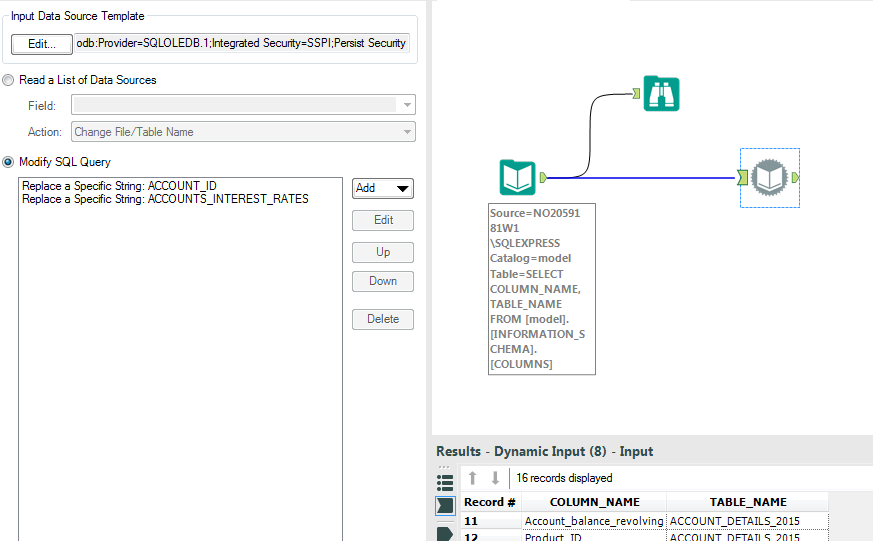

I am attempting to build a data profiling tool for an SQL database that can read a variable number of tables, each with a different number of columns. The workflow should then run a set of operations on each column (count blanks, count distinct, etc.) and output the results for each column to an Excel spreadsheet or similar.
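If every value ends up in one long table of (TableName, ColumnName, Value) rows, the per-column operations become a group-by on TableName and ColumnName (in Alteryx, probably a Summarize tool). A minimal Python sketch of those counts, using my own definitions rather than Alteryx's: a blank is a NULL or an empty/whitespace-only string, and "distinct" counts only non-blank values. The table and column names in the sample are made up.

```python
from collections import defaultdict

def profile(rows):
    """Count rows, blanks and distinct non-blank values per (table, column)."""
    stats = defaultdict(lambda: {"rows": 0, "blanks": 0, "values": set()})
    for table, column, value in rows:
        s = stats[(table, column)]
        s["rows"] += 1
        # Assumption: NULL and empty/whitespace-only strings both count as blank
        if value is None or str(value).strip() == "":
            s["blanks"] += 1
        else:
            s["values"].add(value)
    return {
        key: {"rows": s["rows"], "blanks": s["blanks"], "distinct": len(s["values"])}
        for key, s in stats.items()
    }

# Hypothetical sample rows in (TableName, ColumnName, Value) form
rows = [
    ("[dbo].[Customer]", "[Name]", "Ann"),
    ("[dbo].[Customer]", "[Name]", ""),
    ("[dbo].[Customer]", "[Name]", "Ann"),
    ("[dbo].[Customer]", "[City]", None),
    ("[dbo].[Customer]", "[City]", "Oslo"),
]

for (table, column), result in profile(rows).items():
    print(table, column, result)
```

Each entry in the result is one output row per column, which is the shape you would then write to the Excel file.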

I have done some testing with the Input and Dynamic Input tools and arrived at the workflow shown above. An Input tool retrieves the names of the user-defined fields and tables in the database and feeds them into a Dynamic Input tool, which loops through all field and table names. However, when I try to connect this to anything else, I run into several error messages, and I cannot find a way to dynamically feed the output of the Dynamic Input tool into a Formula tool.

Is there anything I have overlooked in how to do this, or is there a more sensible alternative for building my dynamic profiling tool?

---

Interesting idea, I will play with it at lunch.

I think you need to be careful with the different field types; you might need a separate path for each type.
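One way to set up those paths is to split the column list by the DATA_TYPE that SQL Server's INFORMATION_SCHEMA.COLUMNS view reports for each column. A rough Python sketch; the grouping into "numeric", "datetime", "text" and "other" families is my own assumption, not something Alteryx prescribes, and the sample names are hypothetical.

```python
# Assumed grouping of SQL Server DATA_TYPE values into families
NUMERIC = {"bit", "tinyint", "smallint", "int", "bigint", "decimal", "numeric",
           "float", "real", "money", "smallmoney"}
DATETIME = {"date", "time", "datetime", "datetime2", "smalldatetime", "datetimeoffset"}
TEXT = {"char", "varchar", "nchar", "nvarchar", "text", "ntext"}

def type_family(data_type):
    """Map a DATA_TYPE string to 'numeric', 'datetime', 'text' or 'other'."""
    t = data_type.lower()
    if t in NUMERIC:
        return "numeric"
    if t in DATETIME:
        return "datetime"
    if t in TEXT:
        return "text"
    return "other"  # e.g. uniqueidentifier, varbinary, xml

def route(columns):
    """Split (table, column, data_type) rows into one list per type family."""
    paths = {}
    for table, column, data_type in columns:
        paths.setdefault(type_family(data_type), []).append((table, column))
    return paths

columns = [
    ("[dbo].[Customer]", "[Id]", "int"),
    ("[dbo].[Customer]", "[Name]", "nvarchar"),
    ("[dbo].[Customer]", "[Created]", "datetime2"),
    ("[dbo].[Customer]", "[RowGuid]", "uniqueidentifier"),
]
print(route(columns))
```

Each family could then get its own set of checks (for example min/max for numeric and date columns, length checks for text).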

---

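I've attached what I put together over lunch.

You need to cast everything to a fixed type; then you are OK, because every query the Dynamic Input tool runs returns the same field names and types, so the results can be stacked.

I cast both TableName and ColumnName in SQL to NVARCHAR(256).

I cast the value to a 2048-character string. One optimisation would be to read the maximum length needed from the input columns and use that.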
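A sketch of that optimisation in Python, working from the CHARACTER_MAXIMUM_LENGTH values in INFORMATION_SCHEMA.COLUMNS (in SQL Server this is NULL for non-character types and -1 for the (n)varchar(max) types). NVARCHAR(n) only goes up to n = 4000, so anything longer falls back to NVARCHAR(MAX). The 64-character width for converted numbers and dates is an assumption, not a measured value.

```python
NVARCHAR_LIMIT = 4000  # largest n SQL Server allows in NVARCHAR(n)
NON_TEXT_WIDTH = 64    # assumption: wide enough for numbers/dates converted to text

def value_type(columns):
    """Pick the narrowest NVARCHAR type that can hold every column's values as text.

    Each column is a dict with CHARACTER_MAXIMUM_LENGTH as returned by
    INFORMATION_SCHEMA.COLUMNS: None for non-character types, -1 for max types.
    """
    width = 1
    for col in columns:
        n = col.get("CHARACTER_MAXIMUM_LENGTH")
        if n is None:
            width = max(width, NON_TEXT_WIDTH)
        elif n == -1 or n > NVARCHAR_LIMIT:
            return "NVARCHAR(MAX)"
        else:
            width = max(width, n)
    return f"NVARCHAR({width})"

print(value_type([{"CHARACTER_MAXIMUM_LENGTH": 50},
                  {"CHARACTER_MAXIMUM_LENGTH": None}]))
```

The returned type string would replace the fixed NVARCHAR(2048) in the template query.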

The template query I used was:

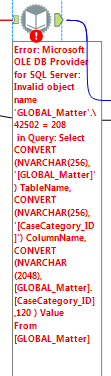

```sql
Select CONVERT(NVARCHAR(256), '[GLOBAL_Matter]') TableName,
       CONVERT(NVARCHAR(256), '[CaseCategory_ID]') ColumnName,
       CONVERT(NVARCHAR(2048), [GLOBAL_Matter].[CaseCategory_ID], 120) Value
From [GLOBAL_Matter]
```
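As I understand it, the Dynamic Input tool (Modify SQL Query, Replace a Specific String) swaps the sample table and column names in this template for the values in each incoming row, then runs the resulting query. A rough Python sketch of that substitution, assuming the incoming rows hold bracketed names; `build_query` is a hypothetical helper, and plain string replacement assumes the names contain no single quotes.

```python
# The template from this post, with [GLOBAL_Matter] / [CaseCategory_ID] as the strings to replace
TEMPLATE = ("Select CONVERT(NVARCHAR(256), '[GLOBAL_Matter]') TableName, "
            "CONVERT(NVARCHAR(256), '[CaseCategory_ID]') ColumnName, "
            "CONVERT(NVARCHAR(2048), [GLOBAL_Matter].[CaseCategory_ID],120 ) Value "
            "From [GLOBAL_Matter]")

def build_query(template, table, column,
                table_token="[GLOBAL_Matter]", column_token="[CaseCategory_ID]"):
    """Return the per-column query: every occurrence of each token is replaced."""
    return template.replace(table_token, table).replace(column_token, column)

print(build_query(TEMPLATE, "[dbo].[Customer]", "[Name]"))
```

Because every generated query casts to the same three NVARCHAR fields, the results for all columns come back with one schema and can be unioned.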

I adjusted the query that lists the tables and columns to:

```sql
select '[' + TABLE_SCHEMA + '].[' + TABLE_NAME + ']' TableName,
       '[' + COLUMN_NAME + ']' ColumnName,
       DATA_TYPE,
       CHARACTER_MAXIMUM_LENGTH,
       NUMERIC_PRECISION
from INFORMATION_SCHEMA.COLUMNS
```

Surrounding the names with [] is safer here, I think: table and column names containing spaces or reserved words still work in the generated queries.
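Plain `'[' + name + ']'` still breaks if a name itself contains `]`. T-SQL's QUOTENAME handles that by doubling the closing bracket, so `QUOTENAME(TABLE_SCHEMA) + '.' + QUOTENAME(TABLE_NAME)` could be used in the column query instead. The Python below only mirrors that quoting rule to show what ends up in the query string; the helper names are hypothetical.

```python
def bracket(name):
    """Quote an identifier with [] the way T-SQL QUOTENAME does: ']' becomes ']]'."""
    return "[" + name.replace("]", "]]") + "]"

def qualified(schema, table):
    """Build a [schema].[table] reference from unquoted names."""
    return bracket(schema) + "." + bracket(table)

print(qualified("dbo", "Order Details"))  # [dbo].[Order Details]
print(bracket("odd]name"))                # [odd]]name]
```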

Hope this helps!

---

Thanks for your advice! This seems like a good step closer to a solution. However, I keep getting an error message related to [Global_Matter]. Any thoughts?

---

That was just the sample table I used.

I have amended the template to:

```sql
select CONVERT(NVARCHAR(256), 'INFORMATION_SCHEMA.COLUMNS') TableName,
       CONVERT(NVARCHAR(256), 'COLUMN_NAME') ColumnName,
       CONVERT(NVARCHAR(256), COLUMN_NAME, 120) Value
from INFORMATION_SCHEMA.COLUMNS
```

You will need to change the connection strings in both the Input and Dynamic Input tools, as they point to the local SQL Server on my laptop.

Otherwise, it should work out of the box now, I hope.
