Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.

power regression to linear?

So, to be up front, I already have *a* solution to this problem, and this is more of a math question than an Alteryx question.

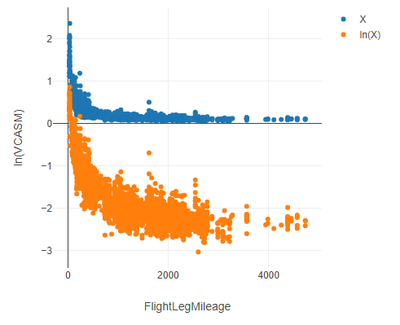

I have a data set (shown in blue) that very clearly follows a power curve, and the goal is to find a mathematical curve that best fits it.

My current solution is to use a Python curve_fit function. It fits the data to the formula y = a*x^b. This solution works well, but since it steps through many iterations of 'a' and 'b' to find the best fit, it's kind of slow.

After some googling, I have been led to believe that I should be able to mathematically transform the raw data into a linear trend and then just do a linear regression on it, but I can't figure out how to do that.

Are there any math whizzes out there who know if it's possible to transform this raw data from a power curve into a linear one?

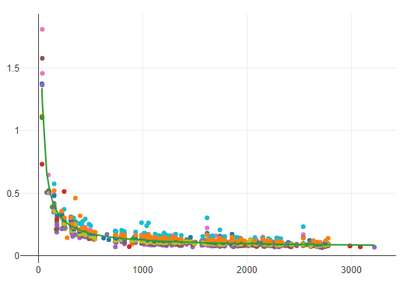

Got it! (Kind of.) My solution needs to be polished so I can build it into a macro, but I found the solution. It isn't as elegant as having the actual power function (y = a*x^b), but at least with this method I don't need a stepwise Python script to find the power function by trial and error.

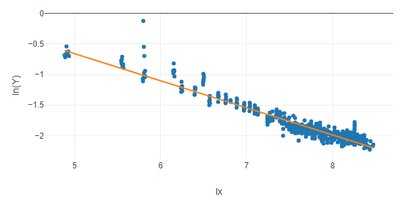

First I needed to take the natural log of both X and Y (Log(X) and Log(Y)). That transforms the data into a linear shape that I can calculate a line of best fit on.
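For anyone following along, here is a sketch of why the log trick works, assuming the data really does follow y = a*x^b and that every x and y is positive (the capital letters X, Y, and A are just names introduced here for the transformed quantities):

```latex
\begin{align*}
y &= a\,x^{b} \\
\ln y &= \ln a + b\,\ln x \\
Y &= A + b\,X, \qquad \text{where } Y = \ln y,\ X = \ln x,\ A = \ln a
\end{align*}
```

So the slope of the line fitted to the logged data is b, and the intercept is ln(a), which means a = e^A. In other words, the power function itself can be read back off the linear regression.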

Then I plot all the values for that line of best fit.

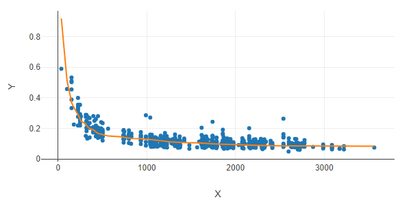

Then I can transform that line back into normal numbers (e^LinReg) and plot it alongside the original X and Y values.
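The steps above can be sketched in plain Python, in case it helps anyone turn this into a macro. This is only a minimal sketch, not the workflow from the screenshots: the function name `fit_power_law` and the sample data are made up for illustration, and it assumes every x and y is strictly positive so the logs exist.

```python
import math

def fit_power_law(xs, ys):
    """Estimate a and b in y = a * x**b via least squares on (ln x, ln y).

    Hypothetical helper for illustration; assumes all x and y are > 0.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")

    # Step 1: take the natural log of both X and Y
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]

    # Step 2: ordinary least-squares line through the logged points
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    sxx = sum((u - mean_x) ** 2 for u in log_x)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((u - mean_x) * (v - mean_y) for u, v in zip(log_x, log_y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x

    # Step 3: the slope is b, and e**intercept is a
    return math.exp(intercept), slope

# Made-up sample data that follows y = 3 * x**-0.5 exactly
xs = [1.0, 2.0, 4.0, 8.0, 16.0]
ys = [3.0 * x ** -0.5 for x in xs]

a, b = fit_power_law(xs, ys)

# Transform the fitted line back to the original scale (same as e^LinReg)
fitted = [a * x ** b for x in xs]
print(a, b)
```

One thing to keep in mind: fitting in log space minimizes the error of ln(y) rather than of y itself, so on noisy data the a and b from this method will usually differ a little from what an iterative curve fit on the raw values returns.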

I feel like a wizard.

Very cool @Matthew!

Reminds me a little of the Kernel Trick from Support Vector Machines, which I still think is awesome. Also a bit reminiscent of the Time Series transformations.

Pretty nifty stuff - thanks for sharing what you've done here!

@ianwi thanks for sharing those links!

I don't think a log transformation qualifies as a kernel trick, because I'm not introducing an additional dimension, but I see what you mean about it being similar because I'm manufacturing linearity!

Do you happen to have any resources for learning more about support vector machines or the kernel method?

Hi @Matthew,

Glad you thought the Kernel Trick was interesting too!

Yes, we do have more resources on SVM in the Data Science Learning Path. Specifically it's in the Creating a Predictive Model Interactive Lesson. Loads of lessons in Academy on a wide variety of topics if you ever get curious...
