Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.- Community

- :

- Community

- :

- Participate

- :

- Discussions

- :

- Designer Desktop

- :

- Grouping duplicate users over 30 attributes

Grouping duplicate users over 30 attributes

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Hi Altreryx Hive Mind

I have a challenge that I have yet to find a robust solution

I have a ‘Person’ table some million-plus rows long and some 30 attributes/columns wide and many duplicated uses in it.

I am trying to group duplicate users together ready to be migrated to a new system, however, the data quality is not ideal (whenever is it!) and, for a given person, data attributes may or may not be populated or even different for different rows. (a classic data governance/quality issue!)

Take the (Dummy) sample data below, it is easy for me as a human to see that the first 4 rows are the same person despite the title & phone being different and the email missing on row one as there is enough information to extrapolate a relationship (e.g. the phone is the same for rows 1 &2 and the email is the same for rows 2,3 & 4)

I have tried several approaches but all without joy. The solutions tried half worked but none fully worked and none are scalable for the 30 some attributes that need checking.

# generating hash keys for column combinations and then aggregating (count group-by) on the hashkeys and comparing the counts

# Using the Fuzzy Match & Make Group tools (both listing columns individually and using long concatenated column) but couldn’t get it to work fully even on this small dummy dataset.

(1,2,3 & 4 Group) (5,6 & 7 Group) (8 Group) (9 Group)

| OBJECT_ID | Fname | Sname | Title | DOB | phone | |

| 1 | john | brown | Mr | 02/02/1972 | 11111 | |

| 2 | john | brown | Mr | 02/02/1972 | ghjkghjk_AT_hotmail.com | 11111 |

| 3 | john | brown | Capt | 02/02/1972 | ghjkghjk_AT_hotmail.com | 99999 |

| 4 | john | brown | 02/02/1972 | ghjkghjk_AT_hotmail.com | ||

| 5 | john | brown | Mr | 20/01/2020 | qwertyui_AT_gmail.com | 55555 |

| 6 | john | brown | Mr | qwertyui_AT_gmail.com | ||

| 7 | john | brown | 20/01/2020 | 55555 | ||

| 8 | john | brown | Mr | |||

| 9 | Julie | brown | Mrs | 25/09/1950 | abcdefghijk_AT_truemail.com | 88888 |

So, how would you tackle this? Any solution or approach you could offer which would scale for the number of rows and columns involved would be much appreciated.

Thanks

P

- Labels:

-

Data Investigation

-

Fuzzy Match

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

@PaulSmith thanks for sharing. 100% agree on data quality and governance comment, this is a one of the best use cases for learners like me. From my experience, I may think of few points below: (apologies if this already thought about)

As a starting point:

1. Can I divide the data? i.e. exclude / prioritize most relevant attributes/columns or create a - Super-set of this dataset?

2. Can I leverage the grouping logic with most consistent columns i.e. First and Second name. If that approach is acceptable (which means we will loose other inconsistent data within other attributes like different email and telephone information) then we can look into transforming the data with high priority + consistent data.

I really liked this question and I am totally open to the opinion of other experts from the community. It is a fabulous use case of data governance and quality as you mentioned earlier. We had a team of big data experts breaking-down the source data into several databases in oracle and setup dependencies that will lead to required information but above all it starts with a logic of building super-set and then identify/create links.

Looking forward to learn from everybody here.

Best regards,

Pratik

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Hi Pratik

Thanks for looking at the issue. As always with Alteryx, feel free to break it down, concatenate or manipulate the data in any way that works for you achieves the objective 😀

I found that developing a solution for the 6 attributes listed is relatively straightforward (you could almost hard code the logic in a few Multi-Row Formula tools) the real challenge here is finding a solution that will scale and work over some 30 attributes. If you start checking for specific column permutations it starts getting out-of-hand very quickly.

I too am looking forward to seeing the creativity and ability of this community!

Paul

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

@PaulSmith happy to give it a try based on the approach best to my knowledge.

I would like to see slice of your data and understand if you have something in mind to create 'master key' based on which we can built super-set and subset logic.

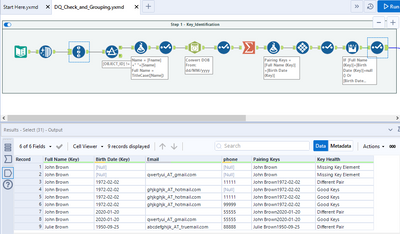

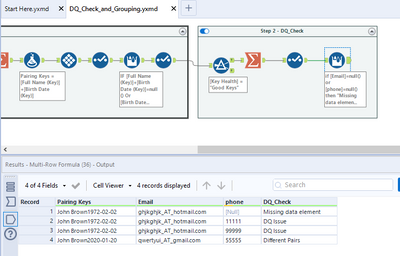

Below is just a sample of starting point 1) Key identification and 2) DQ checks. I am applying simple logic to segregate consistent vs inconsistent data and then take next steps as suitable in the process.

So here's my suggestion; (workflow attached for your test run)

Step 1 - Key Identification and Health

Step 2 - DQ Check

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Hi Pratik

An Intriguing start – I am interested to understand how you envisioned the DQ check to translate into a single user grouping function?

Sadly, I can't share any real data with you as it is personal information, however, the sample data captures the essence of the issue with the only difference being the scale. I have included a list of (slightly amended) columns being used to show the challenge.

‘Object_id’ is the Primary Key(PK) in the main source table and acting as the ‘key identifier’. There is a unique person identifier but, as the users are duplicated, a single person who is in the table 4 times they will also have 4 unique person identifiers.

## Source columns

1 A_OBJECT_ID (PK)

2 A_ID_NUMBER

3 A_FIRST_NAME

4 B_FIRST_NAME

5 A_PREFERRED_NAME

6 B_KNOWNAS

7 A_MIDDLE_NAME

8 B_MIDDLE_NAME

9 A_SURNAME

10 B_SURNAME

11 B_KNOWNAS_SURNAME

12 A_BIRTH_CERTIFICATE_SURNAME

13 A_TITLE

14 B_TITLE

15 A_DATE_OF_BIRTH

16 B_DATE_OF_BIRTH

17 A_GENDER

18 B_GENDER

19 B_GENDER_O

20 B_UNIQUE_ID

21 B_USERNAME

22 A_EMAIL

23 B_EMAIL

24 A_PHONE

25 B_PHONE

26 A_PHONE_M

27 B_PHONE_M

28 B_START_DATE

29 PT_PERSON_TYPE

30 PT_DESCRIPTION

31 A_POSTCODE_CANDIDATE

32 CERT_COMPETENCY

33 A_IDENTIFYING_CODE_0

34 A_ID_CARD

35 B_ID_CARD

36 A_PERSONALID

37 A_IDENTIFYING_CODE_1

38 A_IDENTIFYING_CODE_2

39 A_USER_NAME

40 A_IDENTIFYING_CODE_3

41 A_IDENTIFYING_CODE_4

## Derived columns

42 PHONE_S_Coalesce

43 PHONE_M_S_Coalesce

44 PHONE_Coalesce

45 FIRST_NAME_Coalesce

46 MIDDLE_NAME_Coalesce

47 SURNAME_Coalesce

48 TITLE_Coalesce

49 POSTCODE

50 POSTCODE_VALIDATED

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

@PaulSmith I understand data sharing is based on PII compliant. Not an issue however, I would have really liked to deep-dive and validate my approach. For ease of understanding,

Grouping logic remains same across all steps i.e. creating pairs across the table (This effort is required to find unique > find most consistent and accurate row)

Steps will be;

1. Create pair for key elements i.e. most consistent data - Names (First, Middle & Second) & DOB - (Duplicate data elements can be removed here itself before moving to DQ checks as birth date is master identifier for any customer records)

2. Then grouping by inconsistent data with paired keys from step 1 - this will represent differences as DQ check

Logic of Key Health and DQ check is exactly same i.e. validating data between current vs previous row. Any inconsistency will flag - DQ check or Bad Keys in step 1 and 2

Validation of incremental data (i.e. columns) will always be linked to master key i.e. Name and birth date and DQ checks for incremental data will always require new subset of keys (assuming new column will further drill down on customer information that will expand the scope of uniqueness in available records)

Best regards,

Pratik

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Just adding to my earlier response. Logic reference is shared below:

1. Master Key = Name + DOB (Validation for Master Key should flag - missing key elements - That should be scoped out of further grouping/investigation)

2. Master/Paired Key, Email, Phone, AA, BB, CC....other attributes = Subset pair of key > i.e. Master Key + Email + Phone + AA + BB + etc. (this validation should flag inconsistencies within the subset key paired/grouped with Master Key)

Hope this helps.

-

Academy

6 -

ADAPT

2 -

Adobe

204 -

Advent of Code

3 -

Alias Manager

78 -

Alteryx Copilot

25 -

Alteryx Designer

7 -

Alteryx Editions

94 -

Alteryx Practice

20 -

Amazon S3

149 -

AMP Engine

252 -

Announcement

1 -

API

1,208 -

App Builder

116 -

Apps

1,360 -

Assets | Wealth Management

1 -

Basic Creator

14 -

Batch Macro

1,558 -

Behavior Analysis

246 -

Best Practices

2,693 -

Bug

719 -

Bugs & Issues

1 -

Calgary

67 -

CASS

53 -

Chained App

268 -

Common Use Cases

3,823 -

Community

26 -

Computer Vision

85 -

Connectors

1,426 -

Conversation Starter

3 -

COVID-19

1 -

Custom Formula Function

1 -

Custom Tools

1,936 -

Data

1 -

Data Challenge

10 -

Data Investigation

3,486 -

Data Science

3 -

Database Connection

2,220 -

Datasets

5,221 -

Date Time

3,227 -

Demographic Analysis

186 -

Designer Cloud

740 -

Developer

4,368 -

Developer Tools

3,528 -

Documentation

526 -

Download

1,037 -

Dynamic Processing

2,937 -

Email

927 -

Engine

145 -

Enterprise (Edition)

1 -

Error Message

2,256 -

Events

198 -

Expression

1,868 -

Financial Services

1 -

Full Creator

2 -

Fun

2 -

Fuzzy Match

711 -

Gallery

666 -

GenAI Tools

3 -

General

2 -

Google Analytics

155 -

Help

4,705 -

In Database

966 -

Input

4,291 -

Installation

360 -

Interface Tools

1,900 -

Iterative Macro

1,094 -

Join

1,957 -

Licensing

252 -

Location Optimizer

60 -

Machine Learning

259 -

Macros

2,862 -

Marketo

12 -

Marketplace

23 -

MongoDB

82 -

Off-Topic

5 -

Optimization

750 -

Output

5,252 -

Parse

2,327 -

Power BI

228 -

Predictive Analysis

936 -

Preparation

5,167 -

Prescriptive Analytics

205 -

Professional (Edition)

4 -

Publish

257 -

Python

855 -

Qlik

39 -

Question

1 -

Questions

2 -

R Tool

476 -

Regex

2,339 -

Reporting

2,431 -

Resource

1 -

Run Command

575 -

Salesforce

277 -

Scheduler

411 -

Search Feedback

3 -

Server

629 -

Settings

933 -

Setup & Configuration

3 -

Sharepoint

626 -

Spatial Analysis

599 -

Starter (Edition)

1 -

Tableau

512 -

Tax & Audit

1 -

Text Mining

468 -

Thursday Thought

4 -

Time Series

431 -

Tips and Tricks

4,187 -

Topic of Interest

1,126 -

Transformation

3,726 -

Twitter

23 -

Udacity

84 -

Updates

1 -

Viewer

3 -

Workflow

9,974

- « Previous

- Next »