Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.

Faster pgAdmin Bulk Loader Update?

Hi Alteryx Community,

Scenario: Every day we receive a customer extract file from the client as an .xlsx file with 80K records and 39 fields, including a flag (P, F, or C). This data is written to a Postgres table with a primary key field.

Objective: Using each newly received file, insert new records into the Postgres table and update the flag on records that already exist from previous files.

Example:

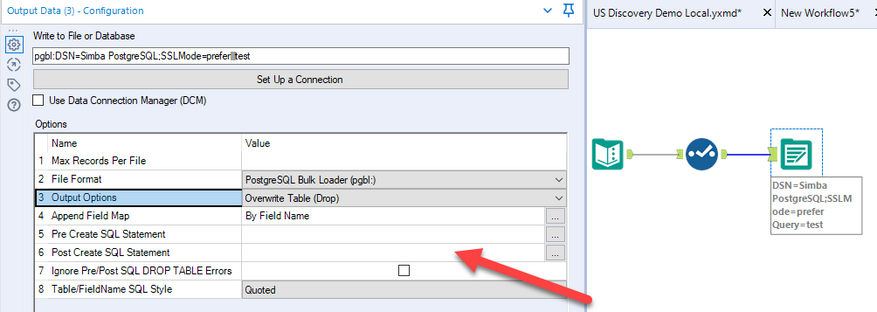

Day 1: 80K records are written to the Postgres table using the PostgreSQL Bulk Loader file format in the Output Data tool (this runs in 37 seconds).

Day 2: A new extract file arrives. It must update the flag for records loaded on Day 1 and insert any new records into the table. For this we used the ODBC Database file format, because the bulk loader file format has no insert/update option.

Issue: Writing the Day 2 file with the insert/update output option takes around half an hour, because it goes through ODBC. Performance degrades further as the table grows.

Question: Is there a way to get the fast loading performance of the Postgres bulk loader together with the insert/update behavior of ODBC, so the extract file can be processed faster every day? The objective is for the Postgres table to always hold the latest flag status.

Would it be faster if I upgraded to the latest Alteryx version?

Alteryx version used:

- Alteryx Admin Designer, version 2021.1.3.22649

pgAdmin 4 used:

- Version: 4.30
- Python version: 3.8.3 (tags/v3.8.3:6f8c832, May 13 2020, 22:37:02) [MSC v.1924 64 bit (AMD64)]
- Flask version: 1.0.2
- Application mode: Desktop

What about an approach where you use the bulk loader to write the data into a staging table, and then run an INSERT INTO command in the Post SQL to update the other table?

Hi Brandon,

The INSERT INTO command in the 'Post Create SQL Statement' inserts the Day 2 data from the staging table into the main table after the workflow runs.

For example, on Day 1 there are 6 records with flag 'P' for order number XXXXX, which are written to the main table.

Day 2 may contain the same 6 records for order number XXXXX, but with a different flag, 'F'.

We are looking for a solution that updates the flag field in the main table after insertion. With the INSERT INTO command in the Post SQL, the main table ends up with 12 records in total: 6 with flag 'P' and 6 with flag 'F'.

The main table should have only 6 records, carrying the latest flag, i.e. order number XXXXX with 'F'.
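A minimal sketch reproducing the duplicate rows described above, using Python's built-in sqlite3 module as a stand-in for Postgres. It assumes, hypothetically, that each record is identified by `order_no` plus a `line_no` column and that the main table has no unique constraint on that pair (only a surrogate `id`). With such a constraint in place, the plain insert would fail with a duplicate-key error instead of adding rows.

```python
import sqlite3

# In-memory SQLite database standing in for Postgres (illustration only).
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Hypothetical schema: rows are keyed only by a surrogate id, so nothing stops
# the same (order_no, line_no) pair from being inserted twice.
cur.execute("CREATE TABLE main_table (id INTEGER PRIMARY KEY, order_no TEXT, line_no INTEGER, flag TEXT)")
cur.execute("CREATE TABLE staging (order_no TEXT, line_no INTEGER, flag TEXT)")

# Day 1: six lines for order XXXXX with flag 'P' land in the main table.
cur.executemany(
    "INSERT INTO main_table (order_no, line_no, flag) VALUES (?, ?, ?)",
    [("XXXXX", n, "P") for n in range(1, 7)],
)

# Day 2: the bulk loader fills the staging table with the same six lines, now flagged 'F'.
cur.executemany("INSERT INTO staging VALUES (?, ?, ?)", [("XXXXX", n, "F") for n in range(1, 7)])

# A plain INSERT INTO ... SELECT only appends; it never updates existing rows.
cur.execute("INSERT INTO main_table (order_no, line_no, flag) SELECT order_no, line_no, flag FROM staging")

rows = cur.execute("SELECT flag, COUNT(*) FROM main_table GROUP BY flag ORDER BY flag").fetchall()
print(rows)  # [('F', 6), ('P', 6)] -> 12 rows in total
```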

Only the ODBC data source file format has the update/insert-if-new option, but processing 8000 records with it takes a very long time. I was wondering whether there is a similar option with the bulk loader, since we receive around 8000 records every day.

Thanks,

Alex

@Alex_Intelling that's because an upsert in Postgres needs the ON CONFLICT clause in the query. You can see a tutorial here: https://www.postgresqltutorial.com/postgresql-tutorial/postgresql-upsert/
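A minimal sketch of combining the staging-table idea with the ON CONFLICT clause, using Python's built-in sqlite3 module as a stand-in for Postgres (SQLite 3.24+ accepts the same `INSERT ... ON CONFLICT ... DO UPDATE` form, and both expose the incoming row as `excluded`). The table and column names (`main_table`, `staging`, `order_no`, `line_no`) are hypothetical, and the conflict target must be backed by a primary key or unique constraint. Because the statement reads from the staging table rather than from individual values, it needs no per-row parameters; whether it can sit unchanged in the Post Create SQL Statement after the bulk load is an assumption, not something tested here.

```python
import sqlite3

# SQLite stands in for Postgres; table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# The ON CONFLICT target must be covered by a PRIMARY KEY or UNIQUE constraint.
cur.execute("""CREATE TABLE main_table (
    order_no TEXT, line_no INTEGER, flag TEXT,
    PRIMARY KEY (order_no, line_no))""")
cur.execute("CREATE TABLE staging (order_no TEXT, line_no INTEGER, flag TEXT)")

# Day 1 load: six lines for order XXXXX with flag 'P'.
cur.executemany("INSERT INTO main_table VALUES (?, ?, ?)", [("XXXXX", n, "P") for n in range(1, 7)])

# Day 2 staging load: the same six lines now flagged 'F', plus one new order.
day2 = [("XXXXX", n, "F") for n in range(1, 7)] + [("YYYYY", 1, "C")]
cur.executemany("INSERT INTO staging VALUES (?, ?, ?)", day2)

# Upsert: insert keys that are new, overwrite the flag on keys that already exist.
# "WHERE true" is required by SQLite's parser for INSERT ... SELECT upserts;
# Postgres accepts it as well.
UPSERT_SQL = """
INSERT INTO main_table (order_no, line_no, flag)
SELECT order_no, line_no, flag FROM staging WHERE true
ON CONFLICT (order_no, line_no) DO UPDATE SET flag = excluded.flag
"""
cur.execute(UPSERT_SQL)
cur.execute("DELETE FROM staging")  # empty the staging table for the next day's load
conn.commit()

rows = cur.execute("SELECT flag, COUNT(*) FROM main_table GROUP BY flag ORDER BY flag").fetchall()
print(rows)  # [('C', 1), ('F', 6)]
```

The main table ends with 7 rows: the six existing lines now carry 'F', and the new order was inserted. In Postgres, `TRUNCATE staging;` would be the usual way to empty the staging table; SQLite has no TRUNCATE, hence the DELETE.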

Hi Brandon,

I looked at the link you provided. I was able to run an insert statement in Alteryx when I added hardcoded values for @primaryKey and @wf_boundary_state in my query. However, I read in other forum posts that the Post Create SQL Statement in Alteryx cannot be parameterized to pull the values for @primaryKey and @wf_boundary_state from the data stream (i.e. the new file, which has the updated flags for each order number).

Instead, I figured we could use a dynamic query to pull the values from the data stream by replacing a specific string. I know this works for SELECT statements, but when I try to substitute values from the data stream into an INSERT statement, the tool treats it as a SELECT and errors out.

My initial approach was to compare the first and second files on order number and, where they differed, write only the latest record to a .yxdb file. But it took a long time when checking against several days' worth of files.
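The keep-the-latest-record logic described above can be done in one pass over the rows with a dictionary keyed on the record key, where a later file simply overwrites an earlier flag. A minimal Python sketch, assuming a hypothetical `(order_no, line_no, flag)` record shape and files supplied oldest first; this illustrates the logic only and is not an Alteryx workflow. Deduplicating like this before an upsert also matters in Postgres: if the staging data contains the same key twice, `INSERT ... ON CONFLICT DO UPDATE` fails with "ON CONFLICT DO UPDATE command cannot affect row a second time".

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical record shape: (order_no, line_no, flag).
Record = Tuple[str, int, str]


def latest_flags(daily_files: Iterable[List[Record]]) -> Dict[Tuple[str, int], str]:
    """Collapse several days of extracts to one flag per key, keeping the newest.

    Files must be passed oldest first, so a later file's flag overwrites an
    earlier one for the same (order_no, line_no) key.
    """
    latest: Dict[Tuple[str, int], str] = {}
    for records in daily_files:
        for order_no, line_no, flag in records:
            latest[(order_no, line_no)] = flag  # one dict write per record
    return latest


day1 = [("XXXXX", n, "P") for n in range(1, 7)]
day2 = [("XXXXX", n, "F") for n in range(1, 7)] + [("YYYYY", 1, "C")]
result = latest_flags([day1, day2])
print(len(result), result[("XXXXX", 1)], result[("YYYYY", 1)])  # 7 F C
```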

Regards,

Alex

If the Post SQL needs to be dynamic, you can wrap the Output Data tool in a macro and update the Post SQL value with the Action tool, as described here: https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Post-SQL-Create-amp-Changing-variable/...

You are correct that the Input Data tool expects a SELECT statement, because the purpose of that query is to feed data into the workflow.
