If you have caught my previous blog post on bulk uploading data into Snowflake with speed, then you know I left you a little promise to share with you how to bulk upload spatial data to Snowflake. Using primary keys, you can also use this process to bulk update too! Happy days.

Before we jump into how to do this, we need to understand a couple of things about Snowflake and Spatial data.

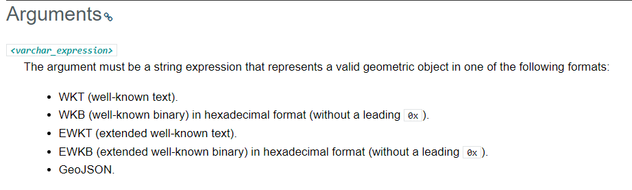

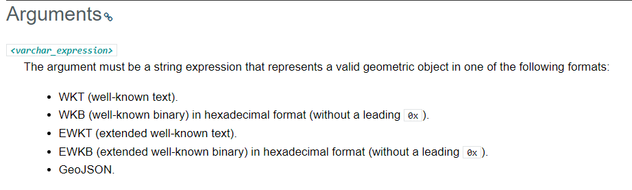

Firstly, the good news: Snowflake can handle spatial geography. However, it must be presented in a particular way. Their documentation is exceptionally helpful around this. Here is a snippet, link below if needed.

Source: Snowflake Geography Documentation

The two most common ways would be WKT (Well Known Text) or GeoJSON; all the others are variations of the same.

I have created a macro for WKT that I will share on Gallery (Sam, you just keep on giving – you are welcome!) However, Alteryx is a wonderful tool because it handles spatial data in the form of GeoJSON within the tool itself. All you need to do is change the data type from SpatialObj to VW_String within the select tool, and you have exactly what you need—no conversions needed. Fantastic and simple! Believe it or not, easily forgettable, so bookmark this page now! 😀😀

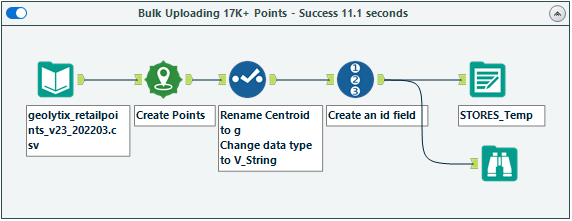

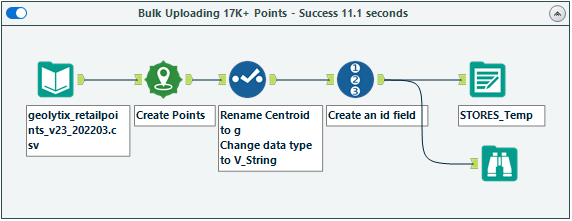

Now, all we need is a dataset. I have again used Geolytix Retail Points to support me on my quest. We are simply going to:

- Input the data

- Create Points

- In the Select tool:

- Rename Centroid to g

- Change its data type to VW_String (reducing the string length to something reasonable – Snowflake doesn’t like MAX length strings)

- For this example, I have turned all other fields off, but you can leave them on. It just means adding more fields in your SQL code. (I’ll show you where to do this!)

- Create an ID with a Record ID tool, then add a Browse tool

The data should look like this:

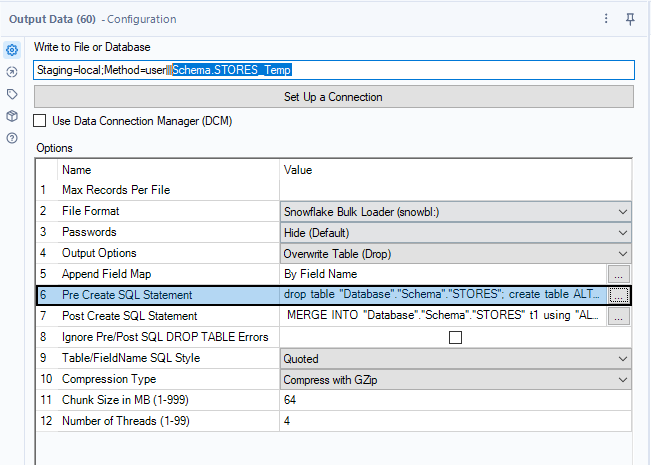

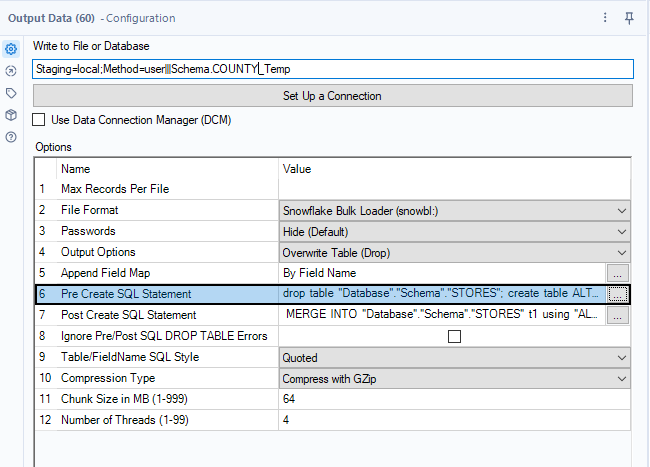

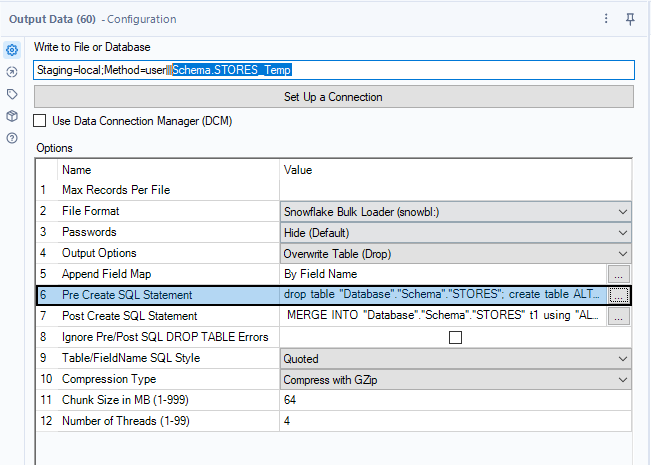

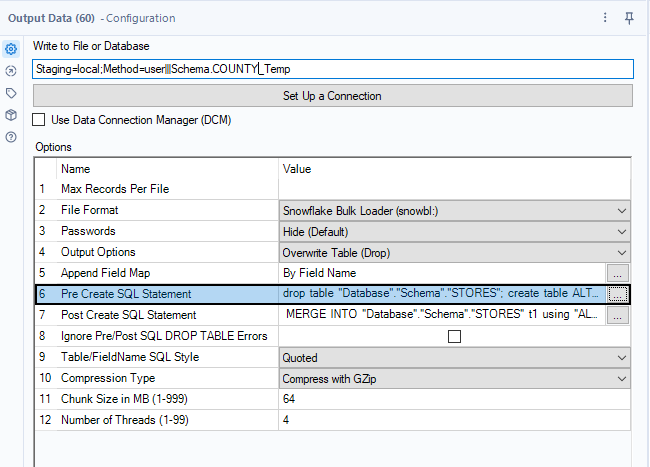

Now we need to focus on the Output tool and push the data into Snowflake. Whereas my previous blog was all about Post SQL statements within the Output tool.

In order to achieve uploading spatial data into Snowflake, we utilise both Pre and Post SQL statements.

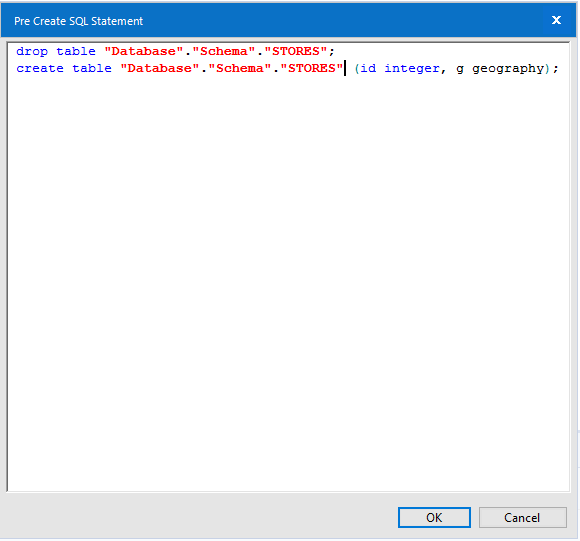

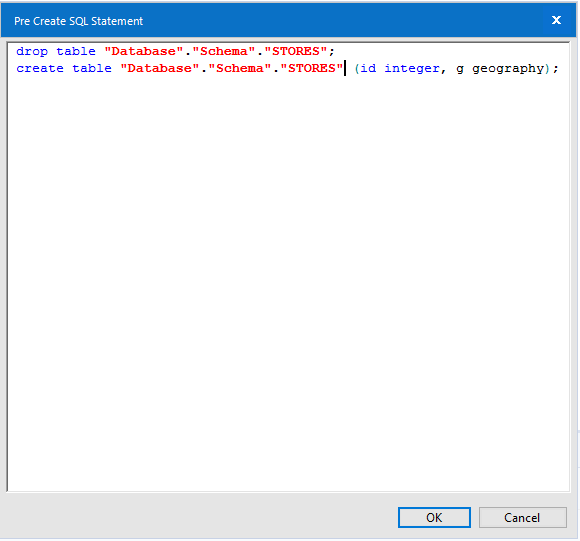

First Stop PreSQL

drop table "Database"."Schema"."STORES";

create table "Database"."Schema"."STORES" (id integer, g geography);

As you can see, the PreSQL statements are very simple. In fact, the first time you run the workflow, you only need the create table statement.

Let’s see if you are reading or just copying code :D. If you run it with the drop table line before the table exists, you will get an error.

Here you are really creating the table using Snowflake syntax to ensure that the table includes a geography column. You would have to include all the columns you want to write to the database alongside their correct data types.

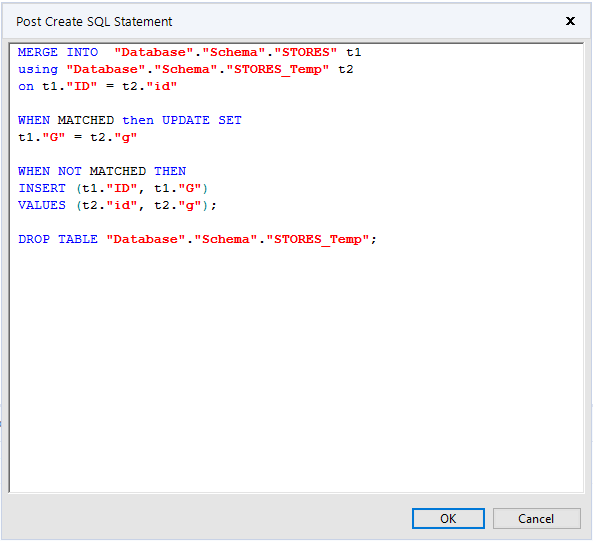

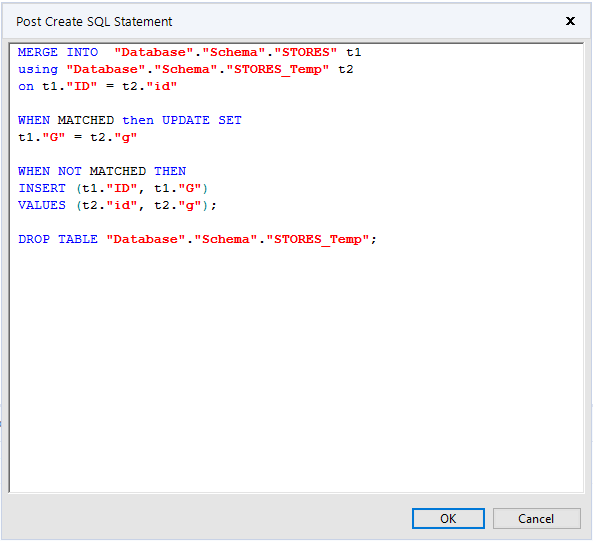

Second Stop PostSQL

MERGE INTO "Database"."Schema"."STORES" t1

using "Database"."Schema"."STORES_Temp" t2

on t1."ID" = t2."id"

WHEN MATCHED then UPDATE SET

t1."G" = t2."g"

WHEN NOT MATCHED THEN

INSERT (t1."ID", t1."G")

VALUES (t2."id", t2."g");

DROP TABLE "Database"."Schema"."STORES_Temp";

Now we use Snowflake Merge statements to push the data from the TEMP table to the PRODUCTION table. This means the GeoJSON is copied from a string field into a geography field which is why it needs to be a Snowflake agreed format. 😉

Then we drop the TEMP table to keep the database clean.

Now we have Spatial data in Snowflake!

Source: GIPHY

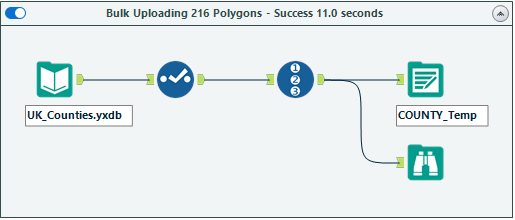

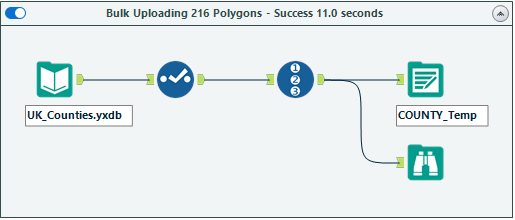

Let’s do this again but now push some Polygon data into Snowflake.

Similar process here as above:

- Load Spatial data

- Change Data Type of SpatialObj to VW_String and the max size to a reasonably large one

- Add a Record ID tool

- Output tool

- PreSQL – Remember it’s database.schema.table (and its your database name and schema)

drop table "Database"."Schema"."COUNTY";

create table "Database"."Schema"."COUNTY" (id integer, g geography);

2. PostSQL

MERGE INTO "Database"."Schema"."COUNTY" t1

using "Database"."Schema"."COUNTY_Temp" t2

on t1."ID" = t2."id"

WHEN MATCHED then UPDATE SET

t1."G" = t2."g"

WHEN NOT MATCHED THEN

INSERT (t1."ID", t1."G")

VALUES (t2."id", t2."g");

DROP TABLE "Database"."Schema"."COUNTY_Temp";

Congratulations you now have a polygon and point dataset in Snowflake!!

Tune in same time next week to learn how to do cool spatial SQL in Snowflake using Alteryx InDB tools!