Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.


Too many fields in record#1


I am trying to read a pipe-delimited file and I am receiving the error above.

The header row is 1,000 characters and contains 60 fields.

There is a total of 24,173 rows in the file.

The error message doesn't make much sense. Is there an upper limit on the number of fields in a delimited data file?

FWIW, Excel has no trouble reading and correctly parsing the data.


It seems that if I append a pipe (delimiter) character to the end of the header row, the file is correctly parsed. However, an empty field is appended to the result set.

This observation doesn't help much, though. I still need to be able to read the files as they stand.
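A quick Python illustration of why appending a pipe adds an empty field: splitting on a delimiter yields one more value than there are delimiters, so a trailing pipe produces a trailing empty string. The header names below are hypothetical stand-ins (the real 60 field names are not shown in the thread), and this shows string splitting in general, not the Input tool's internals.

```python
# Hypothetical header; the real file's field names are not shown in the thread.
header = "Name|Address|Phone"
header_with_trailing_pipe = header + "|"

# n delimiters always produce n + 1 values, so the trailing pipe adds an empty one.
print(header.split("|"))                     # ['Name', 'Address', 'Phone']
print(header_with_trailing_pipe.split("|"))  # ['Name', 'Address', 'Phone', '']
```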


Hi @nhunter!

In case an explanation helps, here is the behavior, along with my two cents on Excel.

If your data looks like:

Name|Address|Phone

Mark|Michigan|

Mark|Michigan

Mark|Michigan|555-1212|888-123-4567

Excel might parse this out with a blank field name and show NULL values appropriately. Excel would also parse out this row incorrectly:

Mark||Michigan|555-1212

It would move Michigan into the phone field because of the extra |.
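To make the positional shift concrete, here is a small Python sketch that assigns values to header columns strictly by position. It only illustrates a lenient reader in general; it is not how Excel or Alteryx is implemented, and `positional_parse` is a hypothetical helper.

```python
def positional_parse(header_line, line, delimiter="|"):
    """Map values to column names purely by position (a lenient, hypothetical reader)."""
    header = header_line.split(delimiter)
    values = line.split(delimiter)
    # zip() stops at the shorter list: a short row simply lacks the trailing columns,
    # and values beyond the last header column are silently dropped.
    return dict(zip(header, values))


header_line = "Name|Address|Phone"
sample_rows = [
    "Mark|Michigan|",
    "Mark|Michigan",
    "Mark|Michigan|555-1212|888-123-4567",
    "Mark||Michigan|555-1212",
]
for line in sample_rows:
    # Print the raw field count next to the positional mapping.
    print(len(line.split("|")), positional_parse(header_line, line))
```

The last row comes back with `Phone` set to `'Michigan'`, which is the shift described above, and the second phone number in the third row is dropped without any warning.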

Because Alteryx expects delimited data to conform to a consistent format, it prevents you from loading the data when it encounters a record with the wrong number of fields.

If you post a file containing only the first 2 or 3 records, I (or one of the many others helping here) would be happy to take a look and recommend something specific for you. My first priority is to help you with the issue. Once that is done, we can submit an idea for a future enhancement that makes the tool more forgiving of delimited files and allows the data to be read with caution.

Cheers,

Mark

Chaos reigns within. Repent, reflect and restart. Order shall return.

Please Subscribe to my YouTube channel.


Hi @nhunter,

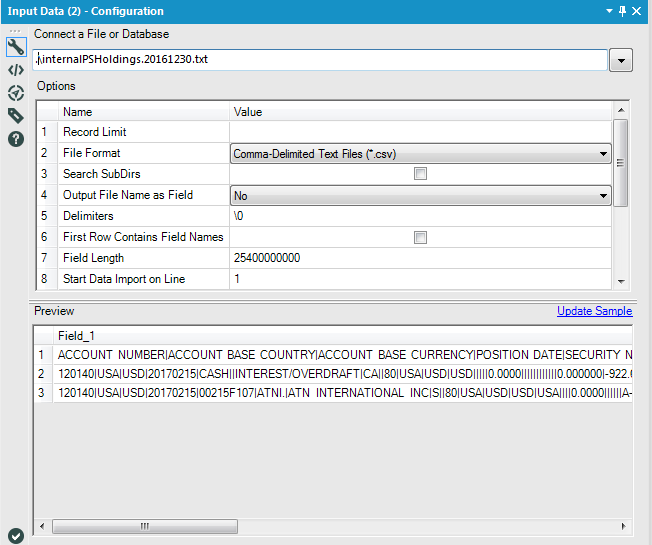

Generally, this error occurs because the field length set on input is too short, which truncates the data. If you increase the Field Length in the Input Data tool and read the file in with no delimiter (set the delimiter to \0), you should be able to read in the entire file. Then you can use a Text to Columns tool to parse the data on the pipe.
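A rough Python analogue of this two-step workaround, assuming a plain text file: each line is first kept whole as a single value (the counterpart of reading with the null-character delimiter), and only then split on the pipe, as Text to Columns would. The function names and the `data.txt` path are hypothetical. Because every line is split independently, a row with an unexpected number of fields no longer stops the whole read.

```python
def read_as_single_field(lines):
    """Keep each line whole (no delimiter), stripping only the line terminator."""
    return [line.rstrip("\r\n") for line in lines]


def split_on_pipe(single_field_rows, delimiter="|"):
    """Split each single-field row on the delimiter, like a Text to Columns step."""
    return [row.split(delimiter) for row in single_field_rows]


# Usage with a real file ("data.txt" is a hypothetical path):
# with open("data.txt", encoding="utf-8", newline="") as f:
#     rows = split_on_pipe(read_as_single_field(f))

sample = ["Name|Address|Phone\n", "Mark|Michigan|555-1212\n"]
rows = split_on_pipe(read_as_single_field(sample))
print(rows)  # [['Name', 'Address', 'Phone'], ['Mark', 'Michigan', '555-1212']]
```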

Senior Solutions Architect

Alteryx, Inc.


For additional color, here's a great knowledge base article: Error reading “FILEPATH\MYFILE.txt”: Too many fields in record #


Hi, Sophia,

I appreciate your response, but the KB article is not that helpful. Since I have more header fields than data fields, according to the article I should just get a warning like this:

'Warning: Input Data (1): Record #6: Not enough fields in record'

And "the last record in the third field" (presumably the article means the third field in the last record) will just be null.

But that's not what's happening, and I would be fine with that if it were. What actually happens is that I get an error message and no data is loaded.
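The rule the KB article describes can be sketched in Python to show why the two cases behave so differently. This is an assumption-laden model, not Alteryx's code: short records are padded with None and produce a warning, while a record with more fields than the header stops the read with an error. `strict_parse` and `TooManyFieldsError` are hypothetical names, and numbering records from the first data row is also an assumption.

```python
import warnings


class TooManyFieldsError(ValueError):
    """Hypothetical error type named after the message in this thread."""


def strict_parse(lines, delimiter="|"):
    lines = list(lines)
    header = lines[0].split(delimiter)
    records = []
    # Record numbering starts at 1 for the first data row (an assumption).
    for record_no, line in enumerate(lines[1:], start=1):
        values = line.split(delimiter)
        if len(values) > len(header):
            # More fields than the header: stop with an error, no data returned.
            raise TooManyFieldsError(f"Too many fields in record #{record_no}")
        if len(values) < len(header):
            # Fewer fields: warn and fill the missing fields with None (null).
            warnings.warn(f"Record #{record_no}: Not enough fields in record")
            values += [None] * (len(header) - len(values))
        records.append(dict(zip(header, values)))
    return records


print(strict_parse(["Name|Address|Phone", "Mark|Michigan"]))  # warns; Phone is None
```

Under these assumed rules, a header with one fewer field than the data rows fails at record #1, and appending a pipe to the header makes the counts match while adding an empty-named column, which is consistent with what was observed earlier in the thread.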

I tried loading the file without delimiters and then using the Text to Columns tool, but then all the fields are named Field1, Field2, ... FieldN.

How would I recover the field names from the original header row?


For your last question, use a Dynamic Rename tool with the "Take Field Names from First Row" option.
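In plain Python terms, "take field names from first row" amounts to promoting the first parsed row to column names and pairing every later row with them by position. The sketch below is an analogue for illustration, not Alteryx code; `take_field_names_from_first_row` is a hypothetical name.

```python
def take_field_names_from_first_row(rows):
    """Promote the first parsed row to column names; the remaining rows become data."""
    header, *data = rows
    # zip() pairs values with names by position; values beyond the header are dropped.
    return [dict(zip(header, values)) for values in data]


rows = [["Name", "Address", "Phone"], ["Mark", "Michigan", "555-1212"]]
print(take_field_names_from_first_row(rows))
# [{'Name': 'Mark', 'Address': 'Michigan', 'Phone': '555-1212'}]
```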


Hi @nhunter,

Helpful as my friends @RodL & @SophiaF were, I have a full answer for you.

YUCK!

In order to bring in your data cleanly, I did the following:

- Counted the pipes to determine that there are 60 fields of data.

- Imported the file as a single field:

  - Set the field length to 1024 bytes.

  - Used \0 as the delimiter.

- Trimmed the spaces off of the data (I think this is the root issue).

- Parsed the data on the pipe (|) delimiter.

- Removed the extra fields.
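The steps above can be approximated in plain Python. This is a sketch under stated assumptions, not the attached workflow: "trimming" is taken to mean stripping leading and trailing whitespace from each line, extra fields are dropped from the right, and short rows are padded with None. `clean_pipe_file` is a hypothetical name.

```python
def clean_pipe_file(lines, delimiter="|"):
    """Approximate the steps: count fields from the header, trim each line,
    split on the pipe, and drop any fields beyond the header's count."""
    # Trim leading/trailing whitespace (this also removes the line ending).
    trimmed = [line.strip() for line in lines]
    header_line, *data_lines = trimmed
    # "Counted the pipes": n delimiters in the header means n + 1 fields,
    # e.g. 59 pipes -> 60 fields (assuming the header has no trailing pipe).
    field_count = header_line.count(delimiter) + 1
    header = header_line.split(delimiter)
    records = []
    for line in data_lines:
        if not line:
            continue  # skip blank lines, e.g. a trailing newline at end of file
        values = line.split(delimiter)[:field_count]    # remove extra fields
        values += [None] * (field_count - len(values))  # pad short rows (assumption)
        records.append(dict(zip(header, values)))
    return records


sample = ["Name|Address|Phone\n", "Mark|Michigan|555-1212|   \n", "Mark|Michigan\n"]
for record in clean_pipe_file(sample):
    print(record)
```

Trimming alone does not remove a stray trailing pipe, which is why the "remove extra fields" step still matters in this sketch.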

Wrote this essay... Excel is more forgiving of extra spaces.

I am sending you the workflow in version 11 (I recommend upgrading to it if you haven't already), and in version 10 as well.

Cheers,

Mark


Thanks, Mark, much appreciated!
