Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.

Insert into Oracle Database hosted by AWS

I'm working on a project where I need to write approx. 190k records to an Oracle DB hosted on AWS every day. Reading from and writing to the database both work; the issue is speed. It is taking 10 hours to upload 190k records. I know a remotely hosted DB will be slow, but I wasn't expecting it to be this slow. I'm wondering if anyone has suggestions for speeding up the process, either in how I'm using Alteryx or in how the database is configured.

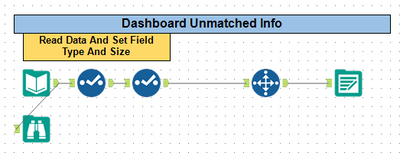

For our initial data load my workflow is simple:

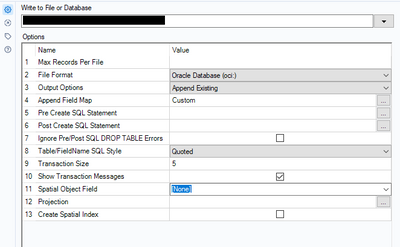

The database output tool configuration is below. This is using the standard Oracle OCI driver.

I set the transaction size to a low number to see if it made a difference from the extremely high default value. It didn't.

Is there a better Alteryx tool to use or is there a better driver maybe?

Thanks for any advice you can provide!

-Winston

Hi @Winston

If you want to reduce the amount of data moved out of your database, have you considered using the In-DB tools?

https://help.alteryx.com/20223/designer/database

If you use AWS, you may find this blog post interesting.

The Ins and Outs of In-DB: Do Something Awe-Inspiring with AWS

So a few issues:

1) Do you have enough RAM? Do you have enough RAM allocated to Alteryx?

2) Where are you getting this data from? Is it from a different DB? How long does it take to run your query and return the results in memory? Is it faster via In-DB? Note that to go from DB1 to DB2 (two different DBs) you would need to go in-memory (Data Stream Out/In), but if they are both on Oracle in AWS you would not need to.

3) Are there other latency issues (other users) affecting either DB?

4) What is the size of your Oracle DB footprint? Is it on an EC2 instance? How large is your EC2 instance?

5) Can you share your driver configuration (ODBC 64) and your output tool settings / Manage In-DB configurations? There could be something wrong on the driver side...

Also worth asking: what's up with the Spatial Object field [none]? How large are these objects? Have you tried the bulk loader?

Also, Transaction Size should be 0. A value of 5 would mean you are writing 36,000 temporary log files on a 180k upload...
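To make the transaction-size arithmetic concrete, here is a minimal sketch in Python. The helper name `commit_count` is hypothetical, and treating a transaction size of 0 as "everything in one transaction" is an assumption taken from the advice above rather than a checked description of the driver's behavior.

```python
import math


def commit_count(total_records: int, transaction_size: int) -> int:
    """Estimate how many transactions a load is split into.

    Assumption: a transaction size of 0 (or less) means all records
    are written in a single transaction.
    """
    if transaction_size <= 0:
        return 1
    return math.ceil(total_records / transaction_size)


if __name__ == "__main__":
    # 180k is the figure used in the reply; 190k is the figure from the question.
    for total in (180_000, 190_000):
        for size in (5, 1_000, 0):
            print(f"{total} records, transaction size {size}: "
                  f"{commit_count(total, size)} transaction(s)")
```

With the 190k records from the original question, a transaction size of 5 works out to 38,000 transactions rather than 36,000, which only strengthens the point about keeping the number of commits small.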

@Yoshiro_Fujimori, @apathetichell

Thanks for the suggestions. Status update is below.

Update: Using a bulk data loader, I have the time cut down to 1.5 hours, which is still unacceptable. I have also tried In-DB: if I read in all the records from the table and immediately write them to another table, it takes 22 seconds. But if I add an In-DB Browse tool (configured to show all data), it takes 1.25 hours.
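For a rough sense of scale, the timings reported so far can be turned into throughput figures. This is only a back-of-the-envelope sketch: it assumes all three runs moved the same 190k records (the In-DB copy may have covered a different row count), and the helper names are made up for illustration.

```python
RECORDS = 190_000  # daily volume from the original question

# Wall-clock times reported in the thread, converted to seconds.
RUN_SECONDS = {
    "standard output (10 h)": 10 * 3600,
    "bulk loader (1.5 h)": 1.5 * 3600,
    "In-DB table copy (22 s)": 22,
}


def records_per_second(records: int, seconds: float) -> float:
    """Average write throughput over the whole run."""
    return records / seconds


def ms_per_record(records: int, seconds: float) -> float:
    """Average time spent per record, in milliseconds."""
    return seconds / records * 1000


if __name__ == "__main__":
    for label, seconds in RUN_SECONDS.items():
        print(f"{label}: "
              f"{records_per_second(RECORDS, seconds):,.1f} records/s, "
              f"{ms_per_record(RECORDS, seconds):.2f} ms/record")
```

Under those assumptions the original run averages roughly 5 records per second, or close to 190 ms per record, which is in the range you would expect if each row (or each tiny batch) pays its own network round trip.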

Obviously In-DB is the way to go, without adding Browse tools to monitor data flow, but I'm running into the issue that the In-DB tools don't include everything I'm using, i.e., Append, Fuzzy Match, etc. Since it's the acquiring of the data that takes the bulk of the time, using the In-DB Data Stream Out tool, doing the appends and fuzzy matching, and then using In-DB Data Stream In so the results can be written to the database seems like it would double the run time of the workflow.

Any suggestions on how to get around streaming out and then streaming back in, just to use Append or Fuzzy Match?

Thanks

Something is configured wrong. Your Browse In-DB tool should not be adding that much load to your process. Are you logging everything by accident? Can you share your ODBC 64 config? What driver are you using?

You are correct that in order to use Alteryx-specific tools (i.e., Fuzzy Match) you will need to stream the data out... For Append there are workarounds... I think I mentioned that this is really RAM-specific... What kind of machine are you running (virtual/real)? What's the memory allocation? When you query, how much of a hit do you see in resource manager? Are you on a VPN?

My first hunch, because of the browse issue (unless you drastically increased the default size of 100 records), is that you have something misconfigured in your ODBC setup.
