Alteryx Analytics Cloud Product Ideas

Share your Alteryx Analytics Cloud product ideas, including Designer Cloud, Intelligence Suite and more - we're listening!


Hello all,

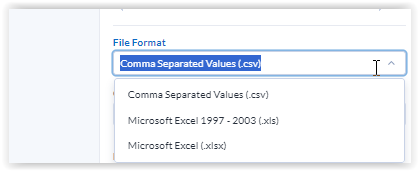

AutoML supports only one file format: CSV. And we can't even choose the separator (which differs between the US and France).

Best regards,

Simon

Hello folks,

Let's say it's my second-greatest disappointment so far! Alteryx on-premise is not perfect, but it supports a lot of file formats... and here there are only three, none of them performant or rich: xls, xlsx, csv.

I won't post a separate idea for each missing file format, but the bare minimum would be to support the same file formats as on-premise (such as yxdb, hyper, parquet, kml, etc.).

Best regards,

Simon

Hello,

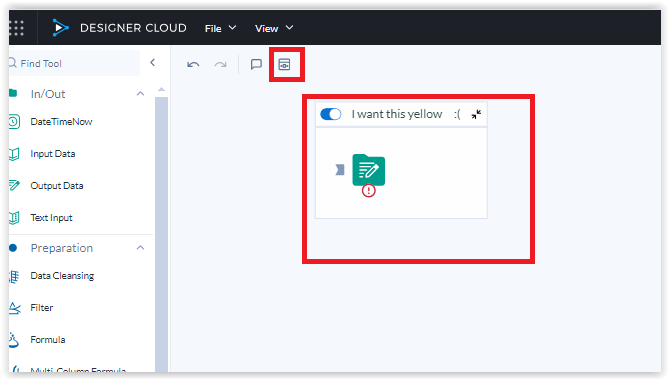

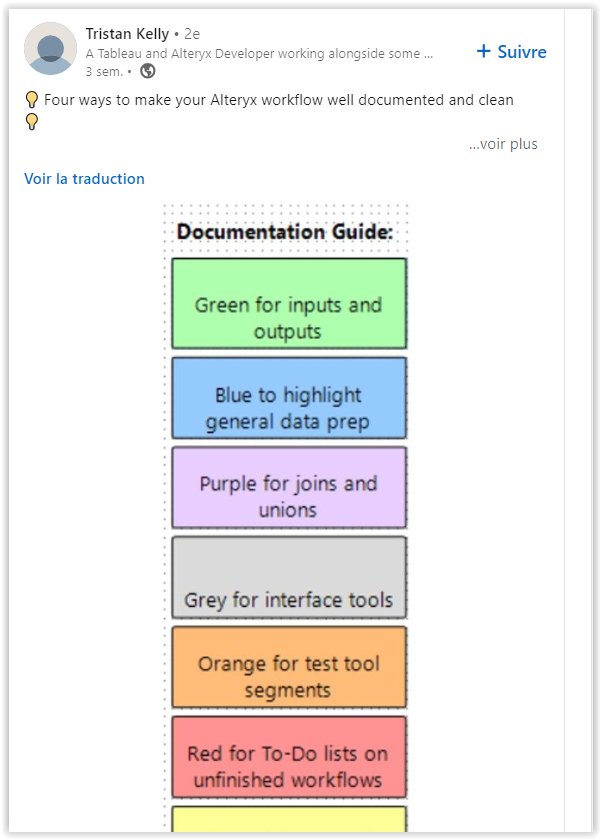

In Alteryx Designer on-premise, we can set a container's background and text color (and other settings). It's very useful because it lets us apply a color palette according to the functional or technical purpose of each container.

As an example of how popular this color feature is, see this post on LinkedIn:

Best regards,

Simon

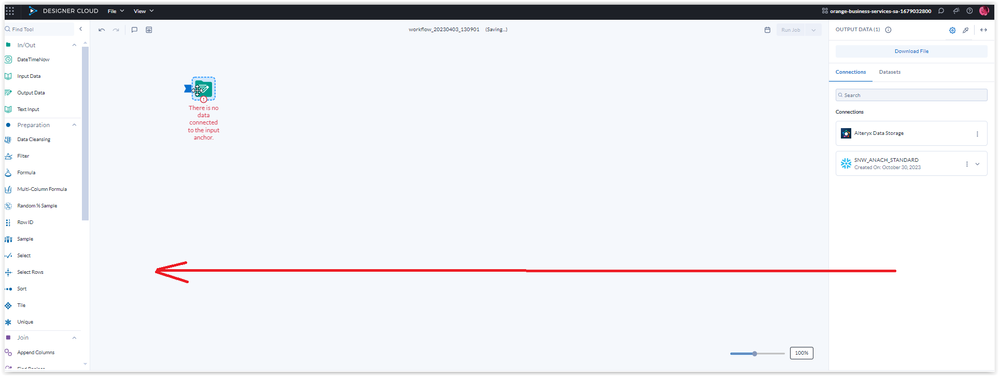

Hello all,

I would like to move the Tool Configuration panel to the left instead of the default right (and have this layout saved for me). Two reasons for that:

1/ the same experience as Designer on-premise

2/ less distance to travel with the mouse

(Also, if the tool palette could be moved to the top, that would be great.)

Best regards,

Simon

Hello all,

In Alteryx Designer, a star (asterisk) appears after the workflow name when it has unsaved changes. It would be nice to have the same feature in Cloud; it's an easy, standard way to convey that information.

Best regards,

Simon

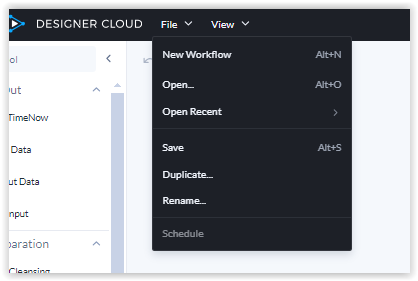

Hello,

I love standards, and Ctrl+S has been a common shortcut for the last four decades. Can we expect it to work in Alteryx Designer Cloud too, please? Even in the browser it seems possible, since the folks at Dataiku managed to do it.

Best regards,

Simon

Hello,

Correct me if I'm wrong, but we can't export or import a workflow. That sounds like a very easy and very useful function, especially when you develop something and want to share it with other users.

Best regards,

Simon

Use linked datasets created by GCP Analytics Hub as a data source in Dataprep. Detailed information is in the link below:

Can I use linked dataset (created by Analytic Hub in GCP) to build flows in DataPrep? (trifacta.com)

Currently, once a user deletes a flow, it is no longer visible to anyone, although it still exists in the database because flows are only soft-deleted. Admins could therefore be given an option to see all deleted flows and recover them if required, so that if someone deletes a flow by mistake, an admin can restore it. This should be a checkbox option, so that all the flows in a folder can be recovered at once. The same option could also be offered for folder recovery, restoring all the flows in the folder.

I want to create a dynamic output file name in both the Trifacta UI and the new Designer Cloud UI. I know that I can use a timestamp or a parameter, but parameterization in Trifacta seems to be static. Instead of having to manually override the parameter on every run, I want to take the desired file name from a field in the dataset. This functionality is commonly used in Desktop Designer, so I hope it will be added to the Cloud soon!
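To make the requested behavior concrete, here is a minimal Python sketch of "take the file name from a field": rows are grouped by the value of one field and each group is written to its own CSV file. This is only an illustration outside of Designer Cloud; the function name, the CSV format, and the assumption that all rows share the same keys are hypothetical.

```python
import csv
from collections import defaultdict
from pathlib import Path
from typing import Dict, Iterable, List


def write_by_filename_field(
    rows: Iterable[Dict[str, object]], field: str, out_dir: str
) -> List[Path]:
    """Write one CSV file per distinct value of `field`, named after that value.

    Assumes every row has the same keys (hypothetical simplification).
    """
    groups: Dict[str, List[Dict[str, object]]] = defaultdict(list)
    for row in rows:
        # Path(...).name drops any directory parts, so a value like "../x"
        # cannot make the file land outside out_dir.
        groups[Path(str(row[field])).name].append(row)

    target = Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)
    written: List[Path] = []
    for name, group in groups.items():
        path = target / f"{name}.csv"
        with path.open("w", newline="", encoding="utf-8") as handle:
            writer = csv.DictWriter(handle, fieldnames=list(group[0].keys()))
            writer.writeheader()
            writer.writerows(group)
        written.append(path)
    return written
```

With rows whose `file_name` field is "a", "b", "a", this writes `a.csv` (two data rows) and `b.csv` (one data row), which is the per-run dynamic naming the request describes.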

Dataprep currently supports parameterizing variables in custom SQL datasets. However, this requires all the tables used with the feature to have the same structure. This request is to allow the same functionality with tables that have different structures.

Example:

- Table A: `dev.animals.dogs`, with columns name | height | weight
- Table B: `dev.animals.cats`, with columns name | isFriendly

We would like to have a single custom SQL dataset whose query is simply:

`SELECT * FROM dev.animals.[typeOfAnimal]`

where typeOfAnimal is the parameterized variable, with a default value of dogs.
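A minimal Python sketch of how such a table-name parameter could be resolved, assuming the value is substituted as an identifier and checked against an allow-list (the allow-list and function name are hypothetical; this is not how Dataprep implements parameters). Table names generally cannot be passed as bind parameters, so validation stands in for escaping.

```python
# Hypothetical allow-list of tables in dev.animals that the parameter may name.
ALLOWED_ANIMALS = {"dogs", "cats"}


def build_query(type_of_animal: str = "dogs") -> str:
    """Resolve the typeOfAnimal parameter into the custom SQL query.

    Identifiers such as table names cannot be bound like values in most SQL
    dialects, so the input is validated against an allow-list before it is
    interpolated, to avoid SQL injection.
    """
    if type_of_animal not in ALLOWED_ANIMALS:
        raise ValueError(f"unknown table: {type_of_animal!r}")
    return f"SELECT * FROM dev.animals.{type_of_animal}"
```

`build_query()` resolves to the dogs table (columns name, height, weight) and `build_query("cats")` to the cats table (columns name, isFriendly); accepting the different result schemas of these resolved queries is exactly what the request asks Dataprep to support.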

Hi team,

We need a page where a user can manage all the email notifications they receive from all flows (success and failure).

Thank you

We would strongly like the ability to edit datasets created with custom SQL that have been shared with us. We think of Trifacta partly as a shared development space, so when a user needs to update a dataset they don't own, not being able to do so slows down our workflow considerably.

Create a connector to Mavenlink.

If a flow is shared between multiple editors and someone makes changes to it, there should be a way to see all the changes made to that flow by different users: for example, a trigger that notifies users as soon as someone changes the recipe, or a way to extract this information about the flow or the job. I have attached a snippet of the data that would be useful to us.

Right now there is no single place where team members can collectively create and share flows. Having the option to share folders among members, just as we can share flows, would make this a lot easier. For example, if there is a folder with 4 flows and I share the folder with my teammates, they could edit the flows, create new ones there, and see all 4 flows already present. But if I share only 2 of the 4 flows with someone, they see the folder but not the flows that weren't shared with them.

To monitor the status of a plan that runs several different flows (around 300 in my case), I send an HTTP request to Datadog to display failures and successes on a dashboard. The problem is that Datadog only understands epoch timestamps, not datetime values, and right now we cannot convert the timestamp into epoch time. I was thinking of approaching this problem in the following ways:

1) Having a pre-request script

2) Creating dynamic parameters in Dataprep, instead of fixed values, that can then be used in the HTTP request body

3) A workaround: creating a table that stores the flow name and timestamp, which we would use in a plan every time we run a flow. But this is not the right way: it would work, but it wastes time, since we would end up creating a separate table like this for each flow.
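For reference, the conversion being asked for is small. Here is a minimal Python sketch, assuming the timestamp arrives as an ISO 8601 string and that timestamps without a UTC offset are in UTC (both assumptions; `to_epoch_seconds` is a hypothetical helper, not a Dataprep or Datadog function). Note that `datetime.fromisoformat` only accepts a trailing `Z` from Python 3.11 onward, so offsets are written as `+00:00` here.

```python
from datetime import datetime, timezone


def to_epoch_seconds(timestamp: str) -> int:
    """Convert an ISO 8601 datetime string to Unix epoch seconds.

    Timestamps without a UTC offset are assumed to be in UTC.
    """
    dt = datetime.fromisoformat(timestamp)
    if dt.tzinfo is None:
        dt = dt.replace(tzinfo=timezone.utc)
    return int(dt.timestamp())


print(to_epoch_seconds("2023-01-01T00:00:00"))        # 1672531200
print(to_epoch_seconds("2023-01-01T01:00:00+01:00"))  # 1672531200
```

Treating naive values as UTC keeps the result independent of the machine's local time zone, which matters when the same value is compared across ~300 flow runs on a dashboard.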

We at Grupo Boticário currently have 13k Dataprep licenses and are close to the official internal launch, and we have noticed recurring requests for a translation of the tool. Since a translation would enable more users to adopt it in their day-to-day work, I would like to formalize and reinforce our request for a Brazilian Portuguese translation, and ask for an estimated timeline for this improvement.

We often use hashing functions like fingerprint in SQL (BigQuery) to mark or identify rows that match on specific attributes, or to generate UUIDs. I know it's possible to do this by adding UDFs, but a native function would be more convenient.
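As an illustration of what such a native function would compute, here is a minimal Python sketch that derives a deterministic signed 64-bit fingerprint and a name-based UUID from selected attributes. It uses SHA-256 and UUID version 5 from the standard library rather than the FarmHash algorithm behind BigQuery's fingerprint function, so its values will not match BigQuery's; the function names, separator, and namespace are assumptions.

```python
import hashlib
import uuid
from typing import Iterable

# ASCII "unit separator": unlikely to appear in real attribute values, so
# ("ab", "c") and ("a", "bc") produce different keys.
SEP = "\x1f"


def _row_key(values: Iterable[object]) -> str:
    # None is treated as an empty string (an assumption for this sketch).
    return SEP.join("" if v is None else str(v) for v in values)


def row_fingerprint(values: Iterable[object]) -> int:
    """Deterministic signed 64-bit fingerprint of a row's attributes."""
    digest = hashlib.sha256(_row_key(values).encode("utf-8")).digest()
    # Keep the first 8 bytes as a signed integer, the same range as INT64.
    return int.from_bytes(digest[:8], byteorder="big", signed=True)


def row_uuid(values: Iterable[object]) -> uuid.UUID:
    """Deterministic UUID (version 5) for the same attributes."""
    # NAMESPACE_OID is an arbitrary choice; any fixed namespace works.
    return uuid.uuid5(uuid.NAMESPACE_OID, _row_key(values))
```

Rows with identical values for the chosen attributes get identical fingerprints and UUIDs, which is what makes them usable for marking matching rows.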

We can migrate flows from one environment to another using the Trifacta APIs:

1) Export the flow from the source environment and import it into the target.

2) Rename the flow.

3) Share the flow with the appropriate users for the target environment.

4) Change the flow's inputs and outputs.



