Weekly Challenges

Challenge #131: Think Like a CSE... The R Error Message: cannot allocate vector of size...

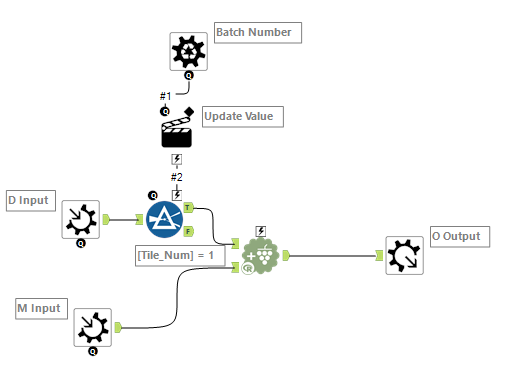

The Tile tool is really good for this, and I feel it's an underused tool in my toolset.

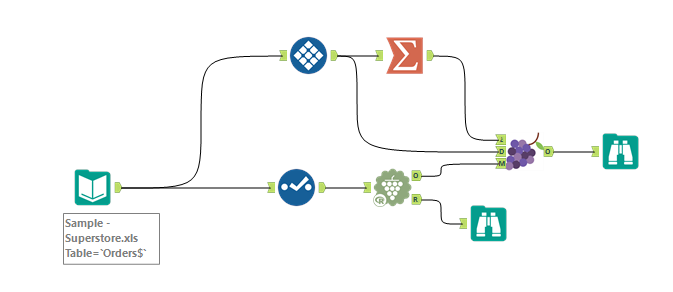

Some thoughts below. I'll put the notes in a text file so I have something attached to my response.

Done.

Here's my solution:

Looks like a memory issue in the Append Cluster tool:

cannot allocate vector of size 7531.1 Gb
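For a sense of scale, here is a rough Python sketch of the arithmetic behind that number. It assumes R's "Gb" means 1024³ bytes and that the vector holds 8-byte doubles; the n × n matrix shape is a purely hypothetical illustration, since the error message doesn't say what the vector contains. `doubles_in` and `square_matrix_side` are made-up helper names.

```python
import math

# Assumptions (not stated in the thread): R's "Gb" is 1024**3 bytes,
# and the vector stores 8-byte doubles.
BYTES_PER_DOUBLE = 8
BYTES_PER_GB = 1024 ** 3


def doubles_in(size_gb: float) -> float:
    """Number of 8-byte doubles that would occupy size_gb binary gigabytes."""
    return size_gb * BYTES_PER_GB / BYTES_PER_DOUBLE


def square_matrix_side(size_gb: float) -> float:
    """Side n of a hypothetical dense n x n double matrix of that size."""
    return math.sqrt(doubles_in(size_gb))


size = 7531.1  # the size reported in the error message
print(f"{doubles_in(size):.3e} doubles")
print(f"same size as an n x n matrix with n of about {square_matrix_side(size):,.0f}")
```

That works out to roughly 10^12 doubles, on the order of a dense matrix with about a million rows and a million columns. That is far beyond a typical workstation's RAM, so reducing what the tool receives per call (batching, fewer fields) looks like the practical lever.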

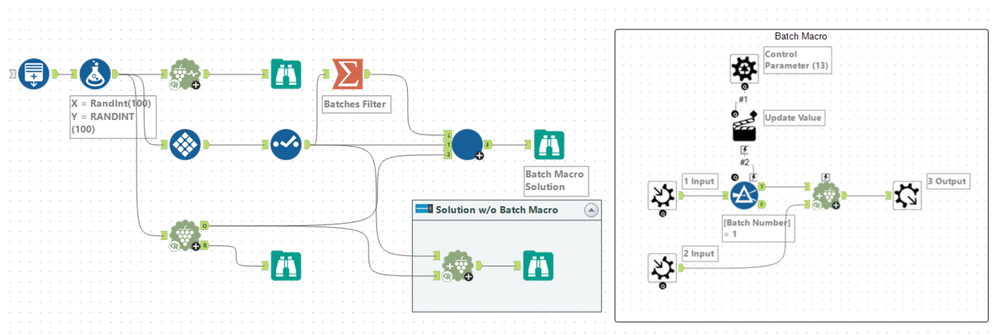

I made a workflow to see whether batching the Append Cluster step avoids the error.

I would advise using a batch macro (like the one above) to feed only a subset of the data into the Append Cluster tool at a time, which should hopefully resolve the error.
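A batch macro runs the same sub-workflow once per batch and unions the outputs. The idea can be sketched in Python as below. This is not how Alteryx implements batch macros; `batches`, `run_in_batches`, and `step` are hypothetical names, with `step` standing in for the Append Cluster tool. The assumption is that the step's memory use grows with the size of its input, so capping the batch size caps the peak.

```python
from typing import Callable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def batches(records: Sequence[T], batch_size: int) -> Iterator[Sequence[T]]:
    """Yield consecutive slices of at most batch_size records."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(records), batch_size):
        yield records[start:start + batch_size]


def run_in_batches(
    records: Sequence[T],
    batch_size: int,
    step: Callable[[Sequence[T]], List[R]],
) -> List[R]:
    """Run `step` once per batch and union the outputs, as a batch macro does."""
    output: List[R] = []
    for batch in batches(records, batch_size):
        # Only this batch is handed to the expensive step at any one time.
        output.extend(step(batch))
    return output


# Usage: a stand-in step that doubles each value, run four records at a time.
print(run_in_batches(list(range(10)), 4, lambda batch: [x * 2 for x in batch]))
```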

Suggestions below.

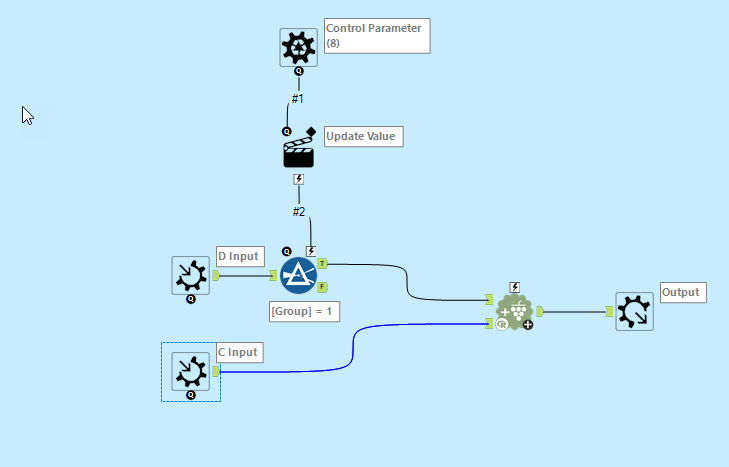

The memory issue occurs in the Append Cluster tool, which means the clustering itself is working but the labeling (assigning each record to a cluster) isn't. So we can batch the latter.

Nice to know!
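Why batching only the labeling step is safe: once the centroids are fixed, each record's label depends only on that record and the centroids, so splitting the records into batches can't change any label. A minimal sketch, assuming nearest-centroid assignment with squared Euclidean distance (my assumption about what labeling against a K-Means model does; `nearest_centroid`, `label_all`, and `label_in_batches` are hypothetical names):

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def nearest_centroid(point: Point, centroids: Sequence[Point]) -> int:
    """Index of the closest centroid by squared Euclidean distance (first wins ties)."""
    best_index, best_dist = 0, math.inf
    for i, centroid in enumerate(centroids):
        dist = sum((p - c) ** 2 for p, c in zip(point, centroid))
        if dist < best_dist:
            best_index, best_dist = i, dist
    return best_index


def label_all(points: Sequence[Point], centroids: Sequence[Point]) -> List[int]:
    """Label every point in one pass."""
    return [nearest_centroid(p, centroids) for p in points]


def label_in_batches(points: Sequence[Point], centroids: Sequence[Point], batch_size: int) -> List[int]:
    """Label the points batch by batch against the same fixed centroids."""
    labels: List[int] = []
    for start in range(0, len(points), batch_size):
        labels.extend(label_all(points[start:start + batch_size], centroids))
    return labels


centroids = [(0.0, 0.0), (10.0, 10.0)]  # produced once by the clustering step
points = [(1.0, 2.0), (9.0, 8.5), (0.5, -1.0), (11.0, 10.0), (4.0, 5.0)]
print(label_in_batches(points, centroids, batch_size=2))  # [0, 1, 0, 1, 0]
```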

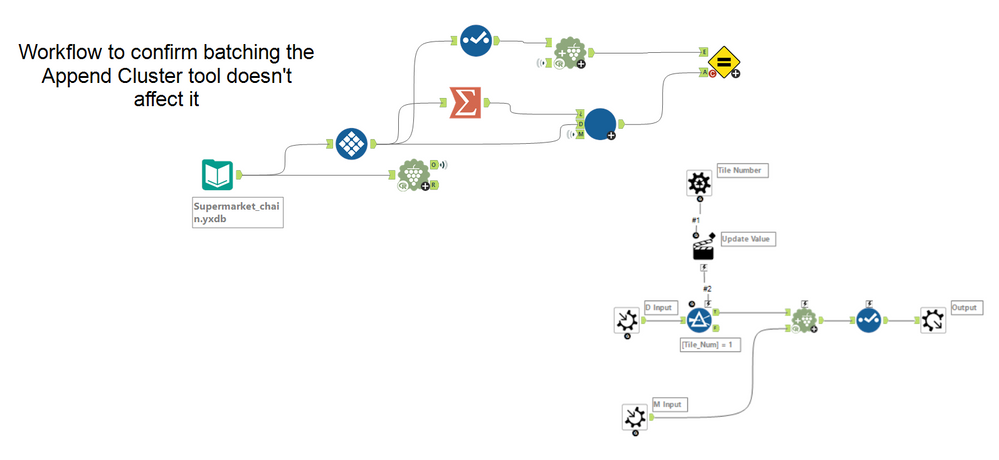

As an Alteryx CSE, I would investigate the error message and the tools used in the workflow, and would quickly find this in the R documentation:

"Error messages beginning 'cannot allocate vector of size' indicate a failure to obtain memory, either because the size exceeded the address-space limit for a process or, more likely, because the system was unable to provide the memory."

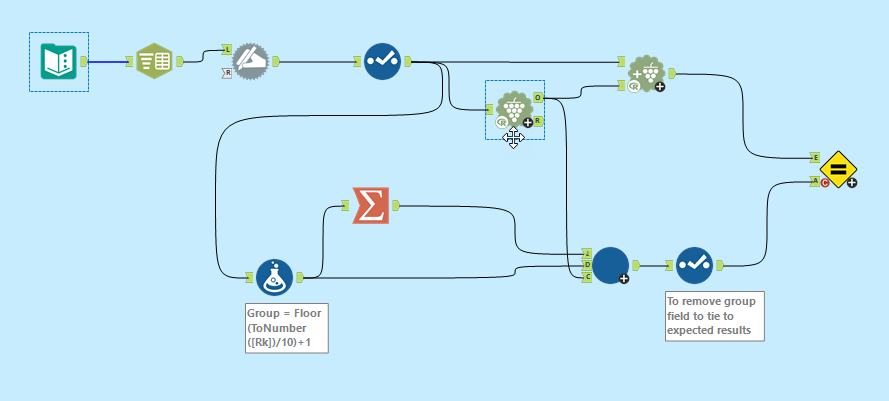

Then I'd ask the Community, and they'd suggest a batch macro, since only the Append Cluster tool is throwing the error. I'd send the user this example of a batch macro workflow using the Append Cluster tool: it uses the Tile tool to create X tiles (batches) and then runs those batches through the macro one at a time.
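The tiling step can be sketched in Python as an "equal records" split: number the records into X tiles whose sizes differ by at most one, then treat each tile as one batch. This is a hypothetical stand-in, not the Tile tool's actual algorithm (which may place leftover records differently); `equal_record_tiles` and `group_by_tile` are made-up names.

```python
from typing import Dict, List, Sequence, TypeVar

T = TypeVar("T")


def equal_record_tiles(n_records: int, n_tiles: int) -> List[int]:
    """Give each record (in order) a 1-based tile number; tile sizes differ by at most one."""
    if n_tiles < 1:
        raise ValueError("n_tiles must be at least 1")
    base, extra = divmod(n_records, n_tiles)
    tiles: List[int] = []
    for tile in range(1, n_tiles + 1):
        size = base + (1 if tile <= extra else 0)  # the first `extra` tiles get one more record
        tiles.extend([tile] * size)
    return tiles


def group_by_tile(records: Sequence[T], tiles: Sequence[int]) -> Dict[int, List[T]]:
    """Collect records per tile number; each group is one batch-macro iteration."""
    groups: Dict[int, List[T]] = {}
    for record, tile in zip(records, tiles):
        groups.setdefault(tile, []).append(record)
    return groups


records = [f"row{i}" for i in range(10)]
tiles = equal_record_tiles(len(records), 3)
print(tiles)  # [1, 1, 1, 1, 2, 2, 2, 3, 3, 3]
```

Each tile number can then drive one iteration of the batch macro, so only that tile's records reach Append Cluster at once.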

I would also suggest that the user consider the K-Centroids Diagnostics tool, which outputs a 'K-Means Cluster Assessment Report'. Per the Tool Mastery article, "The K-Centroids Diagnostics Tool provides information to assist in determining how many clusters to specify," which would likely benefit the user as well.
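To give a feel for what "determining how many clusters" can look like numerically, here is a sketch of the Calinski-Harabasz index, a standard statistic for comparing clusterings (higher means tighter, better-separated clusters). I haven't confirmed which statistics the K-Centroids Diagnostics report uses, so treat this as an illustration of the general idea rather than what the tool computes; `calinski_harabasz` is a hypothetical name.

```python
import math
from typing import Dict, Hashable, List, Sequence

Point = Sequence[float]


def _mean(points: Sequence[Point]) -> List[float]:
    """Coordinate-wise mean of a non-empty list of points."""
    return [sum(p[d] for p in points) / len(points) for d in range(len(points[0]))]


def _sq_dist(a: Point, b: Point) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def calinski_harabasz(points: Sequence[Point], labels: Sequence[Hashable]) -> float:
    """Between-cluster dispersion / within-cluster dispersion, each scaled by its degrees of freedom."""
    clusters: Dict[Hashable, List[Point]] = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)
    n, k = len(points), len(clusters)
    if not 1 < k < n:
        raise ValueError("need more than one cluster and fewer clusters than points")
    overall = _mean(points)
    between = within = 0.0
    for members in clusters.values():
        centre = _mean(members)
        between += len(members) * _sq_dist(centre, overall)
        within += sum(_sq_dist(p, centre) for p in members)
    if within == 0.0:
        return math.inf  # every point sits exactly on its cluster centre
    return (between / (k - 1)) / (within / (n - k))


points = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
print(calinski_harabasz(points, [0, 0, 1, 1]))  # well separated: 200.0
print(calinski_harabasz(points, [0, 1, 0, 1]))  # poor split: 0.02
```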

Sources:

https://stat.ethz.ch/R-manual/R-devel/library/base/html/Memory-limits.html

Some options:

1. Convert the CSV to .yxdb before running the workflow.

2. Drop all unneeded fields early on. Use only the bare minimum needed for cluster assignment, then bring the cluster ID back to the main data stream via a Join or Find Replace tool if needed.

3. Make sure all fields have appropriate sizes.

4. The cluster generation is working, so split the data for cluster assignment into batches (a batch macro with a fixed model object coming from the K-Centroids Cluster Analysis tool).
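Option 2 (keep only the fields the clustering needs, then join the cluster ID back) can be sketched in Python as below. This is a stand-in for the Alteryx data flow, not Alteryx code: `assign_with_minimal_fields`, the `RecordID` key, and the `Cluster` field name are hypothetical, and `assign` stands in for whatever assigns clusters (for example a batched Append Cluster). In Alteryx the saving would come from the tool raising the R error receiving fewer columns; here all rows are already in memory, so the sketch only shows the shape of the flow.

```python
from typing import Any, Callable, Dict, List, Sequence

Row = Dict[str, Any]


def assign_with_minimal_fields(
    rows: Sequence[Row],
    key: str,
    feature_fields: Sequence[str],
    assign: Callable[[List[List[float]]], List[int]],
    label_field: str = "Cluster",
) -> List[Row]:
    """Send only the features to the clustering step, then join labels back by key.

    `key` must be unique per row (like a RecordID).
    """
    keys = [row[key] for row in rows]
    features = [[float(row[f]) for f in feature_fields] for row in rows]
    labels = assign(features)  # the expensive step sees only the feature columns
    cluster_by_key = dict(zip(keys, labels))
    # Join the labels back onto the full rows (the Join / Find Replace step).
    return [{**row, label_field: cluster_by_key[row[key]]} for row in rows]


rows = [
    {"RecordID": 1, "Name": "a", "Notes": "free text", "X": 1.0, "Y": 2.0},
    {"RecordID": 2, "Name": "b", "Notes": "more free text", "X": 9.0, "Y": 8.0},
]
result = assign_with_minimal_fields(
    rows, "RecordID", ["X", "Y"], lambda feats: [0 if f[0] < 5 else 1 for f in feats]
)
print([(r["RecordID"], r["Cluster"]) for r in result])  # [(1, 0), (2, 1)]
```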
