Visualizing Automated Feature Engineering

This article originally appeared on the Innovation Labs blog and is featured on the Data Science Portal.

As data science relies more and more on automation, a growing concern is how to audit, evaluate, and understand the results of automated processes. Libraries like Featuretools, an open-source Python tool for automated feature engineering, simplify critical steps in the machine learning pipeline. Automated feature engineering can extract salient features from data without the significant time and knowledge required to do so manually. This automation also helps avoid human errors and discover features that a human might miss. However, this abstraction can make it harder to understand how a feature was generated and what it represents.

With manual feature engineering, you have to understand what the feature is in order to create it. Automated feature engineering, on the other hand, requires working backwards from the end result. In Featuretools, we rely on the auto-generated feature names to describe the feature. For a simple feature (one that uses only a single primitive), the name is usually easy to interpret. However, as primitives and features stack on each other, feature names become significantly more complicated and harder to understand intuitively.

To make it easier to understand how a feature was generated, Featuretools now has the ability to graph the lineage of a feature.

What is a Feature Lineage Graph?

Starting from the original data, lineage graphs show how each primitive was applied to generate intermediate features that form the basis of the target feature. This makes it easier to understand how a feature was automatically generated, and can help audit features by ensuring that they do not rely on undesirable base data or intermediate features.

As an example, let’s look at some features we can generate from this entity set:

import featuretools as ft

es = ft.demo.load_mock_customer(return_entityset=True)

es.plot()

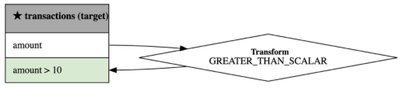

First, we can make a simple transform feature using a transform primitive. Transform primitives take one or more variables from an entity as an input and then output a new variable for that entity. They are applied to a single entity, so they don’t rely on any relationships between the entities.

If we wanted to know whether the amount for a given transaction was over 10, then we could use the GreaterThanScalar primitive. This would generate a feature that “transforms” the transaction amount into a boolean value that indicates whether the amount was over 10 for that transaction.

trans_feat = ft.TransformFeature(es['transactions']['amount'], ft.primitives.GreaterThanScalar(10))

ft.graph_feature(trans_feat)

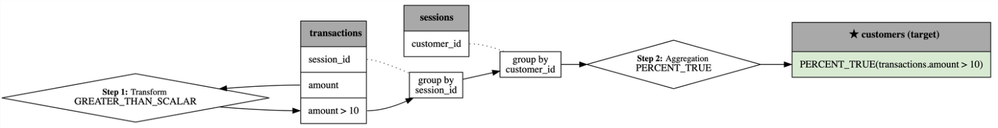
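To make the transform step concrete, here is a minimal plain-Python sketch of what a GreaterThanScalar-style transform computes. It does not call Featuretools; the function name `greater_than_scalar` and the sample amounts are made up for illustration.

```python
def greater_than_scalar(values, threshold):
    """Return one boolean per input value: True where value > threshold."""
    return [value > threshold for value in values]


# Hypothetical transaction amounts, one per row of a 'transactions' table.
amounts = [4.5, 10.0, 12.75, 99.0]

# The output has exactly one value per input row, in the same entity.
over_10 = greater_than_scalar(amounts, 10)
print(over_10)  # [False, False, True, True]
```

The point to notice is that the output has the same number of rows as the input, which is why a transform needs no relationship between entities.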

Next, let’s use the feature we just created to perform an aggregation with an aggregation primitive. Unlike transform primitives, aggregation primitives take related instances as an input and output a single value. They are applied across a parent-child relationship in an entity set.

Let’s say for a specific customer, we want to know what percentage of their transactions had a transaction amount over 10. We can use the PercentTrue aggregation primitive on the GreaterThanScalar feature we just generated to create this feature. The resulting feature “aggregates” all of the amount > 10 features for each customer over all transactions, and calculates what percentage of those were true for each customer.

agg_feat = ft.AggregationFeature(trans_feat, es['customers'], ft.primitives.PercentTrue)

ft.graph_feature(agg_feat)

The dotted lines in the graph indicate which variables are used to group the data. The aggregation is then applied to each grouping to create the final feature. Importantly, the graph not only shows the aggregation, it also shows the previous transformation we applied. In this way, feature lineage graphs can track feature development through each intermediary feature. Also note that, in order to go from the ‘transactions’ entity to the ‘customers’ entity, we first had to relate the two through the ‘sessions’ entity that lies between them.
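The grouping and aggregation described above can be sketched in plain Python. This is an illustration under stated assumptions, not how Featuretools computes the feature internally: the rows, the customer names, and the helper `percent_true_by_customer` are all hypothetical, and the result is expressed as a fraction between 0 and 1.

```python
from collections import defaultdict

# Hypothetical rows: each transaction belongs to a session,
# and each session belongs to a customer.
transactions = [
    {"session_id": 1, "amount": 5.0},
    {"session_id": 1, "amount": 20.0},
    {"session_id": 2, "amount": 15.0},
    {"session_id": 3, "amount": 8.0},
]
session_to_customer = {1: "alice", 2: "alice", 3: "bob"}


def percent_true_by_customer(transactions, session_to_customer, threshold=10):
    """Fraction of each customer's transactions with amount > threshold."""
    flags = defaultdict(list)
    for row in transactions:
        # Follow transactions -> sessions -> customers to find the group key.
        customer = session_to_customer[row["session_id"]]
        # Transform step: amount > threshold, one boolean per transaction.
        flags[customer].append(row["amount"] > threshold)
    # Aggregation step: share of True values within each customer's group.
    return {customer: sum(values) / len(values) for customer, values in flags.items()}


result = percent_true_by_customer(transactions, session_to_customer)
print(result)
```

Here the lookup through `session_to_customer` plays the role of the intermediate ‘sessions’ entity: transactions carry no customer key of their own, so the group is found by way of the session.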

In this example, we manually generated the feature we wanted to explore in order to demonstrate how feature lineage graphs work. With automated feature engineering, we no longer have the advantage of the understanding derived from manually creating a feature and instead have to work backwards from the final feature.

For example, compare this generated feature name: <Feature: customers.NUM_UNIQUE(sessions.MODE(transactions.DAY(transaction_time)))> to the associated lineage graph. Looking at the name alone, it’s much harder to clearly see what this feature actually represents and what steps Featuretools took to generate it. Feature lineage graphs show us how the feature was generated step by step. Graphing the lineage of the feature is another tool to help users gain deeper insight into what the feature means and understand how Featuretools generated it from the base data.
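One way to see what the lineage graph saves us from doing by hand is to unnest the name ourselves. The helper below is a hypothetical sketch, not part of Featuretools: it assumes names follow the `entity.PRIMITIVE(inner)` pattern shown above, with a single input at each level, and lists the steps from the base variable outward.

```python
import re

# Matches "entity.PRIMITIVE(inner)"; the inner part is unnested on the next pass.
_STEP = re.compile(r"^(\w+)\.(\w+)\((.*)\)$")


def unnest_feature_name(name):
    """Return the base variable and the (entity, primitive) steps, innermost first."""
    steps = []
    match = _STEP.match(name)
    while match:
        entity, primitive, inner = match.groups()
        steps.append((entity, primitive))
        name = inner
        match = _STEP.match(name)
    # Whatever is left after peeling every step is the base variable.
    return name, list(reversed(steps))


base, steps = unnest_feature_name(
    "customers.NUM_UNIQUE(sessions.MODE(transactions.DAY(transaction_time)))"
)
print(base)
for entity, primitive in steps:
    print(f"{primitive} on {entity}")
```

Read innermost first, the feature takes the day of each transaction time, finds the most common day within each session, and then counts the distinct values of that result across each customer's sessions. Features with several inputs would need a real parser, which is part of why a graph is easier to work with than the name.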

How We Can Use Lineage Graphs to Audit Generated Features

Feature lineage graphs are a powerful tool to help understand how a feature was generated, which in turn can be used to assist in auditing the features. Because we can visually see step by step what features were stacked in order to generate the target feature, we have full knowledge of all features and primitives that were used to generate the feature without having to do any complex analysis.

Knowing which data a feature is based on is a critical step in auditing a feature for three reasons:

First, it allows us to easily throw out features based on data we later realize we don’t want to use. For example, if we realize some of our data is contaminated in some way, we can use feature lineage graphs to determine which features are based on that data and therefore should be discarded.

Second, it makes obvious which original data the feature is based on. This is particularly important for explaining which original data was fed into the model and how that data may have impacted the result. Being able to clearly show how the original data was used is a critical part of analyzing a model and its results, especially in heavily regulated industries.

Finally, feature lineage graphs can also help audit a generated feature by showing which features were stacked in order to create it. For example, if we know that a feature has a lot of null values or is otherwise contaminated with bad data, we can easily identify and disregard any other feature that stacks on top of it.
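The same audit can be expressed programmatically once the lineage is known. The sketch below assumes the lineage has already been extracted into a plain dictionary mapping each feature name to its direct inputs; that dictionary, the feature names in it, and the helper names are hypothetical and not a Featuretools data structure.

```python
# Hypothetical lineage: each feature name maps to the names of its direct inputs.
# Base variables map to an empty list.
lineage = {
    "transactions.amount": [],
    "transactions.transaction_time": [],
    "transactions.amount > 10": ["transactions.amount"],
    "customers.PERCENT_TRUE(transactions.amount > 10)": ["transactions.amount > 10"],
    "transactions.DAY(transaction_time)": ["transactions.transaction_time"],
}


def depends_on(feature, tainted, lineage):
    """True if `feature` is `tainted` or stacks on it, directly or indirectly."""
    if feature == tainted:
        return True
    return any(depends_on(parent, tainted, lineage) for parent in lineage[feature])


def features_to_discard(tainted, lineage):
    """All features that should be dropped if `tainted` turns out to be bad data."""
    return sorted(name for name in lineage if depends_on(name, tainted, lineage))


discard = features_to_discard("transactions.amount", lineage)
for name in discard:
    print(name)
```

In this example, marking `transactions.amount` as contaminated flags both the transform feature and the aggregation stacked on top of it, while the feature derived from `transaction_time` is left alone.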

Implementation

Feature lineage graphs are implemented by converting feature objects in Featuretools to a collection of nodes and edges that we then render visually. This visualization is possible because every feature in Featuretools contains the entity it is a part of, the primitive it uses, and references to its input features.

You can see the approach we took here. The algorithm utilizes the feature structure by starting at the target feature and then recursively searching over input features in a depth-first manner. As it traverses, every feature is given a node ID and collected by entity. We also create a few additional edges and nodes based on the feature type:

- Aggregation features and direct features are applied across entities and contain data on the relationship path between themselves and their input features. As we traverse that path, we add the groupby/join variables as nodes in our graph.

- Transform features do not relate between entities, so if there is a groupby variable, it is always part of the same entity, and no relationship path needs to be explored. Because of this, each groupby transform feature stores its own groupby variable, which is used to add the groupby node to the graph.

Once we’ve traversed through all features, we have enough organized information to generate the final feature lineage graph. Tables are generated for each entity containing their features, and primitive and groupby nodes are generated from the nodes created during the feature exploration. We then use Graphviz to render the graph as an image.
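The traversal described above can be sketched roughly as follows. This is a simplified illustration, not the Featuretools implementation: the `Feature` dataclass is a hypothetical stand-in for a real feature object, primitives are drawn as edge labels rather than as their own nodes, groupby/join nodes are omitted, and the output is Graphviz DOT text rather than a rendered image.

```python
from dataclasses import dataclass, field


@dataclass
class Feature:
    """Simplified stand-in for a feature object (not the Featuretools class)."""
    name: str
    entity: str
    primitive: str = ""  # empty for base features
    inputs: list = field(default_factory=list)


def collect_lineage(target):
    """Depth-first walk from the target feature over its input features."""
    node_ids = {}   # feature name -> node id
    by_entity = {}  # entity name -> feature names found in that entity
    edges = []      # (input node id, output node id, primitive)

    def visit(feature):
        if feature.name in node_ids:  # already seen: reuse its node
            return node_ids[feature.name]
        node_id = f"n{len(node_ids)}"
        node_ids[feature.name] = node_id
        by_entity.setdefault(feature.entity, []).append(feature.name)
        for parent in feature.inputs:
            edges.append((visit(parent), node_id, feature.primitive))
        return node_id

    visit(target)
    return node_ids, by_entity, edges


def to_dot(node_ids, by_entity, edges):
    """Render the collected nodes and edges as Graphviz DOT text."""
    lines = ["digraph lineage {"]
    for index, (entity, names) in enumerate(by_entity.items()):
        # One cluster per entity, holding that entity's features.
        lines.append(f'  subgraph cluster_{index} {{ label="{entity}";')
        for name in names:
            lines.append(f'    {node_ids[name]} [label="{name}"];')
        lines.append("  }")
    for source, destination, primitive in edges:
        lines.append(f'  {source} -> {destination} [label="{primitive}"];')
    lines.append("}")
    return "\n".join(lines)


amount = Feature("amount", "transactions")
over_10 = Feature("amount > 10", "transactions", "GreaterThanScalar", [amount])
pct = Feature("PERCENT_TRUE(transactions.amount > 10)", "customers", "PercentTrue", [over_10])

node_ids, by_entity, edges = collect_lineage(pct)
dot_text = to_dot(node_ids, by_entity, edges)
print(dot_text)
```

The resulting text can be passed to any Graphviz renderer. Keeping the traversal separate from the rendering mirrors the two phases described above: first collect nodes by entity, then generate the graph.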

Conclusion

While automated feature engineering makes it significantly easier to generate new features, it is still critical to be able to understand those features before using them. This helps ensure that features being used are meaningful and interpretable. Feature lineage graphs make both of these goals easier to achieve by helping audit features as well as demonstrating the steps taken to generate features in the first place.

In general, system visualizations are a powerful tool that improves explainability and understanding while also simplifying critical steps like auditing. We’ve implemented feature lineage graphs for just this reason, and believe that the strategies we’ve shown here can be more broadly applied to a variety of tools that rely on complicated multi-step processes.
