Alteryx Designer Desktop Discussions


Upload Parquet to HDFS


I know from other people's posts on here that Alteryx doesn't natively support outputting columnar data such as Parquet files. I was wondering if anybody has created a workaround for uploading Parquet to HDFS. We're using Alteryx v11.7, so ideally I'd like a solution that uses the capabilities of this version, but I'm also interested in the best method going forward as we update Alteryx. Are there any clever shenanigans that can be done using the Run Command tool?

Thanks for your help!


- Labels: Database Connection, Output, Run Command


Hi @JonnyR

Have you voted on the idea here: https://community.alteryx.com/t5/Alteryx-Designer-Ideas/Add-Parquet-data-format-as-input-amp-output/...

And have you seen Durga's workaround here: https://community.alteryx.com/t5/Alteryx-Knowledge-Base/Access-Data-in-Parquet-Format/ta-p/80941

Thanks,

Joe


I think Durga's article has been reattributed here.

Principal Support Engineer -- Knowledge Management Coach

Alteryx, Inc.


For some reason, I am getting an "Access Denied" message when I try to access that link. Do you know why that might be?

Thanks in advance.


@Mogul1 Yes, that article was archived because it was found to be inaccurate. I'm sorry to be the bearer of bad news. I had tried to do something similar and mostly succeeded. Here is part of what worked for me. Note that this approach is not officially supported at the moment.

To create the table:

Text in the Comment tool:

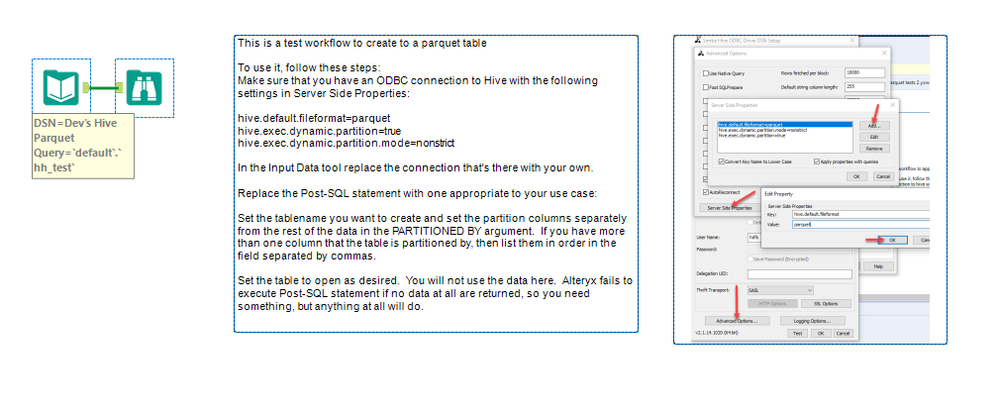

This is a test workflow to create a Parquet table

To use it, follow these steps:

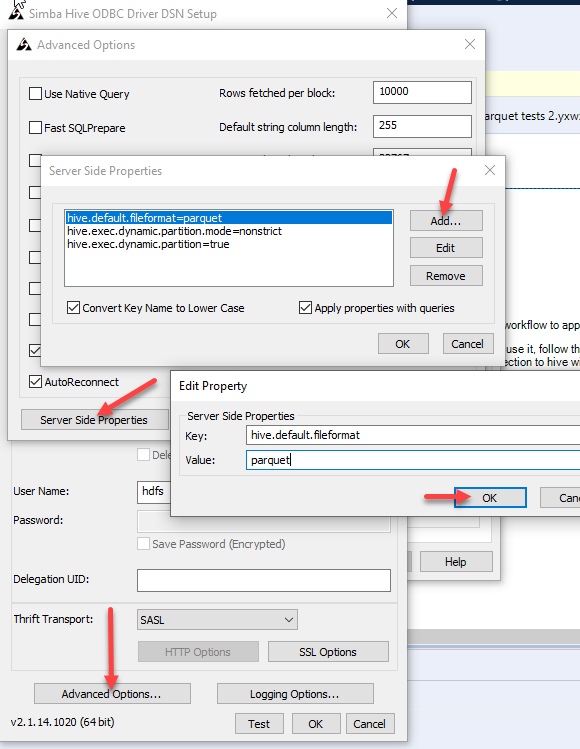

Make sure that you have an ODBC connection to Hive with the following settings in Server Side Properties:

hive.default.fileformat=parquet

hive.exec.dynamic.partition=true

hive.exec.dynamic.partition.mode=nonstrict
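The three Server Side Properties above are ordinary Hive configuration keys. As a hedged sketch, the Python below keeps them in one dict so every place that needs them uses identical values, renders them as the `key=value` lines entered in the DSN, and checks an existing configuration for missing keys. It also renders them as HiveQL `SET` statements, assuming your Hive endpoint accepts session-level `SET` (as Beeline does); that alternative is not part of the original workflow. All function names are hypothetical.

```python
from __future__ import annotations

# Hive settings from the post. fileformat makes new tables default to Parquet;
# the two dynamic-partition keys let INSERT ... PARTITION (col) derive the
# partition values from the data instead of requiring literal values.
REQUIRED_HIVE_SETTINGS = {
    "hive.default.fileformat": "parquet",
    "hive.exec.dynamic.partition": "true",
    "hive.exec.dynamic.partition.mode": "nonstrict",
}


def as_server_side_properties(settings: dict[str, str]) -> list[str]:
    """Render settings as the key=value lines entered under Server Side Properties."""
    return [f"{key}={value}" for key, value in settings.items()]


def as_set_statements(settings: dict[str, str]) -> list[str]:
    """Render the same settings as HiveQL SET statements (assumed session-level alternative)."""
    return [f"SET {key}={value};" for key, value in settings.items()]


def missing_settings(configured: dict[str, str]) -> list[str]:
    """Return required keys that are absent or set to a different value (case-insensitive)."""
    return [
        key
        for key, value in REQUIRED_HIVE_SETTINGS.items()
        if configured.get(key, "").lower() != value
    ]


if __name__ == "__main__":
    print("\n".join(as_server_side_properties(REQUIRED_HIVE_SETTINGS)))
    print("\n".join(as_set_statements(REQUIRED_HIVE_SETTINGS)))
```

For example, `missing_settings({"hive.default.fileformat": "PARQUET"})` reports that both dynamic-partition keys still need to be added to the DSN.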

In the Input Data tool, replace the connection that's there with your own.

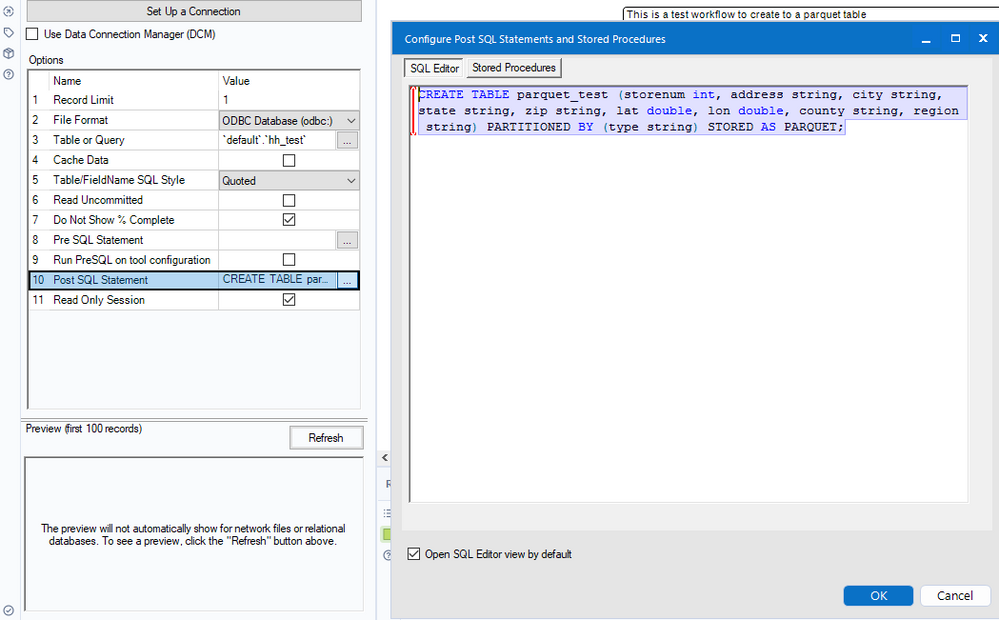

Replace the Post-SQL statement with one appropriate to your use case:

Set the table name you want to create, and list the partition columns separately from the rest of the columns in the PARTITIONED BY clause. If the table is partitioned by more than one column, list them in order, separated by commas.

Set the table to open to any table you like; you will not use its data. Alteryx does not execute the Post-SQL statement if the query returns no data at all, so the query must return something, but anything will do.

Text version of the Post-SQL statement I used:

CREATE TABLE parquet_test (storenum int, address string, city string, state string, zip string, lat double, lon double, county string, region string) PARTITIONED BY (type string) STORED AS PARQUET;
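The key detail in this statement is that the partition column (`type`) appears only in PARTITIONED BY and not in the main column list. If you would rather generate this Post-SQL text from your field list than type it by hand, a minimal Python sketch follows; `build_create_table` is a hypothetical helper, not part of Alteryx, and it assumes you already know the Hive type of each field.

```python
from __future__ import annotations


def build_create_table(
    table: str,
    columns: list[tuple[str, str]],
    partition_cols: list[str],
) -> str:
    """Build a Hive CREATE TABLE ... STORED AS PARQUET statement.

    `columns` holds every (name, Hive type) pair in the data. Names listed in
    `partition_cols` are moved out of the main column list and declared in
    PARTITIONED BY, in the order given.
    """
    types = dict(columns)
    unknown = [c for c in partition_cols if c not in types]
    if unknown:
        raise ValueError(f"partition columns not in data: {unknown}")

    # Regular columns keep their original order; partition columns are excluded.
    regular = [f"{name} {typ}" for name, typ in columns if name not in partition_cols]
    # Partition columns are declared separately, in the order the user lists them.
    partitioned = [f"{name} {types[name]}" for name in partition_cols]

    return (
        f"CREATE TABLE {table} ({', '.join(regular)}) "
        f"PARTITIONED BY ({', '.join(partitioned)}) STORED AS PARQUET;"
    )
```

Passing the ten fields of the sample data (with `type` as the only partition column) and the table name `parquet_test` reproduces the statement above character for character.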

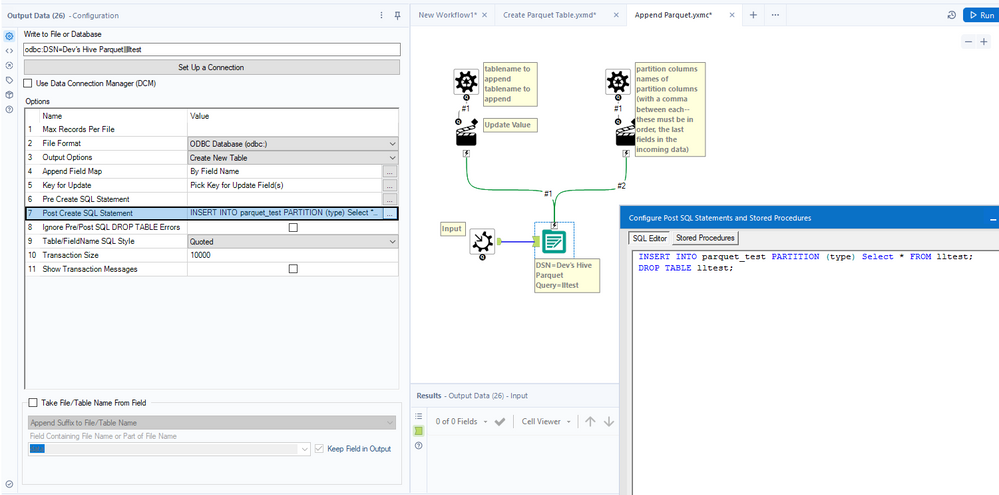

To append:

Text in Comment tool:

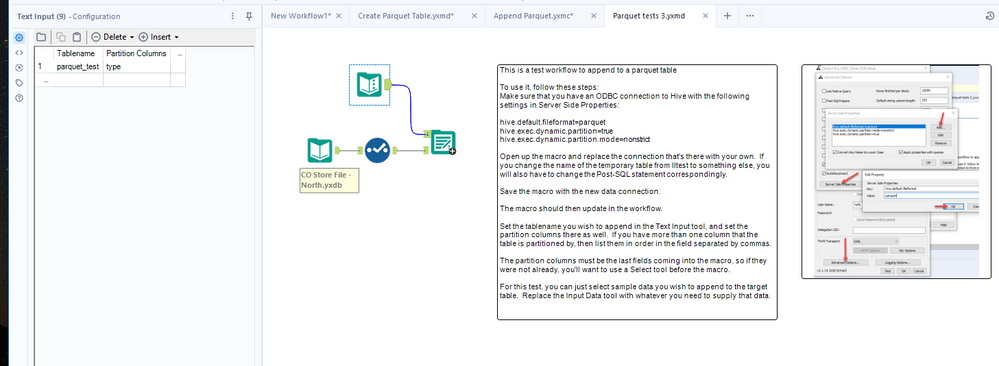

This is a test workflow to append to a Parquet table

To use it, follow these steps:

Make sure that you have an ODBC connection to Hive with the following settings in Server Side Properties:

hive.default.fileformat=parquet

hive.exec.dynamic.partition=true

hive.exec.dynamic.partition.mode=nonstrict

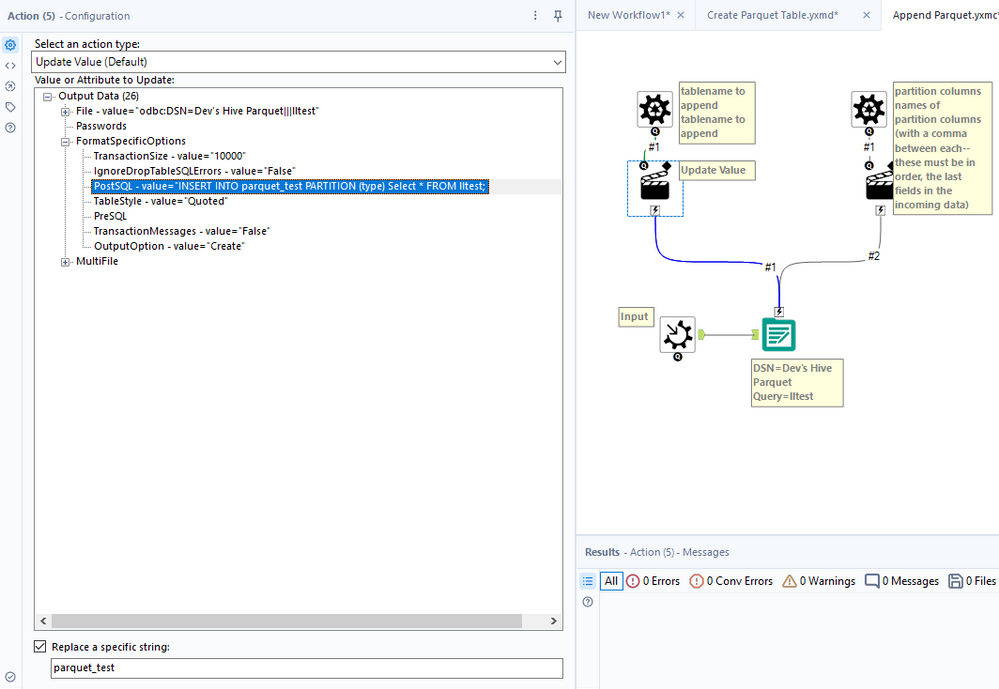

Open up the macro and replace the connection that's there with your own. If you change the name of the temporary table from lltest to something else, you will also have to change the Post-SQL statement correspondingly.

Save the macro with the new data connection.

The macro should then update in the workflow.

Set the name of the table you wish to append to in the Text Input tool, and set the partition columns there as well. If the table is partitioned by more than one column, list them in order, separated by commas.

The partition columns must be the last fields coming into the macro, so if they are not already, use a Select tool before the macro to move them to the end.
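The ordering rule comes from Hive itself: in a dynamic-partition insert, the partition values are taken from the trailing columns of the SELECT, in the same order as the PARTITION (...) clause. The sketch below shows the reordering the Select tool needs to perform, in plain Python; `partition_columns_last` is a hypothetical helper used only for illustration.

```python
from __future__ import annotations


def partition_columns_last(fields: list[str], partition_cols: list[str]) -> list[str]:
    """Return `fields` reordered so the partition columns come last.

    Non-partition fields keep their relative order; partition columns are
    appended in the order given, which must match the PARTITION (...) clause.
    """
    missing = [c for c in partition_cols if c not in fields]
    if missing:
        raise ValueError(f"partition columns not in data: {missing}")
    others = [f for f in fields if f not in partition_cols]
    return others + list(partition_cols)
```

For instance, fields `["type", "storenum", "city"]` with partition column `type` become `["storenum", "city", "type"]`, which is the order you would set in the Select tool.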

For this test, you can just select sample data you wish to append to the target table. Replace the Input Data tool with whatever you need to supply that data.

Text of the Post-Create SQL statement I used:

INSERT INTO parquet_test PARTITION (type) SELECT * FROM lltest;

DROP TABLE lltest;
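These two statements depend only on the target table name, the temporary table name, and the partition columns set in the Text Input tool, so they can be generated together. A hedged Python sketch follows; `build_append_sql` is a hypothetical helper, and it assumes the temporary table (`lltest` in the post) already has the target's columns in the same order with the partition columns last, so that `SELECT *` lines up with the target.

```python
from __future__ import annotations


def build_append_sql(target: str, staging: str, partition_cols: list[str]) -> str:
    """Build the Post-Create SQL that appends a staging table to a partitioned
    Parquet table and then drops the staging table.

    Using the same `staging` name in both statements avoids the mismatch that
    occurs when the temporary table is renamed in only one place.
    """
    if not partition_cols:
        raise ValueError("at least one partition column is required")
    partition_list = ", ".join(partition_cols)
    return (
        f"INSERT INTO {target} PARTITION ({partition_list}) SELECT * FROM {staging};\n"
        f"DROP TABLE {staging};"
    )
```

With `target="parquet_test"`, `staging="lltest"`, and partition column `type`, the output matches the two statements above.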

I wish you the best of luck.

Principal Support Engineer -- Knowledge Management Coach

Alteryx, Inc.
