Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.- Community

- :

- Community

- :

- Participate

- :

- Discussions

- :

- Designer Desktop

- :

- Python script not populating all of SQL query usin...

Python script not populating all of SQL query using Alteryx, ok in Visual Studio

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

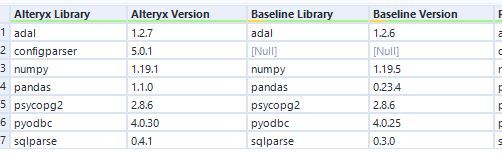

An odd outcome of a query into MS's APS SQL Server, where one column returns as nulled values on my machine where Alteryx is running, and from a peers machine where the result of Visual Studios run happened is complete. Thinking a likely culprit was a difference in libraries, I pulled that from both, shown here:

I was "hoping" that on the my local machine I had outdated libraries, but just the opposite is the case. The second area I covered was ODBC drivers, using both "SQL Server Native Client 11.0" and "ODBC Driver 17 for SQL Server" - that offered no difference.

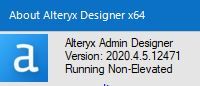

The top snip shows the data that is not being returned, the bottom the result from VS -

I can certainly install deprecated versions of these libraries and import them to show a true like-for-like, it just seems a bit counter intuitive that a subsequent version would have less functionality from a driver pov.

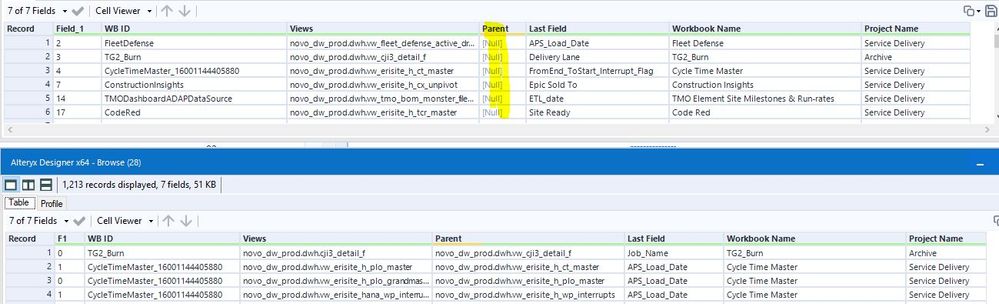

This is the necessary Alteryx info, running it elevated makes no difference either -

Thanks,

Sean

- Labels:

-

Database Connection

-

Python

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Hello @salbol1

Thanks for posting to the Community!

It's hard to judge what's going on since we don't see your python script or your workflow.

While our support team cannot assist you with your python, they may be able to help troubleshoot why the tool isn't working correctly for you.

I would recommend opening a case with Support to see if they can help troubleshoot the Alteryx side of this issue.

Thanks!

TrevorS

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

This is the python script that is being run. The purpose of this module is to identify all the sources and sub-sources that feed Tableau PDS creation, or custom SQL that is performed against these base views and tables. The intent is to be able to see in near real time the status' of the datasources feeding workbooks, and hence, dashboards so we may provide a comprehensive view of the health of the data we are providing to our end users. These outputs feed timestamping we include as standard on our dashboard ribbons, and can alert the respective dashboard owners (as well as support teams) when a system based source is not refreshing as it should be.

import psycopg2

import pyodbc

import configparser

import os

import os.path

import sys

import subprocess

import smtplib

from psycopg2 import Error

import datetime as dt

import smtplib

import shutil

import adal

import pandas as pd

import numpy as np

import re

import sqlparse

# APS Database connection information left out

Server = 'xxx.xxx.xxx.xxx,yyyyy'

database = 'NOVO_DW_PROD'

username = 'XXXXXXXXXXX'

password = 'xxxxxxx'

driver = '{SQL Server Native Client 11.0}

try:

# Connect to an existing database

connection = pyodbc.connect('DRIVER='+driver+';SERVER='+server+';PORT=1433;DATABASE='+database+';UID='+username+';PWD='+ password)

# Create a cursor to perform database operations

cursor = connection.cursor()

# Executing a SQL query to get the View definitions

cursor.execute(""" SELECT REPLACE(REPLACE(DB_Schema, '[',''),']','.') + REPLACE(REPLACE(Name, '[',''),']','') AS ViewName

, Definition

FROM(

select 'novo_dw_prod.' + lower(SUBSTRING(definition, CHARINDEX('[',definition), (CHARINDEX(']',definition) - CHARINDEX('[',definition)+1))) AS DB_Schema

, lower(SUBSTRING(definition, CHARINDEX('[',definition,CHARINDEX('[',definition)+1), 1+ CHARINDEX(']',definition,CHARINDEX(']',definition)+1) - CHARINDEX('[',definition,CHARINDEX('[',definition)+1))) AS Name

, lower(replace(replace(definition,'[','' ),']','')) as definition

from NOVO_DW_PROD.sys.sql_modules

UNION ALL

select 'eris_hana_prod.' + lower(SUBSTRING(definition, CHARINDEX('[',definition), (CHARINDEX(']',definition) - CHARINDEX('[',definition)+1))) AS DB_Schema

, lower(SUBSTRING(definition, CHARINDEX('[',definition,CHARINDEX('[',definition)+1), 1+ CHARINDEX(']',definition,CHARINDEX(']',definition)+1) - CHARINDEX('[',definition,CHARINDEX('[',definition)+1))) AS Name

, lower(replace(replace(definition,'[','' ),']','')) as definition

from ERIS_HANA_PROD.sys.sql_modules

)T

where REPLACE(REPLACE(DB_Schema, '[',''),']','.') + REPLACE(REPLACE(Name, '[',''),']','') is not null

""")

# Fetch result

record = cursor.fetchall()

# Executing a SQL Query to get the Last Field from the Information Schema

cursor.execute(""" SELECT lower(TABLE_CATALOG+ '.' +TABLE_SCHEMA + '.' + TABLE_NAME) AS ColumnName

, Column_Name

FROM NOVO_DW_PROD.INFORMATION_SCHEMA.COLUMNS

INNER JOIN (SELECT lower(TABLE_CATALOG+ '.' +TABLE_SCHEMA + '.' + TABLE_NAME) AS CName

, max(ordinal_position) AS Pos

FROM NOVO_DW_PROD.INFORMATION_SCHEMA.COLUMNS

WHERE TABLE_SCHEMA = 'DWH'

GROUP BY lower(TABLE_CATALOG+ '.' +TABLE_SCHEMA + '.' + TABLE_NAME))P ON P.CName = lower(TABLE_CATALOG+ '.' +TABLE_SCHEMA + '.' + TABLE_NAME)

and P.Pos = ordinal_position

UNION ALL

SELECT lower(TABLE_CATALOG+ '.' +TABLE_SCHEMA + '.' + TABLE_NAME) AS ColumnName

, Column_Name

FROM ERIS_HANA_PROD.INFORMATION_SCHEMA.COLUMNS

INNER JOIN (SELECT lower(TABLE_CATALOG+ '.' +TABLE_SCHEMA + '.' + TABLE_NAME) AS CName

, max(ordinal_position) AS Pos

FROM ERIS_HANA_PROD.INFORMATION_SCHEMA.COLUMNS

WHERE TABLE_SCHEMA = 'DWH'

GROUP BY lower(TABLE_CATALOG+ '.' +TABLE_SCHEMA + '.' + TABLE_NAME))P ON P.CName = lower(TABLE_CATALOG+ '.' +TABLE_SCHEMA + '.' + TABLE_NAME)

and P.Pos = ordinal_position

""")

# Fetch result

LastField = cursor.fetchall()

# Executing a SQL Query to get the views to run the crawler on

cursor.execute(""" SELECT DISTINCT [Workbook Id], [APS Views], [Workbook Name], [Project Name]

FROM DWH.VW_MNBO_Rept_Tableau_WB_Datasource

""")

apsViews = cursor.fetchall()

# Creating a dictionary to map the last field name for the views

lastfieldDf = pd.DataFrame.from_records(LastField, columns = ['View Name', 'Last Field'])

lfdict = lastfieldDf.set_index('View Name')['Last Field'].to_dict()

# Creating a lookup for View definitions and cleaning up the comments from the Definition using sqlparse

df = pd.DataFrame.from_records(record, columns = ['View Name', 'Definition'])

df.set_index('View Name', inplace = True)

df['Definition'] = df['Definition'].map(lambda x: sqlparse.format(x, strip_comments = True))

# Create an output file of all the datasources the crawler needs to run on

apsViewsDfAll = pd.DataFrame.from_records(apsViews, columns=['WB Name','Main', 'WB', 'Project Name'])

apsViewsDf = apsViewsDfAll[['WB Name', 'Main']]

apsViewsDf.to_csv('C:\\Users\\******\\Desktop\\APSCrawler\\datasources.csv')

# Read in the datasources as a df

df = pd.read_csv('C:\\Users\\*********\\Desktop\\APSCrawler\\datasources.csv')

##df.head()

Alteryx.write(apsViewsDf,1)

# Perform some data cleansing

df['DS'] = df["Main"].str.split(" ", 1, expand=True)[0]

df = df[['WB Name', 'DS']]

df['DS'] = df['DS'].str.replace('novo_dw_prod.vw', 'novo_dw_prod.dwh.vw')

df['DS'] = df['DS'].str.replace('+', '')

df = df[df['DS'].str.startswith('novo') | df['DS'].str.startswith('eris') ]

# Main execution of looping to get the fundamental views

reviewd_list = []

limit = 10

cnt = -1

dfmain = pd.DataFrame(columns = ['Views', 'WBDS', 'Parent', 'WB'])

while cnt < limit:

cnt +=1

if cnt == 0:

for j in df['WB Name']:

for i in df[df['WB Name']==j]['DS']:

dict_test = {}

reviewd_list.append(j+' '+i)

try:

stri = df.loc[i,'Definition']

# stri = sqlparse.format(stri, strip_comments = True)

except:

continue

lst = []

lst.append(re.findall('novo_dw_prod.dwh.\w+', stri))

lst.append(re.findall('eris_hana_prod.dwh.\w+', stri))

lst.append(re.findall(' dwh.\w+', stri))

lst = [item.replace(' ','novo_dw_prod.') for sublist in lst for item in sublist]

dict_test[j+';'+i] = list(set(lst))

dfcat = pd.DataFrame.from_dict(dict_test)

dfcat['WBDS'] = j+';'+i

dfcat.columns = ['Views', 'WBDS']

dfcat['Parent'] = i

dfcat['WB'] = j

dfmain = pd.concat([dfmain, dfcat])

dfmain = dfmain[dfmain['WBDS'] != dfmain['WB']+';'+dfmain['Views']]

else:

for j in dfmain['WB']:

for i in dfmain[dfmain['WB']==j]['Views']:

dict_test = {}

lst = []

if j+' '+i in reviewd_list:

continue

else:

reviewd_list.append(j+' '+i)

try:

stri = df.loc[i,'Definition']

# stri = sqlparse.format(stri, strip_comments = True)

lst = []

lst.append(re.findall('novo_dw_prod.dwh.\w+', stri))

lst.append(re.findall('eris_hana_prod.dwh.\w+', stri))

lst.append(re.findall(' dwh.\w+', stri))

lst = [item.replace(' ','novo_dw_prod.') for sublist in lst for item in sublist]

dict_test[j+';'+i] = list(set(lst))

dfcat = pd.DataFrame.from_dict(dict_test)

dfcat['WBDS'] = j+';'+i

dfcat.columns = ['Views', 'WBDS']

dfcat['Parent'] = i

dfcat['WB'] = j

dfmain = pd.concat([dfmain, dfcat])

dfmain = dfmain.drop_duplicates()

except KeyError:

continue

dfmain = dfmain[dfmain['WBDS'] != dfmain['WB']+';'+dfmain['Views']]

dfmain = dfmain[['WB', 'Views', 'Parent']]

dfm_orig = df

dfm_orig.columns = ['WB', 'Views']

dfm_orig['Parent'] = ''

dfmain = pd.concat([dfmain, dfm_orig])

dfmain['Last Field'] = dfmain['Views'].map(lfdict)

dfmain = dfmain[dfmain['Last Field'].notna()]

wbnameIDdict = apsViewsDfAll.set_index('WB Name')['WB'].to_dict()

wbprojectIDdict = apsViewsDfAll.set_index('WB Name')['Project Name'].to_dict()

dfmain['Workbook Name'] = dfmain['WB'].map(wbnameIDdict)

dfmain['Project Name'] = dfmain['WB'].map(wbprojectIDdict)

dfmain.columns = ['WB ID', 'Views', 'Parent', 'Last Field', 'Workbook Name', 'Project Name']

dfmain.to_csv('C:\\Users\\*********\\Desktop\\APSCrawler\\Script3_output.csv')

except (Exception, Error) as error:

logFile = 'C:\\Users\\*********\\Desktop\\APSCrawler\\Log'+'Error_Log_' + strftime("%a %d/%m/%Y") + '.txt'

if os.path.exists(logFile):

append_write = 'a' # append if already exists

else:

append_write = 'w' # make a new file if not

f = open(logFile,append_write)

f.write(filename + "-" + error + '\n')

f.close()

finally:

if (connection):

cursor.close()

connection.close()

-

Academy

6 -

ADAPT

2 -

Adobe

204 -

Advent of Code

3 -

Alias Manager

78 -

Alteryx Copilot

25 -

Alteryx Designer

7 -

Alteryx Editions

94 -

Alteryx Practice

20 -

Amazon S3

149 -

AMP Engine

252 -

Announcement

1 -

API

1,208 -

App Builder

116 -

Apps

1,360 -

Assets | Wealth Management

1 -

Basic Creator

15 -

Batch Macro

1,558 -

Behavior Analysis

246 -

Best Practices

2,693 -

Bug

719 -

Bugs & Issues

1 -

Calgary

67 -

CASS

53 -

Chained App

268 -

Common Use Cases

3,823 -

Community

26 -

Computer Vision

85 -

Connectors

1,426 -

Conversation Starter

3 -

COVID-19

1 -

Custom Formula Function

1 -

Custom Tools

1,936 -

Data

1 -

Data Challenge

10 -

Data Investigation

3,486 -

Data Science

3 -

Database Connection

2,220 -

Datasets

5,221 -

Date Time

3,227 -

Demographic Analysis

186 -

Designer Cloud

740 -

Developer

4,369 -

Developer Tools

3,528 -

Documentation

526 -

Download

1,037 -

Dynamic Processing

2,937 -

Email

927 -

Engine

145 -

Enterprise (Edition)

1 -

Error Message

2,256 -

Events

198 -

Expression

1,868 -

Financial Services

1 -

Full Creator

2 -

Fun

2 -

Fuzzy Match

711 -

Gallery

666 -

GenAI Tools

3 -

General

2 -

Google Analytics

155 -

Help

4,706 -

In Database

966 -

Input

4,291 -

Installation

360 -

Interface Tools

1,900 -

Iterative Macro

1,094 -

Join

1,957 -

Licensing

252 -

Location Optimizer

60 -

Machine Learning

259 -

Macros

2,862 -

Marketo

12 -

Marketplace

23 -

MongoDB

82 -

Off-Topic

5 -

Optimization

750 -

Output

5,252 -

Parse

2,327 -

Power BI

228 -

Predictive Analysis

936 -

Preparation

5,167 -

Prescriptive Analytics

205 -

Professional (Edition)

4 -

Publish

257 -

Python

855 -

Qlik

39 -

Question

1 -

Questions

2 -

R Tool

476 -

Regex

2,339 -

Reporting

2,431 -

Resource

1 -

Run Command

575 -

Salesforce

277 -

Scheduler

411 -

Search Feedback

3 -

Server

629 -

Settings

933 -

Setup & Configuration

3 -

Sharepoint

626 -

Spatial Analysis

599 -

Starter (Edition)

1 -

Tableau

512 -

Tax & Audit

1 -

Text Mining

468 -

Thursday Thought

4 -

Time Series

431 -

Tips and Tricks

4,187 -

Topic of Interest

1,126 -

Transformation

3,726 -

Twitter

23 -

Udacity

84 -

Updates

1 -

Viewer

3 -

Workflow

9,976

- « Previous

- Next »