Alteryx Designer Desktop Discussions


Difference in R^2 between linear regression and decision tree model


I am trying to create a regression model to predict sales. When I run my data through the Linear Regression tool I get an R-squared value of 0.046. When I run it through the Decision Tree tool I get 0.71, which is much better.

I am using the same variables in both models and not changing any other configurations. Could anyone tell me why there is such a large discrepancy between R^2 values?


- Labels: Predictive Analysis, R Tool


Hi @chasejancek

There's a lot more to predictive modeling than getting a high R^2 value. Selecting the appropriate model type is an important first step. If you're building a model to predict sales, the continuous nature of that dependent variable should guide the types of models tested. A linear regression is the more appropriate choice for this scenario, whereas a Decision Tree model is more appropriate for classification scenarios.


Hi @chasejancek

It's difficult to say without actually seeing your data, but it probably has to do with its "shape". By "shape", I mean the type of relationship between the dependent and independent variables. If the data has a linear relationship built into it, then a linear regression will model it with a large R^2 value. For example, where I'm from, the amount of snow left on the ground in March has a strong linear correlation with the amount of snow that has fallen since the previous November. The relationship isn't perfectly linear because there is some temperature-induced variation. If the underlying relationship has a different shape, e.g. a bell curve like a normal distribution, linear regression will still produce a result, but the R^2 will be much smaller.
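To make the point about "shape" concrete, here is a minimal sketch in plain Python. It is not taken from the original workflow and is not how the Alteryx tools are implemented. The data is synthetic and purely an assumption for illustration: one series that is roughly linear with a small deterministic wiggle, and one bell-shaped series. A straight line is fitted to each by ordinary least squares and the R^2 is computed.

```python
import math

def r_squared(ys, preds):
    """R^2 = 1 - (residual sum of squares / total sum of squares)."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot

def fit_line(xs, ys):
    """Ordinary least squares for a single predictor: y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def line_r2(xs, ys):
    """Fit a straight line and report how much variance it explains."""
    slope, intercept = fit_line(xs, ys)
    return r_squared(ys, [slope * x + intercept for x in xs])

# Synthetic data (an assumption for illustration, not the poster's data).
xs = list(range(0, 101))
# Roughly linear: a straight line plus a small wiggle standing in for noise.
linear_ys = [2.0 * x + 5.0 * math.sin(x) for x in xs]
# Bell-shaped: peaks in the middle of the range and falls off on both sides.
bell_ys = [100.0 * math.exp(-((x - 50) ** 2) / (2 * 15.0 ** 2)) for x in xs]

r2_linear = line_r2(xs, linear_ys)
r2_bell = line_r2(xs, bell_ys)
print(f"R^2 of a line on roughly linear data: {r2_linear:.3f}")
print(f"R^2 of a line on bell-shaped data:    {r2_bell:.3f}")
```

The straight line explains almost all of the variance in the first series and essentially none in the second, because the bell curve rises and falls symmetrically and no single slope can follow it.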

The decision tree model is more flexible and is able to find subgroups within the data that share common characteristics. It's able to take a normally distributed shape and split it into groups that minimize the error within each group, which raises the R^2.

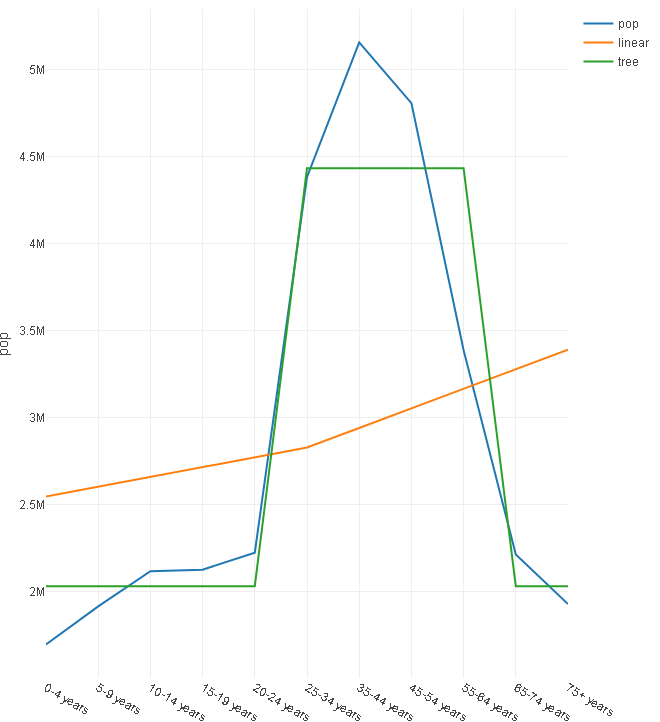

The chart above models a hypothetical population distribution based on age (blue line). The mustard-colored line is the output of the Linear Regression tool. The green one was created using the Decision Tree tool.

Because the underlying data is not linear, the decision tree was able to model it with a higher R^2 (0.8) than the linear regression (R^2 = 0.01).
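A rough way to reproduce this kind of comparison outside of Designer is sketched below in plain Python. Everything in it is an assumption for illustration: the bell-shaped "population by age" data is synthetic, and `build_tree` is a hypothetical, deliberately tiny regression tree (greedy splits on one variable, depth 3, at least 5 points per leaf), not the algorithm behind the Decision Tree tool. The exact R^2 values will differ from the ones in the chart.

```python
import math

def mean(values):
    return sum(values) / len(values)

def sse(values):
    """Sum of squared deviations from the mean."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)

def r_squared(ys, preds):
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
    return 1.0 - ss_res / sse(ys)

def fit_line(xs, ys):
    """Ordinary least squares for one predictor."""
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sse(xs)
    return slope, my - slope * mx

def build_tree(points, depth, min_leaf=5):
    """Greedy regression tree on (x, y) pairs sorted by x, with distinct x values.

    Each split is chosen to minimize the summed squared error of the two
    children; each leaf predicts the mean y of the points it contains.
    """
    ys = [y for _, y in points]
    if depth == 0 or len(points) < 2 * min_leaf:
        return {"value": mean(ys)}
    best_cost, best_i = None, None
    for i in range(min_leaf, len(points) - min_leaf + 1):
        cost = sse(ys[:i]) + sse(ys[i:])
        if best_cost is None or cost < best_cost:
            best_cost, best_i = cost, i
    # Place the threshold halfway between the two neighbouring x values.
    threshold = (points[best_i - 1][0] + points[best_i][0]) / 2
    return {
        "threshold": threshold,
        "left": build_tree(points[:best_i], depth - 1, min_leaf),
        "right": build_tree(points[best_i:], depth - 1, min_leaf),
    }

def predict(tree, x):
    """Walk down the tree until a leaf is reached."""
    while "value" not in tree:
        tree = tree["left"] if x <= tree["threshold"] else tree["right"]
    return tree["value"]

# Synthetic bell-shaped data (an assumption, not the data behind the chart).
ages = list(range(0, 101))
population = [100.0 * math.exp(-((a - 50) ** 2) / (2 * 15.0 ** 2)) for a in ages]

slope, intercept = fit_line(ages, population)
linear_r2 = r_squared(population, [slope * a + intercept for a in ages])

tree = build_tree(list(zip(ages, population)), depth=3)
tree_r2 = r_squared(population, [predict(tree, a) for a in ages])

print(f"linear regression R^2: {linear_r2:.3f}")
print(f"regression tree R^2:   {tree_r2:.3f}")
```

The tree approximates the curve with a staircase of group means, so it can follow the rise and fall that a single straight line cannot. Note that both R^2 values here are measured on the same data used to fit the models; a deeper tree will keep raising that number, so a holdout sample is a fairer basis for comparing the two.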

You need to have some understanding of your data in order to pick the correct model to apply.

This is part of what makes statistics so much fun!

Dan
