Alteryx Analytics Cloud Product Ideas

Share your Alteryx Analytics Cloud product ideas, including Designer Cloud, Intelligence Suite, and more. We're listening!


AAC (Cloud): Top Ideas

Replace, ReplaceFirst, ReplaceChar, and Regex_Replace in the cloud all have a limitation: their arguments must be constants, and any attempt to use a column returns an error. Removing this limitation would be a nice enhancement for parity with Desktop. The functions below currently error out in the cloud but work in Desktop.

Replace(Column1, Column2, Column3)

Regex_Replace(Column1, Column2, Column3)

Cloud doesn't accept Column2 and Column3 as references to fields. It only works if you hardcode a string such as "text". Desktop doesn't have this limitation.
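To make the requested parity concrete, here is a minimal Python sketch of the row-by-row behavior being asked for: for each row, the value in the target column is searched for that same row's Column2 value, which is replaced with that row's Column3 value. The list-of-dicts row representation and the helper names are hypothetical; this models only the semantics, not how either product implements the functions.

```python
import re

def replace_per_row(rows, target, find_col, repl_col):
    """Like Replace(target, find_col, repl_col), with both arguments read from each row."""
    out = []
    for row in rows:
        new_row = dict(row)  # leave the input rows untouched
        new_row[target] = row[target].replace(row[find_col], row[repl_col])
        out.append(new_row)
    return out

def regex_replace_per_row(rows, target, pattern_col, repl_col):
    """Like Regex_Replace, with the pattern and replacement taken from each row."""
    out = []
    for row in rows:
        new_row = dict(row)
        new_row[target] = re.sub(row[pattern_col], row[repl_col], row[target])
        out.append(new_row)
    return out

rows = [
    {"Column1": "red apple", "Column2": "red", "Column3": "green"},
    {"Column1": "big dog", "Column2": "dog", "Column3": "cat"},
]
print([r["Column1"] for r in replace_per_row(rows, "Column1", "Column2", "Column3")])
```

Each row supplies its own search and replacement values, which is exactly what a constant-only argument cannot express.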

I would like the ability to specify a billing project for BigQuery as part of run options. Currently, data queried from BigQuery is associated with the project from which a Dataprep flow is run, with no way to change it. Customers we work with in multi-project environments need the flexibility to align queries to specific projects for cost and usage attribution.

Additionally, for customers on flat-rate BigQuery pricing, a selectable billing project will allow users to move queries to projects under different reservations for workload balancing and/or performance tuning.

We can migrate flows from one environment to another using the Trifacta APIs:

- Export the flow from the source and import it into the target.
- Rename the flow.
- Share the flow with the appropriate users for the environment.
- Change the inputs and outputs of the flow.
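The four migration steps can be sketched as a small pipeline. This is a hypothetical, in-memory model: the `Flow` record, the JSON "package", and the helper names are assumptions for illustration and do not correspond to the actual Trifacta API endpoints or data model.

```python
import json
from dataclasses import asdict, dataclass, replace

@dataclass(frozen=True)
class Flow:
    """Hypothetical, simplified flow record (not the Trifacta data model)."""
    name: str
    owner: str
    shared_with: tuple = ()
    inputs: tuple = ()
    outputs: tuple = ()

def export_flow(flow: Flow) -> str:
    # Step 1a: serialize the flow into a portable package.
    return json.dumps(asdict(flow))

def import_flow(package: str) -> Flow:
    # Step 1b: rebuild the flow in the target; JSON arrays come back as lists.
    data = json.loads(package)
    return Flow(**{k: tuple(v) if isinstance(v, list) else v for k, v in data.items()})

def migrate(flow, new_name, users, input_map, output_map):
    target = import_flow(export_flow(flow))
    target = replace(target, name=new_name)             # Step 2: rename
    target = replace(target, shared_with=tuple(users))  # Step 3: share
    return replace(                                      # Step 4: remap inputs/outputs
        target,
        inputs=tuple(input_map.get(i, i) for i in target.inputs),
        outputs=tuple(output_map.get(o, o) for o in target.outputs),
    )

dev_flow = Flow("Sales prep", "dev_user", ("dev_team",),
                ("dev.sales.orders",), ("dev.sales.clean",))
prod_flow = migrate(dev_flow, "Sales prep (prod)", ["prod_team"],
                    {"dev.sales.orders": "prod.sales.orders"},
                    {"dev.sales.clean": "prod.sales.clean"})
print(prod_flow)
```

Keeping `Flow` immutable means the source flow is never modified by the migration, which mirrors the source environment staying intact.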

Create a connector to Mavenlink.

We at Grupo Boticário currently have 13k Dataprep licenses and are close to the official internal launch, and we have noticed a recurring request for a translation of the tool. Since a translation would enable more users to use the tool in their day-to-day work, I would like to formally reinforce our request for a Brazilian Portuguese translation and ask for an estimated timeline for this improvement.

Currently, if a use case requires data from tables in different databases on the same cluster, we have to create n connections for n databases.

Because the databases are on the same cluster, a single connection should be able to access all of them; otherwise, the connection list gets long and messy.

Custom SQL datasets in Dataprep currently support parameterized variables. However, this requires every table referenced through the parameter to have the same structure. This request is to allow the same functionality for tables with different structures.

Example:

Table A: dev.animals.dogs

name | height | weight

Table B: dev.animals.cats

name | isFriendly

We would like to have a single custom SQL dataset with the query:

SELECT * FROM dev.animals.[typeOfAnimal]

where typeOfAnimal is the parameterized variable, with a default value of dogs.
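A minimal Python sketch of the requested behavior, assuming the `[typeOfAnimal]` placeholder syntax from the example: the parameter is substituted at run time, and the output schema is resolved per rendered query rather than fixed when the dataset is created. The `SCHEMAS` lookup and the helper names are hypothetical stand-ins for whatever metadata lookup the product would perform.

```python
import re

# Only plain identifiers are accepted, so a parameter value cannot inject SQL.
IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")
PLACEHOLDER = re.compile(r"\[(\w+)\]")

# Hypothetical schemas for the two example tables.
SCHEMAS = {
    "dev.animals.dogs": ["name", "height", "weight"],
    "dev.animals.cats": ["name", "isFriendly"],
}

def render_query(template, params, defaults):
    """Replace each [name] placeholder with params[name], falling back to defaults."""
    values = {**defaults, **params}

    def substitute(match):
        name = match.group(1)
        value = values[name]
        if not IDENTIFIER.fullmatch(value):
            raise ValueError(f"invalid value for {name}: {value!r}")
        return value

    return PLACEHOLDER.sub(substitute, template)

def output_columns(query):
    """Resolve the schema per rendered query instead of fixing it at import time."""
    table = query.rsplit(" ", 1)[-1]
    return SCHEMAS[table]

template = "SELECT * FROM dev.animals.[typeOfAnimal]"
defaults = {"typeOfAnimal": "dogs"}

for params in ({}, {"typeOfAnimal": "cats"}):
    query = render_query(template, params, defaults)
    print(query, "->", output_columns(query))
```

The two runs return different column lists from the same dataset definition, which is the case the current same-structure requirement rules out.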

Allow users to create multiple connections to the data lake. Currently, a user needs to add a new data lake path and browse it in order to import data.

In our organization, we would like to enforce the data lake export path so that users cannot export to any location other than those approved by the application admins.
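One way such enforcement could work is an allowlist of admin-approved export roots, checked after path normalization. This Python sketch assumes POSIX-style data lake paths; the root paths and the function name are hypothetical.

```python
import posixpath

# Hypothetical admin-approved export roots.
ALLOWED_EXPORT_ROOTS = ("/datalake/exports/finance", "/datalake/exports/shared")

def is_allowed_export(path, allowed_roots=ALLOWED_EXPORT_ROOTS):
    """Return True only if path resolves inside one of the allowed roots."""
    if not path.startswith("/"):
        return False  # require absolute paths
    normalized = posixpath.normpath(path)  # collapses "." and ".." segments
    for root in allowed_roots:
        root = posixpath.normpath(root)
        # Compare on a segment boundary so ".../financeX" does not match ".../finance".
        if normalized == root or normalized.startswith(root + "/"):
            return True
    return False

print(is_allowed_export("/datalake/exports/finance/2024/q1.csv"))
print(is_allowed_export("/datalake/exports/shared/../../raw/q1.csv"))
```

Normalizing before comparing matters: a naive prefix check would accept the second path even though it resolves to `/datalake/raw/q1.csv`.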

Users onboarded to Trifacta cannot be deleted from the GUI, only through the API. In the GUI, users can only be disabled, but disabled users still count toward the licensed users. Please allow users to be deleted from the GUI.

If a flow is shared between multiple editors and someone makes changes to it, there should be a way to see all the changes made to that flow by different users. For example, a trigger could notify the users as soon as someone changes a recipe in the flow, or the flow and job information could be made available for extraction. I have attached a snippet of data that could be useful to us.

At the moment, the only edit history visible to Trifacta users is within each recipe. Some actions are performed at the flow level rather than in recipes, e.g. creating or deleting a recipe or creating a union. These actions are not covered by the edit history, but for compliance and troubleshooting it is important for users to know when they were taken and by whom. Would it be possible to add an edit history for flows as well as for recipes?
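The two requests above (a flow-level edit history and change notifications) can be sketched together as an append-only event log with per-flow subscribers. The class, the event fields, and the action names are hypothetical; a real implementation would persist events and send emails rather than call in-process callbacks.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class FlowEvent:
    flow_id: str
    user: str
    action: str          # e.g. "recipe_created", "recipe_deleted", "union_added"
    timestamp: datetime

class FlowAuditLog:
    """Hypothetical flow-level edit history with change notifications."""

    def __init__(self):
        self._events = []
        self._subscribers = {}  # flow_id -> list of callbacks

    def subscribe(self, flow_id, callback):
        # Register a callback (for example, one that sends an email) for a flow.
        self._subscribers.setdefault(flow_id, []).append(callback)

    def record(self, flow_id, user, action):
        # Append an event and notify every subscriber of that flow.
        event = FlowEvent(flow_id, user, action, datetime.now(timezone.utc))
        self._events.append(event)
        for callback in self._subscribers.get(flow_id, []):
            callback(event)
        return event

    def history(self, flow_id):
        # Events for one flow, oldest first.
        return [e for e in self._events if e.flow_id == flow_id]

log = FlowAuditLog()
notifications = []
log.subscribe("flow-1", notifications.append)
log.record("flow-1", "alice", "recipe_created")
log.record("flow-2", "bob", "recipe_deleted")
log.record("flow-1", "carol", "union_added")
for event in log.history("flow-1"):
    print(event.timestamp.isoformat(), event.user, event.action)
```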

As of now, once a user deletes a flow, it is no longer visible to anyone, even though it is only soft-deleted in the database. Could an option be added for admins to see all deleted flows and recover them if required? That way, if someone deletes a flow by mistake, an admin can restore it. The option could use checkboxes so that selected flows can be recovered all at once, and it could also support folder recovery, restoring all the flows in a folder.
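Because the flows are already soft-deleted, recovery amounts to clearing a deletion marker. This sketch uses an in-memory SQLite database; the `flows` table, the `deleted_at` column, and the helper names are hypothetical and do not reflect Trifacta's actual schema.

```python
import sqlite3

# Hypothetical schema: deleted_at is NULL for live flows and a timestamp once soft-deleted.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE flows (id INTEGER PRIMARY KEY, name TEXT, folder TEXT, deleted_at TEXT)"
)
conn.executemany(
    "INSERT INTO flows (name, folder) VALUES (?, ?)",
    [("Sales prep", "Finance"), ("Budget", "Finance"), ("HR report", "HR")],
)

def soft_delete(conn, flow_id):
    conn.execute("UPDATE flows SET deleted_at = datetime('now') WHERE id = ?", (flow_id,))

def visible_flows(conn):
    # What regular users see: only rows that have not been soft-deleted.
    return [r[0] for r in conn.execute(
        "SELECT name FROM flows WHERE deleted_at IS NULL ORDER BY id")]

def deleted_flows(conn):
    # Admin view of everything that is recoverable.
    return conn.execute(
        "SELECT id, name, folder FROM flows WHERE deleted_at IS NOT NULL ORDER BY id"
    ).fetchall()

def restore(conn, flow_ids):
    # Checkbox-style bulk recovery of the selected flows.
    conn.executemany("UPDATE flows SET deleted_at = NULL WHERE id = ?",
                     [(i,) for i in flow_ids])

def restore_folder(conn, folder):
    # Recover every soft-deleted flow in a folder at once.
    conn.execute("UPDATE flows SET deleted_at = NULL WHERE folder = ?", (folder,))

soft_delete(conn, 1)
soft_delete(conn, 2)
print(visible_flows(conn))
print(deleted_flows(conn))
restore_folder(conn, "Finance")
print(visible_flows(conn))
```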

When outputting a Trifacta-formatted date, such as a derived year (year(mydate)) or a date formatted as mmddyyyy, publishing it to a SQL table should preserve that format or transformation. Currently, a formatted or transformed date is written to the SQL table in the input format, and all transformation and formatting is lost.
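The request hinges on publishing the transformed representation rather than the underlying date value. This Python sketch shows the two example transformations; the column names and the suggested SQL types are hypothetical.

```python
from datetime import date

# Hypothetical target column types that keep the transformed representation.
COLUMN_TYPES = {"derived_year": "INT", "formatted_mmddyyyy": "CHAR(8)"}

def published_values(d: date) -> dict:
    """Values the request expects to land in the SQL table."""
    return {
        "derived_year": d.year,                      # the year(mydate) transformation
        "formatted_mmddyyyy": d.strftime("%m%d%Y"),  # the mmddyyyy format
    }

print(published_values(date(2023, 7, 4)))
```

The mmddyyyy value is kept as an 8-character string on purpose: stored as a number, a date such as 07042023 would lose its leading zero.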

When creating a destination to a SQL table on SQL Server, allow users to set the field length instead of using the Trifacta default output of varchar(1024). This would make the table easier to maintain.
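A sketch of what user-controlled field lengths could look like: each VARCHAR column is sized from the longest observed value unless the user supplies an explicit length. The helper name and sample data are hypothetical; the `VARCHAR(MAX)` fallback reflects SQL Server's limit of 8000 for `varchar(n)`, and character counts are assumed to equal byte counts (single-byte data).

```python
def varchar_ddl(table, rows, overrides=None):
    """Build a SQL Server CREATE TABLE statement with per-column VARCHAR lengths."""
    overrides = overrides or {}
    defs = []
    for col in rows[0]:
        if col in overrides:
            length = overrides[col]  # user-chosen field length
        else:
            length = max(1, max(len(str(row[col])) for row in rows))  # longest value
        size = "MAX" if length > 8000 else str(length)  # varchar(n) allows n up to 8000
        defs.append(f"[{col}] VARCHAR({size})")
    return f"CREATE TABLE [{table}] ({', '.join(defs)})"

rows = [{"code": "AB", "city": "Lisbon"}, {"code": "XYZ", "city": "Rio"}]
print(varchar_ddl("cities", rows))
print(varchar_ddl("cities", rows, overrides={"city": 50}))
```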

Hi team,

We need a page where users can manage all the email notifications they receive from their flows (both success and failure).

Thank you

Hello,

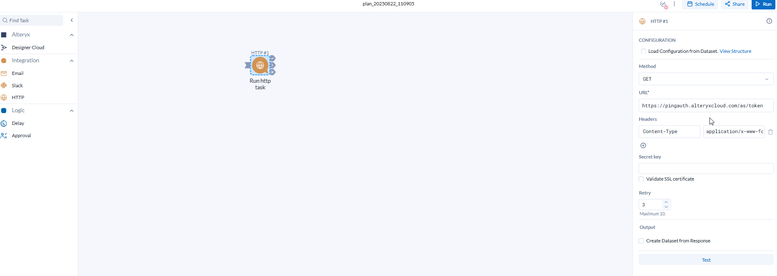

Here what I have when I want to do an http requests in Plan :

Where do I enter the payload or body (in my case, I need to specify my client_id)?

Edit: my mistake, it was a POST request. Here is the recommendation for GET:

https://www.rfc-editor.org/rfc/rfc2616#section-9.3
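The distinction behind the edit can be shown with Python's standard library: a GET request carries its parameters in the query string, while a POST request carries them in the body, which is where a field like client_id would go. The endpoint URL and the parameter values are hypothetical, and the requests are only constructed, never sent.

```python
from urllib.parse import urlencode
from urllib.request import Request

params = {"client_id": "my-client-id", "grant_type": "client_credentials"}

# GET: parameters travel in the query string; there is no request body.
get_req = Request("https://api.example.com/token?" + urlencode(params))

# POST: the same parameters travel in a form-encoded request body.
post_req = Request(
    "https://api.example.com/token",
    data=urlencode(params).encode("ascii"),
    headers={"Content-Type": "application/x-www-form-urlencoded"},
    method="POST",
)

print(get_req.get_method(), get_req.full_url)
print(post_req.get_method(), post_req.data)
```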

Hi Alteryx,

Most of our clients, particularly those from the government and financial sectors, have strict restrictions on cloud-based data storage. While they are open to using cloud products, they are concerned about the possibility of users uploading sensitive data to the cloud.

Given this, could you consider implementing a control that prevents data uploads to the cloud, while still allowing access to cloud-based features where necessary?

Being able to output data to Microsoft Dataverse tables from Alteryx Designer Cloud would be super helpful and a wonderful addition to this great tool. I need to be able to schedule a daily flow that outputs data to Dataverse tables. While my organization has Alteryx One, we have elected not to enable Cloud Execution for Desktop (for security or maintenance reasons), and we do not have Alteryx Server. So I need a solution that works from Alteryx Designer Cloud.

When I rename a field in Alteryx, it would be valuable for Alteryx to look at all tools downstream of the Select tool and say, "Hey, you are using that field. Do you want me to clean up the formulas, etc.?"

A field is not just letters in a formula; a field is a logical concept, an object. So it should be relatively easy to find every downstream dependency on this object and clean it up automatically.

This would get rid of all the painful toil of reconfiguring tools, changing formulae, changing Select tools, etc. That can all just go away in a puff of Aidan Magic.
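A minimal sketch of the idea, assuming the workflow is available as a graph of tools and that formulas reference fields as `[FieldName]`: walk everything downstream of the renaming tool and rewrite whole bracketed references only. The graph layout, tool names, and helper are hypothetical and not Alteryx's internal representation.

```python
import re
from collections import deque

def propagate_rename(graph, formulas, start, old, new):
    """Rewrite [old] field references to [new] in every tool downstream of start.

    graph: tool -> list of directly downstream tools
    formulas: tool -> expression string
    """
    # Match the whole bracketed reference so [SalesTax] is untouched when renaming [Sales].
    pattern = re.compile(r"\[" + re.escape(old) + r"\]")
    updated = dict(formulas)
    seen = {start}
    queue = deque(graph.get(start, []))
    while queue:  # breadth-first walk of the downstream tools
        tool = queue.popleft()
        if tool in seen:
            continue
        seen.add(tool)
        if tool in updated:
            # A function replacement avoids backslash escapes in the new name.
            updated[tool] = pattern.sub(lambda m: f"[{new}]", updated[tool])
        queue.extend(graph.get(tool, []))
    return updated

graph = {"Select": ["Formula1"], "Formula1": ["Formula2", "Filter"], "Upstream": ["Select"]}
formulas = {
    "Formula1": "[Sales] * 1.1 + [SalesTax]",
    "Formula2": "IF [Sales] > 100 THEN 'High' ELSE 'Low' ENDIF",
    "Filter": "[Region] = 'West'",
    "Upstream": "[Sales] + 1",
}
result = propagate_rename(graph, formulas, "Select", "Sales", "Revenue")
for tool, expr in result.items():
    print(f"{tool}: {expr}")
```

Treating the field as a bracketed token rather than a substring is what makes the rename safe: `[SalesTax]` and the upstream tool are left alone.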



