Weekly Challenges


Challenge #131: Think Like a CSE... The R Error Message: cannot allocate vector of size...


Sorry, I sent this by mistake; it was meant for someone else. Please accept my apology.


I tried to practice with your solution because I have the same problem, but related to linear regression. I noticed you used the Generate Rows tool. As I understand it, it creates a new field when the number of rows reaches 1,000,000, but what does this mean? What is it useful for? How does it solve the problem of memory limitation? Please explain more.

Note: After a while (around 7 minutes) of running your workflow, my device froze and stopped responding!
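To illustrate the chunking idea behind the question above: a batch field gives every record a group number, and a batch macro can then run the downstream tool once per group, so R only has to hold one group in memory at a time. Below is a minimal Python sketch of that numbering, assuming the batch field simply increments every 1,000,000 rows as described above; `batch_id` and `batches` are hypothetical helpers, not Alteryx functions.

```python
def batch_id(row_index, chunk_size=1_000_000):
    """1-based batch number for a 0-based row index.

    Rows 0..chunk_size-1 get batch 1, the next chunk_size rows get batch 2, etc.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return row_index // chunk_size + 1


def batches(rows, chunk_size=1_000_000):
    """Yield consecutive slices of at most chunk_size rows (one slice per batch)."""
    for start in range(0, len(rows), chunk_size):
        yield rows[start:start + chunk_size]


# Small demo with a tiny chunk size so the output is readable.
print([batch_id(i, chunk_size=3) for i in range(7)])               # [1, 1, 1, 2, 2, 2, 3]
print([len(b) for b in batches(list(range(7)), chunk_size=3)])     # [3, 3, 1]
```

Chunking only bounds how much is in memory at once; it does not reduce the total amount of work, so a run over millions of records can still take a long time.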


For the coworker's particular problem, the data seems to be too big to handle. Connecting from the Select tool to remove some fields may solve the problem.

For my R error, the problem was a field type mismatch. Connecting from the Select tool also solved the problem.
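Why removing fields can help: R stores numeric values as 8-byte doubles, so a dense numeric table of n rows and p fields needs roughly 8·n·p bytes, before any working copies R makes. A rough Python estimate, assuming every field is a numeric double and ignoring R's per-object overhead; the row and field counts are made-up examples.

```python
def dense_numeric_bytes(n_rows, n_fields, bytes_per_value=8):
    """Rough size of a dense numeric table: one 8-byte double per cell by default."""
    return n_rows * n_fields * bytes_per_value


GIB = 1024 ** 3

# Hypothetical example: 5 million rows, before and after dropping 30 of 40 fields.
before = dense_numeric_bytes(5_000_000, 40)
after = dense_numeric_bytes(5_000_000, 10)
print(f"{before / GIB:.2f} GiB -> {after / GIB:.2f} GiB")  # 1.49 GiB -> 0.37 GiB
```

The size scales linearly with the number of fields, so every field the Select tool drops before the R-based tool shrinks the allocation proportionally.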


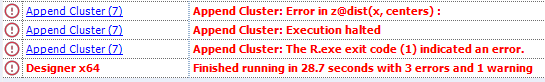

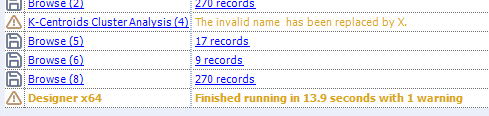

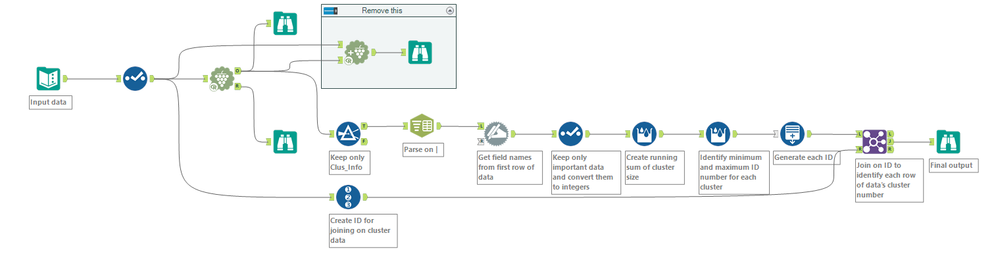

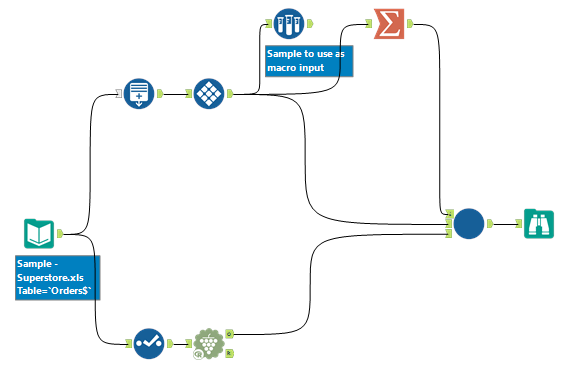

I chose to get the necessary data directly from the K-Centroids Cluster Analysis tool instead of using an Append Cluster tool. Assuming that the K-Centroids Cluster Analysis tool itself works, my solution should hopefully be a more optimised way of obtaining the data.


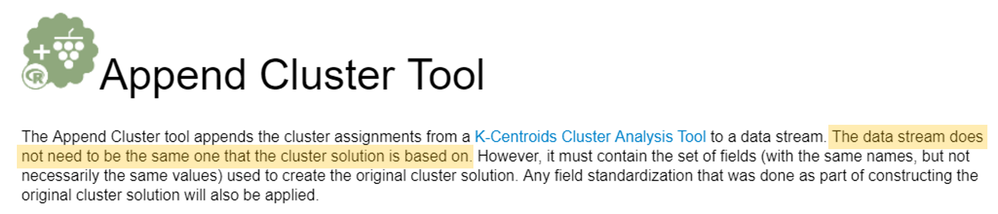

Big hint in the help page for this one!

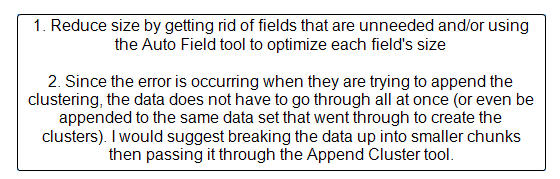

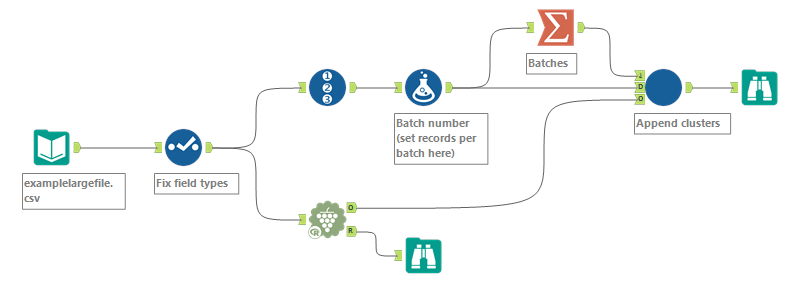

First, I read the help on the Append Cluster tool, and there was a big hint here!

Knowing that (1) the actual cluster analysis runs, and (2) I don't need to send the full original dataset into the Append Cluster tool at once, I figured it could be done in batches. I generated 5M fake records for testing.
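Why batching is safe here: once the cluster model is fit, assigning a record to a cluster depends only on that record and the fixed centroids, so splitting the records into batches should give the same labels as one big pass. A minimal Python sketch, assuming K-Means-style assignment to the nearest centroid by squared Euclidean distance; the centroids and points are made up, and this is an illustration of the idea, not Alteryx's internal code.

```python
def nearest_centroid(point, centroids):
    """Index of the centroid with the smallest squared Euclidean distance."""
    best_idx, best_dist = 0, float("inf")
    for idx, c in enumerate(centroids):
        dist = sum((p - q) ** 2 for p, q in zip(point, c))
        if dist < best_dist:
            best_idx, best_dist = idx, dist
    return best_idx


def assign_in_batches(points, centroids, batch_size):
    """Label points batch by batch. In a batch macro only one batch would be
    sent to R at a time; here the full list is in memory just for the demo."""
    labels = []
    for start in range(0, len(points), batch_size):
        batch = points[start:start + batch_size]
        labels.extend(nearest_centroid(p, centroids) for p in batch)
    return labels


centroids = [(0.0, 0.0), (10.0, 10.0)]
points = [(1.0, 2.0), (9.0, 8.5), (0.5, -1.0), (11.0, 12.0), (4.0, 4.0)]

all_at_once = [nearest_centroid(p, centroids) for p in points]
batched = assign_in_batches(points, centroids, batch_size=2)
print(all_at_once == batched)  # True
print(batched)                 # [0, 1, 0, 1, 0]
```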

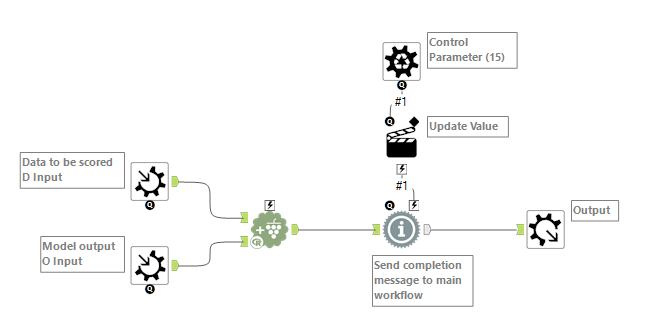

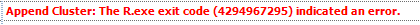

My solution is pretty similar to the given solution:

Batch macro:

Outer workflow:

There may also be an opportunity to use more efficient data types. That may be what the Select tool in the original workflow is doing, in which case we should connect the Select output, rather than the raw input, to the Append Cluster tool.
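To give a sense of how much field width matters: the Select tool lets you pick narrower types (for example Int16 or Float instead of Int64 or Double). Python's standard `array` module stores values in fixed-width C types, so it can show the size difference for one million values. This is only an analogy; the widths below are the usual ones on mainstream platforms, and how R converts each Alteryx field type once it reaches the R tool is a separate question.

```python
from array import array

N = 1_000_000
# Type codes: 'd' = C double, 'f' = C float,
# 'q' = signed long long (64-bit), 'h' = signed short (typically 16-bit).
for code, label in [("d", "double"), ("f", "float"), ("q", "int64"), ("h", "int16")]:
    values = array(code, [0]) * N  # N zero values stored as this C type
    size_mb = values.itemsize * len(values) / 1_000_000
    print(f"{label:>6}: {values.itemsize} bytes/value, {size_mb:.0f} MB")
```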


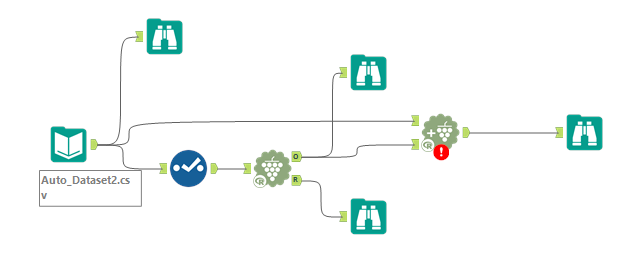

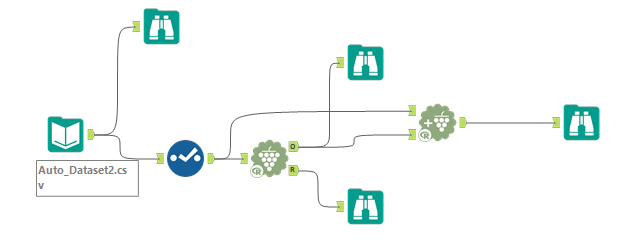

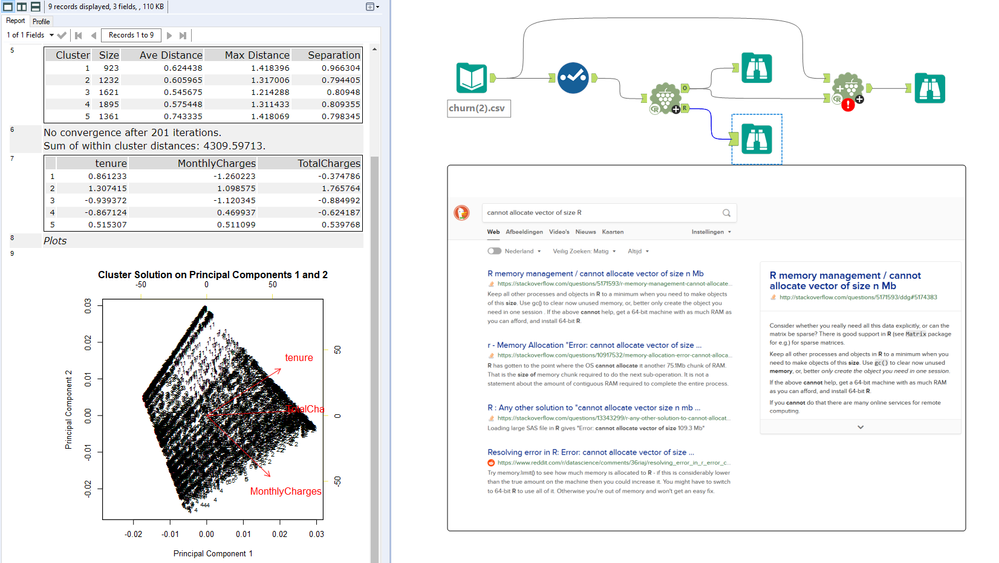

Unfortunately, I couldn't replicate the error; I kept getting this error instead, which, from googling, seems to be a false alarm, since all the data still flows through. I then looked at some spoilers and tried to copy their approach with a Generate Rows tool, but it still didn't give me the error. Finally, as others had suggested, I searched Google for solutions and found this awesome post:

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Cluster-Analysis-Customer-R-tool-Error...

This is what JohnJPS said:

"The "cannot allocate vector" error is what R gives when it runs out of memory; as you can see it's trying to allocate quite a large 5+ GB chunk of RAM. This is not unexpected for large datasets, as R can be somewhat memory inefficient. Potential solutions:

- Increase RAM on your workstation. (Not a convenient option, but not a joke: more RAM helps).

- Find a comfortable data size, and run through only that much, in chunks."
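To put the quoted "5+ GB" in perspective and to pick a "comfortable data size": R stores numeric values as 8-byte doubles, so a 5 GiB vector holds about 671 million of them. A minimal sketch of sizing chunks from a memory budget; `rows_per_chunk`, the budget, the field count and the `copies` allowance for R's intermediate copies are all hypothetical values to tune, not measured or documented constants.

```python
def rows_per_chunk(budget_bytes, n_fields, bytes_per_value=8, copies=3):
    """Largest row count whose dense numeric data, times `copies`, fits the budget.

    `copies` is a rough allowance for intermediate copies R may make; it is an
    assumption to tune, not a documented constant.
    """
    per_row = n_fields * bytes_per_value * copies
    return max(1, budget_bytes // per_row)


GIB = 1024 ** 3
print(5 * GIB // 8)                 # doubles in a 5 GiB vector: 671088640
print(rows_per_chunk(2 * GIB, 20))  # rows per chunk, 2 GiB budget, 20 fields: 4473924
```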


There are many issues to think about, but I could not replicate the same error message with any test dataset I had (probably because of their smaller size).

Anyway, here's my workflow and my thoughts:

Problem + solution combinations:

1) Out of memory: append the cluster groupings in chunks using a batch macro, set data types optimally to minimize size, increase the memory on the machine, or try the parallel or future packages in R to allow parallel processing.

2) Perhaps there is a sparse matrix, so try to see whether you still run out of memory when you impute the missing data first (mean imputation will suffice for testing purposes).

3) Make the workflow iterative: in each iteration, perform (stratified) sampling, find the clusters, and append them to the dataset. In a later step, you could analyse all the labels added to the data and find an average, mode, or best-fitting label for each item.

Anyway, just document your process properly and discuss with your peers whether this would be appropriate.
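The last step of option 3 (combining the labels from several sampled runs) could look like the majority vote below. A minimal Python sketch, assuming each run produces a mapping from a hypothetical record ID to a cluster label, and assuming the labels have already been aligned across runs: cluster numbers from independent runs are arbitrary, so "cluster 1" in one run need not match "cluster 1" in another until they are matched, for example by comparing centroids.

```python
from collections import Counter


def consensus_labels(label_runs):
    """Per-record most common label across runs (ties go to the label seen first).

    `label_runs` is a list of runs; each run maps record id -> cluster label.
    Records missing from a run (not sampled that time) are skipped for that run.
    Assumes labels are already aligned across runs.
    """
    votes = {}
    for run in label_runs:
        for record_id, label in run.items():
            votes.setdefault(record_id, Counter())[label] += 1
    return {rid: counts.most_common(1)[0][0] for rid, counts in votes.items()}


runs = [
    {"a": 1, "b": 2, "c": 1},
    {"a": 1, "c": 2},
    {"a": 2, "b": 2, "c": 2},
]
print(consensus_labels(runs))  # {'a': 1, 'b': 2, 'c': 2}
```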

