Challenge #131: Think Like a CSE... The R Error Message: cannot allocate vector of size...

The solution to last week's Challenge has been posted here!

We are thrilled to present another Challenge from our “Think like a CSE” series, brought to you by our fearless team of Customer Support Engineers. Each month, the Customer Support team will ask Community members to “think like a CSE” to try to resolve a case that was inspired by real-life issues encountered by Alteryx users like you! This month we present the case of the R Error Message: cannot allocate vector of size 7531.1 Gb.

Below, we’ve provided the information that was initially available to the Customer Support Engineer who resolved the case. It’s up to you to use this information to put a solution together for yourself.

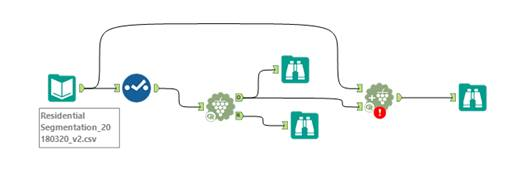

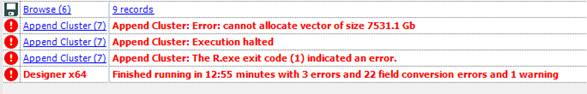

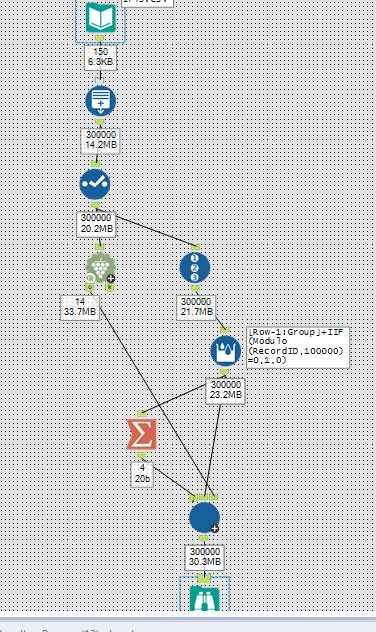

The Case: A co-worker is running into the R error “Append Cluster: Error: cannot allocate vector of size 7531.1 Gb” in a workflow that uses a couple of the Predictive Clustering Tools. This error is causing the workflow to stop running before completing. Screenshots of the workflow and the error(s) are provided.

Your Goal: Identify the root cause of the issue, and develop a solution or workaround to help your co-worker get past this error and finish running the workflow.

Asset Description: Your co-worker can’t share the file with you due to client privacy concerns, but it is about 7 GB in size (3 million records and 30 fields). Using only the provided screenshots, dummy data of your own design, and sheer willpower, can you develop a possible resolution?

The Solution:

Thank you for participating in this week’s “Think like a CSE” Challenge!

Like many of you, when taking on this case I first asked for the data set to attempt to reproduce the error on my own machine. This is often a first step, particularly for workflows that use predictive tools, as errors with the predictive tools are often caused by the data. However, as noted in the asset description, the user was unable to share the data with me due to privacy concerns. This is not uncommon in Support: users are often unable to share their data or workflows due to privacy or other concerns.

To work around this, we will either generate or find a dummy data set to attempt to reproduce the error and explore workarounds.

Many of you correctly identified that the core cause of this error is memory. This is something you can find with a quick internet search of the error message. You can read more about R memory limitations here.

The error is stating that the code is unable to allocate a vector of 7531 GB, which means it is trying to hold that much data in the machine’s memory at once. With this in mind, I did not feel a RAM upgrade would resolve this issue, as 7531 GB of RAM would be difficult to find, and prohibitively expensive.
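To put the size of that request in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes every field is held as an 8-byte double and that the "Gb" in R's message is 1024-based; both are assumptions for illustration, not details confirmed by the case.

```python
# Rough arithmetic only; the 1024-based reading of R's "Gb" is an assumption.
GIB = 1024 ** 3
BYTES_PER_DOUBLE = 8

records, fields = 3_000_000, 30   # figures from the asset description
requested_gib = 7531.1            # figure from the error message

# Size of the whole data set if every field were an 8-byte double.
data_bytes = records * fields * BYTES_PER_DOUBLE
data_gib = data_bytes / GIB

# Number of 8-byte elements in the single vector R tried to allocate.
requested_elements = requested_gib * GIB / BYTES_PER_DOUBLE

# How many times larger the request is than the number of cells in the data.
ratio = requested_elements / (records * fields)

print(f"data as doubles: {data_gib:.2f} GiB")
print(f"requested vector: {requested_elements:.3e} elements")
print(f"request / data cells: {ratio:,.0f}x")
```

Under those assumptions the raw numeric data is well under 1 GiB, while the single requested vector holds on the order of a trillion elements. So the request is not the data itself, but some much larger intermediate object built from it.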

What is interesting about the error in this workflow is that it is coming from the Append Cluster Tool, which is effectively a Score Tool for the K-Centroids Cluster Analysis Tool. This suggests that the clustering solution itself is being built successfully by the K-Centroids Cluster Analysis Tool, and the workflow is running into the error while trying to assign cluster labels to the original data based on the clustering solution. Because the Append Cluster Tool is essentially a Score Tool, there is no need to try to run all the data through it at once. This gives us an opportunity for a workaround.

I suggested the user try to divide up the data set and run it through the Append Cluster Tool in multiple batches after creating the clustering solution with the K-Centroids Cluster Analysis Tool. The most efficient way to do this is to build a batch macro with the Append Cluster Tool. There is a great Community Article on this approach called Splitting Records into Smaller Chunks to make a Workflow Process Quicker. The user confirmed that this method resolved the error on their machine. Case Closed!

It is important to remember that this strategy to work around the error is an option because the model itself is being built without issue, and the Append Cluster Tool’s results will not change if you run different groups of data through the tool at different times. Many of the predictive tools do require that the data all be provided at once to build a model, but the Score Tool and the Append Cluster Tool are simply applying a model to data to estimate the target values of the records.
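The reason batching is safe here can be sketched in a few lines of Python. This is not the Append Cluster Tool's actual implementation, only a minimal nearest-centroid assignment with hypothetical centroids and dummy records. It illustrates that each record's label depends only on that record and the finished clustering solution.

```python
import math

def nearest_centroid(record, centroids):
    """Return the index of the centroid closest to one record."""
    distances = [math.dist(record, c) for c in centroids]
    return distances.index(min(distances))

def assign_all(records, centroids):
    """Label every record in one pass."""
    return [nearest_centroid(r, centroids) for r in records]

def assign_in_batches(records, centroids, batch_size):
    """Label records one batch at a time, as a batch macro would."""
    labels = []
    for start in range(0, len(records), batch_size):
        batch = records[start:start + batch_size]
        labels.extend(assign_all(batch, centroids))
    return labels

# Hypothetical centroids, standing in for an already-built clustering solution.
centroids = [(0.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
# Hypothetical dummy records.
records = [(float(i % 11), float((i * 7) % 11)) for i in range(1000)]

full = assign_all(records, centroids)
batched = assign_in_batches(records, centroids, batch_size=128)
print(full == batched)  # True: batching does not change any label
```

Only one batch of records needs to be held in memory at a time, which is the property the batch macro workaround relies on.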

Thank you again for participating! I hope that this has been an informative challenge and that you had as much fun working through it as I did!

Labels: Difficult, Macros, Predictive, Preparation, Transform

Community Sources:

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Error-cannot-allocate-vector-of-size-2...

https://community.alteryx.com/t5/Alteryx-Designer-Discussions/Predictive-Logistic-Regression-Error/t...

Shout out to @MarshallG and @MarqueeCrew for these solutions.

General suggestions for memory allocation:

- Use the Auto Field tool to change the field types to the most efficient forms that the data allows. Unnecessary fields should be removed entirely.

- If the process allows, isolate the model estimation and assignment to different workflows. All records must be present to achieve the same model specification, but the Cluster Assignment can be batched so that only a subset of records are processed at a time.

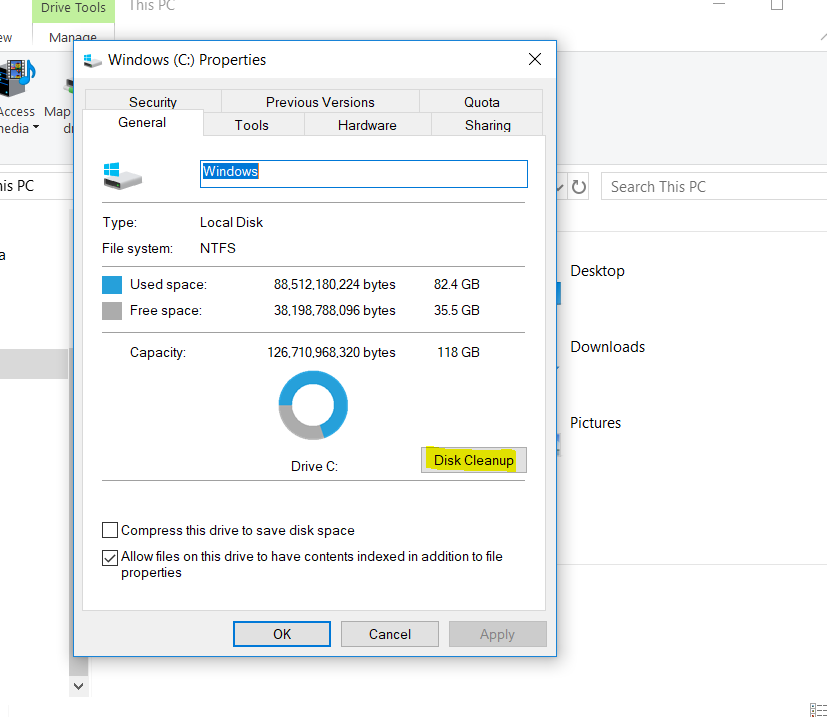

- The user/system settings in Alteryx generally set the initial allocation of memory. The Alteryx engine will use disk space as virtual memory as necessary. Make sure the Global Workspace path (Options>Advanced Options>System Settings) is on a disk with ample space available.
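On the first suggestion above (efficient field types), a rough Python sketch of how much the raw size of a single numeric column changes with its type for 3 million records. The type names and their mapping to byte widths are assumptions for illustration, and the figures ignore string fields and any per-record overhead.

```python
import struct

RECORDS = 3_000_000  # record count from the asset description

# Standard-size struct codes used as stand-ins for numeric field types.
# The mapping to Alteryx type names is an assumption for illustration.
FIELD_TYPES = {
    "Double": "<d",
    "Float": "<f",
    "Int32": "<i",
    "Int16": "<h",
    "Byte": "<B",
}

def column_megabytes(type_name, records=RECORDS):
    """Approximate raw size of one column, in MB (10**6 bytes)."""
    return struct.calcsize(FIELD_TYPES[type_name]) * records / 1e6

for name in FIELD_TYPES:
    print(f"{name:>6}: {column_megabytes(name):6.1f} MB per column")
```

Multiplied across 30 fields, the difference between the widest and narrowest types adds up quickly, which is why tightening field types is worth trying first.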

I was unable to replicate this error, so I'm not sure if these suggestions will help (or if this response will count as a completed challenge).

I've never used the cluster tools before, but....

I'm using the Iris dataset and just replicating the data to get a bigger dataset that I send through 100k records at a time.

Having trouble recreating a data set here (dummy data would always help), so just going for some suggestions instead of attaching a workflow.

Here is my attempt at a root cause and possible solutions...

Possible solutions (in order of recommendation):

1. Ensure that the fields are properly selected. CSV files default to all text fields, so ensure that they are all properly typed and that both the clustering algorithm and clustering append tool are running on the stream with properly typed data.

2. Reduce the use of the Browse tool at the various spots in the workflow. These tools use memory on the machine, which can contribute to the machine running out of memory.

3. Utilize a random sample to create the clustering algorithm, then append based on this random sample. Oversample if required. Make sure that the clustering algorithm is accurate before solving for the scale problem.

4. Increase memory (if running on a server or in a cloud environment)

5. Load and utilize libraries within R to move memory to disk and back (e.g. bigmemory)

Bonus: I have provided many possible solutions, but numbers 1 - 3 in combination should provide the best result. This will work because it will address the memory issue at hand, it will also run faster, and should create accurate results if the sample is representative and large enough.
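A minimal Python sketch of suggestion 3: build the clusters on a random sample, then append labels to every record. The k-means code is a plain stand-in for the clustering tool, and the two-blob dummy data, the 5% sample size, and the seed are all made up for illustration.

```python
import math
import random

def nearest(point, centroids):
    """Index of the centroid closest to a point."""
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))

def farthest_first(points, k):
    """Pick k starting centroids that are spread far apart."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        )
    return centroids

def fit_kmeans(points, k, iterations=20):
    """Plain Lloyd's iterations; a stand-in for the clustering tool."""
    centroids = farthest_first(points, k)
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for p in points:
            groups[nearest(p, centroids)].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [
            tuple(sum(axis) / len(g) for axis in zip(*g)) if g else c
            for g, c in zip(groups, centroids)
        ]
    return centroids

rng = random.Random(131)  # fixed seed so the sketch is repeatable

# Hypothetical dummy data: two well-separated blobs of 5,000 records each.
blob_a = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(5000)]
blob_b = [(rng.gauss(20, 1), rng.gauss(20, 1)) for _ in range(5000)]
records = blob_a + blob_b

# Build the clustering solution on a 5% random sample only.
sample = rng.sample(records, 500)
centroids = fit_kmeans(sample, k=2)

# Append cluster labels to every record, sampled or not.
labels = [nearest(p, centroids) for p in records]
print(len(set(labels[:5000])), len(set(labels[5000:])))
```

With clusters this cleanly separated, a small sample recovers the same grouping as the full data would. On real data, how representative the sample is matters a great deal, as the post notes.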

I would size the data types differently and see if that changes the size of the file.

Clear disk space that is not being used.

Potentially break up the data set if that is a viable option (not the best option).

This seems like a viable option due to how Alteryx processes data on the disk space and does not necessarily commit data to memory. It is most likely filling the disk space on each run and can no longer process further.

I am not very experienced with this tool, so I used an R forum to determine problems. Not 100% on my answer either, so I'm looking forward to the answer being posted!

I've used these tools before so I have a few ideas. It seems that the size of the data set with 3 million rows and 30 variables is the culprit!

1. Use principal components analysis to try to group the variables into "super-variables" reducing the number of columns in the data.

2. If there is still an issue, I would then use a random sample of the data to generate the clusters and then use the Append Cluster tool (2x) to add the clusters to score both the random sample and the unused sample.

For the bonus: reducing the size of the data set is key because I believe that the tool goes through a very cumbersome task of calculating the distance between each observation and potential seed points or k-centroids, and iterates to determine the optimal number of k-centroids as well.

-Use an iterative macro to pass through a sensible number of rows at a time.

-Make the data smaller. Can anything be cut out?

-Use a package like bigmemory.

-If not already, try running on a 64-bit computer with a huge amount of memory (although I don't think this would work well on a 7531 GB file).

I'm attacking this from a sys admin point of view, since most of the Alteryx/R related points having to do with data set size, process optimisation and memory management were covered previously.

-Check for other processes (e.g. backups, antivirus) running on the server that could be chewing up available RAM.

-If running on a VM, check to see if RAM is overcommitted, e.g. 2 VM servers allocated 16GB each on a chassis that only has 24GB of physical RAM. This is common practice for most VM environments, since most apps use RAM sporadically and then release it. Some, such as databases, will grab as much memory as allocated to keep data cached.

Dan
