Alteryx Designer Desktop Discussions

How can I make my duplicate values null?


I searched the community expecting this to have been asked already, and maybe it has and I just couldn't find it, but is there a way to make duplicate values show as null?

For example, let's say I have customer ID numbers as follows:

| Customer ID # |
| --- |
| 12345 |
| 45832 |
| 78043 |
| 92378 |
| 72213 |
| 12345 |

I would like to have one of the duplicated customer ID numbers switched to null. Not the whole row of data, just the one Customer ID # cell.

Thank you!


You could try a Multi-Row Formula tool. I've attached a workflow which should help.

If the same customer ID appears more than twice, you would need to amend the formula to:

if [Customer ID #] = [Row-1:Customer ID #] then null()

elseif isnull([Row-1:Customer ID #]) then null() else [Customer ID #] endif

EDIT - attached a second option using a unique tool. Too many choices!
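
For readers who think in code, the row-by-row comparison can be sketched in Python. This is only an illustration and not the attached workflow: the function name `null_repeats_sorted` is hypothetical, it assumes the IDs are sorted first so that duplicates sit on adjacent rows, and it compares each row against the previous row's original value rather than the already-nulled one.

```python
from typing import List, Optional


def null_repeats_sorted(ids: List[int]) -> List[Optional[int]]:
    """Sort the IDs, then null any value equal to the one on the previous row."""
    ordered = sorted(ids)  # assumption: duplicates must be adjacent
    result: List[Optional[int]] = []
    previous = None  # original (un-nulled) value of the previous row
    for value in ordered:
        result.append(None if value == previous else value)
        previous = value
    return result


# The IDs from the question, with one extra 12345 to cover more than one duplicate.
customer_ids = [12345, 45832, 78043, 92378, 72213, 12345, 12345]
print(null_repeats_sorted(customer_ids))
```

Note that the rows come back in sorted order, so the original row order is lost unless it is tracked separately.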


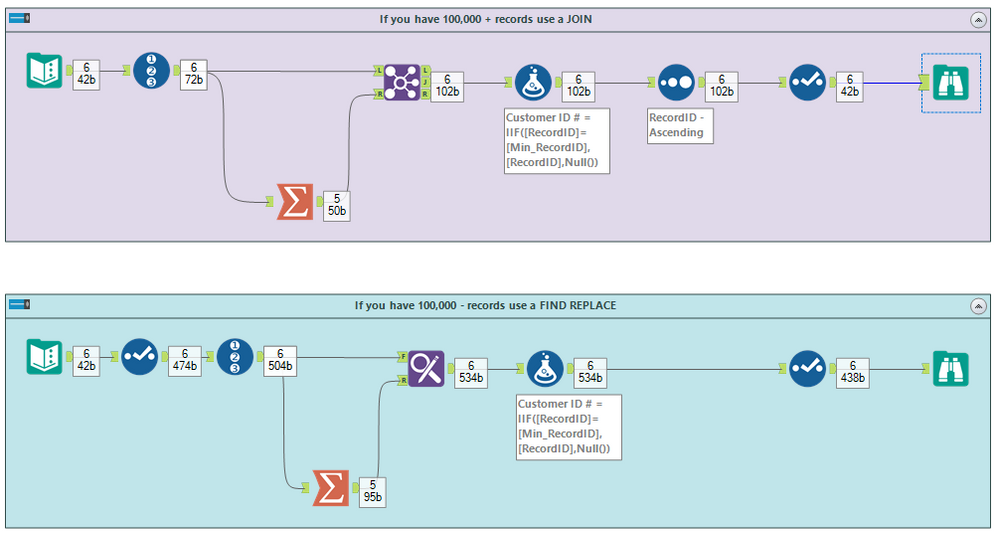

I'd approach this by summarizing. First, put a record ID on each record. Next, summarize by grouping on your customer ID and taking the first or minimum record ID. Then join this data back to your original data on customer ID.

A formula can now be built to null the duplicate IDs:

if [RecordID] = [Min_RecordID] then [Customer ID #]

else null()

endif
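
The same steps (record ID, summarize, join, formula) can be sketched in Python to show the logic outside of Designer. This is an illustrative sketch only: the function name `null_all_but_first` is hypothetical, and a dictionary stands in for the Summarize and Join tools.

```python
from typing import Dict, List, Optional


def null_all_but_first(ids: List[int]) -> List[Optional[int]]:
    """Keep the first occurrence of each ID and null every later occurrence."""
    # Step 1: attach a 1-based record ID to every row.
    records = list(enumerate(ids, start=1))

    # Step 2: "summarize" by customer ID, keeping the minimum record ID.
    min_record_id: Dict[int, int] = {}
    for record_id, cust_id in records:
        if cust_id not in min_record_id or record_id < min_record_id[cust_id]:
            min_record_id[cust_id] = record_id

    # Steps 3 and 4: "join" back on customer ID and apply the formula.
    return [
        cust_id if record_id == min_record_id[cust_id] else None
        for record_id, cust_id in records
    ]


print(null_all_but_first([12345, 45832, 78043, 92378, 72213, 12345]))
```

Because the record ID drives the comparison, no sort is needed and the original row order is preserved.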

cheers,

mark

Chaos reigns within. Repent, reflect and restart. Order shall return.

Please Subscribe to my youTube channel.


@MarqueeCrew,

Do you have a screenshot, or could you be a little more descriptive with this? I think this is the direction I need to head in. I tried the other solution, but I have multiple duplicates of the same record, and the first solution seems geared towards cases with only one duplicate.

Also, for what it is worth, I already have a rather lengthy workflow going and have already spent some time cleaning my Customer ID column, so a way to do this without starting from scratch would be ideal.

I am new to this so thank you for your help!


I'll build one now for you. Hold on.


I've performed this with both a FIND REPLACE and a JOIN. The FIND REPLACE doesn't require a SORT.

Thanks for the opportunity to solve another post.

Cheers,

Mark


What about filtering out the duplicates with the Unique tool and then either using a Formula tool to replace them with null or using Find Replace?

Nez

Alteryx ACE | Sydney Alteryx User Group Lead | Sydney SparkED Contributor


That is clever. I like that too. It is straightforward.

For Designer today, we should both be given accepted solutions. If, however, this should run on an e2 engine in the future, I'm not certain that the Unique tool would promise to return the first occurrence.

cheers,

mark


I used the unique tool to filter out the duplicates originally. I just couldn't figure out how to add them back into my data set as null once I identified the duplicates. Thank you all for your solutions, I will try them shortly and let you know how it goes.


Hi @KonaCantTalk,

I think the second workflow I attached yesterday might have gone unnoticed. It included a potential solution that uses a Unique tool to split the data, changes the duplicates to null, and then unions the dataset back together. I added an extra duplicate value to test against more than one duplicate. Hope this helps.
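
As a rough illustration of that split, null, and union pattern, here is a Python sketch. It is not the attached workflow: the helper names `split_unique` and `null_duplicates` and the `Order` column are hypothetical, and the sketch assumes the first occurrence of each ID goes to the unique stream.

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


def split_unique(rows: List[Row], key: str) -> Tuple[List[Row], List[Row]]:
    """Split rows into first occurrences and later duplicates of `key`."""
    seen = set()
    unique: List[Row] = []
    duplicates: List[Row] = []
    for row in rows:
        if row[key] in seen:
            duplicates.append(row)
        else:
            seen.add(row[key])
            unique.append(row)
    return unique, duplicates


def null_duplicates(rows: List[Row], key: str) -> List[Row]:
    """Null `key` on the duplicate stream, then union both streams."""
    unique, duplicates = split_unique(rows, key)
    nulled = [{**row, key: None} for row in duplicates]  # other columns kept
    return unique + nulled


rows = [
    {"Customer ID #": 12345, "Order": "A"},
    {"Customer ID #": 45832, "Order": "B"},
    {"Customer ID #": 12345, "Order": "C"},
    {"Customer ID #": 12345, "Order": "D"},
]
for row in null_duplicates(rows, "Customer ID #"):
    print(row)
```

The union appends the nulled duplicates after the unique rows, so the original row order changes unless a record ID is added beforehand and used to re-sort.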
