Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.


Execute Tools after Output succeeds


Hello everyone,

We're facing a situation at my company, and we're not sure whether what we're asking for is feasible.

CONTEXT

We are thinking about developing a custom execution log for a few workflows that we have scheduled in the Gallery. This would mean writing to a log table in our SQL Server database after the workflow's normal output has finished successfully.
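The ordering being asked for can be illustrated outside Alteryx. The sketch below is plain Python, not an Alteryx feature: it uses an in-memory `sqlite3` database as a stand-in for SQL Server, and the tables `sales_output` and `execution_log` and the function `write_output_then_log` are hypothetical. The log row is inserted only after the main write has committed; if the main write fails, it is rolled back and nothing is logged.

```python
import sqlite3
from datetime import datetime, timezone


def write_output_then_log(conn: sqlite3.Connection, workflow: str,
                          rows: list[tuple[str, float]]) -> bool:
    """Write the main output, then log the run only if that write committed.

    Hypothetical tables: sales_output(item, amount) and
    execution_log(workflow, rows_written, logged_at).
    """
    try:
        # The connection context manager commits on success and
        # rolls back the whole batch if any insert raises.
        with conn:
            conn.executemany(
                "INSERT INTO sales_output (item, amount) VALUES (?, ?)", rows)
    except sqlite3.Error:
        return False  # main output failed: no log entry is written

    # Reached only after the main output has been committed.
    with conn:
        conn.execute(
            "INSERT INTO execution_log (workflow, rows_written, logged_at) "
            "VALUES (?, ?, ?)",
            (workflow, len(rows), datetime.now(timezone.utc).isoformat()),
        )
    return True
```

For example, if `item` is declared `NOT NULL`, a batch containing a `None` item is rolled back and produces no log row, while a clean batch produces exactly one.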

PROBLEM

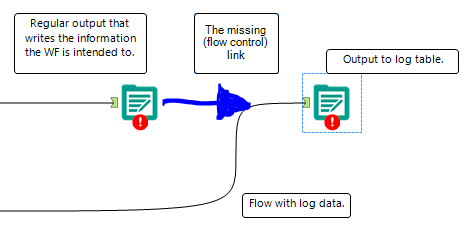

We are unaware of how to make a flow continue after an output. I think the following picture presents the problem in a quite obvious way.

Could someone give us a hand and show us a way to achieve this kind of flow control?

Best regards,

- Labels: Chained App, Output, Tips and Tricks, Workflow


Hey guys, was this resolved? I'm trying to implement something similar:

Once data is loaded into my table, I want to run a stored procedure to populate other tables.

I'm using Snowflake as the database.


Hi -

This solution came to my attention recently:

- Turn each workflow into an analytic app, then follow the directions for chaining analytic apps in this interactive lesson: https://community.alteryx.com/t5/Interactive-Lessons/Chaining-Analytic-Apps/ta-p/243120

- To turn a workflow into an app, open the Workflow tab of the Configuration window, select the Analytic App radio button, and then save the workflow.

Perhaps that would work for you.

Mark


Thanks, Mark. What do you think of the CReW macros?

Could they be used for this?

Regards


Hi -

Unfortunately, I haven't seen the CReW macros in action yet, so I'm not really in a position to comment. The solution I linked to above is a simple way of chaining workflows, but it may not be powerful enough for your needs.

Mark


I had a similar problem, because the database generated an auto-incrementing ID that I needed for subsequent steps. Here is my solution, which does not use any Chaos Reigns macros:

The incoming stream has an "insert_timestamp" field. The Summary tool blocks downstream tools just like the Block Until Done tool does, and it gets the earliest timestamp so that the Dynamic Input tool can modify its query to fetch only the records that the preceding Output tool appended to the table.
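A minimal Python sketch of the same idea, assuming an in-memory `sqlite3` table named `customers` with an auto-incrementing `id` and an `insert_timestamp` column (the table, columns, and function name are hypothetical; the post itself works in Snowflake). Taking the minimum `insert_timestamp` plays the role of the Summary tool, and the parameterized `WHERE` clause plays the role of the query that the Dynamic Input tool modifies.

```python
import sqlite3


def fetch_appended_rows(conn: sqlite3.Connection,
                        batch: list[dict]) -> list[tuple]:
    """Return the rows just appended, including their database-assigned ids.

    `batch` is the stream that was written; each dict carries an
    'insert_timestamp' stored as a sortable ISO-8601 string.
    """
    # Summary-tool step: earliest timestamp in the incoming stream.
    earliest = min(row["insert_timestamp"] for row in batch)
    # Dynamic-Input step: fetch only rows stamped at or after it.
    return conn.execute(
        "SELECT id, name, insert_timestamp FROM customers "
        "WHERE insert_timestamp >= ? ORDER BY id",
        (earliest,),
    ).fetchall()
```

One caveat of this design: if another process appends rows with later timestamps in the same window, those rows are picked up too.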

It is also worth noting that the Block Until Done tool, like the Summary tool, does not wait for other branches upstream of it to complete. It only waits until its own incoming stream is complete. So if the Output tool is still writing to the table, which can happen when a large number of records is being written to a cloud database, the Dynamic Input tool won't see the new data yet. To allow for that, I added a delay in the Pre SQL Statement of the Dynamic Input tool:

(This command is specific to Snowflake. Other databases will differ.)
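The Snowflake delay command from this post isn't reproduced here. As a hedged alternative to a fixed delay, the sketch below swaps in polling: it re-runs a query until the expected number of rows is visible or a timeout expires. The function name, the `fetch` callable, and the idea of knowing the expected row count in advance (for example from a record count taken upstream) are all assumptions, not part of the original solution.

```python
import time
from collections.abc import Callable


def wait_for_rows(fetch: Callable[[], list], expected: int,
                  timeout_s: float = 30.0, interval_s: float = 1.0) -> list:
    """Poll `fetch` until it returns at least `expected` rows.

    Raises TimeoutError if the rows are still not all visible
    once `timeout_s` seconds have elapsed.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        rows = fetch()
        if len(rows) >= expected:
            return rows
        if time.monotonic() >= deadline:
            raise TimeoutError(
                f"only {len(rows)} of {expected} rows visible after {timeout_s}s")
        time.sleep(interval_s)  # give the writer more time before re-querying
```

Compared with a fixed delay, polling returns as soon as the data is visible and fails loudly instead of silently reading a partial table.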

In the OP's use case, since they don't want any of the fields from the previous stream and just want to delay the secondary stream, you can use the Append Fields tool to append the output of the Summary tool, and then deselect the appended field.
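A rough Python model of why this works, under the assumption that Append Fields behaves as a Cartesian join (every source record appended to every target record): when the source is a single summary row, the record count is unchanged, and dropping the appended field returns the original secondary stream. The only effect is the dependency on the summary. The function names and the `src_` prefix used to avoid field-name collisions are hypothetical.

```python
def append_fields(targets: list[dict], source: list[dict]) -> list[dict]:
    """Cartesian product: each target row combined with each source row."""
    return [{**t, **{f"src_{k}": v for k, v in s.items()}}
            for t in targets for s in source]


def gate_on(targets: list[dict], summary: list[dict]) -> list[dict]:
    """Append a one-row summary, then deselect the appended fields."""
    if len(summary) != 1:
        raise ValueError("expected exactly one summary row")
    appended = {f"src_{k}" for k in summary[0]}
    combined = append_fields(targets, summary)
    # Deselect the appended fields, leaving the original records intact.
    return [{k: v for k, v in row.items() if k not in appended}
            for row in combined]
```

With more than one source row the record count multiplies, which is why the gate relies on the Summary tool emitting a single row.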

I hope that helps others that may have this question.
