Advent of Code 2022 Day 15 (BaseA Style)


This felt much easier than the last few days. Both parts can add an optimization challenge depending on your approach.

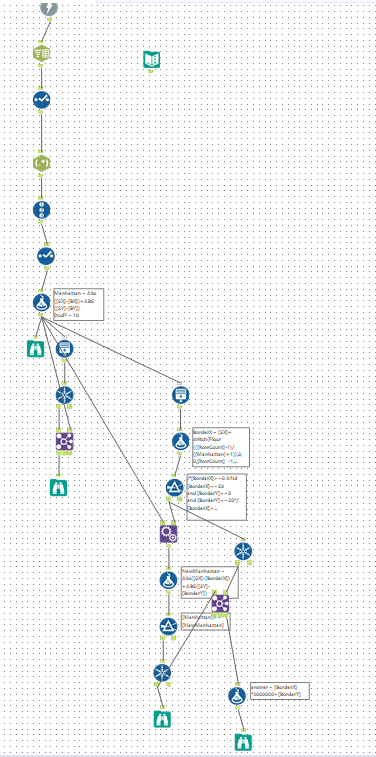

Over the last few days I didn't have time to do the challenges because of work and a year-end party, but today I did it! Today's challenge is certainly easier than those of the last few days.

The easiest approach, as expected, exploded the number of records, so I had to do it a different way.

Even so, Part 2 took about 4 minutes.

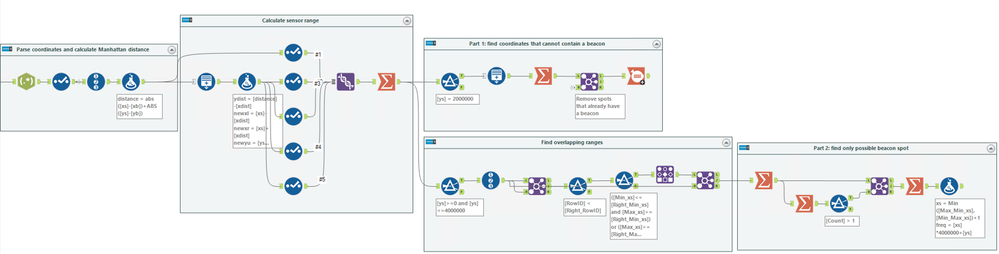

P1 was straightforward. P2 required some ugly brute force.

P2: Generate the min/max for each path by row. Then use a calculation to flag whether y_min <= [Row-1:y_max] (THEN 1) for the 14 possible rows. Filter where the flag = 0, plug the magic row back into the original logic to determine the x,y coordinate, then apply the final logic.
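One way to read the row-by-row min/max idea, as a rough Python sketch rather than the poster's actual Alteryx workflow: for each row, build the covered min/max span per sensor, sort the spans, and look for the first x that the running maximum does not reach. The function name `find_gap`, the `pairs` layout, and the tiny input are all hypothetical.

```python
def find_gap(pairs, limit):
    """Scan rows 0..limit and return the first (x, y) no sensor covers, else None."""
    # Precompute each sensor's Manhattan radius (distance to its closest beacon).
    sensors = [(sx, sy, abs(sx - bx) + abs(sy - by)) for (sx, sy), (bx, by) in pairs]
    for row in range(limit + 1):
        # Min/max x covered on this row by every sensor that reaches it.
        spans = sorted(
            (sx - (r - abs(sy - row)), sx + (r - abs(sy - row)))
            for sx, sy, r in sensors
            if r >= abs(sy - row)
        )
        reach = -1  # rightmost x known to be covered so far
        for lo, hi in spans:
            if lo > reach + 1:
                break  # the span starts past the covered area: gap at reach + 1
            reach = max(reach, hi)
        if reach < limit:
            return reach + 1, row
    return None


# Hypothetical input: one sensor at (0, 0) whose closest beacon is at (0, 3).
print(find_gap([((0, 0), (0, 3))], 2))
```

This still visits every row, so on the full 0..4000000 range it is a brute-force scan, just one that avoids generating individual positions.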

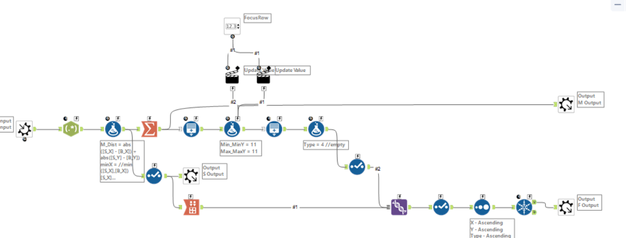

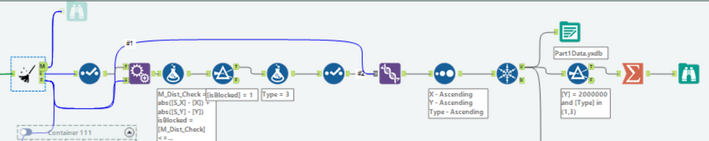

Managed to catch up on Day 15! Part 2 was really fun to figure out and optimise after realising I couldn't scaffold a 4M x 4M grid to check against. After following a couple of ideas that still led to huge blowups, and cancelling a couple of workflows after 15-20 minutes, I decided to try spatial for this, which actually ended up running in 0.2 seconds! The entire flow for P1+P2 takes ~17s.

It was difficult to resolve the performance issue for Part 2.

The process took 12 minutes for Part 2, and most of that time went to the Multi-Row Formula processing 31M records.

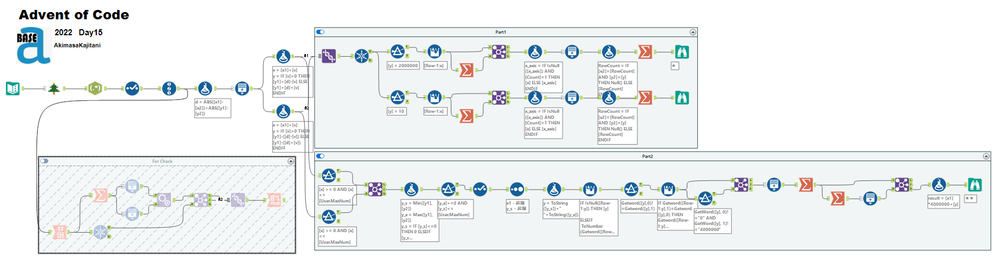

So - took a completely different approach to part 1 vs. part 2.

Very quickly realised (i.e. after running for an hour) that solving part 2 by using a brute-force array would not work.

For Part 1 I took a very brute-force approach.

- For each sensor - find the Manhattan distance to its closest beacon

- Then create a diamond shape of blocked points based on that Manhattan distance

- Then combine all these

... and filter for the focus row.
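The steps above can be sketched in Python roughly as follows. This is an illustration of the brute-force idea, not the poster's Alteryx workflow; the function names and the single sensor/beacon pair are hypothetical, and excluding known beacon positions is an assumption taken from the puzzle statement.

```python
def manhattan(a, b):
    """Manhattan distance between two (x, y) points."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def diamond(sensor, radius):
    """Every point within `radius` (Manhattan) of the sensor."""
    sx, sy = sensor
    points = set()
    for dy in range(-radius, radius + 1):
        half_width = radius - abs(dy)  # the diamond narrows away from the centre row
        for dx in range(-half_width, half_width + 1):
            points.add((sx + dx, sy + dy))
    return points


def blocked_on_row(pairs, focus_row):
    """Combine all diamonds, drop known beacons, keep only the focus row."""
    covered, beacons = set(), set()
    for sensor, beacon in pairs:
        covered |= diamond(sensor, manhattan(sensor, beacon))
        beacons.add(beacon)
    return {p for p in covered - beacons if p[1] == focus_row}


# Hypothetical input: sensor at (8, 7), closest beacon at (2, 10).
print(len(blocked_on_row([((8, 7), (2, 10))], 10)))
```

The number of points grows with the square of the distance, which is why this only stays practical for small distances.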

This is almost all done in the input parser:

And then the outer call looks like this.

Part 2:

I originally did part 2 in the same way - it worked for the example test but didn't scale to the full data.

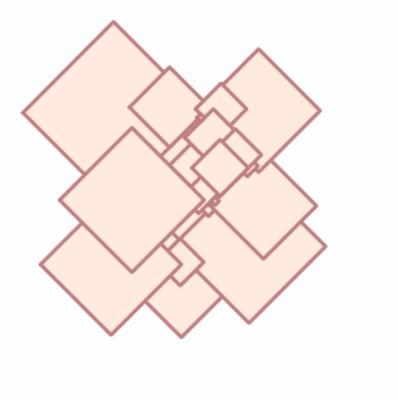

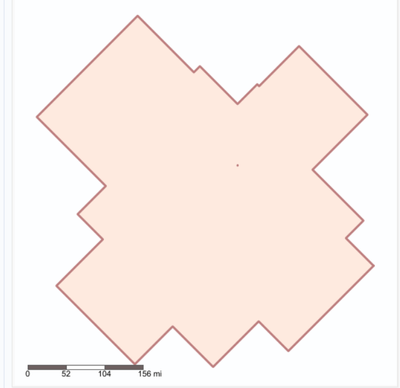

To make this scale - switched to Spatial since this is really an intersection of squares exercise.

To explain this.

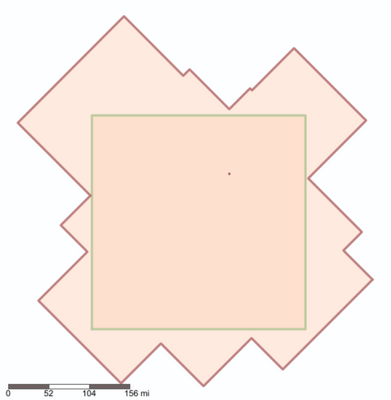

The top piece creates a combined polygon of the diamonds for the coverage for every sensor.

The bottom piece creates the bounding box.

Then you subtract the top from the bottom and that tells you what pieces remain.

Split them into polygons

- The example test had thin sliver-type polygons - so I did a bit more work to see if I could fit in a 1x1 beacon - but this wasn't needed on the final data.

Things to watch out for:

- Use X & Y as floating-point long & lat

- Scale these values up / down to fit within the range of -2;2 (this allows you to use the Alteryx spatial tools, which only allow Earth coords; staying within the range of -2;2 reduces curvature issues, since the spatial tools work in geography (spherical Earth) rather than flat-plane geometry).
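As a small illustration of the scaling note, here is one possible mapping in Python. The exact mapping is an assumption: it sends the 0..4000000 search box linearly onto -2..2, and the names `SCALE`, `OFFSET`, `to_lon_lat` and `from_lon_lat` are hypothetical. Sensor diamonds that extend past the search box will land slightly outside -2..2.

```python
SCALE = 1_000_000  # assumed: the search box spans 0..4,000,000 on each axis
OFFSET = 2.0       # shifts the scaled 0..4 range to -2..2


def to_lon_lat(x, y):
    """Map puzzle coordinates to small pseudo longitude/latitude values."""
    return x / SCALE - OFFSET, y / SCALE - OFFSET


def from_lon_lat(lon, lat):
    """Map a pseudo longitude/latitude back to integer puzzle coordinates."""
    return round((lon + OFFSET) * SCALE), round((lat + OFFSET) * SCALE)


print(to_lon_lat(0, 4_000_000))
print(from_lon_lat(*to_lon_lat(14, 3_999_989)))
```

Rounding on the way back absorbs the small floating-point error introduced by the division.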

Step 1: Overlapping sensor areas:

Step 2: Merge into one poly:

Subtract from Bounding Box:

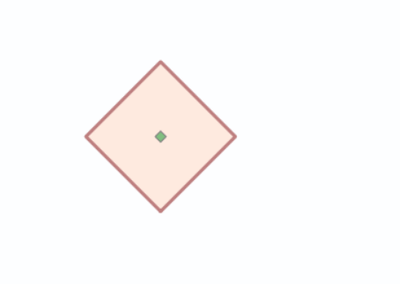

Final Result (tiny box with the centroid in the middle)

My workflow is slow and had 2 billion+ records, but it worked well enough.

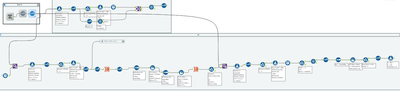

Although I got the answer via spatial, I still wanted to find another method. This one takes less than 10 seconds in total.

Remember to handle negatives... I was stuck for hours because of them.

Part 1:

Identify whether each sensor's square touches y = 2000000,

then merge the ranges instead of generating every position,

and deduct the beacons.
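A minimal Python sketch of this range-merging idea, assuming sensor/beacon pairs are already parsed into tuples. The function names and the two-sensor input are hypothetical, and this is not the poster's workflow. Using `abs()` throughout keeps negative coordinates from causing trouble.

```python
def row_intervals(pairs, row):
    """Covered (min_x, max_x) on `row` for each sensor whose diamond touches it."""
    intervals = []
    for (sx, sy), (bx, by) in pairs:
        radius = abs(sx - bx) + abs(sy - by)
        half_width = radius - abs(sy - row)
        if half_width >= 0:  # the diamond reaches this row
            intervals.append((sx - half_width, sx + half_width))
    return intervals


def merge(intervals):
    """Merge overlapping or adjacent integer intervals."""
    merged = []
    for lo, hi in sorted(intervals):
        if merged and lo <= merged[-1][1] + 1:
            merged[-1] = (merged[-1][0], max(merged[-1][1], hi))
        else:
            merged.append((lo, hi))
    return merged


def count_blocked(pairs, row):
    """Covered cells on `row`, minus the distinct beacons sitting on that row."""
    merged = merge(row_intervals(pairs, row))
    covered = sum(hi - lo + 1 for lo, hi in merged)
    beacons = {bx for _, (bx, by) in pairs
               if by == row and any(lo <= bx <= hi for lo, hi in merged)}
    return covered - len(beacons)


# Hypothetical input: two sensors sharing the beacon at (2, 10).
pairs = [((8, 7), (2, 10)), ((0, 11), (2, 10))]
print(merge(row_intervals(pairs, 10)), count_blocked(pairs, 10))
```

The work here scales with the number of sensors rather than with the width of the row.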

Part 2:

I used the equation method.

Treat the four sides of each sensor's diamond as four line equations:

upper_upward: y = x + c + distance

lower_upward: y = x + c - distance

upper_downward: y = -x + c + distance

lower_downward: y = -x + c - distance

Because there is only one possible position, the point must be attached to four of the lines above (two upward-sloping and two downward-sloping).

Hence each of those pairs must have intercepts that differ by 2.

Take the average of the two intercepts in each pair (that is, just -1 or +1 from either line, as they are one position above or below the point); that gives the upward and downward equations through the point.

Intersecting those two equations gives the point's x and y.

Then multiply [x] by the required 4000000 and add [y].
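Here is one interpretation of the equation method as a Python sketch; it is not the poster's workflow and all names and the four-sensor input are hypothetical. It assumes that for a sensor at (sx, sy) the constant c is sy - sx for the upward lines and sy + sx for the downward lines, and it double-checks each candidate against every sensor.

```python
from itertools import product


def gap_intercepts(lowers, uppers):
    """Intercepts lying exactly between an upper edge and a lower edge 2 apart."""
    return {u + 1 for u in uppers if u + 2 in lowers}


def find_point(pairs, limit):
    """Return the uncovered (x, y) in 0..limit found via edge-line intersections."""
    sensors = [(sx, sy, abs(sx - bx) + abs(sy - by)) for (sx, sy), (bx, by) in pairs]
    # Intercepts of the four edge lines of every diamond.
    up_upper = {sy - sx + d for sx, sy, d in sensors}    # y =  x + c + distance
    up_lower = {sy - sx - d for sx, sy, d in sensors}    # y =  x + c - distance
    down_upper = {sy + sx + d for sx, sy, d in sensors}  # y = -x + c + distance
    down_lower = {sy + sx - d for sx, sy, d in sensors}  # y = -x + c - distance
    ups = gap_intercepts(up_lower, up_upper)
    downs = gap_intercepts(down_lower, down_upper)
    for a, b in product(ups, downs):
        if (b - a) % 2:
            continue  # the lines cross between grid points
        # Intersect y = x + a with y = -x + b.
        x, y = (b - a) // 2, (a + b) // 2
        in_box = 0 <= x <= limit and 0 <= y <= limit
        if in_box and all(abs(sx - x) + abs(sy - y) > d for sx, sy, d in sensors):
            return x, y
    return None


# Hypothetical input: four radius-1 sensors around the point (5, 5).
pairs = [((5, 3), (4, 3)), ((5, 7), (6, 7)), ((3, 5), (3, 4)), ((7, 5), (7, 6))]
point = find_point(pairs, 10)
print(point, point[0] * 4_000_000 + point[1])
```

Only a handful of intercept pairs are examined, so the cost depends on the number of sensors and not on the size of the search box.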
