Alteryx Designer Desktop Discussions

Using Error Message tool to block downstream processing in a macro

I have a macro that, when used, takes user input via Interface tools and makes API calls. I've set this up as a batch macro so the user can provide multiple rows of parameters that each correspond to a single API call, getting the results all "unioned" together back at the end. However, I want to prevent poorly formed parameters from even turning into an API request to avoid spending quota.

The Error Message tool seems designed to help with this, but only in an analytic app. There, when the error triggers, it looks like the internal app workflow doesn't even run. However, if I use the Error Message tool in a batch macro inside a normal workflow, the Error Message shows up as an error in the results log, but the macro's contents still run (and generate an API request, which immediately errors out).

Is there a way to have the workflow in the macro stop execution on error? The macro itself doesn't have a Runtime "Cancel running workflow on error" option.

The only (inelegant) solution I can think of is to embed another batch macro in the first batch macro. The outer macro accepts the user input parameters, forms them into a single record, QAs the parameters, and, if there is an error, doesn't pass anything to the inner batch macro. Otherwise, the record containing the parameters passes through to the inner macro, which executes the actual API call. Any alternatives?

- Labels: API, Batch Macro, Error Message

Hi @Scott_Snowman,

I've never faced this problem, but one idea would be to use a Filter tool to run the test: if the test fails, no data is output. Another solution may be to use the CReW macro pack and its "blocking test macro". A last option would be to use the Message tool with the message type "Error - And Stop Passing Records Through This Tool".

Hope it helped!

Try this technique

Put your validation logic on output 1 of a Block Until Done tool and the rest of your workflow on output 2. With the Message tools set to "Error - And Stop Passing Records Through This Tool", the macro halts if one of your Message tools fails, and leg 2 is never executed. The calling workflow may continue if you don't have it set to cancel running on error, but the rest of the logic in the macro shouldn't execute.

Dan

@danilang and @Ladarthure, thanks so much for the feedback!

I'm sorry for not being more explicit; that's what I get for writing a post right before heading to bed.

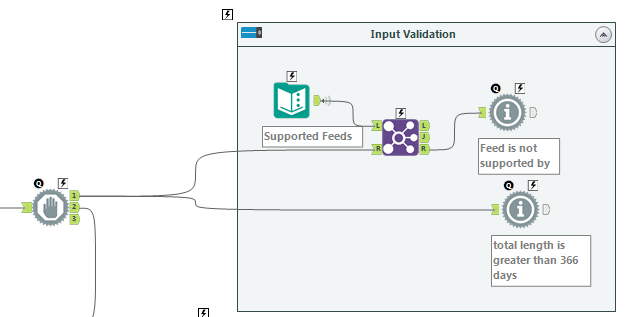

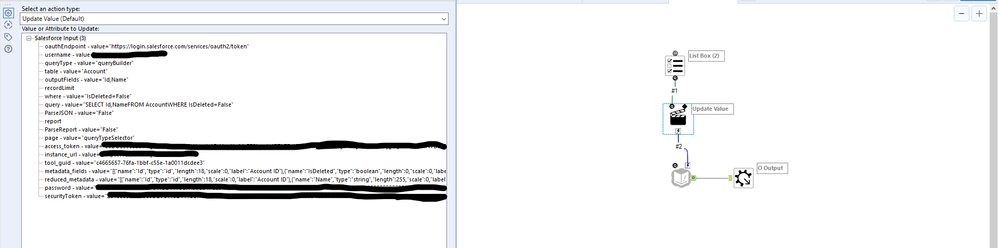

Both of your approaches would normally work, but the scenario I'm working with is similar to the one in the screenshot. There are multiple Interface tools connecting directly to an existing connector that I don't want to reverse-engineer. (I'm using the Salesforce connector in this example as a mock-up, but it's not the Salesforce connector in the actual workflow.) So there is no workflow "before" the API request to check errors on. (That being said, the Block Until Done technique you both suggested is a really good one for some other use cases I have!)

Is there any way to prevent the underlying connector from firing if the values in the Interface tools are malformed? If need be, I can pass the results of the Interface tools to update a dummy record, QA it as you've both described, and then feed that updated record into a macro that both updates and runs the connector, but I'd rather not go "macro within macro" if I can avoid it.

Thanks!

Hi,

To my mind, you are on the right track with a macro within a macro, since it gives you more control over the queries you want to pass through. I don't see any other solution, apart from reverse-engineering the tool, which could be more complicated than a macro in a macro.
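
For readers who think better in code than in canvas tools, here is a rough Python sketch of the "outer validates, inner calls" idea discussed in this thread. It is not Alteryx code and not how the connector works internally; `validate`, `run_batch`, the `object_name` and `limit` fields, and the `call_api` callback are all hypothetical names made up for illustration. The point is only that a parameter row reaches the API call if and only if it passes QA, so a malformed row never spends quota.

```python
from typing import Callable

Params = dict[str, str]


def validate(params: Params) -> list[str]:
    """Return a list of problems; an empty list means the row is OK.

    The rules below are hypothetical stand-ins for whatever QA the
    outer macro would perform on the Interface tool values.
    """
    problems = []
    if not params.get("object_name", "").strip():
        problems.append("object_name is empty")
    limit = params.get("limit", "")
    if not limit.isdecimal() or int(limit) == 0:
        problems.append(f"limit must be a positive integer, got {limit!r}")
    return problems


def run_batch(
    rows: list[Params],
    call_api: Callable[[Params], list[dict]],
) -> tuple[list[dict], list[str]]:
    """Outer loop: QA each row, and only hand valid rows to call_api.

    call_api plays the role of the inner macro wrapping the connector.
    Results from all valid rows are unioned; invalid rows are reported
    as error messages and never reach call_api.
    """
    results: list[dict] = []
    errors: list[str] = []
    for index, params in enumerate(rows):
        problems = validate(params)
        if problems:
            errors.append(f"row {index}: " + "; ".join(problems))
            continue  # skip the API call entirely for this row
        results.extend(call_api(params))
    return results, errors
```

In Alteryx terms, `validate` corresponds to the QA step in the outer batch macro and `call_api` to the inner macro that updates and runs the connector; the `continue` is the "pass nothing to the inner macro" branch.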
