Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.- Community

- :

- Community

- :

- Participate

- :

- Discussions

- :

- Designer Desktop

- :

- Re: SharePoint Input Tool - SharePoint Online

SharePoint Input Tool - SharePoint Online

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Hi,

We have had workflows with connections to a SharePoint List running for year's on our Prod Server because we have used SharePoint 2010 and we can utilize our Batch ID and password to gain access to the list.

We are moving to SharePoint Online and the SharePoint Input Tool's configuration asks for an email address... My BatchID doesnt have an email address, so I am at a loss for what to do.

Anyone know how to gain access to a list like this with a BatchID in use?

Thanks for any assistance that can be provided.

Dan

Solved! Go to Solution.

- Labels:

-

Input

-

Sharepoint

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

@was Do you have an "All Items" view on each of the created Lists? That is one deficiency I see in the currently built SP connector, is that you can only populate that default view. If you want to populate differing views, you can go down the road of calling PS cmdlets, but I've normally just opted for making sure I've got a default view in place.

If you confirm they are both writing to that named view, then you've got another issue at hand.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

@salbol1

Yes each has "All Items" view and both are default views.

Do you think I am having a different issue? 😣

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

You aren't doing something like aliasing the system ID to serve as material number ID, right?

Also, is there anything in terms of using List A in a Flow/Power Apps to populate your List B?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

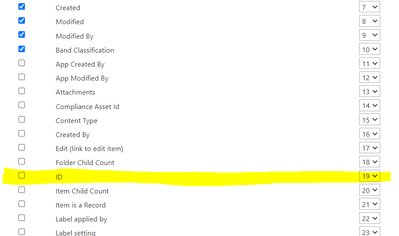

When creating a new list on SP, the first column is "Title". I did rename Title column to "MATL_NUM_D" in both SP sites.

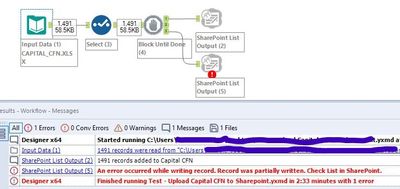

List A (Output 2) and List B (Output 5) are populated from the same data source (excel) and the same Alteryx workflow.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

very useful . thanks a bunch

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Any suggestion on what must be done annually when it expires . To re do this step is not a big deal ...but changing all workflows is not easy . Of course the SSO route I have to make changes once in 6 months . here it seems once in a year

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

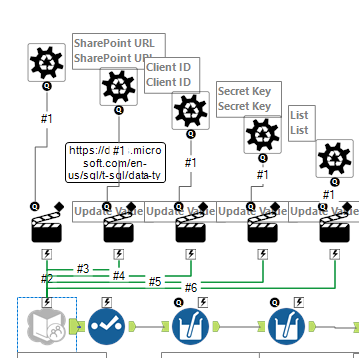

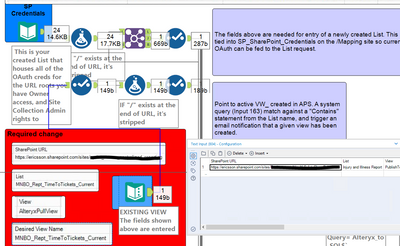

Create a List that tracks your differing URL OAuth creds, and then have a macro that has the 5 input actions against the SharePoint connector as the basis for which data to pull back data on your targeted List...

By setting up a template with a text input, you then just have to include the 5 elements you're seeking to be able to pull a current list of all your active SP creds, the output of which out of the Join is your feed into the macro you've created - and will return the dynamic OAuth creds info for your target --

These inputs feed the 5 inputs (URL, Client ID, Secret Key, List, View) you created with the macro to serve back the data from the List you are targeting. The Data Input with "SP Credentials" is a warehoused table in one of our datalakes we use as the reference to all of these URL's, so we don't have any expiration based tools in the workflow. Then you can setup notifications against your List indicating that you have expiring creds in X weeks, and of course if you really wanted to get ambitious you could script the renewal of your creds and run that as an event against a expiration date you'd house in the List you've created for your tracking. Then everything would be completely hands-off, and your module will live in perpetuity!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

This works perfectly for Lists - Thank you

What about for Files? Ie Excel files that are stored on Sharepoint that I need to pull in. I Tried Sharepoint Input - Files - Provide Client ID and Secrete and Tenant ID - so the piece that I'm missing is tenant ID.

Any help appreciated

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

@smccaghren -- That is provided internally by your IT/SharePoint admins. (not 100% sure which). It is not something the community will be able to provide to you.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

This connector is designed for Lists, but you can always utilize python for accessing the O365 libraries:

Package.installPackages(['Office365-REST-Python-Client','office365'])

This snippet below pulls in a URL and a set of Document Loads to a SP site that contain .zip files -

This is the python code for accessing the SP site documents in the identified sub folder (shown as 1 down in the bottom snippet)

from ayx import Alteryx

import numpy as np

import pandas as pd

from office365.sharepoint.client_context import ClientContext

from office365.runtime.auth.client_credential import ClientCredential

from office365.sharepoint.files.file import File

InputDf = Alteryx.read("#1")

SharePointURL = np.array2string(InputDf['SharePointURL'].values[:1])

SharePointURL = SharePointURL.replace("\\\\","\\").replace("'","").replace("[","").replace("]","")

SPFolder = np.array2string(InputDf['SPFolder'].values[:1])

SPFolder = SPFolder.replace("\\\\","\\").replace("'","").replace("[","").replace("]","")

#SharePointURL = "https://YOUR_COMPANY.sharepoint.com/sites/Some .zip Reports/"

site_url = "https://YOUR_COMPANY.sharepoint.com/sites/A COMPANY SUBTENANT SITE/A SUB SCHEMA OF THE TENANT"

sp_list = "SP_SharePoint_Credentials" ##(This is a SharePoint list where we house URL's, Client ID's and Secret Keys)

ClientId = "xxxxxxxxxxxxxxxxxxxxxxxxx" ##(Use your real ones)

ClientSecret = "yyyyyyyyyyyyyyyyyyyyyyyyyyy" ##(Use your real ones)

credentials = ClientCredential(ClientId, ClientSecret)

SPClientID = ""

SPSecKey = ""

###Connecting to Sharepoint Mapping list

ctx = ClientContext(site_url).with_credentials(credentials)

sp_lists = ctx.web.lists

s_list = sp_lists.get_by_title(sp_list)

l_items = s_list.get_items()

ctx.load(l_items)

ctx.execute_query()

###Looking for credentials

for item in l_items:

if item.properties['SharePointURL'] == SharePointURL:

SPClientID = item.properties['Client_ID']

SPSecKey = item.properties['SecretKey']

print("Credentials Found\n\n")

del ctx

data = {'SharePointURL':[SharePointURL],

'SPFolder':[SPFolder],

'Client_ID':[SPClientID],

'SecretKey':[SPSecKey]}

OutDf = pd.DataFrame(data)

#Writing Output

Alteryx.write(OutDf,1)

We then append to this output DF, the name of file we are looking for in the documents folder. The variable is 'FileName' and indicates we are looking for files with say, PM_Reporting in it's name (shown as 2 in the snippet at the bottom):

from ayx import Alteryx

import numpy as np

import pandas as pd

import datetime, os

from datetime import date, datetime

from office365.sharepoint.client_context import ClientContext

from office365.runtime.auth.client_credential import ClientCredential

from office365.sharepoint.files.file import File

InputDf = Alteryx.read("#1")

FilesPath = np.array2string(InputDf['LocalFolder'].values[:1])

FilesPath = FilesPath.replace("\\\\","\\").replace("'","").replace("[","").replace("]","")

SharePointURL = np.array2string(InputDf['SharePointURL'].values[:1])

SharePointURL = SharePointURL.replace("\\\\","\\").replace("'","").replace("[","").replace("]","")

SPFolder = np.array2string(InputDf['SPFolder'].values[:1])

SPFolder = SPFolder.replace("\\\\","\\").replace("'","").replace("[","").replace("]","")

FileName = np.array2string(InputDf['FileName'].values[:1])

FileName = FileName.replace("\\\\","\\").replace("'","").replace("[","").replace("]","")

ClientId = np.array2string(InputDf['Client_ID'].values[:1])

ClientId = ClientId.replace("\\\\","\\").replace("'","").replace("[","").replace("]","")

ClientSecret = np.array2string(InputDf['SecretKey'].values[:1])

ClientSecret = ClientSecret.replace("\\\\","\\").replace("'","").replace("[","").replace("]","")

credentials = ClientCredential(ClientId, ClientSecret)

TodayD = date.today().strftime("%Y-%m-%d")

print(SharePointURL)

print(SPFolder)

print(SharePointURL.replace("https://YOUR_COMPANY.sharepoint.com","") + SPFolder + "/" )

################Connecting to SP

ctx = ClientContext(SharePointURL[:-1]).with_credentials(credentials)

WebElem = dir(ctx.web)

SpWeb = ctx.web

files = ctx.web.get_folder_by_server_relative_url(SharePointURL.replace("https://YOUR_COMPANY.sharepoint.com","") + SPFolder + "/" ).files

ctx.load(files).execute_query()

file_list = []

for file in files:

if file.name.endswith(".zip") and file.time_last_modified[:10] == TodayD and FileName.lower() in file.name.lower():

file_list.append(file.name)

################Downloading Files

for i in file_list:

if i.endswith(".zip"):

print("Downloading " + i + "\n\n")

if os.path.exists(os.path.join(FilesPath, i)):

os.remove(os.path.join(FilesPath, i))

response = File.open_binary(ctx, SharePointURL.replace("https://YOUR_COMPANY.sharepoint.com","") + SPFolder + "/" + i)

with open(os.path.join(FilesPath, i), "wb") as local_file:

local_file.write(response.content)

del ctx

#Writing Output

Alteryx.write(InputDf,1)

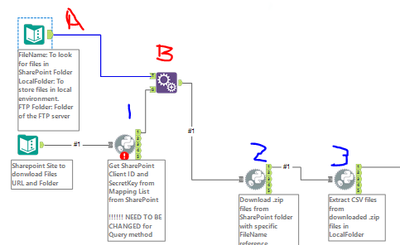

This portion then takes the csv files that are contained within the zip files, and loads them to a local directory path, also identified in the Text input tool shown here (A), and appended with the first output dataframe (B):

(from the python tool 3)

from ayx import Alteryx

import os, datetime

import pandas as pd

from zipfile import ZipFile

from datetime import datetime, date

import numpy as np

InputDf = Alteryx.read("#1")

FilesPath = np.array2string(InputDf['LocalFolder'].values[:1])

FilesPath = FilesPath.replace("\\\\","\\").replace("'","").replace("[","").replace("]","")

# Validate file date function

def ModifiedToday(FileName):

lastmodified = datetime.fromtimestamp(os.stat(FileName).st_mtime).strftime("%m-%d-%y")

TodayD = date.today().strftime("%m-%d-%y")

if lastmodified == TodayD:

return True

else:

return False

##############################Script Start

#list files in folder:

print(FilesPath)

DirFiles = os.listdir(FilesPath)

#Looping through dir validating files and retrieving grid files to write grid load sql

print("searching updated Zips and unzipping\n\n")

for i in DirFiles:

if ModifiedToday(os.path.join(FilesPath,i)) and i.endswith(".zip"):

with ZipFile(os.path.join(FilesPath,i), 'r') as zipObj:

for info in zipObj.infolist():

if info.filename.endswith(".csv"):

zipObj.extract(info.filename, FilesPath)

if os.path.exists(os.path.join(FilesPath,i.replace(".zip", ".csv"))):

os.remove(os.path.join(FilesPath,i.replace(".zip", ".csv")))

os.rename(os.path.join(FilesPath,info.filename),os.path.join(FilesPath,i.replace(".zip", ".csv")))

#Writing Output

Alteryx.write(InputDf,1)

print("All CSV Files in Path have been unzipped\n\n")

We have a team member that has been dubbed the 'king of SP python" that allows us to make great use of the REST and O365 client libraries.

-

Academy

6 -

ADAPT

2 -

Adobe

204 -

Advent of Code

3 -

Alias Manager

78 -

Alteryx Copilot

25 -

Alteryx Designer

7 -

Alteryx Editions

94 -

Alteryx Practice

20 -

Amazon S3

149 -

AMP Engine

252 -

Announcement

1 -

API

1,208 -

App Builder

116 -

Apps

1,360 -

Assets | Wealth Management

1 -

Basic Creator

14 -

Batch Macro

1,558 -

Behavior Analysis

246 -

Best Practices

2,693 -

Bug

719 -

Bugs & Issues

1 -

Calgary

67 -

CASS

53 -

Chained App

268 -

Common Use Cases

3,823 -

Community

26 -

Computer Vision

85 -

Connectors

1,426 -

Conversation Starter

3 -

COVID-19

1 -

Custom Formula Function

1 -

Custom Tools

1,936 -

Data

1 -

Data Challenge

10 -

Data Investigation

3,486 -

Data Science

3 -

Database Connection

2,220 -

Datasets

5,221 -

Date Time

3,227 -

Demographic Analysis

186 -

Designer Cloud

740 -

Developer

4,368 -

Developer Tools

3,528 -

Documentation

526 -

Download

1,037 -

Dynamic Processing

2,937 -

Email

927 -

Engine

145 -

Enterprise (Edition)

1 -

Error Message

2,256 -

Events

198 -

Expression

1,868 -

Financial Services

1 -

Full Creator

2 -

Fun

2 -

Fuzzy Match

711 -

Gallery

666 -

GenAI Tools

3 -

General

2 -

Google Analytics

155 -

Help

4,705 -

In Database

966 -

Input

4,291 -

Installation

360 -

Interface Tools

1,900 -

Iterative Macro

1,094 -

Join

1,957 -

Licensing

252 -

Location Optimizer

60 -

Machine Learning

259 -

Macros

2,862 -

Marketo

12 -

Marketplace

23 -

MongoDB

82 -

Off-Topic

5 -

Optimization

750 -

Output

5,252 -

Parse

2,327 -

Power BI

228 -

Predictive Analysis

936 -

Preparation

5,167 -

Prescriptive Analytics

205 -

Professional (Edition)

4 -

Publish

257 -

Python

855 -

Qlik

39 -

Question

1 -

Questions

2 -

R Tool

476 -

Regex

2,339 -

Reporting

2,431 -

Resource

1 -

Run Command

575 -

Salesforce

277 -

Scheduler

411 -

Search Feedback

3 -

Server

629 -

Settings

933 -

Setup & Configuration

3 -

Sharepoint

626 -

Spatial Analysis

599 -

Starter (Edition)

1 -

Tableau

512 -

Tax & Audit

1 -

Text Mining

468 -

Thursday Thought

4 -

Time Series

431 -

Tips and Tricks

4,187 -

Topic of Interest

1,126 -

Transformation

3,726 -

Twitter

23 -

Udacity

84 -

Updates

1 -

Viewer

3 -

Workflow

9,974

- « Previous

- Next »