Alteryx Designer Desktop Discussions

Need Help to Calculate Operational % over time

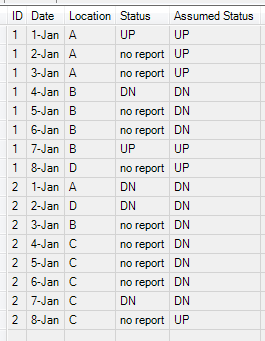

I'm looking to calculate the Operational % of a thing.

This thing travels to different locations and has many states (statuses) it can be in.

Information comes in sporadically, so we want to assume the last reported status holds until the next report, calculate the time between the report dates as shown below, and then calculate the % of time spent in each state (% operational being specifically the time it has been UP and used).

We also want to be able to see whether an item spends more time DOWN at a specific location, to look for trends by location.

I can calculate this in Tableau, but it is too taxing for the viz given there are 2 million lines and counting (an increase of 60k lines a week).

It is important that we see the part's lineage (location) and operational time, but I'm semi-new to Alteryx and don't know which route to take.

| Date | 1-Jan | 2-Jan | 3-Jan | 4-Jan | 5-Jan | 6-Jan | 7-Jan | 8-Jan | %Operational |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| ID 1 | UP | no report | no report | DN | no report | no report | UP | no report | |
| Assumed info | UP | UP | UP | DN | DN | DN | UP | UP | 66% ish (don't judge my eyeball math) |
| ID 2 | DN | DN | no report | no report | no report | no report | DN | no report | |
| A | A | A | B | B | B | B | D | | |
| Assumed info | DN | DN | DN | DN | DN | DN | DN | UP | 3% ish |
| A | D | B | C | C | C | C | C | | |

| ID 1 health by location (example) | |
| --- | --- |
| A | 70% |
| B | 75% |
| D | 100% |

- Labels: Date Time, Time Series

Hi @pitmansm

I feel like the structure of the example data has hindered responses from the Community. Let's see if I understand this correctly:

- The "Assumed info" is the assumed state of the "ID #" values above, yes? Would it make sense to have that directly adjacent to those ID values, or even replace them?

- The A, B, C, D values are locations at which these status values occur?

The first thing I would recommend is to transform this data a bit to make the calculations very easy.

- Let's talk about the sequence of rows. Is it possible for the rows to always be grouped together? Perhaps in the sequence Status, Assumed Status, Location? The order of rows in your example data leads me to assume that the first Location row should be related to "ID 1", but I don't know this for sure. It is possible to correct for this in Alteryx, but it can be tricky and (more importantly) circumstantially unstable.

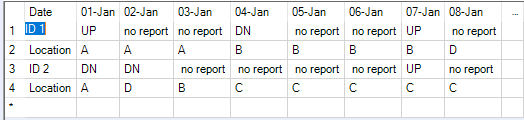

We can talk more about data transformation if you'd like, but the data schema I'd recommend getting to is this:

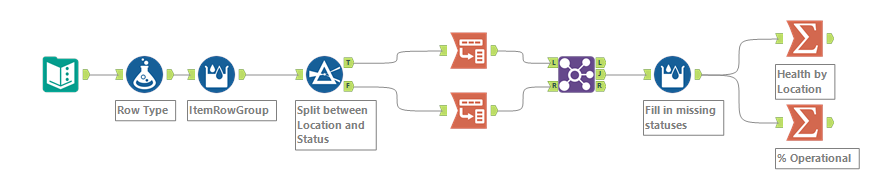

Once the data looks like this, the calculations you've requested are very simple and take just a couple of tools. First, I assign a 1/0 to the Assumed status, and then we can use a Summarize tool to group by a field and average the new 1/0 field.

Check out the attached workflow to see an example in action.
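
For readers without the attachment, the 1/0 flag plus group-and-average idea can be sketched outside Designer in plain Python. This is only an illustration, not the attached workflow: the row layout and the helper name `percent_up` are hypothetical, and the sample rows pair the ID 1 "Assumed info" row with the first location row from the question, which is itself an assumption.

```python
from collections import defaultdict

# Hypothetical long-format rows: (part_id, location, assumed_status).
# Assumption: the first location row in the question belongs to ID 1.
rows = [
    ("ID 1", "A", "UP"), ("ID 1", "A", "UP"), ("ID 1", "A", "UP"),
    ("ID 1", "B", "DN"), ("ID 1", "B", "DN"), ("ID 1", "B", "DN"),
    ("ID 1", "B", "UP"), ("ID 1", "D", "UP"),
]

def percent_up(rows, key_index):
    """Average a 1/0 'is UP' flag per group; the mean of the flag is the UP share."""
    totals = defaultdict(lambda: [0, 0])  # key -> [sum of flags, row count]
    for row in rows:
        flag = 1 if row[2] == "UP" else 0
        totals[row[key_index]][0] += flag
        totals[row[key_index]][1] += 1
    return {key: 100.0 * flag_sum / count
            for key, (flag_sum, count) in totals.items()}

by_part = percent_up(rows, 0)      # group by part
by_location = percent_up(rows, 1)  # group by location

print(by_part)
print(by_location)
```

Averaging the flag gives the percentage directly, so there is no separate step to total the rows and divide. Grouping by a different field (part, location, or both) only changes the key.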

Hi @pitmansm

I took a stab at getting your input into long format. Assuming an input that looks like this

This workflow pivots your data and then applies @CharlieS' very clever data mapping, which avoids having to calculate totals and then divide. It gives an output close to what you're looking for.

Dan
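
As a rough illustration of the wide-to-long pivot described above (not the actual workflow), here is a small Python sketch. The input layout is an assumption based on the table in the original question, and `to_long` is a hypothetical helper name. Cells marked "no report" are dropped, so only real reports remain.

```python
# Assumed wide layout: one row per part, one column per date.
header = ["Date", "1-Jan", "2-Jan", "3-Jan", "4-Jan",
          "5-Jan", "6-Jan", "7-Jan", "8-Jan"]
wide_rows = [
    ["ID 1", "UP", "no report", "no report", "DN",
     "no report", "no report", "UP", "no report"],
    ["ID 2", "DN", "DN", "no report", "no report",
     "no report", "no report", "DN", "no report"],
]

def to_long(header, wide_rows):
    """Turn each (part, date column) cell into its own row, skipping gaps."""
    long_rows = []
    for row in wide_rows:
        part = row[0]
        for day, status in zip(header[1:], row[1:]):
            if status != "no report":
                long_rows.append((part, day, status))
    return long_rows

long_rows = to_long(header, wide_rows)
for record in long_rows:
    print(record)
```

Each output row is one (part, date, status) report, which is the shape the 1/0 mapping and the Summarize step work on.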

Oh yeah, the data is in row format, and it shows like you described for sure. The Assumed portion is only there to show the desired logic; the data as it flows in does not include the assumed data. I'll gen up an example of exactly how it looks. @CharlieS

| Part | Status | Date |
| --- | --- | --- |
| 1 | UP | 1-Jan |
| 2 | UP | 1-Jan |
| 3 | DN | 1-Jan |
| 4 | DN | 10-Jan |
| 3 | DN | 10-Jan |
| 2 | UP | 10-Jan |
| 6 | up | 13-Jan |
| 1 | DN | 13-Jan |
| 2 | DN | 13-Jan |

My apologies, the intent was to show the concept. My data is spread across 1,600 CSVs. I'm good combining it all; it's calculating the time between statuses and assuming the last status that has me stumped.
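
One way to think about the "assume the last status" step is as a carry-forward: sort each part's reports by date, let each status hold until the next report, and weight it by the days in between. The Python sketch below uses the sample Part/Status/Date rows from this thread. The year, the cutoff date that closes the final interval, and the function name `percent_up_by_part` are all assumptions made for the illustration. In Designer, the same idea is usually built with a Sort followed by a Multi-Row Formula tool, though that is not shown here.

```python
from collections import defaultdict
from datetime import date

# Sample reports from the thread: (part, status, report date).
# Assumptions: the year is 2024 and reporting is cut off on 15-Jan.
reports = [
    (1, "UP", date(2024, 1, 1)), (2, "UP", date(2024, 1, 1)),
    (3, "DN", date(2024, 1, 1)), (4, "DN", date(2024, 1, 10)),
    (3, "DN", date(2024, 1, 10)), (2, "UP", date(2024, 1, 10)),
    (6, "up", date(2024, 1, 13)), (1, "DN", date(2024, 1, 13)),
    (2, "DN", date(2024, 1, 13)),
]
cutoff = date(2024, 1, 15)

def percent_up_by_part(reports, cutoff):
    """Time-weighted % UP per part, carrying each status to the next report."""
    by_part = defaultdict(list)
    for part, status, day in reports:
        by_part[part].append((day, status.upper()))  # normalize "up" to "UP"
    result = {}
    for part, events in by_part.items():
        events.sort()  # chronological order within the part
        # Each report holds until the next one; the last holds until the cutoff.
        next_days = [day for day, _ in events[1:]] + [cutoff]
        up_days = total_days = 0
        for (day, status), next_day in zip(events, next_days):
            held = (next_day - day).days
            total_days += held
            if status == "UP":
                up_days += held
        result[part] = round(100.0 * up_days / total_days, 1) if total_days else None
    return result

pct_up = percent_up_by_part(reports, cutoff)
print(pct_up)
```

Weighting by days between reports avoids generating one row per part per day, which matters at a couple of million rows. Adding location to the grouping key would give the per-location view in the same way.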

Oops, yeah, my apologies: date and location are derived from the file name.

I have a good grasp on how to aggregate these CSVs and pull the date and the location out of the file name. The Operational % is the kicker for me.

It's a difficult data set to cleanse since I have to completely make up the data to give an example lol

@CharlieS I appreciate your time!

Yeah, for sure. What made it click for me was the Average inside the aggregation. Thank you both for the help!
