Alteryx Designer Desktop Discussions

Find answers, ask questions, and share expertise about Alteryx Designer Desktop and Intelligence Suite.

Dynamically updating Object Name in AWS S3 Upload Tool

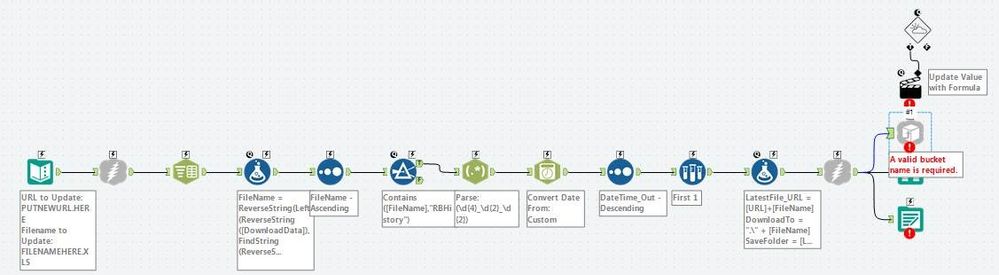

Good morning, I have the following workflow:

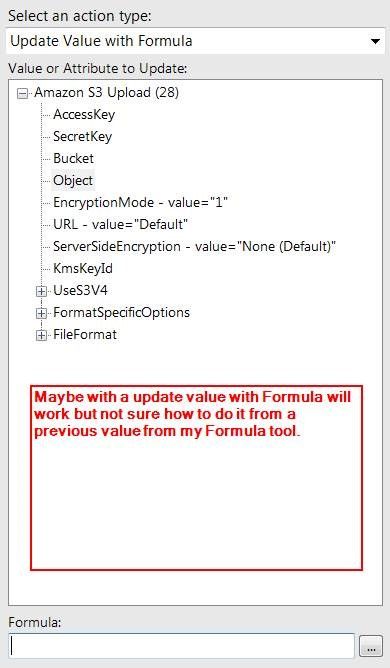

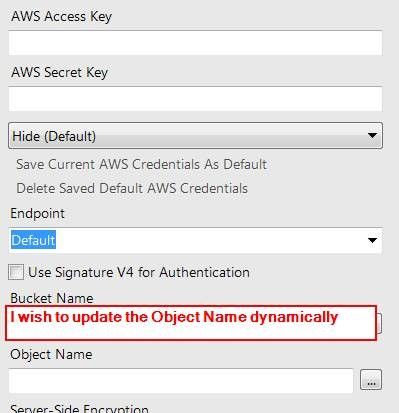

The workflow first connects to an SFTP folder that contains a number of files. The tools that follow extract the dates from the file names and filter down to the latest file I need for a particular client. The problem comes after this: storing that file on an AWS S3 bucket under a dynamic name. I want to schedule the workflow so it pulls the latest file automatically every day, with no human intervention. However, after a lot of research, the only approaches I have found use a macro that takes the file name for the S3 tool's Object field from a Question. What I'm hoping to do is pass the dynamic name into the Object field of the S3 tool from one of the Formula tools already in the workflow, which builds the file name dynamically. Is there a way to do this without a macro that asks a question?
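
For anyone who wants to see the "latest file per client" logic outside Alteryx, here is a minimal Python sketch. It assumes a hypothetical naming convention of `<client>_<YYYYMMDD>.csv` and a hypothetical key prefix; neither comes from the original workflow, and the sketch only shows how a dynamic Object name could be derived, not how the S3 Upload tool consumes it.

```python
import re
from datetime import datetime

# Hypothetical file-name convention (an assumption): <client>_<YYYYMMDD>.csv
FILE_PATTERN = re.compile(r"^(?P<client>[A-Za-z0-9]+)_(?P<stamp>\d{8})\.csv$")


def latest_file_for_client(file_names, client):
    """Return the file name with the most recent embedded date for `client`, or None."""
    best_name, best_date = None, None
    for name in file_names:
        match = FILE_PATTERN.match(name)
        if not match or match.group("client") != client:
            continue  # skip files that don't follow the convention or belong to other clients
        # strptime raises ValueError for an impossible date instead of ranking it
        stamp = datetime.strptime(match.group("stamp"), "%Y%m%d").date()
        if best_date is None or stamp > best_date:
            best_name, best_date = name, stamp
    return best_name


def object_key(prefix, file_name):
    """Build the S3 object name (key) that the upload step should receive."""
    return f"{prefix.rstrip('/')}/{file_name}"
```

With files such as `acme_20240101.csv`, `acme_20240315.csv` and `globex_20240320.csv`, `latest_file_for_client(files, "acme")` picks `acme_20240315.csv`. Parsing the stamp rather than comparing raw strings means a malformed date such as `20241399` raises `ValueError` instead of being silently ranked.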

Thanks for your input.

Labels: Amazon S3, Common Use Cases

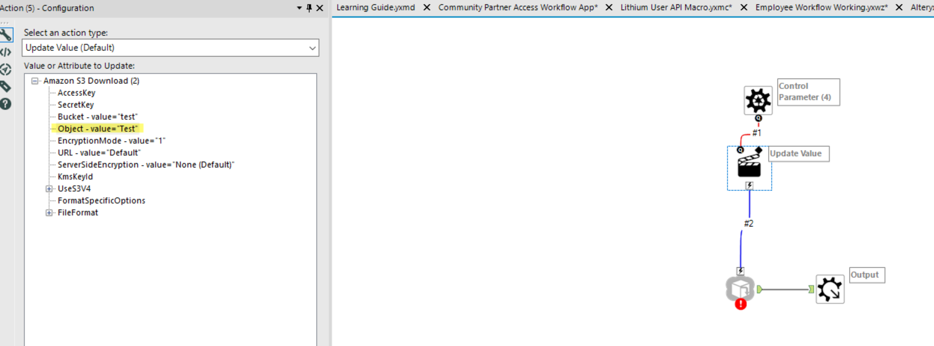

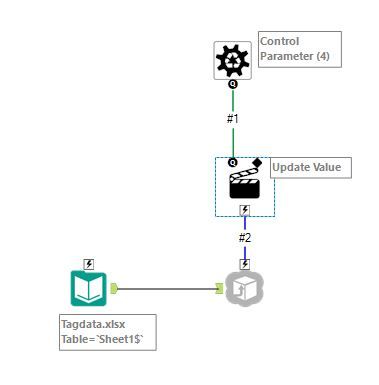

You will have to create a batch macro that uses a Control Parameter to update the Object field. The object name from your Formula tool is fed into the Control Parameter, which updates the S3 Upload tool inside the macro; the macro then outputs the data back into the workflow.

This post isn't quite what you are trying to do, but the setup of the macro is very similar.
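
The batch macro pattern can be modelled conceptually in Python: the macro runs its inner tools once per incoming record, and the Control Parameter (through an Action tool) overwrites the Object field in a copy of the saved configuration before each run. This is only a simulation of the data flow; `TEMPLATE_CONFIG`, the bucket name and the `upload` callback are hypothetical, and none of it is an Alteryx API.

```python
import copy

# Hypothetical stand-in for the S3 Upload tool's saved configuration inside the macro.
TEMPLATE_CONFIG = {"bucket": "my-bucket", "object": "placeholder.csv"}


def run_batch_macro(control_values, upload):
    """Simulate a batch macro: run the inner upload once per Control Parameter value."""
    results = []
    for value in control_values:
        config = copy.deepcopy(TEMPLATE_CONFIG)  # each batch starts from the saved config
        config["object"] = value  # models the Action tool's "Update Value" on the Object field
        results.append(upload(config))
    return results
```

Because each batch starts from a fresh copy of the template, a value from one record never leaks into the next, and the saved placeholder name itself is never modified.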

Thanks Dan! That was what I was looking for, and I got it to work.

Hi kuoshihyang,

I'm facing the same challenge now in creating Amazon S3 uploads with a timestamp. I tried every example I could find on the Alteryx Community but couldn't get any of them to work. Any help would be appreciated.

In the Action tool, I've used 'DateTimeToday()' to update the file name when uploading the output to the S3 bucket. Unfortunately, the upload still uses the Object name I entered when configuring the S3 Upload tool.
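
The key point is that the timestamp has to be evaluated each time the workflow runs; a fixed Object name means every run writes to the same key and overwrites the previous file. A minimal Python sketch of that naming rule, with a hypothetical `report` base name and `.csv` extension:

```python
from datetime import date


def timestamped_key(base_name, run_date=None, ext=".csv"):
    """Return e.g. 'report_2024-03-15.csv' so each daily run writes a distinct object."""
    if run_date is None:
        run_date = date.today()  # evaluated at run time, not when the workflow is designed
    return f"{base_name}_{run_date:%Y-%m-%d}{ext}"
```

Passing `run_date` explicitly makes the function easy to test; leaving it out uses the machine's local date at run time.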

I'm experiencing the same problem. In my case, the workflow is loaded into a Gallery application.

When I run it manually on the Gallery, the Action tool works properly, using the "Update Value with Formula" option to add the timestamp.

However, when I schedule the workflow to run automatically, it bypasses the "Update Value with Formula" setting and uploads with the placeholder object name I entered in the S3 tool, so every scheduled run overwrites the same file.

I will be experimenting with this today and will respond if I fix it.

I created this exact setup, and it successfully created the file with the name that I want.

However, when I use the macro, it is painfully slow: it just keeps running, even for a small amount of data.

When I use the connector directly without the macro, it runs very fast. Is there a setting in the macro that I need to adjust? Again, I have it set up exactly as you outlined above.

I tried setting up the properties in the tools as described, but it doesn't work: the S3 object is still created with the name entered in the S3 Upload tool. Can someone provide a working Alteryx workflow? Maybe I am missing some important property.

Hi, I hope that you are well.

I have been facing the same issue for several months and have not found a way to upload a batch of files to AWS S3 and then schedule the workflow on Alteryx Server. If you have a workflow or any guidance for this, I would be really grateful.

Please feel free to contact me if you have any information.

Kind regards, Sina

Hi, how are you doing?

I saw your community post about how to configure the AWS S3 upload in Alteryx. Do you know how to set it up on Alteryx Server, or do you have any Alteryx Server documentation on this?

I have been trying to transfer files from a file share to an S3 bucket. I could not do it with Alteryx's built-in tools, so I wrote Python code inside the workflow, which requires AWS credentials and the AWS CLI. It works fine on Windows, but on the Server it does not.

I know you are busy, but any information would be greatly appreciated, as I have been blocked on this for a long time.

@sinarazi, you're replying to a post that is 5 years old; please start a new one. Is your Server running on an EC2 instance? If so, grant that EC2 instance access to the S3 bucket and use the AWS CLI via an IAM role.
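
Following that suggestion, a Python tool could upload a folder of files without any hard-coded keys. The sketch below is a minimal example under stated assumptions: the folder, bucket and prefix are hypothetical, and it relies on boto3's default credential chain, which on an EC2 instance can pick up the attached IAM role. The CLI equivalent for a single file is `aws s3 cp <file> s3://<bucket>/<key>`.

```python
from pathlib import Path


def upload_folder(folder, bucket, prefix="", client=None):
    """Upload every file in `folder` to s3://bucket/prefix/<file name>; return the keys."""
    if client is None:
        import boto3  # imported lazily so the key logic can be exercised without boto3

        # No keys passed: boto3 falls back to its default credential chain, which on an
        # EC2 instance includes the IAM role attached to that instance.
        client = boto3.client("s3")
    keys = []
    for path in sorted(Path(folder).iterdir()):
        if not path.is_file():
            continue  # skip subfolders
        key = f"{prefix.rstrip('/')}/{path.name}" if prefix else path.name
        client.upload_file(str(path), bucket, key)  # arguments: Filename, Bucket, Key
        keys.append(key)
    return keys
```

Accepting an optional `client` keeps the function testable with a stand-in object, and combining it with a run-time timestamp in `prefix` avoids overwriting earlier uploads.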
